An Introduction to Virtual Production

This article was written by AI

WHAT IS VIRTUAL PRODUCTION?

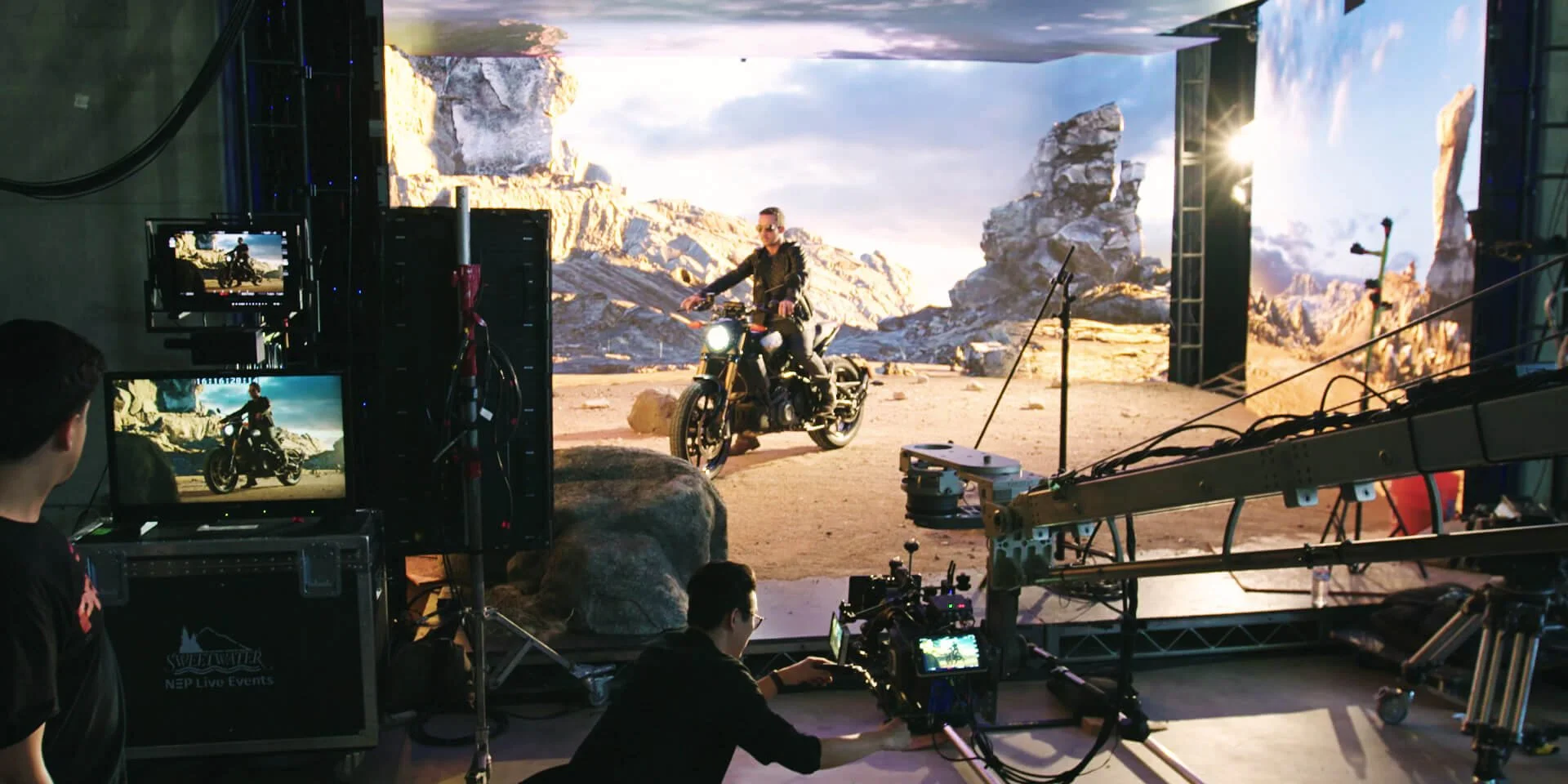

Virtual production is a filmmaking technique that uses real-time computer graphics and other digital tools to create and capture footage for movies, television shows, and other visual media. It lets filmmakers combine live-action footage with computer-generated elements on set, so complex visual effects can be seen and adjusted during the shoot rather than only in traditional post-production.

Virtual production typically involves the use of specialized hardware and software, such as motion capture systems, virtual cameras, and real-time rendering engines. This technology allows filmmakers to create and control digital environments and characters in real time, so they can experiment with different shots and camera angles on the fly.

One of the key benefits of virtual production is that complex visual effects and environments can be created and reviewed in real time, which reduces the amount of expensive and time-consuming post-production work. This can lower the overall cost and time required to make a movie or television show, while also giving filmmakers more control and flexibility over the final product.

Overall, virtual production is a rapidly growing field that is changing the way visual media is created and consumed. By using cutting-edge technology and techniques, virtual production is making it possible to create more realistic and immersive visual experiences, and is opening up new possibilities for filmmakers and other visual creators.

HOW HAS VIRTUAL PRODUCTION EVOLVED OVER THE PAST YEARS?

Virtual production has evolved rapidly over the past few years. One of the major developments has been the increased use of real-time rendering technology, which allows filmmakers to see the results of their work almost immediately. This has made it possible to create highly realistic and immersive virtual environments that can be used for everything from pre-visualization through to final shots. Additionally, motion capture technology has become more widespread in virtual production, allowing filmmakers to capture the movements of actors and translate them into the virtual world. These advances have made virtual production more accessible and affordable, and have opened up a whole new world of possibilities for filmmakers.

WHO ARE THE MOST RECOGNISABLE & SUCCESSFUL VIRTUAL PRODUCTION DIRECTORS & PRODUCERS?

There are many successful virtual production directors and producers working in the film and television industries today. Some of the most recognizable and successful individuals in this field include:

James Cameron, director of Avatar and Titanic, who is known for his pioneering work in virtual production and performance capture technology.

Peter Jackson, director of The Lord of the Rings and The Hobbit trilogies, who used virtual production techniques to create the epic fantasy worlds in these films.

Jon Favreau, director of The Lion King and The Mandalorian, who has used virtual production to create the stunning visuals in these productions.

Steven Spielberg, director of Jurassic Park and Indiana Jones, who has embraced virtual production technology in recent years to create more realistic and immersive visual effects.

In addition to these well-known directors, there are also many successful virtual production producers who are working behind the scenes to create the stunning visuals and effects that we see on screen. Some of the most notable names in this field include:

Kathleen Kennedy, president of Lucasfilm and producer of the Star Wars and Indiana Jones franchises, who has been a key advocate for the use of virtual production in these and other productions.

Joe Letteri, senior visual effects supervisor at Weta Digital (now Wētā FX), who has worked on many of the most successful virtual production projects in recent years, including Avatar and The Hobbit trilogy.

Christopher Nolan, director and producer of Inception and The Dark Knight trilogy, who is better known for favoring practical, in-camera effects over computer-generated imagery, a goal that virtual production also pursues by capturing more of the final image on set.

Overall, there are many successful virtual production directors and producers working in the film and television industries today, and their work is helping to push the boundaries of what is possible in terms of visual effects and storytelling. These individuals are using cutting-edge technology and techniques to create stunning and immersive visual experiences, and their work is helping to shape the future of virtual production.

WHAT ARE THE ROLES & RESPONSIBILITIES OF A VIRTUAL PRODUCTION TEAM?

A virtual production team is typically made up of a diverse group of individuals with different roles and responsibilities. Some of the key members of a virtual production team might include:

The director, who is responsible for overseeing the creative vision of the project and directing the performances of the actors.

The virtual cinematographer, who is responsible for setting up and operating the virtual cameras used to capture the footage for the production.

The motion capture supervisor, who is responsible for overseeing the motion capture process and ensuring that the captured data is accurate and of high quality.

The visual effects supervisor, who is responsible for overseeing the creation of the digital effects and environments used in the production.

The virtual production supervisor, who is responsible for coordinating the various technical and creative aspects of the production to ensure that it runs smoothly and efficiently.

In addition to these key roles, a virtual production team might also include actors, stunt performers, makeup artists, and other individuals who are involved in the production process. The specific roles and responsibilities of a virtual production team will vary depending on the project and the specific needs of the production.

Overall, a virtual production team is a collaborative group of individuals with a wide range of skills and expertise, who work together to create complex and realistic visual effects and environments in real-time. By combining their technical and creative talents, a virtual production team can create stunning and immersive visuals that bring virtual worlds to life.

WHAT ARE THE COSTS ASSOCIATED WITH VIRTUAL PRODUCTION?

The cost of a virtual production project can vary widely depending on a number of factors, including the scale and complexity of the project, the technology and equipment used, and the duration of the production. Typically, the costs of a virtual production project can be broken down into two main categories: capital expenses (CAPEX) and operational expenses (OPEX).

Capital expenses, or CAPEX, are the upfront costs associated with a virtual production project, such as the cost of purchasing or leasing equipment and technology. These costs can include the cost of virtual cameras, motion capture systems, rendering engines, and other specialized hardware and software. CAPEX costs can also include the cost of building or renting a studio or other physical space to use for the production.

Operational expenses, or OPEX, are the ongoing costs associated with a virtual production project, such as the cost of labor, materials, and other resources used during the production. These costs can include the salaries of the production crew, the cost of costumes and props, and the cost of other consumables used during the production.
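The CAPEX and OPEX split described above can be sketched as a small budget calculation. This is only an illustration: the `Budget` class, the line items, and every figure are hypothetical placeholders, not real quotes or industry averages.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class Budget:
    """Toy budget model: one-off CAPEX items plus per-day OPEX items."""
    capex: Dict[str, float]          # upfront purchases or leases
    opex_per_day: Dict[str, float]   # recurring cost per shooting day
    shoot_days: int

    def capex_total(self) -> float:
        return sum(self.capex.values())

    def opex_total(self) -> float:
        return sum(self.opex_per_day.values()) * self.shoot_days

    def total(self) -> float:
        return self.capex_total() + self.opex_total()


# Hypothetical placeholder figures, chosen only to make the arithmetic easy to follow.
example = Budget(
    capex={"tracking_system": 20_000, "render_workstation": 8_000},
    opex_per_day={"crew": 3_000, "stage_rental": 2_000},
    shoot_days=5,
)

print(example.capex_total(), example.opex_total(), example.total())
```

Separating the two categories this way makes it easy to see how the balance shifts: a longer shoot increases OPEX while CAPEX stays fixed.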

Overall, the cost of a virtual production project can vary greatly depending on the specific needs and requirements of the project. To get a better idea of the potential costs of a virtual production project, it is best to consult with a virtual production expert or specialist who can provide more detailed estimates and advice.

HOW CAN YOU REDUCE COSTS?

In general, the costs of a virtual production project can be significant, especially for large-scale productions that require a lot of specialized equipment and technology. However, many filmmakers and producers see the benefits of virtual production as outweighing the costs, since it allows for more flexibility and control during the production process, and can result in more realistic and immersive visual effects.

One of the ways that some virtual production teams have been able to reduce costs is by using more affordable and accessible technology. For example, some productions have used game engines, such as Unreal Engine or Unity, to create virtual environments and effects, rather than using more expensive and specialized tools.

Another way to reduce the costs of a virtual production project is by using a hybrid approach, where some elements of the production are created using traditional techniques and others are created using virtual production. This can help to minimize the need for expensive equipment and technology, while still allowing for the use of virtual production techniques where they are most effective.

Overall, while the costs of a virtual production project can be significant, there are a number of ways that filmmakers and producers can minimize these costs and still create high-quality virtual productions. By using a combination of traditional and virtual production techniques, and by taking advantage of more affordable technology, it is possible to create stunning and immersive virtual worlds without breaking the bank.

WHAT IS THE BEST BUDGET SOLUTION FOR VIRTUAL PRODUCTION?

A budget solution for virtual production could involve using low-cost or free tools and software to create and edit virtual sets, characters, and other visual elements. For example, you could use Blender (a free and open-source 3D modeling software) to create and animate 3D assets, and OBS (a free and open-source live streaming software) to combine and stream the assets in real-time. You could also use a low-cost green screen and lighting setup to create a virtual environment, and a simple recording device to capture audio. With some creativity and resourcefulness, it is possible to create a functional virtual production setup on a budget.

UNREAL ENGINE

Unreal Engine is a game engine developed by Epic Games that is widely used for creating interactive 3D content, including games, simulations, and visualizations. In the context of virtual production, Unreal Engine can be used to create real-time, interactive virtual sets and environments that can be used during filming. This allows filmmakers to capture scenes with virtual cameras, characters, and objects in a lifelike, immersive environment, without the need for traditional green screens or physical sets. Unreal Engine can also be used to create visual effects and other dynamic elements that can be integrated into the final video. Overall, Unreal Engine is a powerful tool for virtual production, offering a high level of realism and flexibility for creating virtual worlds.

HOW DO YOU SET UP A VIRTUAL SET IN UNREAL ENGINE?

To set up a virtual set in Unreal Engine, you first need to create a new level in your project. This can be done by clicking on the "File" menu and selecting the "New Level" option. In the "New Level" dialog, you can then choose the type of level you want to create, such as a blank level or a level based on a pre-made template.

Once you have created a new level, you can then begin building your virtual set by adding actors to the level. Actors are the objects and elements that make up a scene in Unreal Engine, such as characters, props, and environmental elements. You can add actors to your level by dragging them into the viewport from the Place Actors panel, which lists the built-in actor classes, or from the Content Browser, which holds your project's own assets.

After you have added actors to your level, you can then use Unreal Engine's powerful suite of tools to customize and arrange them to create your virtual set. This can include modifying the actors' properties and appearance, using the engine's lighting and post-processing tools to create a realistic look and feel, and using the engine's blueprint system to create custom behaviors and interactions for your actors.

Once you have set up your virtual set, you can then use it to create your virtual production. This can be done by using Unreal Engine's camera and animation tools to create camera shots and movements, and using the Sequencer tool to record and edit the resulting footage. You can then export your virtual production for use in your final project.

Overall, the process of setting up a virtual set in Unreal Engine is a powerful and flexible way to create realistic and immersive environments for your virtual productions. By using the engine's actor and customization tools, you can create a wide variety of virtual sets and use them to create professional-quality virtual productions.

In addition to the steps outlined above, there are a few other considerations to keep in mind when setting up a virtual set in Unreal Engine. One of the key challenges of creating a virtual set is ensuring that it looks and feels realistic, which can require a high level of attention to detail and a good understanding of lighting and other visual techniques.

To create a realistic virtual set in Unreal Engine, it can be helpful to start by gathering reference material, such as photographs or video footage, of the real-world location or environment that you are trying to recreate. This can serve as a guide for creating your virtual set, and can help you to accurately capture the look and feel of the real-world location.

In addition to reference material, it can also be helpful to use Unreal Engine's visualization and preview tools to test and refine your virtual set before you start creating your final production. This can include using the engine's real-time rendering capabilities to see how your set looks and behaves in real-time, and using the engine's virtual camera tools to try out different camera angles and shots.

Overall, the process of setting up a virtual set in Unreal Engine requires a combination of creativity, technical skill, and attention to detail. By using the engine's tools and techniques, and taking the time to carefully plan and test your virtual set, you can create a realistic and immersive environment for your virtual productions.

HOW DO YOU SET UP VIRTUAL CAMERAS IN UNREAL ENGINE?

To set up a virtual camera in Unreal Engine, you first need to create a new camera actor in your scene. This can be done by opening the Place Actors panel and dragging a camera actor, such as the Cine Camera Actor, into the viewport.

Once you have created a camera actor, you can then customize its properties to control its behavior and appearance. This can be done by selecting the camera actor and modifying its properties in the details panel on the right-hand side of the screen. Some of the key properties that you can adjust include the camera's field of view, its focus mode, and its lens settings.
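Field of view and lens settings are linked: for an ideal lens, the horizontal field of view follows from the focal length and the sensor width. The sketch below shows that relationship in plain Python. The function name is hypothetical, and it assumes a simple pinhole model with no lens distortion; it is not code from Unreal Engine.

```python
import math


def horizontal_fov_degrees(focal_length_mm: float, sensor_width_mm: float) -> float:
    """Horizontal field of view of an ideal (pinhole) lens, in degrees."""
    if focal_length_mm <= 0 or sensor_width_mm <= 0:
        raise ValueError("focal length and sensor width must be positive")
    # FOV = 2 * atan(sensor_width / (2 * focal_length))
    return math.degrees(2.0 * math.atan(sensor_width_mm / (2.0 * focal_length_mm)))


# A longer lens on the same sensor gives a narrower view.
wide = horizontal_fov_degrees(focal_length_mm=18.0, sensor_width_mm=36.0)
tele = horizontal_fov_degrees(focal_length_mm=85.0, sensor_width_mm=36.0)
print(round(wide, 1), round(tele, 1))
```

Matching the virtual camera's focal length and sensor size to the physical camera keeps the virtual and real framing consistent.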

In addition to adjusting the camera's properties, you can also use Unreal Engine's camera animation tools to create complex camera movements and shots. This can be done with Sequencer, which replaced the older Matinee editor and allows you to create keyframe-based animations for your camera. You can also use Unreal Engine's Blueprint system to create custom camera behaviors using visual scripting.
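To make the idea of keyframe-based animation concrete, here is a minimal sketch of how a single animated value (for example, the camera's height) can be sampled between keyframes. It assumes plain linear interpolation and a hypothetical `sample` helper; animation editors typically also offer curved interpolation, which is not shown here.

```python
from bisect import bisect_right
from typing import List, Tuple

Keyframe = Tuple[float, float]  # (time in seconds, value)


def sample(keyframes: List[Keyframe], t: float) -> float:
    """Linearly interpolate a time-sorted keyframe track at time t, clamping at both ends."""
    if not keyframes:
        raise ValueError("need at least one keyframe")
    times = [k[0] for k in keyframes]
    if t <= times[0]:
        return keyframes[0][1]
    if t >= times[-1]:
        return keyframes[-1][1]
    i = bisect_right(times, t)  # index of the first keyframe strictly after t
    (t0, v0), (t1, v1) = keyframes[i - 1], keyframes[i]
    alpha = (t - t0) / (t1 - t0)  # 0 at the earlier keyframe, 1 at the later one
    return v0 + alpha * (v1 - v0)


# Camera height rises from 0 to 10 over two seconds, then holds.
track = [(0.0, 0.0), (2.0, 10.0), (4.0, 10.0)]
print(sample(track, 1.0), sample(track, 3.0))
```

A full camera move is several such tracks (position, rotation, focal length) sampled at the same time on every frame.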

Overall, setting up virtual cameras in Unreal Engine is a straightforward process that allows you to create and customize your cameras to suit the needs of your project. By adjusting the camera's properties and using Unreal Engine's animation and blueprint tools, you can create professional-quality camera shots and movements for your virtual productions.

Once you have set up your virtual camera in Unreal Engine, you can then use it to capture footage for your virtual production. This can be done by selecting the camera actor in your scene and clicking on the "Play" button to start the Unreal Engine editor in play mode. This will allow you to move the camera and view the scene from the camera's perspective, just as you would on a physical film set.

To capture footage from the camera, you can use Unreal Engine's "Sequencer" tool to record a sequence of the camera's movements and the scene's visual elements. This can be done by opening the Sequencer editor and adding the camera and other actors to the timeline. You can then use the Sequencer's tools to record the camera's movements and other elements in the scene, and edit the resulting footage to create a polished final shot.

Once you have captured your footage, you can then export it for use in your final project. Unreal Engine allows you to export your footage in a variety of formats, including image sequences, video files, and more. You can also use Unreal Engine's rendering pipeline to apply post-processing effects and other visual enhancements to your footage, giving it a more professional look and feel.

Overall, the process of setting up and using virtual cameras in Unreal Engine is a powerful and flexible way to create professional-quality footage for your virtual productions. By using the engine's camera animation and sequencing tools, you can create complex and dynamic camera movements and shots that bring your virtual worlds to life.

CUEBRIC

HOW CAN AI IMAGE GENERATION BE USED IN VIRTUAL PRODUCTION?

Cuebric is one example: an all-in-one AI image generation and editing tool aimed at virtual production. It allows filmmakers, concept artists, and production studios to create fully segmented, inpainted virtual environments that integrate with Unreal Engine in seconds. It is positioned as a quick and cost-effective alternative, and complement, to CGI and VFX production processes.

FEATURES

IMAGE GENERATION - Visualize the environment you imagine in real time with text-to-image generative AI, in any desired aspect ratio.

SEGMENTATION - Transform your 2D images into layered 2.5D environments.

INPAINTING - Automatically fill in the gaps in your segmented images with AI.

EDITING - Use a simple image editing tool to clean up unwanted artifacts in the segmentation.

UPSCALING - Upscale your newly created 2.5D image to high-resolution 4K.

UE INTEGRATION - Export your segmented layers directly to Unreal Engine, ready to be filmed on a volume stage.
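The reason segmented layers read as 2.5D is parallax: when the camera moves sideways, layers placed closer to it shift more on screen than distant ones. The sketch below illustrates that general principle with a pinhole camera model. The function name and the example depths are hypothetical, and this is not a description of how Cuebric itself works.

```python
def parallax_shift_px(camera_shift_m: float, layer_depth_m: float, focal_length_px: float) -> float:
    """On-screen horizontal shift (pixels) of a flat layer when the camera moves sideways.

    Pinhole model: a point at depth Z moves by -f * t / Z pixels when the
    camera translates by t along its horizontal axis.
    """
    if layer_depth_m <= 0:
        raise ValueError("layer depth must be positive")
    return -focal_length_px * camera_shift_m / layer_depth_m


# Camera slides 10 cm to the right; focal length is 1000 px (assumed values).
foreground = parallax_shift_px(0.1, layer_depth_m=2.0, focal_length_px=1000.0)
background = parallax_shift_px(0.1, layer_depth_m=20.0, focal_length_px=1000.0)
print(foreground, background)
```

The foreground layer moves ten times as far as the background layer, which is the depth cue that a single flat image cannot provide.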

COMPUTER VISION

HOW CAN COMPUTER VISION BE USED TO TRACK CAMERA MOVEMENT DURING VIRTUAL PRODUCTION?

Computer vision can be used to track camera movement during virtual production by using a technique called visual odometry. This involves using algorithms to analyze the images from a camera to estimate its motion and orientation relative to its surroundings. This information can then be used to accurately track the camera's movement in real-time, allowing for a more realistic and immersive virtual production experience.
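Visual odometry has two halves: estimating the small motion between consecutive frames, and chaining those estimates into a running camera pose. The sketch below covers only the second half, in 2D for brevity. It assumes the per-frame motions have already been estimated by an image-matching step that is not shown, and the `integrate` function and `Pose` type are hypothetical names.

```python
import math
from typing import Iterable, List, Tuple

Pose = Tuple[float, float, float]  # x, y in metres, heading in radians


def integrate(start: Pose, steps: Iterable[Tuple[float, float, float]]) -> List[Pose]:
    """Chain per-frame relative motions (dx, dy, dtheta in the camera's own frame) into a trajectory."""
    x, y, theta = start
    trajectory = [start]
    for dx, dy, dtheta in steps:
        # Rotate the local displacement into the world frame, then accumulate it.
        x += dx * math.cos(theta) - dy * math.sin(theta)
        y += dx * math.sin(theta) + dy * math.cos(theta)
        theta += dtheta
        trajectory.append((x, y, theta))
    return trajectory


# Move 1 m forward while turning 90 degrees left, then 1 m forward again.
path = integrate((0.0, 0.0, 0.0), [(1.0, 0.0, math.pi / 2), (1.0, 0.0, 0.0)])
print(path[-1])
```

Because each pose is built on the previous one, small estimation errors accumulate over time, which is one reason production systems often combine odometry with fixed markers or other absolute references.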

In addition to visual odometry, there are a number of other techniques that can be used to track camera movement during virtual production. For example, a virtual production system might use motion capture technology to track the movements of the camera operator and use that information to control the virtual camera in the virtual environment. Another option is to use specialized tracking systems, such as markers or LEDs, that can be placed on the camera and tracked using infrared or optical sensors.

Overall, the use of computer vision and other tracking technologies in virtual production allows for a more seamless integration of the virtual and physical worlds, making it possible to create complex and realistic virtual environments in real-time. This can be useful in a variety of applications, including film and video production, gaming, and more.

WEBGL

HOW DOES WEBGL WORK WITH UNREAL ENGINE?

WebGL, or Web Graphics Library, is a JavaScript API that allows for the rendering of 3D graphics in web browsers. Unreal Engine 4 supported WebGL through its HTML5 platform target, allowing developers to create and publish Unreal Engine projects that run on the web. Epic removed HTML5 from the core engine in version 4.24, and it has since been maintained as a community-supported platform extension, so the available options depend on the engine version you use.

To use WebGL with Unreal Engine, developers first need an engine version or platform extension that provides the HTML5 target. Once it is available, developers can package their Unreal Engine projects for HTML5 from the editor, which produces a WebGL application.

When an Unreal Engine project is exported as a WebGL application, the engine will generate a package of files that includes the project's assets, code, and other necessary components. This package can then be uploaded to a web server and accessed by users through a web browser that supports WebGL.

When a user accesses the Unreal Engine project on the web, their browser will use WebGL to render the project's 3D graphics and other visual elements. This allows the project to run and be interacted with on the web, just as it would on a desktop computer running the Unreal Engine editor.

Overall, the integration of WebGL and Unreal Engine allows developers to create and publish their Unreal Engine projects on the web, making them accessible to a wider audience and enabling new possibilities for web-based 3D content.

In addition to the technical aspects of using WebGL with Unreal Engine, there are also a number of practical considerations to keep in mind. One of the main challenges of using WebGL with Unreal Engine is that the performance of the resulting web applications can vary depending on the user's hardware and the capabilities of their web browser.

To ensure that your Unreal Engine project runs smoothly on the web using WebGL, it is important to optimize your project's assets and code to minimize their impact on performance. This can involve reducing the complexity and number of assets used in the project, using efficient rendering techniques, and optimizing the project's code for the web.

Another consideration when using WebGL with Unreal Engine is the security of the resulting web applications. Since WebGL applications run in the user's web browser, they can potentially be accessed and manipulated by malicious actors. To prevent this, it is important to take steps to secure your Unreal Engine project when publishing it on the web using WebGL. This can include using secure servers, implementing authentication and access control mechanisms, and following best practices for web security.

Overall, while using WebGL with Unreal Engine can open up new possibilities for web-based 3D content, it is important to keep performance and security in mind when developing and publishing your projects on the web. By optimizing your project and implementing security measures, you can ensure that your Unreal Engine project runs smoothly and securely on the web using WebGL technology.

HOW CAN WEBGL BE USED FOR VIRTUAL SETS?

WebGL can be used to create virtual sets for video production by rendering 3D models of the set and its components, such as the background, furniture, and props. This allows video editors to create a realistic-looking set that can be easily manipulated and changed according to the needs of the production. WebGL can also be used to create interactive elements within the virtual set, such as animations and special effects. This can help bring the set to life and make it more engaging for viewers.

HOW CAN WEBGL BE USED TO TRACK CAMERA MOVEMENT IN VIRTUAL PRODUCTION?

WebGL is a JavaScript API that allows for the rendering of interactive 2D and 3D graphics within a web browser. WebGL does not track anything itself. In the context of virtual production, computer vision algorithms running alongside it would detect the movement of the camera, and WebGL would redraw the virtual scene from the updated viewpoint in real time. This would allow for a more immersive and interactive experience for the user, as the virtual world would respond to the movement of the camera in a natural and realistic way.

To implement this, the virtual production software would need to be designed to work with WebGL and include the necessary computer vision algorithms to detect and track camera movement. The software would also need to be able to communicate with the virtual world and update the scene in real-time as the camera moves. This could be achieved through the use of WebSockets or other technologies that allow for bi-directional communication between the software and the virtual world.
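Whatever transport is used, both sides need to agree on what a camera update looks like. The sketch below shows one possible message format, encoded as JSON so it could be sent over a WebSocket or a similar channel. The `CameraPose` fields and the `camera_pose` type tag are assumptions made for illustration, not part of any existing protocol, and the network code itself is left out.

```python
import json
from dataclasses import asdict, dataclass
from typing import Tuple


@dataclass(frozen=True)
class CameraPose:
    """One tracked camera sample (hypothetical message layout)."""
    timestamp: float                       # seconds since tracking started
    position: Tuple[float, float, float]   # x, y, z in metres
    rotation: Tuple[float, float, float]   # pitch, yaw, roll in degrees


def encode(pose: CameraPose) -> str:
    """Serialize a pose to a JSON text message."""
    return json.dumps({"type": "camera_pose", **asdict(pose)})


def decode(message: str) -> CameraPose:
    """Parse a JSON text message back into a pose, rejecting other message types."""
    data = json.loads(message)
    if data.get("type") != "camera_pose":
        raise ValueError("unexpected message type")
    return CameraPose(
        timestamp=float(data["timestamp"]),
        position=tuple(data["position"]),
        rotation=tuple(data["rotation"]),
    )


sample_pose = CameraPose(timestamp=1.5, position=(0.0, 1.6, -2.0), rotation=(0.0, 90.0, 0.0))
round_trip = decode(encode(sample_pose))
print(round_trip == sample_pose)
```

Including a timestamp in every message lets the renderer discard late updates or interpolate between samples, which matters when tracking data and rendering run at different rates.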

HOW IS VIRTUAL PRODUCTION LIKELY TO EVOLVE IN COMING YEARS?

It is difficult to predict exactly how virtual production will evolve over the coming years, as it is a rapidly changing field. However, it is likely that we will continue to see advances in real-time rendering and motion capture technology, which will make it possible to create even more realistic and immersive virtual environments. Additionally, the use of VR technology is likely to become more widespread in the field, and we may see the development of new VR tools and interfaces that make it even easier for filmmakers to create and explore virtual worlds. It is also possible that we will see the emergence of new technologies, such as augmented reality (AR), that will further expand the possibilities of virtual production.

Another area where we may see significant developments in virtual production is in the use of artificial intelligence (AI) and machine learning. These technologies have the potential to greatly enhance the capabilities of virtual production by enabling more sophisticated real-time rendering and motion capture, as well as more intuitive and intelligent virtual environments. For example, AI-powered virtual cameras could automatically select the best shots and camera angles, or virtual characters could be imbued with more complex and realistic behaviors. Overall, it is clear that virtual production is an exciting and rapidly evolving field, and the coming years are likely to bring many new developments and innovations.