AI Evaluation Metrics - Explainability

Definition:

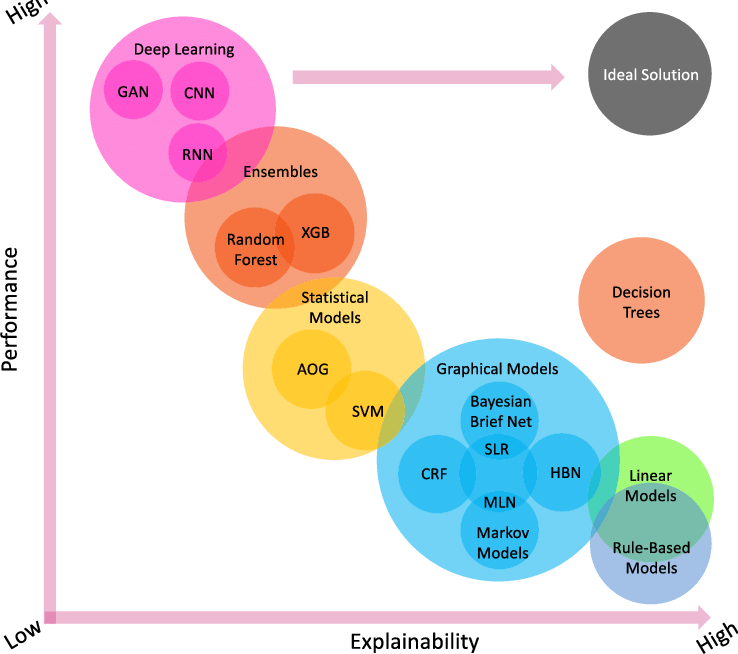

Explainability refers to the AI model’s ability to provide clear, understandable reasons for its decisions or recommendations, making outputs transparent to users and auditors.

Guide for Compliance Team and Engineers:

Purpose:

Ensure users and regulators can trust and understand the AI’s health advice and product recommendations, enabling accountability and informed decision-making.

For Compliance Team:

Define Explainability Requirements: Establish what level of explanation is needed based on user audience and regulatory guidelines (e.g., simple explanations for retail users; detailed explanations for pharmacies).

Documentation: Maintain comprehensive documentation of model logic, decision rules, and explanation methods.

User Communication: Ensure chatbot interfaces present explanations in accessible language without jargon.

Audit Compliance: Verify that explainability practices meet applicable standards, such as the GDPR provisions on automated decision-making (often described as a “right to explanation”) and relevant FDA transparency guidance.

Feedback Loop: Collect user and stakeholder feedback on clarity and usefulness of explanations to improve communication.
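
Example (illustrative only): the audience-based requirements and plain-language communication described above could be captured as a small configuration that the chatbot consults when rendering an explanation. The sketch below is a minimal illustration under stated assumptions. The tier names, the `ExplanationRequirement` fields, the `render_explanation` helper, and the sample factors are all hypothetical; the actual levels would be defined by the compliance team.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ExplanationRequirement:
    """Hypothetical per-audience explanation requirement."""
    audience: str
    max_factors: int    # how many contributing factors to show
    show_scores: bool   # whether numeric contribution scores are shown


# Hypothetical tiers: short and score-free for retail users,
# more detailed for pharmacies.
REQUIREMENTS = {
    "retail": ExplanationRequirement("retail", max_factors=2, show_scores=False),
    "pharmacy": ExplanationRequirement("pharmacy", max_factors=5, show_scores=True),
}


def render_explanation(audience, factors):
    """Render (plain-language label, contribution score) pairs for an audience."""
    req = REQUIREMENTS[audience]
    # Strongest factors first, ranked by absolute contribution.
    top = sorted(factors, key=lambda f: abs(f[1]), reverse=True)[: req.max_factors]
    if req.show_scores:
        parts = [f"{label} ({score:+.2f})" for label, score in top]
    else:
        parts = [label for label, _ in top]
    return "This recommendation is mainly based on: " + "; ".join(parts) + "."


# Made-up factors for illustration; real ones would come from the model's
# explanation method.
factors = [
    ("your reported age", 0.55),
    ("smoking status", 0.65),
    ("recent purchases", 0.05),
]
retail_text = render_explanation("retail", factors)
pharmacy_text = render_explanation("pharmacy", factors)
print(retail_text)
print(pharmacy_text)
```

Keeping the requirements in one table of this kind would let the compliance team review and change explanation depth per audience without touching the rendering logic.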

For Engineers:

Implement Explainability Methods: Use techniques such as SHAP, LIME, attention visualization, or counterfactual explanations to generate insights into model decisions.

Integrate Explanations in UI: Develop chatbot responses that include concise, relevant explanations alongside advice or recommendations.

Test Understandability: Conduct user testing to ensure explanations are clear and actionable.

Maintain Logs: Store explanation outputs linked to each decision for audit and troubleshooting purposes.

Balance Performance: Ensure explainability features do not significantly increase response latency or reduce model accuracy.
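
Example (illustrative only): the engineering items above on explainability methods, logging, and performance can be sketched together in a few lines. The code below computes exact Shapley values, the attribution idea that SHAP approximates, for a toy model in pure Python. It then stores the attributions in a log record keyed by a decision ID, together with the time the explanation took. The `risk_score` model, the feature names, the baseline values, and the record fields are hypothetical stand-ins, not the production model or logging schema.

```python
import itertools
import json
import math
import time
import uuid


def risk_score(features):
    """Hypothetical toy model; a stand-in for the real recommendation model."""
    score = 0.02 * features["age"] + 0.5 * features["smoker"]
    # Interaction term so the attribution is not trivially linear.
    if features["smoker"] and features["age"] > 50:
        score += 0.3
    return score


def shapley_values(model, instance, baseline):
    """Exact Shapley values by enumerating every feature subset.

    Features outside a subset take their baseline value. The cost grows as
    2^n, so this is only practical for a handful of features.
    """
    names = list(instance)
    n = len(names)
    phi = {name: 0.0 for name in names}
    for name in names:
        others = [f for f in names if f != name]
        for k in range(n):
            weight = math.factorial(k) * math.factorial(n - k - 1) / math.factorial(n)
            for subset in itertools.combinations(others, k):
                without = {f: (instance[f] if f in subset else baseline[f]) for f in names}
                with_feature = dict(without)
                with_feature[name] = instance[name]
                phi[name] += weight * (model(with_feature) - model(without))
    return phi


def explain_and_log(model, instance, baseline):
    """Build an audit record linking a decision to its explanation."""
    start = time.perf_counter()
    attributions = shapley_values(model, instance, baseline)
    elapsed_ms = (time.perf_counter() - start) * 1000
    return {
        "decision_id": str(uuid.uuid4()),  # hypothetical key for audit lookups
        "inputs": instance,
        "output": model(instance),
        "baseline_output": model(baseline),
        "attributions": attributions,
        "explanation_latency_ms": round(elapsed_ms, 3),
    }


instance = {"age": 60, "smoker": 1}
baseline = {"age": 40, "smoker": 0}  # assumed reference input
record = explain_and_log(risk_score, instance, baseline)
print(json.dumps(record, indent=2))
```

The attributions sum to the difference between the model output and the baseline output, which gives auditors a simple consistency check on stored explanations. For models with many features, exact enumeration becomes too slow, and a sampling-based approximation would be needed to keep latency acceptable.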