European Parliament Demands Unwaivable Right to Fair Pay for Creators in Generative AI Training

The European Parliament has released a landmark 175-page study on generative AI and copyright, signaling a major step forward in protecting content creators in the rapidly evolving AI landscape. Among its key recommendations is the introduction of a new unwaivable right to equitable remuneration for authors and rightsholders whose works are used to train generative AI systems.

The Core Proposal: A New Statutory Exception with Fair Compensation

The study proposes a new EU-level statutory exception to copyright, specifically designed to permit the training of generative AI systems on copyrighted works. Crucially, this would be coupled with an unwaivable right for creators to receive fair remuneration whenever their works are used in AI training.

This model acknowledges the reality that:

Individual licensing at AI scale is unworkable — the vast scale and speed of AI training cannot rely on traditional one-to-one licensing agreements.

Creators must not be excluded from value chains fueled by data, ensuring they share fairly in the economic benefits generated.

Why This Matters: Addressing a Structural Market Failure

The report highlights a systemic market failure caused by the industrial-scale use of human-created works in AI training:

Creators are structurally excluded from the financial gains of AI technologies trained on their content.

Current frameworks relying on opt-in permissions or voluntary collective schemes are insufficient to balance negotiating power between creators and AI developers.

Without statutory safeguards, there is a risk of undermining the economic agency of authors and destabilizing the creative ecosystem.

A Balanced, Long-Term Approach

Importantly, the proposed remuneration mechanism does not undermine short-term opt-in principles. Instead, it recognizes that existing copyright exceptions lack the structural capacity to enforce large-scale compliance necessary for generative AI training.

By coupling a statutory exception with an unwaivable remuneration right, the European Parliament aims to:

Restore minimum economic agency to authors.

Foster fairness and transparency in AI development.

Create a more sustainable and equitable AI copyright framework aligned with EU values.

What This Means for the Future

This bold recommendation could reshape the legal landscape for AI and creativity in Europe, ensuring that:

Content creators are fairly compensated when their works power generative AI.

AI development continues responsibly, respecting the rights and contributions of human authors.

The EU leads globally in crafting balanced regulations that protect cultural diversity and innovation.

The European Parliament’s study underscores the urgency of reform as generative AI technologies transform creative industries. By embedding fair remuneration rights into copyright law, the EU is charting a path toward an AI-driven future that respects and rewards human creativity.

Implementing an unwaivable right to equitable remuneration for content creators in generative AI training raises several significant challenges. Here’s a detailed breakdown:

1. Scalability and Complexity of Licensing

Massive scale of data: Generative AI models are trained on billions of pieces of content from countless creators across multiple jurisdictions. Managing licensing or remuneration at this scale is hugely complex.

Tracking and attribution: Identifying exactly which works have been used in training, and to what extent, is technically challenging. Training datasets are often proprietary and opaque.

Lack of machine-readable rights metadata: Existing systems for marking rights status (opt-in/opt-out) are inconsistent or absent, making automated compliance difficult.

2. Defining the Scope and Fair Remuneration

How to calculate fair pay? Determining what constitutes “equitable remuneration” is complex: Should payments be proportional to usage? Based on AI-generated revenue? Flat fees or royalties?

Disaggregating collective vs individual rights: Some works are managed by collective management organizations (CMOs), but many creators are independent or lack representation.

Potential market distortions: Setting remuneration levels too high could stifle AI innovation or raise barriers for smaller AI developers.

3. Legal and Regulatory Fragmentation

EU-wide harmonization: Copyright law varies between EU member states, and national implementations of copyright exceptions differ, creating fragmentation.

Cross-border enforcement: AI training datasets and outputs transcend borders, complicating enforcement and jurisdictional authority.

Coordination with existing AI regulations: Aligning remuneration rights with the AI Act’s transparency and governance requirements adds complexity.

4. Transparency and Auditing

Opaque AI training processes: AI developers often keep training data confidential for commercial and security reasons, making it hard to verify compliance.

Auditing usage: Robust mechanisms are needed to audit AI training datasets and financial flows transparently, requiring technical standards and oversight bodies.

Risk of circumvention: Without strong enforcement, companies might avoid paying by claiming datasets fall outside the scope or by using untraceable data sources.

5. Balancing Innovation and Creator Rights

Avoiding over-regulation: Excessive compliance burdens might inhibit AI innovation, especially for startups or open-source projects.

Ensuring “yellow-label” exemptions: The study suggests tiered compliance for non-commercial or low-scale AI projects, but defining these thresholds is tricky.

Maintaining openness and data accessibility: The EU must carefully balance protecting creators while not hindering data-driven innovation.

6. Stakeholder Coordination and Governance

Multiple stakeholders: Coordination is needed among creators, CMOs, AI developers, regulators, and enforcement agencies.

Creating effective institutional frameworks: Establishing dedicated EU-level bodies (e.g., AI & Copyright Unit) to oversee compliance requires political will and resources.

Managing disputes and litigation: The complexity will likely generate legal challenges around the scope, implementation, and calculation of remuneration.

1. Data Identification and Rights Tracking

Lack of standardized, machine-readable metadata: There is no universally adopted system to tag copyrighted works with clear licensing or opt-out status in a way that AI training systems can automatically recognize and respect.

Difficulty in identifying copyrighted material within massive datasets: Training datasets are often aggregated from billions of data points (text, images, audio, video) scraped from multiple sources without clear provenance.

Versioning and duplication detection: Detecting and managing duplicate or derivative content in training data is complex, especially when AI models can memorize and reproduce portions of original works.

2. Transparency and Traceability of Training Data

Opaque AI training processes: AI developers often treat training data and model architecture as proprietary, making it difficult to audit or verify the use of copyrighted material.

Insufficient dataset logging and traceability: Existing AI models rarely maintain comprehensive, accessible logs of exactly what data was used during training or how it influenced the model outputs.

Lack of robust watermarking or fingerprinting: Technical means to tag AI-generated outputs or training data to trace back usage are underdeveloped or not widely implemented.

3. Attribution of Usage and Quantification

Quantifying the extent of use: Determining how much a specific creator’s work contributed to the training process and influenced outputs is technically very challenging.

Attribution in multi-source datasets: Training data is a vast mix of many creators’ works; isolating individual contributions for fair remuneration requires advanced analytics.

Black-box nature of AI models: The internal workings of models (especially large neural networks) are not easily interpretable, complicating efforts to attribute influence or usage to particular inputs.

4. Implementing Automated Compliance Mechanisms

Developing opt-out and opt-in systems that scale: Current opt-out mechanisms (like robots.txt or metadata tags) are insufficient for large-scale AI training pipelines and lack enforceability.

Integration with AI training pipelines: Embedding compliance checks and rights clearance mechanisms directly into data ingestion and preprocessing workflows is complex.

Real-time or pre-training filtering: Technical feasibility of filtering out or excluding copyrighted content flagged by creators before or during training is limited.

5. Auditing and Enforcement Infrastructure

Designing audit systems capable of handling large datasets and models: Auditors need tools to inspect vast training corpora and model behavior to verify compliance, requiring scalable, automated solutions.

Secure, privacy-preserving data access: Auditing must balance transparency with protection of trade secrets and user data privacy.

Cross-platform interoperability: Standardized protocols are needed for sharing rights information, audit results, and remuneration data across platforms and jurisdictions.

6. Financial and Transactional Infrastructure

Collecting and distributing remuneration: Implementing automated payment systems linked to data usage analytics to fairly and efficiently compensate creators is non-trivial.

Integration with collective management organizations (CMOs): Systems must communicate and coordinate with existing rights management frameworks while enabling new data-driven payment flows.

Ensuring transparency and trust: The remuneration mechanism must be transparent, auditable, and resistant to fraud or misuse.

1. Metadata and Rights Management Systems

Rights tagging standards: Initiatives like DDEX (Digital Data Exchange) and IPI (Interested Party Information) provide frameworks for embedding rights and licensing metadata into digital assets, mostly in music and publishing. But these are not yet universal or detailed enough for AI training datasets.

Creative Commons Rights Expression Language (ccREL): Supports machine-readable licensing info but adoption is partial and not fully integrated into AI workflows.

ORCID for authors: Provides persistent identifiers for creators, enabling better attribution, but not directly tied to dataset usage in AI.

2. Data Provenance and Lineage Tools

Blockchain-based provenance: Some startups experiment with blockchain to create immutable records of content ownership and usage history (e.g., Verisart, Po.et). These can theoretically support attribution but face scalability and adoption hurdles.

Data lineage platforms: Tools like Apache Atlas or DataHub track data flow in enterprise systems but are not tailored for massive, heterogeneous AI training datasets or public internet content.

3. Transparency and Auditing Solutions

Dataset documentation frameworks: Concepts like Datasheets for Datasets and Model Cards encourage transparency about dataset contents and model training, aiding auditing. However, these are voluntary best practices, not enforced or standardized.

AI explainability tools: Frameworks like LIME or SHAP help interpret model outputs but don’t link outputs back to specific training data sources for remuneration.

4. Opt-Out and Licensing Platforms

Rights clearance platforms: Some companies offer services to clear rights for AI training, such as Getty Images’ licensing agreements with AI developers. These are case-by-case, costly, and not scalable for the entire web or creative commons.

Robots.txt and metadata opt-out: Traditional web mechanisms for content exclusion are widely used but technically limited and ineffective at scale for AI training.

5. Collective Management Organizations (CMOs) and Licensing Models

Some CMOs (e.g., GEMA in Germany) have begun exploring collective licensing or levy models for AI training usage.

However, these models are in early stages, often lack transparency, and are not integrated with automated tracking of usage data.

6. Emerging Standards and Pilot Projects

The European Digital Media Observatory (EDMO) is working on tools for content tracking and rights monitoring related to digital media but is not AI training-specific.

The European Parliament study suggests developing a unified, machine-readable permissions registry potentially overseen by EUIPO to facilitate rights management at scale — but this is still a proposal.

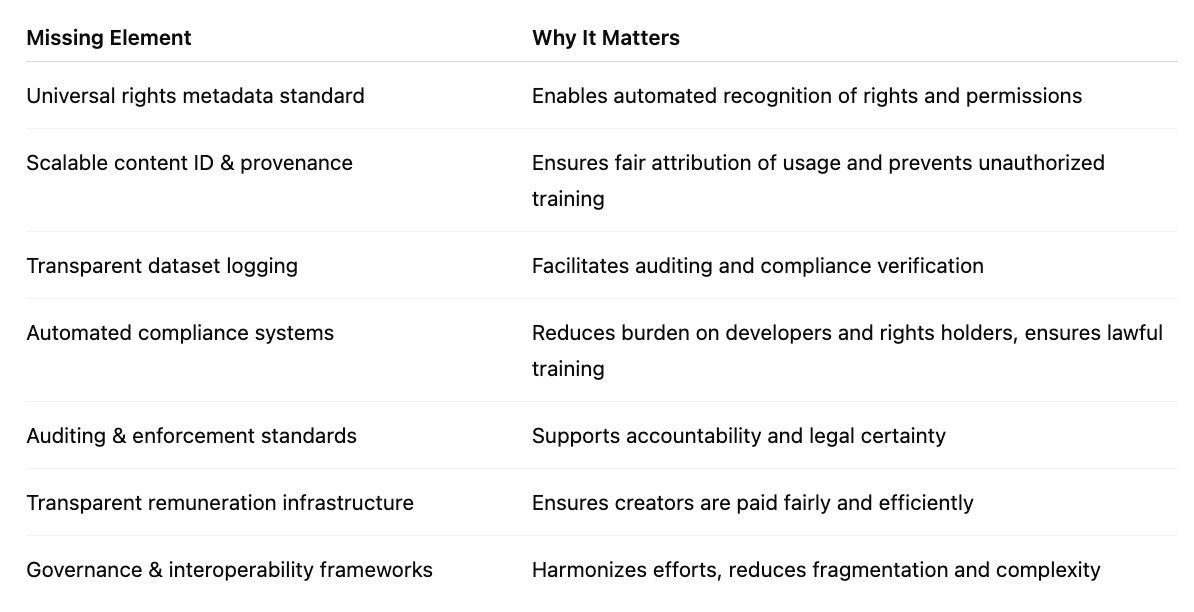

What’s missing in the market today to effectively implement the unwaivable right to equitable remuneration for creators in generative AI training:

1. Universal, Machine-Readable Rights Metadata Standard

No comprehensive, widely adopted metadata standard exists that:

Clearly expresses copyright ownership, licensing terms, and opt-in/opt-out status.

Is compatible with diverse media types (text, images, audio, video).

Can be seamlessly integrated into AI training data pipelines at web scale.

2. Scalable Content Identification and Provenance Tracking

Robust, scalable tools to:

Identify and verify copyrighted works within massive, heterogeneous training datasets.

Track usage provenance of millions to billions of individual content pieces in AI training.

Detect duplicates, derivatives, or unauthorized use automatically.

3. Transparent, Verifiable Dataset Logging

Standardized frameworks and tools for AI developers to:

Log and document exactly what data was used for training, including source and rights status.

Make these logs auditable by third parties or regulatory bodies while protecting trade secrets.

Link training inputs to AI model outputs for potential remuneration attribution.

4. Automated Compliance and Rights Management Systems

Systems that can:

Automatically filter or flag copyrighted content with opted-out or unlicensed status before training.

Integrate rights clearance workflows into AI data ingestion pipelines.

Facilitate collective licensing and remuneration calculations in real time or post-training.

5. Standardized Auditing and Enforcement Mechanisms

Technical and procedural standards for:

Independent audits of AI training datasets and model outputs.

Cross-jurisdictional enforcement that can handle complex digital supply chains.

Secure, privacy-preserving data access for regulators and CMOs.

6. Efficient, Transparent Remuneration Infrastructure

Payment systems linked to:

Verified usage data enabling fair, transparent compensation to individual creators or CMOs.

Scalable micropayments or collective royalties tied to AI training and output commercial use.

Real-time or periodic settlements with minimal administrative overhead.

7. Governance and Interoperability Frameworks

Cross-industry and cross-border cooperation platforms that:

Facilitate sharing of rights data, audit results, and remuneration flows.

Harmonize standards across different media sectors and jurisdictions.

Provide trusted certification or “trust frameworks” for compliance.