The Silicon Revolution: Transistors, AI, and the New Age of Knowledge

Transistors — The Bedrock of Modern Technology

1.1. What Are Transistors?

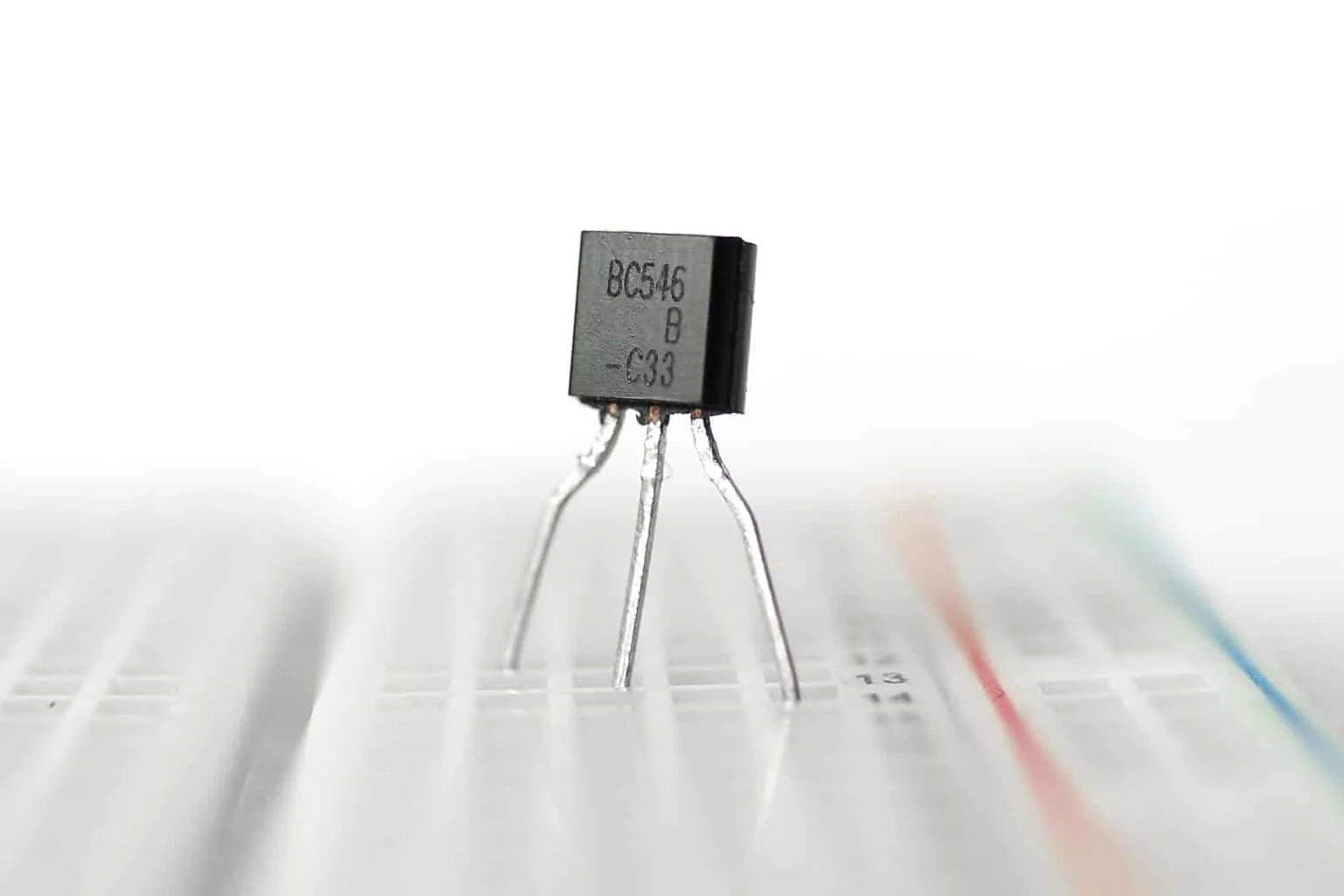

A transistor is a small semiconductor device that controls the flow of electrical current in an electronic circuit. It can amplify a signal or act as an electronically controlled switch, which makes it the fundamental building block of modern electronics. Transistors are used in virtually every electronic device, from smartphones to computers to televisions, and a single modern processor can contain billions of them.

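A classic bipolar junction transistor (BJT) has three terminals: the emitter, the base, and the collector. The emitter injects charge carriers (electrons in an NPN transistor, holes in a PNP transistor), the base controls the flow of these carriers, and the collector collects the charge that passes through the device. Because a small current into the base controls a much larger current through the collector, a small electrical signal can control a larger one, which is how transistors function as amplifiers and switches. The metal-oxide-semiconductor field-effect transistors (MOSFETs) that dominate today’s chips work on the same principle with different terminal names: a voltage on the gate controls the current flowing between the source and the drain.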
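The amplifier-versus-switch behavior of a bipolar transistor can be sketched with the usual textbook idealization: in its active region the collector current is roughly the base current multiplied by a gain β, and it can never exceed the limit set by the external circuit, at which point the transistor is saturated (fully "on"). This is a simplified model rather than a device simulation, and the gain of 100, the 5 V supply, and the 1 kΩ collector resistor are illustrative assumptions.

```python
def collector_current(i_base, beta=100.0, v_cc=5.0, r_c=1000.0):
    """Idealized collector current (amperes) of an NPN transistor.

    beta: assumed current gain; real devices vary widely.
    v_cc / r_c: the most current the supply and collector resistor allow,
    which caps the output when the transistor saturates (fully "on").
    """
    if i_base <= 0:
        return 0.0  # cut-off: no base current, the transistor is "off"
    i_saturation = v_cc / r_c  # ignores the small collector-emitter drop
    # Active region: output tracks input times beta; saturation: output is capped.
    return min(beta * i_base, i_saturation)


if __name__ == "__main__":
    for i_b in (0.0, 10e-6, 1e-3):
        print(f"I_B = {i_b * 1e6:7.1f} uA -> I_C = {collector_current(i_b) * 1e3:.2f} mA")
```

With 10 µA at the base the model passes 1 mA (amplification by β), while 1 mA at the base simply drives the output to the 5 mA ceiling, which is the switch-like "on" state used in digital logic.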

Because transistors are tiny and can switch on and off billions of times per second, very large numbers of them can be combined into powerful electronic systems. In essence, transistors act as the brain of modern electronics, making it possible for devices to perform increasingly complex tasks with speed and precision.

1.2. The Evolution of Transistor Technology

The journey of transistor technology began in 1947, when John Bardeen, Walter Brattain, and William Shockley at Bell Labs invented the first transistor. Unlike the bulky, fragile vacuum tubes used in early electronics, the transistor was smaller, more durable, and more energy-efficient, marking the beginning of a new era in electronics.

Initially, transistors were made from germanium, a semiconductor material that, while effective, had limitations in terms of heat resistance and reliability. In 1954, Texas Instruments produced the first commercial silicon transistor, and over the following decade silicon became the preferred material, thanks to its superior thermal stability, its abundance, and the stable insulating oxide that forms on its surface. This shift was pivotal, as silicon became the standard material for most modern electronic devices.

Over time, transistors were continually made smaller, allowing more of them to be packed into the same space. Integrated circuits (ICs), invented in 1958 and 1959 and adopted widely during the 1960s, made it possible to build multiple transistors on a single chip. This marked a leap in computing power, as entire circuits could be miniaturized, and it led to the first microprocessors in the early 1970s.

As transistors became smaller and more efficient, their potential applications expanded. The semiconductor industry embraced new manufacturing techniques, and by the 1980s complementary metal-oxide-semiconductor (CMOS) technology, which pairs n-type and p-type MOS transistors so that a logic gate draws significant current only while it is switching, had revolutionized chip design, offering both low power consumption and high-speed performance. CMOS quickly became the standard for most digital electronics, from computers to cell phones.
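The complementary idea behind CMOS can be illustrated at the switch level. The sketch below treats each MOSFET as an ideal on/off switch (an assumption that ignores voltages, timing, and leakage) and wires four of them into a NAND gate, the standard four-transistor CMOS arrangement: two p-type transistors in parallel pull the output up to the supply, and two n-type transistors in series pull it down to ground.

```python
def nmos_conducts(gate: int) -> bool:
    """An n-type MOSFET conducts when its gate is driven high (1)."""
    return gate == 1


def pmos_conducts(gate: int) -> bool:
    """A p-type MOSFET conducts when its gate is driven low (0)."""
    return gate == 0


def cmos_nand(a: int, b: int) -> int:
    # Pull-up network: two pMOS transistors in parallel connect the output to the supply.
    pull_up = pmos_conducts(a) or pmos_conducts(b)
    # Pull-down network: two nMOS transistors in series connect the output to ground.
    pull_down = nmos_conducts(a) and nmos_conducts(b)
    # Complementary design: exactly one network conducts for any steady input,
    # so there is never a direct supply-to-ground path (hence low static power).
    assert pull_up != pull_down
    return 1 if pull_up else 0


if __name__ == "__main__":
    for a in (0, 1):
        for b in (0, 1):
            print(a, b, "->", cmos_nand(a, b))
```

The assertion inside `cmos_nand` captures why CMOS is power-efficient: in every steady input state one network is off, so the gate only draws meaningful current during the brief moment of switching.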

1.3. How Transistors Became the Heart of Computing

Transistors are the core components that drive modern computing technology, and their evolution has been directly linked to the rise of the digital age. After their introduction, transistors steadily replaced the cumbersome and unreliable vacuum tubes in electronic circuits, and by the late 1950s and 1960s they had become essential to logic circuits, the building blocks of digital computers. Their ability to switch between on and off states, representing binary 1s and 0s, was ideal for implementing the logic operations needed for computation.

In the early days of computing, computers were massive and slow, with a single machine often occupying an entire room. As transistor technology advanced, computers became smaller and faster. Packing more transistors into integrated circuits (microchips) was key to the development of personal computers in the 1970s and 1980s. Moore’s Law, the observation that the number of transistors on a chip doubles approximately every two years, became a target the industry worked to meet, further propelling the explosive growth in computing power. The resulting miniaturization paved the way for laptops, smartphones, and cloud computing.

With each new generation of transistors, the performance per unit of energy improved, enabling increasingly powerful machines that could handle ever-larger datasets, perform complex calculations, and run sophisticated algorithms. Artificial Intelligence (AI) and machine learning applications, for instance, are heavily reliant on transistor-powered computing systems to process vast amounts of data, train algorithms, and provide real-time responses.

In essence, transistors are the heart of modern computing. They enable the processing, storage, and transmission of information, forming the foundation of everything from everyday consumer electronics to advanced scientific research.

1.4. The Miniaturization Miracle: From Vacuum Tubes to Microchips

The transition from vacuum tubes to transistors represents one of the most significant advancements in technology history. Vacuum tubes, used in early electronic devices like radios and computers, were bulky, fragile, consumed significant amounts of power, and generated excessive heat. They were also prone to failure, limiting the reliability and scalability of electronic systems.

The invention of the transistor solved these problems by providing a much smaller, more durable, and more energy-efficient alternative. Early transistors were far larger than today’s, but their potential for miniaturization was soon apparent. Over time, transistors shrank, and the number that could be placed in a given space grew exponentially.

In the 1960s, integrated circuits (ICs) allowed many transistors to be placed on a single chip, which dramatically reduced the size and cost of electronic components. The arrival of the microprocessor in 1971, a single chip containing thousands of transistors, further accelerated the miniaturization trend. By the late 1980s, personal computers had become not only affordable but also capable of performing complex computations in a fraction of the time they once took.

The ongoing trend of miniaturization, fueled by shrinking transistors and advances in semiconductor manufacturing techniques, has allowed devices to become more powerful, more efficient, and more compact. This has enabled mobile phones, laptops, wearables, and even AI-powered systems that fit in the palm of your hand. The transistors in modern microchips are so small that billions of them can fit onto a single chip the size of a fingernail.

As transistor technology continues to evolve, engineers are increasingly running up against the physical limits of miniaturization. While traditional silicon-based transistors are approaching their size limits, new approaches such as quantum computing, neuromorphic computing, and 3D transistor architectures are emerging to push the boundaries of computing power even further.

Moore’s Law: The Unseen Force Behind Exponential Growth

2.1. Understanding Moore’s Law and Its Origins

Moore’s Law, named after Gordon Moore, who was then at Fairchild Semiconductor and later co-founded Intel, is one of the most influential concepts in the history of technology. In 1965, Moore observed that the number of components on an integrated circuit (IC) had been doubling roughly every year and predicted that the trend would continue for at least a decade, leading to an exponential increase in computing power. This observation was based on the rapid advancements in semiconductor technology at the time and became a guiding principle for the semiconductor industry.

Moore’s Law is not a law of physics but an empirical observation rooted in the growth patterns of technology. Initially, it referred specifically to the number of transistors that could be placed on a silicon chip. As transistors got smaller, more of them could be crammed onto a single chip, leading to increased processing power. Over time, Moore’s Law became shorthand for the overall trend of technological acceleration in computing, as the power of processors increased, the size of devices decreased, and prices continued to fall.

Moore’s original prediction stated that the number of transistors on a chip would double every year, but in 1975 he revised it to a doubling every two years. This more conservative prediction held largely true for several decades, and it became a key metric for the pace of progress in the semiconductor industry.
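The difference between a one-year and a two-year doubling period is easiest to see as a formula, N(t) = N0 × 2^((t − t0)/T), where T is the doubling period. The sketch below assumes a perfectly constant doubling period (real progress was lumpier) and uses the Intel 4004's roughly 2,300 transistors in 1971 as an illustrative starting point.

```python
def projected_transistors(n0: float, year0: int, year: int, doubling_years: float = 2.0) -> float:
    """Project a transistor count assuming a constant doubling period.

    Implements N(t) = N0 * 2 ** ((t - t0) / T), where T is the doubling period in years.
    """
    return n0 * 2 ** ((year - year0) / doubling_years)


if __name__ == "__main__":
    # Starting point: Intel 4004 (1971, about 2,300 transistors).
    for year in (1981, 1991, 2001, 2011, 2021):
        print(year, f"{projected_transistors(2_300, 1971, year):,.0f}")
    # The same 50 years under Moore's original one-year doubling period.
    print(f"{projected_transistors(2_300, 1971, 2021, doubling_years=1.0):.2e}")
```

With a two-year period, 50 years means 25 doublings and a projection of about 77 billion transistors, the same order of magnitude as the largest chips of the early 2020s, which contain tens of billions. A one-year period would instead give 2,300 × 2^50, roughly 2.6 × 10^18, which shows why the 1975 revision mattered.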

2.2. The Impact of Moore’s Law on Transistor Density

The true significance of Moore’s Law lies in its direct impact on transistor density. Transistor density refers to the number of transistors that can be packed into a given space, typically measured in transistors per square millimeter. As transistor density increases, more transistors can fit on a single chip, allowing for greater computational power without significantly increasing the chip’s size.

For example, Intel’s 4004 microprocessor, released in 1971, had around 2,300 transistors. By the mid-1990s, the Pentium Pro microprocessor housed over 5 million transistors, and today’s high-end desktop processors, such as Intel’s Core i9 and AMD’s Ryzen 9 families, contain billions of transistors (on the order of 10 billion or more), all inside a package only slightly larger than a coin.
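Two data points from the paragraph above are enough to check Moore's two-year rule: the number of doublings between them is log2 of their ratio, and dividing the elapsed years by that number gives the implied doubling period. The sketch assumes the 4004 (1971, about 2,300 transistors) and the Pentium Pro (released in 1995, taken here as 5 million transistors, the lower bound quoted above).

```python
import math


def implied_doubling_time(n_start: float, year_start: float, n_end: float, year_end: float) -> float:
    """Years per doubling implied by two (year, transistor count) data points."""
    doublings = math.log2(n_end / n_start)  # how many times the count doubled
    return (year_end - year_start) / doublings


if __name__ == "__main__":
    # Intel 4004 (1971, ~2,300) to Pentium Pro (1995, ~5 million).
    t = implied_doubling_time(2_300, 1971, 5_000_000, 1995)
    print(f"{t:.2f} years per doubling")
```

The result is a little over two years per doubling (about 11 doublings in 24 years), close to Moore's revised prediction.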

The increase in transistor density has been the driving force behind the exponential growth in computing power. More transistors allow for more complex instructions, parallel processing, and faster execution, which in turn enables more sophisticated applications, from AI to cloud computing to virtual reality.

This trend has made it possible to reduce the size of electronic devices while increasing their functionality. Smartphones, for example, today house far more processing power than early supercomputers, all within the palm of your hand.

2.3. The Relationship Between Moore’s Law, Transistors, and Computing Power

Moore’s Law has been the unseen force linking transistor counts to computing power. The basic principle is straightforward: the more transistors you can pack onto a chip, the more complex the tasks the chip can handle. This translates into greater computing power, meaning the ability of a computer to perform more operations per second.

To understand this relationship, consider how transistors function. A transistor acts as a switch in a circuit, turning on or off to represent binary 1s and 0s in a computer. As you add more transistors, you expand the number of logic gates that can be constructed, which means more complex calculations and data processing can happen in parallel. This is especially important for multi-core processors used in modern computing, where multiple sets of transistors work simultaneously to handle large volumes of data or perform complex algorithms like those found in AI and machine learning.
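To make "more transistors, more logic gates" concrete, the sketch below builds a binary adder entirely out of NAND gates, which are functionally complete (any logic function can be composed from them). It uses the standard nine-NAND full adder and chains one full adder per bit, a design known as a ripple-carry adder. The transistor count assumes each NAND is a four-transistor static CMOS gate; real processors use faster, larger adder designs, so the numbers only illustrate how transistor counts grow with the work a circuit performs.

```python
def nand(a: int, b: int) -> int:
    return 1 - (a & b)


def full_adder(a: int, b: int, carry_in: int) -> tuple[int, int]:
    """One-bit full adder built from nine NAND gates; returns (sum, carry_out)."""
    n1 = nand(a, b)
    n2 = nand(a, n1)
    n3 = nand(b, n1)
    half_sum = nand(n2, n3)       # a XOR b
    n5 = nand(half_sum, carry_in)
    n6 = nand(half_sum, n5)
    n7 = nand(carry_in, n5)
    total = nand(n6, n7)          # a XOR b XOR carry_in
    carry_out = nand(n1, n5)      # (a AND b) OR (half_sum AND carry_in)
    return total, carry_out


def ripple_carry_add(x: int, y: int, width: int) -> int:
    """Add two unsigned integers bit by bit; any carry beyond `width` bits is dropped."""
    carry, result = 0, 0
    for i in range(width):
        bit, carry = full_adder((x >> i) & 1, (y >> i) & 1, carry)
        result |= bit << i
    return result


def adder_transistor_count(width: int, nands_per_bit: int = 9, transistors_per_nand: int = 4) -> int:
    """Transistors in the adder, assuming four-transistor CMOS NAND gates."""
    return width * nands_per_bit * transistors_per_nand


if __name__ == "__main__":
    print(ripple_carry_add(13, 7, width=8))  # 20
    for width in (8, 32, 64):
        print(width, "bits:", adder_transistor_count(width), "transistors")
```

Doubling the word width doubles the transistor count (288, 1,152, and 2,304 transistors for 8, 32, and 64 bits), and running several adders side by side multiplies it again, which is exactly the kind of parallel hardware that a larger transistor budget pays for.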

Moore’s Law effectively sets the pace for advancements in computing: as transistor density doubles, the cost per transistor decreases, making computing more affordable and accessible. The result is an ongoing exponential improvement in computing power, which has driven industries forward, enabling everything from personal computers to cloud computing and AI.

Moreover, the growing transistor budget described by Moore’s Law has made multi-core processors possible: each core on a chip can operate on different data simultaneously, multiplying performance on workloads that can be split into independent parts. Shrinking transistors have also made room for specialized chips like GPUs and AI accelerators that handle highly parallel computations, such as those needed in machine learning and data processing.
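How much extra cores help depends on how much of a workload can actually run in parallel. A standard way to quantify this is Amdahl's law, which is not discussed in the original text: if a fraction p of a program parallelizes perfectly across n cores, the overall speedup is 1 / ((1 − p) + p/n). The 90% parallel fraction below is an arbitrary illustrative assumption.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Amdahl's law: overall speedup when only part of a workload parallelizes.

    speedup = 1 / ((1 - p) + p / n), with p the parallel fraction and n the core count.
    """
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    serial = 1.0 - parallel_fraction
    return 1.0 / (serial + parallel_fraction / cores)


if __name__ == "__main__":
    for cores in (1, 2, 4, 8, 16, 64, 1024):
        print(cores, round(amdahl_speedup(0.9, cores), 2))
```

With 90% of the work parallel, 16 cores give a 6.4× speedup, and no number of cores can exceed 10×, because the serial 10% always remains. This is why highly parallel chips such as GPUs pay off most on workloads, like neural-network training, that are almost entirely parallel.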

2.4. The Slowdown of Moore’s Law: What’s Next?

Despite its decades-long success, Moore’s Law has shown signs of slowing down in recent years. Leading-edge manufacturing processes are now marketed as 3 to 5 nanometer nodes (labels that no longer correspond directly to any single physical dimension of the transistor), and the smallest features are approaching atomic scales. At these scales, quantum effects such as electron tunneling become more significant, causing leakage current that wastes power and makes heat dissipation harder. As a result, it has become increasingly difficult and expensive to continue scaling down transistor sizes at the same pace as before.
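Tunneling becomes a problem with shrinking because the probability of an electron leaking through a thin insulating barrier falls off exponentially with the barrier's thickness, so thinning it by even a nanometer raises leakage by orders of magnitude. The sketch below uses the simplest textbook approximation for a rectangular barrier, T ≈ exp(−2κd) with κ = √(2m(V − E))/ħ, dropping the prefactor and using the free-electron mass. The 3 eV barrier height is an illustrative assumption, not a measured value for any particular transistor.

```python
import math

# Physical constants (SI units).
HBAR = 1.054571817e-34          # reduced Planck constant, J*s
ELECTRON_MASS = 9.1093837015e-31  # free-electron mass, kg
EV = 1.602176634e-19            # joules per electronvolt


def tunneling_probability(barrier_ev: float, thickness_nm: float) -> float:
    """Rough probability T ~ exp(-2 * kappa * d) that an electron tunnels through
    a rectangular barrier whose top lies barrier_ev above the electron's energy."""
    kappa = math.sqrt(2 * ELECTRON_MASS * barrier_ev * EV) / HBAR  # decay constant, 1/m
    return math.exp(-2 * kappa * thickness_nm * 1e-9)


if __name__ == "__main__":
    for d in (3.0, 2.0, 1.0):
        print(f"{d} nm barrier: T ~ {tunneling_probability(3.0, d):.1e}")
```

Under these assumptions, going from a 2 nm to a 1 nm barrier raises the tunneling probability by a factor of tens of millions, which is why leakage grows so sharply as insulating layers approach atomic thickness.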

The economic and technological costs of continuing to shrink transistors are also growing. Semiconductor manufacturers such as Intel, TSMC, and Samsung are pushing the boundaries of nanotechnology, but each new process generation is more expensive to develop, and the performance gains are becoming less dramatic. Intel’s move from its 14 nm process to 10 nm, for example, arrived years later than originally planned.

So, what’s next after Moore’s Law?

New Materials: Researchers are exploring new semiconductor materials, such as graphene, gallium nitride (GaN), and carbon nanotubes, which could offer higher performance and efficiency at smaller scales.

Quantum Computing: Quantum computers could offer dramatic speedups on certain classes of problems, such as simulating molecules or factoring large numbers, that are intractable for classical computers. However, quantum computing is still in its early stages and faces challenges such as stability and scalability.

Neuromorphic Computing: This approach mimics the structure and function of the human brain. By designing circuits and systems that operate more like biological neurons, neuromorphic chips can enable breakthroughs in artificial intelligence.

3D Transistors: Companies are developing new architectures where transistors are stacked vertically in 3D structures rather than spread out horizontally. This allows for more transistors in the same physical space and can potentially increase performance without shrinking transistors further.

As the scaling of traditional transistors slows down, the future of computing will likely depend on a combination of these emerging technologies. In addition, hardware acceleration techniques, such as specialized AI chips (e.g., Google’s TPUs or NVIDIA’s GPUs), are already being used to complement traditional computing power and drive performance for applications like deep learning.

Transistors and Artificial Intelligence: A Symbiotic Relationship

3.1. The Role of Transistors in the Birth of AI

The relationship between transistors and artificial intelligence (AI) is deeply intertwined, with transistors playing a fundamental role in the birth and evolution of AI technologies. In the mid-20th century, the advent of the transistor revolutionized the way electronic systems were designed, moving away from the bulky and inefficient vacuum tubes that had previously dominated computing hardware. This allowed for the development of smaller, faster, and more reliable computers — essential for the emerging field of AI.

Early AI research, from Alan Turing’s 1950 proposal of a test for machine intelligence to the first neural networks such as Frank Rosenblatt’s perceptron in 1958, was constrained by the available computational power. Early computers, which relied on vacuum tubes or rudimentary transistors, lacked the processing speed and memory capacity required for complex problem-solving. However, the miniaturization of transistors in the 1960s and 1970s enabled the development of more capable machines, eventually paving the way for AI research to take off.

The advent of transistors made it possible to build machines that could handle the necessary operations for early AI algorithms. These machines were able to perform symbolic reasoning, a form of AI based on manipulating symbols to perform tasks like playing chess or solving mathematical problems. Transistors, in effect, provided the computing backbone that powered the foundational AI systems, from expert systems to early machine learning (ML) algorithms.

Without the exponential increase in transistor density and the advent of integrated circuits, AI would not have had the hardware it needed to evolve. Early AI algorithms and systems were only practical because of the increasing computing power that transistors enabled.

3.2. How Increasing Transistor Density Fuels AI’s Rise

As transistor density increased, so too did the potential for AI systems to grow more powerful. Moore's Law, which predicted the doubling of transistor density approximately every two years, directly fueled this growth. As transistors became smaller and more densely packed, computers became faster, more energy-efficient, and cost-effective, allowing for more sophisticated AI models to be developed.

The increase in transistor density is especially important for machine learning and deep learning. AI models must process vast amounts of data, and the more transistors available, the more operations a computer can perform in parallel. In modern AI, neural networks, which are loosely inspired by the structure of the human brain, can require billions of arithmetic operations to process a single input, and training repeats such computations across enormous datasets while adjusting the model’s weights.
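The arithmetic behind that claim is easy to count. In a fully connected (dense) layer, every output is a weighted sum of every input, so a layer with n_in inputs and n_out outputs performs n_in × n_out multiply-add operations per example, and each output sum can be computed independently of the others, which is what parallel hardware exploits. The layer sizes below are hypothetical, chosen only for illustration.

```python
def dense_layer(inputs: list[float], weights: list[list[float]], biases: list[float]) -> list[float]:
    """Fully connected layer: each output is a weighted sum of all inputs plus a bias."""
    return [
        sum(w * x for w, x in zip(row, inputs)) + b  # one multiply-add per weight
        for row, b in zip(weights, biases)           # rows are independent of each other
    ]


def mlp_multiply_adds(layer_sizes: list[int]) -> int:
    """Multiply-add operations for one forward pass of a fully connected network."""
    return sum(n_in * n_out for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))


if __name__ == "__main__":
    print(dense_layer([1.0, 2.0], [[0.5, -1.0], [2.0, 0.25]], [0.0, 1.0]))  # [-1.5, 3.5]
    print(f"{mlp_multiply_adds([4096, 4096, 4096, 1000]):,}")
```

Even this small hypothetical network needs about 37.7 million multiply-adds per input; training multiplies that by the number of examples and passes over the data, and large modern models are many orders of magnitude bigger.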

The rise of deep learning algorithms, which power technologies like image recognition, natural language processing, and autonomous driving, is directly linked to the ability of hardware to handle such complex computations. GPUs (Graphics Processing Units), which are optimized for parallel computing, rely on high transistor density to carry out thousands of computations simultaneously, adding up to trillions of operations per second. This capability would not be possible without the dense transistor architectures that have become standard in modern computing.

In short, as the number of transistors per chip has skyrocketed, AI systems have been able to perform increasingly complex tasks more efficiently. The more transistors available, the more data and tasks an AI system can handle in parallel, enabling the scaling of AI models to unprecedented levels.

3.3. From Simple Algorithms to Deep Learning: The Hardware Behind AI Evolution

AI has undergone an incredible evolution, progressing from simple rule-based algorithms to complex deep learning models that approximate some human cognitive abilities. This transformation has been driven by both software innovations and hardware advancements, with transistors playing a central role in the latter.

Early AI programs from the 1950s onward were based on symbolic AI, and the expert systems that followed in the 1970s and 1980s used human-defined rules to make decisions. These systems, while groundbreaking, were limited by the processing capabilities of the hardware they ran on, which was built from transistors but was far less powerful than today’s.
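A rule-based system of this kind can be sketched as forward chaining: start from known facts and keep applying if-then rules until nothing new can be derived. The sketch below is a minimal illustration of that idea, not a reconstruction of any historical expert system, and the rules and fact names are hypothetical.

```python
def forward_chain(facts: set[str], rules: list[tuple[frozenset[str], str]]) -> set[str]:
    """Apply if-then rules repeatedly until no new facts can be derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            # A rule fires when all of its conditions are already known facts.
            if conditions <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known


# Hypothetical, hand-written rules in the style of an early diagnostic expert system.
RULES = [
    (frozenset({"has_fever", "has_cough"}), "possible_flu"),
    (frozenset({"possible_flu", "short_of_breath"}), "refer_to_doctor"),
]

if __name__ == "__main__":
    observed = {"has_fever", "has_cough", "short_of_breath"}
    print(sorted(forward_chain(observed, RULES) - observed))
```

Every piece of "knowledge" here is written by hand; the program only combines it. Real expert systems held thousands of such rules, and checking them repeatedly against large fact bases is what strained the hardware of the time.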

The resurgence of machine learning in the 1980s, helped by the popularization of the backpropagation algorithm for training neural networks, and the rise of deep learning from the late 2000s onward marked a significant leap forward. Deep learning networks, such as convolutional neural networks (CNNs) and recurrent neural networks (RNNs), require vast amounts of data processing to learn from examples and make predictions. Training these networks involves optimizing millions (or even billions) of parameters, which is computationally intensive. The ability to train such networks depends largely on the parallel processing power of modern multi-core CPUs and GPUs, both of which are built from dense arrays of transistors.
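At its core, "optimizing parameters" means repeatedly nudging each parameter in the direction that reduces the model's error. The sketch below shows that loop, gradient descent on mean squared error, for a model with just two parameters; deep networks use variants of the same update across millions or billions of parameters, with the gradients computed by backpropagation. The data and the learning rate are illustrative assumptions.

```python
def fit_line(xs: list[float], ys: list[float], learning_rate: float = 0.05, steps: int = 2000) -> tuple[float, float]:
    """Fit y = w * x + b by gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        # Prediction errors for the current parameters.
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        # Gradients of the mean squared error with respect to w and b.
        grad_w = 2.0 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2.0 / n * sum(errors)
        # Step each parameter against its gradient.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


if __name__ == "__main__":
    xs = [0.0, 1.0, 2.0, 3.0, 4.0]
    ys = [1.0, 3.0, 5.0, 7.0, 9.0]  # generated from y = 2x + 1
    w, b = fit_line(xs, ys)
    print(f"w = {w:.3f}, b = {b:.3f}")
```

Each step touches every parameter and every training example, so the cost scales with both; for large networks this is the workload that GPUs and other parallel hardware are built to absorb.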

Graphics Processing Units (GPUs), for example, contain thousands of smaller cores designed specifically for parallel computation, making them ideal for training deep learning models. This capability, fueled by the high transistor count in GPUs, has allowed AI to scale dramatically. Deep learning systems such as OpenAI’s GPT models or DeepMind’s AlphaGo require specialized hardware capable of handling massive parallel computations, something that only became possible due to advances in transistor density and semiconductor manufacturing techniques.

Thus, as transistors have evolved, so too has the ability to train and deploy more sophisticated AI models, pushing the boundaries of what AI can accomplish in areas like language translation, image generation, self-driving cars, and more.

3.4. The Future of AI: What More Transistors Could Mean for AGI (Artificial General Intelligence)

As we continue to push the limits of transistor density and computing power, we may be on the cusp of a new era in AI: Artificial General Intelligence (AGI) — machines that can perform any intellectual task that a human can. While current AI technologies are incredibly powerful, they are still narrow in scope, excelling only at specific tasks for which they have been trained. AGI, on the other hand, would require a system capable of understanding and learning from the full range of human experiences and adapting to a vast array of challenges.

Transistor density is likely to continue playing a crucial role in the development of AGI. As AI systems become more complex, they will probably require even more computational power to model the intricate patterns of human cognition and decision-making. Hardware advancements driven by increasing transistor density could provide the processing power needed to approach the cognitive flexibility that characterizes human intelligence.

The transition from narrow AI to AGI would also involve enhancing neural networks to handle increasingly complex tasks that go beyond pattern recognition, such as general reasoning, problem-solving, and abstract thinking. Pursuing these capabilities has so far meant building ever-larger neural networks, some with hundreds of billions of parameters, that demand more computational power than ever before.
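A quick calculation shows why parameter counts translate directly into hardware demands: every parameter must be stored, typically as a 16-bit or 32-bit number, before any computation happens. The parameter counts below are round illustrative values, and the estimate deliberately ignores the additional memory that training requires.

```python
def parameter_memory_gb(num_parameters: float, bytes_per_parameter: int = 2) -> float:
    """Memory (in gigabytes of 10**9 bytes) needed just to store a model's weights.

    bytes_per_parameter: 4 for 32-bit floats, 2 for 16-bit floats, 1 for 8-bit integers.
    Ignores activations, optimizer state, and other training overheads.
    """
    return num_parameters * bytes_per_parameter / 1e9


if __name__ == "__main__":
    for params in (1e9, 1e10, 1e11):
        print(f"{params:.0e} parameters -> {parameter_memory_gb(params):,.0f} GB at 16 bits")
```

At 16 bits per parameter, a model with a hundred billion parameters needs about 200 GB just for its weights, more than a single typical accelerator holds, so such models are usually spread across many chips.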

Moreover, the path to AGI may rely on specialized hardware designed to mimic the architecture of the human brain. Emerging technologies such as neuromorphic computing, which uses artificial neurons and synapses to simulate brain-like processing, could benefit greatly from further advancements in transistor technology. Neuromorphic chips, with their highly parallel processing and energy-efficient learning, could help unlock AGI’s potential.
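Many neuromorphic designs represent information as spikes, which are brief pulses in time, rather than as continuous numbers. A common simplified neuron model in this field is the leaky integrate-and-fire neuron: it accumulates input, slowly leaks charge, and fires once a threshold is crossed. The discrete-time sketch below illustrates the idea with an arbitrarily chosen threshold and leak factor. It is not the model used by any specific chip.

```python
def simulate_lif(input_current: list[float], threshold: float = 1.0, leak: float = 0.9) -> list[int]:
    """Discrete-time leaky integrate-and-fire neuron.

    Each step, the membrane potential decays by `leak` and adds the input; when it
    reaches `threshold`, the neuron emits a spike (1) and resets to zero.
    """
    potential = 0.0
    spikes = []
    for current in input_current:
        potential = leak * potential + current
        if potential >= threshold:
            spikes.append(1)
            potential = 0.0  # reset after firing
        else:
            spikes.append(0)
    return spikes


if __name__ == "__main__":
    print(simulate_lif([0.3] * 10))  # [0, 0, 0, 1, 0, 0, 0, 1, 0, 0]
```

A steady input produces a regular spike train, while an input too weak to overcome the leak never fires at all. Because such a neuron does work only when spikes occur, hardware built around it can be very energy-efficient.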

In essence, the future of AGI will likely hinge on the continued evolution of transistors and the development of hardware that supports more sophisticated AI models. As transistor technology pushes the boundaries of what’s computationally possible, AGI may no longer remain a distant dream but could become a reality within our lifetimes.

The Gutenberg Press and the Rise of the Digital Age

4.1. A Revolution in Knowledge: The Printing Press and Literature

The Gutenberg printing press, invented by Johannes Gutenberg in the mid-15th century, is one of the most significant technological innovations in human history. It revolutionized the way knowledge was produced, distributed, and consumed. Prior to the printing press, books were handwritten, often by monks or scribes, making them expensive, time-consuming to produce, and available only to the wealthy or religious elites. This severely limited the spread of knowledge and the development of literacy.

Gutenberg’s press combined movable metal type with an adapted screw press, allowing books and documents to be mass-produced in a fraction of the time it took to copy them by hand. Movable type had been invented earlier in China and Korea, but Gutenberg’s system made mass printing practical in Europe. As a result, books became more affordable, accessible, and widespread, which led to a dramatic shift in the landscape of knowledge dissemination. The printing press helped spread new ideas, intellectual movements, and cultural revolutions, fueling the Renaissance, the Reformation, and the Scientific Revolution.

One of the most significant impacts of the printing press was its ability to make literature and scientific knowledge available to the masses. The spread of printed materials helped democratize education, allowing individuals to learn and think independently. It also led to a global exchange of ideas, laying the groundwork for the intellectual currents that would shape the modern world.

In essence, the printing press was a catalyst for the mass distribution of knowledge, enabling the free flow of information, inspiring new ways of thinking, and igniting movements that would change history forever. It set the stage for the Digital Age centuries later.

4.2. The Parallel: How Transistors Are Revolutionizing Information Processing

In many ways, the transistor — a tiny semiconductor device that regulates electrical current — has played a role in modern technology similar to that of the Gutenberg printing press in the 15th century. Just as the printing press made the production of books faster and more efficient, transistors have revolutionized how we process, store, and transmit information in the digital age.

The development of transistors in the late 1940s and 1950s was a monumental leap in electronics. Prior to transistors, vacuum tubes were used to amplify electrical signals and act as switches in electronic circuits. However, vacuum tubes were bulky, power-hungry, and prone to failure. Transistors, by contrast, were smaller, more durable, and far more energy-efficient, making them ideal for miniaturizing electronic devices. Their impact was soon felt in computing, communications, and consumer electronics.

As transistor density increased over the decades, culminating in the rise of microchips and integrated circuits, computing power grew exponentially. This drove the digital revolution that gave rise to personal computers, the internet, smartphones, and eventually modern artificial intelligence (AI). The transistor’s ability to switch electrical signals efficiently underpins digital systems, where information is processed in binary form (1s and 0s), enabling everything from simple arithmetic to complex algorithms.
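As a small illustration of information "processed in binary form", the sketch below shows how a piece of text becomes a string of 1s and 0s and back again. It assumes the UTF-8 text encoding, in which each ASCII character occupies one eight-bit byte.

```python
def to_bits(text: str) -> str:
    """Encode text as UTF-8 and return its bits, eight per byte, space-separated."""
    return " ".join(f"{byte:08b}" for byte in text.encode("utf-8"))


def from_bits(bits: str) -> str:
    """Reverse of to_bits: parse space-separated 8-bit groups back into text."""
    return bytes(int(group, 2) for group in bits.split()).decode("utf-8")


if __name__ == "__main__":
    encoded = to_bits("AI")
    print(encoded)             # 01000001 01001001
    print(from_bits(encoded))  # AI
```

Each of those bits is ultimately held or moved by transistors switched on or off, whether the text is a two-letter word or an entire digitized library.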

The digital age owes much of its progress to transistor technology, which has transformed how we process data and allowed for the creation of devices that manage and transmit information at speeds unimaginable in the age of the printing press. Just as the printing press ignited a revolution in knowledge, the transistor ignited a revolution in information processing — accelerating the development of digital networks and information systems that define our modern world.

4.3. From Manuscripts to Microchips: Connecting the Past and Present

The journey from manuscripts to microchips illustrates the tremendous evolution of how knowledge is produced and shared. In the days before the printing press, handwritten manuscripts were painstakingly copied by scribes, often taking months or years to complete a single book. These manuscripts were precious and rare, limiting the spread of knowledge to a select few.

With the invention of the Gutenberg printing press, this method of transmission was replaced by the ability to mass-produce books quickly and efficiently. Printed books could now be distributed widely, allowing for the spread of knowledge across Europe and eventually the globe. This democratization of information was revolutionary in its own right, as it enabled the rise of literacy, education, and the exchange of ideas.

Fast forward to the 20th century, and the invention of the transistor in the late 1940s brought about the next great leap in the accessibility and speed of information transmission. Transistor-based computers transformed how we process and interact with data. Printed pages gave way to digital files, and entire libraries of books were stored in databases, accessible with just a few keystrokes.

Today, the internet, powered by semiconductor technologies and transistors, allows us to access much of human knowledge from any device with an internet connection. Knowledge once locked away in manuscripts or printed books is now available almost instantly to billions of people around the world. This digitization of knowledge mirrors the transformation set in motion by the printing press, but it is now amplified by transistor-powered systems that allow for the rapid exchange of information on a global scale.

The printing press and the transistor have both been catalysts in reshaping the ways humanity creates, stores, and distributes knowledge — with the latter laying the foundation for the Digital Revolution.

4.4. AI as the New Literary Force: A Digital Renaissance

Just as the printing press opened the door to new forms of literature, education, and communication, artificial intelligence (AI) represents the next phase of the digital renaissance. Today, AI is not only transforming how we interact with technology but also starting to create new forms of literature and artistic expression. AI-generated art, poetry, and even novels are emerging as new kinds of creative work, blurring the lines between human and machine creativity.

In the same way the printing press democratized access to literature, AI is democratizing creativity. Machine learning and natural language processing (NLP) models, such as OpenAI’s GPT series, can generate text that mimics human writing styles, producing stories, poetry, or even entire books. These advancements challenge traditional notions of authorship and creativity, allowing anyone with access to an AI model to produce polished content, much as the printing press allowed authors to reach large audiences without depending on scribes.
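Models like GPT generate text one piece at a time, each time choosing a likely continuation of what came before. The sketch below illustrates only that basic idea, using a far simpler, swapped-in technique: a word-level bigram model that looks at just the single previous word. It is a teaching example, not how GPT models work internally, and the tiny corpus is made up.

```python
import random
from collections import defaultdict


def train_bigrams(text: str) -> dict[str, list[str]]:
    """Record, for each word, every word that follows it in the training text."""
    words = text.split()
    followers: dict[str, list[str]] = defaultdict(list)
    for current, nxt in zip(words, words[1:]):
        followers[current].append(nxt)
    return dict(followers)


def generate(followers: dict[str, list[str]], start: str, length: int, seed: int = 0) -> str:
    """Sample a word sequence by repeatedly picking a recorded successor of the last word."""
    rng = random.Random(seed)  # seeded so the output is reproducible
    words = [start]
    while len(words) < length and words[-1] in followers:
        words.append(rng.choice(followers[words[-1]]))
    return " ".join(words)


if __name__ == "__main__":
    corpus = "the press printed the book and the book spread the idea"
    model = train_bigrams(corpus)
    print(generate(model, "the", length=8))
```

Every word pair in the output appeared somewhere in the training text, so the model can only remix its corpus; large language models condition on far longer contexts with learned representations, which is what lets them produce coherent passages.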

Furthermore, AI is enabling a new era of personalized literature. AI-driven recommendation systems allow readers to discover books, articles, and papers tailored to their specific interests. In the realm of education, AI tutors can help students learn at their own pace, offering personalized learning experiences based on individual strengths and weaknesses.
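One common way to tailor suggestions is content-based filtering: describe each item and each reader as a vector of topic weights, then recommend the items most similar to the reader's profile, often measured by cosine similarity. The sketch below is an assumption about how such a system might work, not a description of any particular product, and the titles and weights are hypothetical.

```python
import math


def cosine_similarity(a: dict[str, float], b: dict[str, float]) -> float:
    """Cosine of the angle between two sparse topic-weight vectors."""
    dot = sum(weight * b.get(topic, 0.0) for topic, weight in a.items())
    norm_a = math.sqrt(sum(w * w for w in a.values()))
    norm_b = math.sqrt(sum(w * w for w in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def recommend(reader: dict[str, float], catalog: dict[str, dict[str, float]], k: int = 2) -> list[str]:
    """Return the k catalog titles whose topic profile best matches the reader's."""
    ranked = sorted(catalog, key=lambda title: cosine_similarity(reader, catalog[title]), reverse=True)
    return ranked[:k]


# Hypothetical topic weights for a tiny catalog and one reader.
CATALOG = {
    "History of Printing": {"history": 1.0, "printing": 1.0},
    "Intro to Transistors": {"electronics": 1.0, "physics": 0.5},
    "Chips and Civilization": {"history": 0.5, "electronics": 1.0},
}
READER = {"history": 1.0, "electronics": 0.2}

if __name__ == "__main__":
    print(recommend(READER, CATALOG))
```

A reader mostly interested in history is matched first with the printing history title and then with the book that mixes history and electronics. Production systems typically learn these vectors from reading behavior rather than assigning them by hand.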

The Digital Renaissance is in full swing, and AI is at its heart. Just as the printing press brought about a new age of literature and intellectual growth, AI is now poised to redefine creativity, producing works that begin with human input but are shaped in large part by the model itself.

Estimating the Scale: How Many Transistors Have Been Made?

5.1. The Importance of Estimating Transistor Production

Transistors are the fundamental building blocks of modern electronics, driving everything from computers to smartphones to AI systems. Understanding how many transistors have been produced over time is not just an academic exercise; it is crucial for gaining insights into the growth and impact of the semiconductor industry, as well as forecasting the future of technology development.

Estimates of transistor production provide a way to gauge the evolution of computing power, the progress of miniaturization, and the overall scale of global electronics manufacturing. Since the invention of the transistor in 1947, the number of transistors produced has skyrocketed, reflecting the dramatic changes in how we process, store, and transmit information. By estimating how many transistors have been made, we can better understand how far we've come and where we are headed in terms of technology scaling.

Furthermore, transistor production is a critical indicator of the economic health of the technology sector. As transistors become smaller, cheaper, and more powerful, they help to drive the economies of scale that have led to the widespread availability of cutting-edge devices. Transistor estimates also help in understanding the environmental impact of semiconductor manufacturing and the energy efficiency improvements that have occurred over time.

5.2. Historical Growth of the Semiconductor Industry

The story of the semiconductor industry is a tale of exponential growth, largely driven by the increasing transistor density on integrated circuits (ICs). Since the first transistor was invented at Bell Labs in 1947, the production of transistors has grown at an astonishing rate, fueled by advancements in technology, manufacturing techniques, and demand for consumer electronics.

The 1950s and 1960s marked the beginning of transistor adoption, with early transistors used in radios, computers, and communication equipment. During the 1960s, integrated circuits (ICs) made it possible to fit multiple transistors on a single silicon chip, and by the early 1970s an entire processor could fit on one. This scaling process accelerated throughout the 1980s and 1990s as Moore’s Law took hold, with the number of transistors on a chip doubling approximately every two years, leading to rapid increases in computational power and decreases in cost.

From fewer than 10 transistors on the first integrated circuits of the late 1950s and early 1960s to billions on modern microprocessors, the semiconductor industry has scaled remarkably. For example, the Intel 4004 microprocessor, released in 1971, had about 2,300 transistors, while today’s high-end desktop processors, such as Intel’s Core i9 line, are estimated to contain on the order of 10 billion.

The growth in transistor production mirrors the rise of consumer electronics, computing devices, and, more recently, smartphones, artificial intelligence, and cloud computing. In the 2000s, the industry adopted multi-core processors, which delivered greater performance by letting multiple cores (each with tens of millions of transistors or more) perform computations in parallel.

Today, the industry faces new challenges, including the physical limits of transistor scaling and competition from emerging paradigms such as quantum computing, but the historical trajectory of transistor production has been a key driver of technological innovation for decades.

5.3. Memory Chips, Logic Chips, and Other Devices: Where Transistors Are Made

Transistors are made in several types of semiconductor devices, each serving a different function. Understanding where these transistors are used — and how many are produced — is essential to estimating the total number of transistors ever made. The two most prominent categories are memory chips and logic chips, but transistors also appear in a variety of other devices, such as sensors, power devices, and displays.

Memory Chips:

Memory chips, such as DRAM (Dynamic Random Access Memory) and NAND flash, are some of the largest consumers of transistors. These chips store and retrieve data and are found in virtually all electronic devices, from computers to smartphones.

The density of transistors in memory chips has grown dramatically over the years. For instance, in modern DRAM chips, billions of transistors are packed into a small chip, enabling larger storage capacities and faster data access speeds.

By one estimate, the DRAM market alone has shipped more than 16 billion gigabytes of memory since the 1990s. Because every DRAM bit is stored by its own transistor, this makes memory a major share of all transistor production.
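To see why memory weighs so heavily in the count, the shipment estimate above can be converted into transistors. The sketch below is a back-of-the-envelope calculation under stated assumptions: one transistor per bit (the one-transistor, one-capacitor cell used in DRAM), binary gigabytes of 2^30 bytes, and peripheral circuitry ignored. The 16-billion-gigabyte input is the estimate quoted above, not an independently verified figure, and the function name is illustrative.

```python
# Back-of-the-envelope: cumulative DRAM shipments -> transistor count.
# Assumptions (illustrative): one access transistor per stored bit (1T1C cell),
# 1 GB = 2**30 bytes, and peripheral circuitry (sense amps, decoders) ignored.

BITS_PER_GB = 8 * 2**30          # 8,589,934,592 bits in a binary gigabyte
TRANSISTORS_PER_BIT = 1          # one access transistor per DRAM cell


def dram_transistors(gigabytes: float) -> float:
    """Approximate number of cell transistors in `gigabytes` of DRAM."""
    return gigabytes * BITS_PER_GB * TRANSISTORS_PER_BIT


cumulative_gb = 16e9             # the "more than 16 billion gigabytes" estimate
total = dram_transistors(cumulative_gb)
print(f"{total:.2e} transistors")  # 1.37e+20 transistors
```

Even treated as a floor, the result is on the order of 10^20 transistors from DRAM alone.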

Logic Chips:

Logic chips, such as microprocessors and graphics processing units (GPUs), perform the computations that drive everything from personal computers to artificial intelligence. These chips are made up of millions to billions of transistors that perform complex operations.

The growth of transistor production in logic chips has been directly tied to the rise of computing, with multi-core processors and AI accelerators pushing the demand for more transistors to increase computational power.

Major chip designers and manufacturers, such as Intel, AMD, NVIDIA, and Apple, produce chips with billions of transistors for everything from personal computing to gaming, cloud computing, and machine learning.

Other Devices:

While memory and logic chips dominate transistor production, other devices like sensors, displays, and power management chips also consume transistors.

For example, sensor chips used in smartphones, wearables, and autonomous vehicles are packed with small transistors to handle data processing and wireless communication.

Similarly, power transistors are used in power supply circuits, voltage regulators, and electric vehicles. They account for a growing share of semiconductor demand as energy efficiency and electric mobility expand, although their contribution to the total transistor count is small, since each device contains relatively few transistors.

These categories contribute very unevenly to the total. Memory chips, because of their high density, account for the largest share of all transistors ever made, while logic chips, especially those used in computing and AI, are growing rapidly as demand for faster and more powerful devices increases.

5.4. The Impact of Transistor Scaling on Technology and Society

The scaling of transistors over the past several decades has had profound effects on both technology and society. As transistors have shrunk, computing power has risen while the cost per transistor has fallen. This has led to a proliferation of digital technologies, transforming industries, economies, and lifestyles worldwide.

Technological Impact:

Miniaturization: The shrinking of transistors has enabled the creation of incredibly small and powerful devices. Smartphones, laptops, and wearables are all products of transistor miniaturization, and these devices have revolutionized how we communicate, work, and live.

Computing Power: With each new generation of transistors, computing systems become more powerful and capable of handling increasingly complex tasks. This has fueled advancements in artificial intelligence, big data analytics, cloud computing, and autonomous systems.

New Industries: Transistor scaling has also led to the creation of new industries. The semiconductor industry now generates roughly half a trillion dollars or more in annual revenue and underpins an electronics sector worth trillions, spanning everything from consumer electronics to advanced manufacturing.

Societal Impact:

Digital Transformation: The rise of affordable, high-performance computing has enabled the digitalization of society. From the internet of things (IoT) to e-commerce, social media, and healthcare innovations, the effects of transistor scaling are visible in virtually every aspect of modern life.

Access to Information: The internet — powered by semiconductor technologies — has democratized access to information. It has revolutionized education, communication, and global connectivity, making knowledge more accessible than ever before.

Automation and AI: Transistor scaling has been a key enabler of automation and artificial intelligence. AI-powered systems can analyze vast amounts of data at lightning speeds, transforming industries such as healthcare, finance, and transportation. As transistor density increases, the potential for more advanced AI and machine learning applications continues to grow.

Further transistor scaling will keep accelerating innovation, creating technologies and applications that we can scarcely imagine today. As conventional scaling approaches its limits, new materials, designs, and architectures will need to be explored, ensuring that the transistor’s impact on society remains profound for generations to come.

Creating a Framework for Estimating Transistor Production

6.1. Categorizing Semiconductor Devices: Memory, Logic, and Others

In order to estimate the total number of transistors ever produced, it is essential to categorize the semiconductor devices where these transistors are used. Each type of device plays a different role in the broader technological ecosystem and contributes differently to transistor production.

Memory Chips:

Memory chips, including DRAM (Dynamic Random Access Memory), NAND flash, and SRAM (Static Random Access Memory), are some of the largest consumers of transistors. These devices store and retrieve data in computers, smartphones, and other electronic systems.

Memory chips are high-density devices, packing a large number of transistors into a relatively small area. For example, modern DRAM chips contain billions of transistors per chip, and because the NAND flash used in solid-state drives (SSDs) stores several bits in each memory-cell transistor, a single high-capacity NAND die can hold hundreds of billions of cells.

Logic Chips:

Logic chips include microprocessors, graphics processing units (GPUs), and application-specific integrated circuits (ASICs). These chips perform the calculations and operations that power computers, gaming consoles, AI models, and much more.

Logic chips have grown far more complex than earlier devices because they must handle complex operations and parallel processing. For instance, modern Intel Core i9 processors contain billions of transistors on a single chip.

Other Devices:

Other semiconductor devices include sensors, displays, power devices, and communication chips. While these devices may not be as transistor-heavy as memory and logic chips, they still use transistors to handle signal processing, power regulation, and data transmission.

Power devices, such as those found in electric vehicles (EVs) or solar power systems, and sensor chips used in IoT devices are increasingly important and contribute to transistor production, though they typically consume fewer transistors per device than memory or logic chips.

To estimate the total number of transistors produced, it’s crucial to examine how these different categories contribute to the overall semiconductor market and allocate production estimates accordingly.

6.2. Understanding Growth Models and Trends in Semiconductor Manufacturing

The semiconductor industry has experienced exponential growth since the invention of the transistor in the late 1940s, following a well-documented growth model. Understanding the historical trends and growth models is essential for estimating transistor production.

Moore’s Law:

Moore’s Law, formulated by Gordon Moore in 1965, originally predicted that the number of transistors on a chip would double every year; in 1975 Moore revised the rate to a doubling every two years. This exponential growth has largely held true for decades, driving improvements in computing power, data storage, and electronics miniaturization.

Over the years, this growth has been a key predictor of transistor production, as manufacturers have consistently developed new ways to pack more transistors into smaller, more efficient chips.
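The compounding implied by a two-year doubling period is easy to underestimate. The sketch below projects transistor counts from the Intel 4004 (1971, about 2,300 transistors) under an idealized, constant two-year doubling. The function and its defaults are illustrative assumptions, and real chips have not tracked the curve exactly.

```python
# Idealized Moore's Law projection: N(t) = N0 * 2 ** ((t - t0) / T).
# Baseline: Intel 4004 (1971, about 2,300 transistors).
# The constant two-year doubling period T is an assumption.


def moores_law(year: int, base_year: int = 1971, base_count: int = 2_300,
               doubling_years: float = 2.0) -> float:
    """Transistors per chip predicted by a constant doubling period."""
    return base_count * 2 ** ((year - base_year) / doubling_years)


for year in (1971, 1981, 1991, 2001, 2011, 2021):
    print(year, f"{moores_law(year):,.0f}")
# 1971 2,300 | 1981 73,600 | 1991 2,355,200
# 2001 75,366,400 | 2011 2,411,724,800 | 2021 77,175,193,600
```

Twenty-five doublings between 1971 and 2021 turn 2,300 transistors into about 77 billion, the same order of magnitude as the "over 50 billion" quoted for high-end GPUs in Section 6.3.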

Manufacturing Trends:

The semiconductor manufacturing process has advanced with increasingly sophisticated techniques, such as photolithography, that allow for the precise creation of smaller transistors.

As the industry moves closer to the physical limits of traditional silicon-based transistor scaling, there has been a growing emphasis on alternative materials such as graphene, gallium nitride (GaN), and quantum dots. GaN is already displacing silicon in many power and radio-frequency devices, while graphene and quantum dots remain research candidates for extending scaling beyond the limits of silicon.

Advanced fabrication techniques, such as 3D stacking and multi-layer chip designs, are enabling the production of more transistors in the same physical space, offering a path forward as we approach the limits of two-dimensional scaling.

Market Growth:

The growth of consumer electronics, cloud computing, AI applications, and mobile devices has driven an ever-increasing demand for more transistors. As these technologies evolve, so does the demand for high-performance chips, leading to a corresponding increase in transistor production.

The rise of specialized chips like GPUs for machine learning and AI accelerators for deep learning has further fueled the growth in transistor production, as these devices require massive numbers of transistors to handle their parallel computing needs.

By understanding the growth models of transistor production and market trends, we can develop an effective approach to estimating total transistor production across different device categories.

6.3. Estimating Transistor Density: How Many Are In Each Device?

To estimate the total number of transistors produced, we need to know how many transistors each device contains. Physical transistor density is usually quoted in transistors per square millimeter, but for production estimates the more useful measure is transistors per unit shipped: per gigabyte for memory and per chip for logic and other devices. These figures provide the foundation for extrapolating total transistor production.

Memory Chips:

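DRAM: Because each DRAM bit is stored in a one-transistor, one-capacitor (1T1C) cell, a gigabyte of DRAM (about 8.6 billion bits) contains roughly 8.6 billion cell transistors plus peripheral circuitry, so about 10 billion transistors per gigabyte (GB) is a reasonable upper round figure. As memory chip capacities increase, so does the number of transistors needed to store and process data.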

NAND Flash: NAND flash memory used in SSDs and smartphones also has high transistor density, with estimates ranging from 2 billion to 5 billion transistors per GB, depending on how many bits each cell stores (typically two to four) and the generation of the chip.
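The per-gigabyte figures follow from how many bits each memory-cell transistor stores. The sketch below assumes one transistor per cell and binary gigabytes, and ignores peripheral circuitry, spare cells, and error-correction overhead; the dictionary and function names are illustrative.

```python
# Memory-cell transistors per gigabyte, by bits stored per cell.
# Assumptions (illustrative): one transistor per cell, 1 GB = 2**30 bytes,
# periphery, spare cells, and ECC overhead ignored.

BITS_PER_GB = 8 * 2**30

CELL_TYPES = {
    "DRAM (1T1C)": 1,
    "NAND SLC": 1,
    "NAND MLC": 2,
    "NAND TLC": 3,
    "NAND QLC": 4,
}


def cell_transistors_per_gb(bits_per_cell: int) -> float:
    """Cells (and hence cell transistors) needed to hold one gigabyte."""
    return BITS_PER_GB / bits_per_cell


for name, bits in CELL_TYPES.items():
    print(f"{name:12s} {cell_transistors_per_gb(bits) / 1e9:.2f} billion per GB")
# 8.59, 8.59, 4.29, 2.86, 2.15 billion per GB respectively
```

Multi-level NAND (two to four bits per cell) lands between about 2.1 and 4.3 billion cells per gigabyte, consistent with the 2 billion to 5 billion range, while DRAM sits near 8.6 billion before peripheral circuitry is added.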

Logic Chips:

Microprocessors: Modern desktop microprocessors, like the Intel Core i9 or AMD Ryzen 9, are estimated to contain between 10 billion and 20 billion transistors, depending on the architecture and manufacturing process. As transistors continue to shrink, more of them can be packed into the same physical space.

GPUs: Graphics processing units (GPUs), such as those used in gaming and AI acceleration, contain even more transistors per chip. A high-end GPU can contain over 50 billion transistors to handle complex parallel computations.

Other Devices:

Sensors: Simple sensors used in smartphones, wearables, and IoT devices contain far fewer transistors, from hundreds to thousands depending on their complexity, although image sensors, which need one or more transistors per pixel, can contain tens of millions.

Power Devices: Power devices used in electric vehicles or solar energy systems contain relatively few transistors: a discrete power transistor counts as a single device, and power-management chips typically contain thousands to hundreds of thousands. They still play a role in overall transistor production.

By estimating the transistor density of each device category and combining it with production figures, we can get a clearer picture of how many transistors have been produced over time.

6.4. A New Framework for Total Transistor Production: A Step-by-Step Approach

To estimate the total number of transistors ever produced, we can create a step-by-step framework that incorporates device categories, growth models, and transistor density. Here’s how it would work:

Step 1: Categorize Devices:

Break down the semiconductor devices into three major categories: memory chips, logic chips, and other devices (including sensors, power devices, etc.).

Step 2: Gather Historical Data:

Collect production data for each category, such as the total amount of memory shipped, the number of logic chips produced, and the total units for other devices. This data is typically available through market research firms and semiconductor industry reports.

Step 3: Estimate Transistor Density for Each Category:

Use estimates for transistor density for each type of device. This can vary based on technology generation, with advanced chips having higher densities than older or specialized devices.

Step 4: Apply Growth Models:

Use historical growth models, such as Moore’s Law, to estimate the annual growth rate in transistor production for each category. For example, assume 65% annual growth for memory chips over the last few decades, based on the trends in transistor density and market demand.

Step 5: Extrapolate Total Production:

Multiply the annual production estimates by the transistor density for each category to estimate the number of transistors produced each year. Sum these figures over the years to estimate the total transistor production for each category.

Step 6: Total Transistor Production:

Add the estimates from all categories (memory chips, logic chips, and other devices) to get the total number of transistors produced over time. This will give a comprehensive view of global transistor production.
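The six steps above can be sketched in a few lines of Python. This is a minimal illustration, not a calibrated model: the `Category` fields, the constant-growth assumption, and every input number are hypothetical placeholders standing in for the market data Step 2 calls for. Memory is modeled in gigabytes with a fixed transistors-per-gigabyte figure, while logic is modeled in chips whose transistor count grows each year.

```python
from dataclasses import dataclass


@dataclass
class Category:
    """One device category (Step 1). Every number fed in below is a placeholder."""
    name: str
    units_year0: float            # units shipped in year 0 (GB for memory, chips otherwise)
    unit_growth: float            # annual growth of units shipped, e.g. 0.65 for 65% (Step 4)
    transistors_per_unit0: float  # transistors per unit in year 0 (Step 3)
    per_unit_growth: float = 0.0  # annual growth of transistors per unit


def yearly_transistors(cat: Category, years: int) -> list[float]:
    """Steps 4-5: transistors produced in each year t = 0 .. years-1."""
    return [
        cat.units_year0 * (1 + cat.unit_growth) ** t
        * cat.transistors_per_unit0 * (1 + cat.per_unit_growth) ** t
        for t in range(years)
    ]


def total_transistors(categories: list[Category], years: int) -> dict[str, float]:
    """Step 5 sums each category over the years; Step 6 adds the categories."""
    totals = {c.name: sum(yearly_transistors(c, years)) for c in categories}
    totals["all categories"] = sum(totals.values())
    return totals


# Hypothetical inputs for illustration only; replace with real data (Step 2).
categories = [
    Category("memory", units_year0=1e6, unit_growth=0.65, transistors_per_unit0=8.6e9),
    Category("logic", units_year0=1e8, unit_growth=0.05, transistors_per_unit0=1e7,
             per_unit_growth=0.35),
    Category("other", units_year0=1e9, unit_growth=0.08, transistors_per_unit0=1e4),
]

for name, count in total_transistors(categories, years=30).items():
    print(f"{name:>15}: {count:.2e}")
```

Swapping in real year-by-year shipment series instead of constant growth rates changes only `yearly_transistors`; the summation in Steps 5 and 6 stays the same.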

By following these steps, we can develop a defensible order-of-magnitude estimate of total transistor production, helping us understand the scale of the semiconductor industry and its impact on technology and society.
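The 65% growth figure assumed in Step 4 has a consequence worth making explicit, because it determines which years of historical data matter most. Assuming, as an idealization, that a category’s annual transistor production P_t grows at a constant rate g, the cumulative total S_T through year T and the share contributed by the most recent k years are:

$$
P_t = P_0 (1+g)^t, \qquad S_T = \sum_{t=0}^{T} P_t = P_0 \, \frac{(1+g)^{T+1} - 1}{g}
$$

$$
\frac{S_T - S_{T-k}}{S_T} = \frac{(1+g)^{T+1} - (1+g)^{T+1-k}}{(1+g)^{T+1} - 1} \;\longrightarrow\; 1 - (1+g)^{-k} \quad (T \to \infty)
$$

With g = 0.65, the most recent year (k = 1) accounts for about 1 - 1/1.65 ≈ 39% of the cumulative total, and the last five years for about 1 - 1.65^(-5) ≈ 92%. Under that assumption, the estimate is dominated by recent production figures, so errors in early-decade data matter far less than errors in the latest years.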

Beyond Moore’s Law: The Future of Computing and AI

7.1. The Limits of Current Hardware and Transistor Scaling

For decades, Moore’s Law has been the driving force behind the exponential growth of computing power. The prediction that transistor density would double approximately every two years has led to ever-smaller, faster, and more powerful chips. This has fueled the rapid development of personal computers, smartphones, cloud computing, and artificial intelligence (AI). However, as we approach the physical limits of transistor scaling, the pace of progress is slowing, and the future of computing is increasingly uncertain.

The primary limitation facing traditional silicon-based transistors is size. At leading-edge process nodes, currently marketed as 3 nm to 5 nm (labels that no longer correspond to any single physical dimension of the transistor), quantum effects such as electron tunneling appear: electrons can pass through barriers so thin that they no longer block current reliably, disrupting the normal functioning of a transistor. These effects increase leakage current, reduce efficiency, and make further miniaturization both challenging and expensive.
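Why tunneling becomes a problem so abruptly can be seen from the standard textbook estimate for a rectangular barrier, T ≈ exp(-2κd) with κ = √(2m(V - E))/ħ: the probability falls exponentially with barrier thickness d. The sketch below evaluates it with illustrative values (a 1 eV effective barrier and the free-electron mass), which are assumptions, not parameters of any real transistor.

```python
import math

HBAR = 1.054_571_817e-34   # reduced Planck constant, J*s
M_E = 9.109_383_7015e-31   # free-electron mass, kg
EV = 1.602_176_634e-19     # joules per electronvolt


def tunneling_probability(thickness_nm: float, barrier_ev: float,
                          mass_ratio: float = 1.0) -> float:
    """Rough estimate T ~ exp(-2 * kappa * d) for a rectangular barrier.

    kappa = sqrt(2 * m * (V - E)) / hbar, with (V - E) given by barrier_ev.
    Illustrative only: real gate stacks need effective masses and full
    device-level leakage models.
    """
    kappa = math.sqrt(2 * mass_ratio * M_E * barrier_ev * EV) / HBAR
    return math.exp(-2 * kappa * thickness_nm * 1e-9)


for d in (3.0, 2.0, 1.0):
    print(f"{d:.1f} nm barrier: T ~ {tunneling_probability(d, barrier_ev=1.0):.1e}")
```

With these assumed values, each nanometer removed from the barrier raises the tunneling probability by a factor of roughly 28,000, which is why leakage grows so sharply as insulating layers approach atomic thickness.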

Additionally, packing more transistors into the same area raises power density. Since the mid-2000s, supply voltages have not fallen in step with transistor size (the end of so-called Dennard scaling), so denser chips generate more heat per square millimeter and require sophisticated cooling solutions that can be complex and costly. The energy-efficiency gains that once accompanied each new generation have slowed markedly. These challenges indicate that the traditional method of improving processor performance, simply shrinking transistors, can no longer sustain the rate of progress we’ve seen in the past.

As transistor scaling hits its limits, the semiconductor industry must explore alternative technologies to continue meeting the growing demand for computational power, especially as AI and machine learning models demand more from hardware.

7.2. Alternative Technologies: Quantum Computing, Neuromorphic Chips, and Beyond

To push the boundaries of computing beyond the constraints of Moore’s Law, researchers and engineers are turning to alternative technologies that offer different approaches to processing information. Some of the most promising technologies include quantum computing and neuromorphic chips.

Quantum Computing:

Quantum computing represents a radical departure from classical computing. Instead of using binary bits to represent information (0s and 1s), quantum computers use quantum bits, or qubits, which can exist in a superposition of 0 and 1 thanks to the principles of quantum mechanics.
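The idea of qubits occupying "multiple states simultaneously" can be made more precise: an n-qubit register is described by 2^n complex amplitudes. The sketch below, in plain Python with no quantum SDK (all function names are illustrative), builds the state produced by applying a Hadamard gate to each of n qubits and estimates how much memory a classical computer would need to store such a state at 16 bytes per double-precision complex amplitude.

```python
import math


def uniform_superposition(n_qubits: int) -> list[complex]:
    """State after a Hadamard gate on each of n qubits starting in |0...0>.

    Every one of the 2**n basis states gets amplitude 1/sqrt(2**n).
    """
    dim = 2 ** n_qubits
    amp = 1 / math.sqrt(dim)
    return [complex(amp, 0.0)] * dim


def simulation_memory_bytes(n_qubits: int, bytes_per_amplitude: int = 16) -> int:
    """Memory to store a full state vector (complex128 = 16 bytes per amplitude)."""
    return (2 ** n_qubits) * bytes_per_amplitude


state = uniform_superposition(3)
print(len(state), round(sum(abs(a) ** 2 for a in state), 12))  # 8 1.0
for n in (30, 40, 50):
    print(n, "qubits:", f"{simulation_memory_bytes(n) / 2**30:,.0f} GiB")
# 16 GiB, 16,384 GiB, 16,777,216 GiB (16 PiB)
```

This exponential state space is why quantum computers are hard to simulate classically. It does not mean a quantum computer can read out all 2^n values: measuring the register returns a single basis state.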

Quantum computers have the potential to solve certain problems much faster than classical computers, particularly in cryptography, optimization, and the simulation of physical systems. For example, they could simulate molecular structures for drug discovery; whether they can also accelerate the training of AI models is still an open research question.

While quantum computing holds immense promise, the technology is still in its early stages. Building stable qubits and scaling quantum processors to handle complex, real-world problems remains a significant challenge. However, companies like IBM, Google, and Microsoft are making significant strides, and quantum supremacy (the point at which a quantum computer outperforms classical computers on a specific task) has been claimed in controlled experiments, most prominently by Google in 2019.

Neuromorphic Computing:

Neuromorphic computing is inspired by the structure and function of the human brain. It involves designing hardware that mimics the behavior of neurons and synapses, enabling systems to process information more like biological systems do.

Neuromorphic chips are designed to be energy-efficient and capable of parallel processing, similar to how the brain handles information. These chips are particularly well-suited for AI applications, as they can process large amounts of data simultaneously and learn from experience in a more brain-like fashion.
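The brain-like processing these chips target is usually built from spiking neurons. A common textbook abstraction is the leaky integrate-and-fire (LIF) neuron, and chips such as Loihi implement variants of it in hardware. The sketch below is a software illustration of the basic model only, with arbitrary dimensionless parameter values; it is not the neuron model of any specific chip, and the function name is illustrative.

```python
def simulate_lif(input_current: float, steps: int = 1000, dt: float = 1e-3,
                 tau: float = 20e-3, resistance: float = 1.0,
                 v_rest: float = 0.0, v_threshold: float = 1.0,
                 v_reset: float = 0.0) -> list[int]:
    """Leaky integrate-and-fire neuron with forward-Euler integration.

    dV/dt = (-(V - v_rest) + R * I) / tau. When V reaches v_threshold the
    neuron spikes (the time step is recorded) and V is reset.
    Returns the indices of the time steps at which spikes occurred.
    """
    v = v_rest
    spikes = []
    for step in range(steps):
        v += dt * (-(v - v_rest) + resistance * input_current) / tau
        if v >= v_threshold:
            spikes.append(step)
            v = v_reset
    return spikes


print(len(simulate_lif(0.9)))  # 0: steady state R*I = 0.9 never reaches threshold
print(len(simulate_lif(1.5)))  # 45: one spike every 22 steps
```

Information is carried by the timing and rate of spikes rather than by clocked numeric values, and a neuron that is not spiking does almost no work, which is one source of the energy efficiency claimed for neuromorphic hardware.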

Intel, with its Loihi chip, and IBM, with TrueNorth, have developed neuromorphic processors that report large energy-efficiency gains on suitable workloads, and Loihi also supports on-chip learning. These chips can be used for tasks like pattern recognition, robotics, and autonomous systems, representing an important step forward for AI hardware.

Photonic Computing:

Another alternative technology is photonic computing, which uses light instead of electrons to perform computations. Photonic processors can theoretically provide faster data transmission and lower power consumption than conventional electronic circuits.

Researchers are exploring how to integrate photonics with existing silicon technologies to create hybrid processors that use light for communication within the chip, enabling faster and more energy-efficient systems. This could be particularly useful in data centers and high-performance computing environments.

7.3. AI’s Dependency on Hardware: How the Next Era of Computing Will Shape AGI

As artificial intelligence continues to evolve, it is becoming increasingly clear that its progress is inextricably linked to the hardware it runs on. Deep learning models, which are built from large neural networks, require massive computational resources for training and inference, so advances in AI research are tied directly to hardware capabilities.

In the current era, GPUs and specialized hardware like TPUs (Tensor Processing Units) have become critical components in accelerating AI workloads. These processors are designed to handle the immense number of parallel calculations required for tasks like training large-scale neural networks and processing vast datasets.
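Much of the parallel work in neural-network training and inference is matrix multiplication. The pure-Python sketch below (function name illustrative) counts the multiply-accumulate (MAC) operations in a naive matrix product and shows why the work parallelizes well: each output element is an independent dot product.

```python
def matmul(a: list[list[float]], b: list[list[float]]) -> tuple[list[list[float]], int]:
    """Naive matrix multiply that also counts multiply-accumulate (MAC) operations.

    Each output c[i][j] depends only on row i of `a` and column j of `b`, so all
    m*n outputs could be computed in parallel, which GPUs and TPUs exploit.
    """
    m, k = len(a), len(a[0])
    k2, n = len(b), len(b[0])
    if k != k2:
        raise ValueError("inner dimensions must match")
    macs = 0
    c = [[0.0] * n for _ in range(m)]
    for i in range(m):
        for j in range(n):          # every (i, j) pair is an independent task
            acc = 0.0
            for p in range(k):
                acc += a[i][p] * b[p][j]
                macs += 1
            c[i][j] = acc
    return c, macs


c, macs = matmul([[1.0, 2.0], [3.0, 4.0]], [[5.0, 6.0], [7.0, 8.0]])
print(c, macs)  # [[19.0, 22.0], [43.0, 50.0]] 8
```

Multiplying an m×k matrix by a k×n matrix takes m·n·k MACs spread over m·n independent outputs. With hypothetical sizes m = n = k = 4096, that is 4096³ ≈ 6.9 × 10^10 MACs for a single product, which is why hardware with thousands of parallel arithmetic units pays off.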

However, the next era of computing is likely to require more than incremental improvements in traditional transistor-based hardware. For Artificial General Intelligence (AGI), meaning AI that can perform any intellectual task that a human being can, the computing power needed is widely expected to exceed what current hardware architectures can deliver.

The shift toward neuromorphic, quantum, and photonic computing could allow AI systems to handle tasks more akin to human-like reasoning and cognitive flexibility. These technologies may enable AI not only to process large volumes of data more efficiently but also to perform tasks involving abstract thinking, problem-solving, and adaptation, all of which are crucial for AGI.

As hardware advances, AI will become increasingly capable of mimicking general intelligence, and these next-generation computing technologies may well provide the foundation for the arrival of AGI.

7.4. The Race Toward Artificial General Intelligence: What Role Will Transistors Play?

The quest for Artificial General Intelligence (AGI) is one of the most ambitious goals of AI research, and hardware — particularly transistors — will play a key role in making this vision a reality. AGI requires a system that is capable of performing a wide range of cognitive tasks that humans can do, such as learning, reasoning, problem-solving, and creativity. To achieve AGI, AI systems must be capable of processing massive amounts of data, adapting to new situations, and reasoning about the world in ways that go beyond narrow, task-specific capabilities.

While current transistor-based computing systems are powerful enough to handle narrow AI tasks, they are not yet capable of scaling to the complexity needed for AGI. The next phase of computing will need to move beyond the limits of traditional silicon-based transistors and explore alternative computing architectures that can simulate the cognitive flexibility of the human brain.

In this context, transistors will still play a foundational role — but as we reach the physical limits of conventional transistor scaling, the future of AGI will depend on emerging technologies like neuromorphic chips and quantum computers. These alternative computing paradigms will complement traditional transistor-based systems by enabling more efficient, brain-like processing capabilities.

While transistor scaling is likely to continue for the next several years, the long-term future of AGI will rely on hardware that can handle the complexity and scale of human-level intelligence. Quantum computing could be a key player: for specific problems, such as simulating quantum systems, it offers the potential for exponential speedups, although it is not expected to accelerate the processing of large datasets in general. Meanwhile, neuromorphic chips could mimic the structure of the human brain, offering the adaptive learning capabilities that are crucial for AGI.

Ultimately, transistors will remain at the heart of computing for the foreseeable future, but the next breakthrough in computing hardware will likely come from the fusion of traditional transistor-based systems with quantum, neuromorphic, and photonic technologies.

The Road to AGI: Hardware and Beyond

8.1. What Is AGI and Why Does It Matter?

Artificial General Intelligence (AGI) refers to a type of artificial intelligence that is capable of performing any intellectual task that a human being can. Unlike narrow AI, which excels at specific tasks like playing chess, recognizing faces, or translating languages, AGI would have the cognitive flexibility to learn, reason, and adapt across a wide range of domains.

The importance of AGI lies in its potential to revolutionize virtually every aspect of society, from healthcare and education to economics and governance. With AGI, machines could potentially make complex decisions, understand nuanced contexts, and solve problems that are currently beyond the reach of even the most advanced AI systems.

AGI also promises to break the boundaries between human cognition and machine processing, allowing for a deeper symbiosis between humans and machines. With AGI, we could accelerate scientific discovery, optimize industries, and create personalized solutions for global challenges like climate change, health crises, and economic inequality.

However, achieving AGI is a monumental challenge that requires not just breakthroughs in software and algorithms, but also a leap forward in hardware technology. As we move closer to AGI, understanding the computational requirements and how hardware, especially transistors, fits into the equation is crucial.

8.2. Transistors as the Backbone of AGI Hardware

Transistors have been the backbone of computing hardware for more than half a century, and they will continue to play a pivotal role in the development of AGI. At their core, transistors are electronic switches that process and store data in digital form. Their ability to turn on and off (representing 1s and 0s) allows them to perform the logic operations that are fundamental to all modern computing systems, including AI.
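The switch-level view described here can be made concrete. The sketch below models a static CMOS NAND gate, which uses four transistors, as ideal on/off switches, then builds NOT, AND, XOR, and a one-bit half adder from NAND alone (NAND is functionally complete). It is a logical model only: it ignores voltages, timing, and leakage, and the function names are illustrative.

```python
def nand(a: int, b: int) -> int:
    """Static CMOS NAND gate (four transistors) modeled as ideal switches.

    Pull-down: two nMOS transistors in series connect the output to ground only
    when both inputs are 1. Pull-up: two pMOS transistors in parallel connect
    the output to the supply when either input is 0.
    """
    pull_down = a == 1 and b == 1  # series nMOS path conducts
    pull_up = a == 0 or b == 0     # at least one parallel pMOS conducts
    # In static CMOS exactly one network conducts, so the output never floats.
    assert pull_up != pull_down
    return 1 if pull_up else 0


def not_gate(a: int) -> int:
    return nand(a, a)


def and_gate(a: int, b: int) -> int:
    return not_gate(nand(a, b))


def xor_gate(a: int, b: int) -> int:
    # Standard four-NAND construction of XOR.
    n = nand(a, b)
    return nand(nand(a, n), nand(b, n))


def half_adder(a: int, b: int) -> tuple[int, int]:
    """Add two one-bit numbers; returns (sum, carry)."""
    return xor_gate(a, b), and_gate(a, b)


for a in (0, 1):
    for b in (0, 1):
        print(a, b, "->", half_adder(a, b))
# (0, 0), (1, 0), (1, 0), (0, 1)
```

Chaining such adders, with a carry passed from one bit to the next, is how transistor switches ultimately perform binary arithmetic.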

For AGI to become a reality, the hardware that supports it must handle massive amounts of data, make rapid computations, and adapt in real time. While AGI systems will require a combination of hardware innovations, the basic principle of transistor-based circuits will remain central. Here’s why:

Parallel Processing:

AGI will require the ability to perform numerous tasks simultaneously — from problem-solving and learning to understanding complex, real-world data. Transistor-based systems, especially GPUs (Graphics Processing Units) and TPUs (Tensor Processing Units), excel at parallel processing, which is essential for training and running deep learning models. These chips, designed to handle thousands of simultaneous computations, are already at the heart of AI systems, and they will continue to be integral in AGI development.

Energy Efficiency:

AGI systems will need to process vast quantities of data without consuming excessive amounts of energy. While quantum computers and neuromorphic chips hold promise for improving energy efficiency in certain tasks, silicon-based transistors remain the most mature and practical choice for high-performance computing today. As transistor density continues to increase, more computational power can be packed into smaller, more energy-efficient chips.

Moore’s Law and Scalability:

Moore’s Law, the observation that the number of transistors on a chip doubles approximately every two years, has been a driving force in the exponential increase in computational power. While Moore’s Law is slowing down as transistors approach their physical limits, advances in semiconductor manufacturing, such as 3D stacking and chiplets, continue to push the boundaries of how many transistors can be packed into a given space, enabling AGI systems to scale in terms of computational power.

Stability and Reliability:

As we approach the goal of AGI, the reliability and stability of hardware become crucial. Transistors, especially those used in integrated circuits (ICs), are well-established, time-tested components. Their robustness and dependability make them an ideal foundation upon which to build the next generation of computing systems required for AGI.

Despite the exciting developments in alternative computing technologies, transistors will likely remain at the core of AGI hardware for the foreseeable future, with specialized chips enhancing their capabilities.

8.3. Scaling the Brains of Machines: The Computational Requirements for AGI

To build AGI, the hardware must meet several critical computational requirements:

Massive Parallelism:

AGI requires the ability to process thousands, if not millions, of data points at once, which means hardware must perform computations in parallel, much as the human brain processes information. The brain uses around 86 billion neurons connected by an estimated 100 trillion or so synapses, and an AGI system would plausibly need to handle comparable complexity. GPUs, TPUs, and neuromorphic chips, which perform parallel operations at scale, are already used to run artificial neural networks, a key component of AI.

Real-Time Processing:

AGI systems will need to make decisions and adapt to new information in real time. This requires not only powerful computational hardware but also ultra-low-latency connections between different hardware components. Transistor-based systems excel at processing data quickly, and optical interconnects (using light instead of electrical signals for communication between chips) are being explored to reduce latency even further.

Adaptive Learning:

One of the hallmarks of AGI is its ability to learn autonomously from new experiences, adapt to different environments, and make decisions without human intervention. This requires the hardware to handle both short-term memory (for immediate decision-making) and long-term memory (for accumulating knowledge over time). Modern memory chips (e.g., DRAM and NAND flash) are already capable of storing vast amounts of data, but AGI will demand even more storage capacity and faster access speeds to process and retain the massive volumes of data it encounters.

Generalization:

Unlike narrow AI, which excels at specific tasks, AGI must be capable of generalizing across different domains. This requires hardware capable of running multi-faceted algorithms that can process various types of input, from text and images to sensory data and real-time environmental feedback.

In summary, AGI requires a massive leap in computational power, and while transistors will remain crucial in this journey, hardware architectures that facilitate parallel processing, fast data transfer, and adaptive learning will be essential.

8.4. What’s Next for Transistors in the Era of AGI?

While transistors have served as the foundation of computing for over half a century, their role in the development of AGI is evolving. As transistor scaling begins to reach its limits, the focus is shifting to new materials, advanced architectures, and alternative computing paradigms to power the next generation of computing systems.

Beyond Silicon:

As traditional silicon transistors approach their physical limits, there is growing interest in alternative materials such as graphene, gallium nitride (GaN), and carbon nanotubes. These materials have the potential to offer better performance, lower power consumption, and greater scalability than silicon, providing the foundation for the next generation of transistors used in AGI hardware.

3D Transistor Architectures:

3D stacking allows for multiple layers of transistor circuits to be stacked on top of each other, significantly increasing the transistor count in a single chip without the need for shrinking the individual transistors further. This approach could provide the computational density required to handle the complex workloads of AGI systems.

Quantum Computing:

Quantum computing offers a radical departure from traditional transistor-based systems. By leveraging the principles of quantum mechanics, quantum computers use qubits that can represent multiple states simultaneously, allowing for massively parallel computations. Quantum computing could potentially accelerate certain tasks crucial for AGI, such as optimization, machine learning, and decision-making. However, the technology is still in its infancy and may complement, rather than replace, traditional transistor-based computing for AGI development.

Neuromorphic and Brain-Inspired Computing:

Neuromorphic computing takes inspiration from the structure and function of the human brain, using artificial neurons and synapses to mimic aspects of how biological neural networks process information. Neuromorphic chips could provide the adaptive learning capabilities needed for AGI while offering a more energy-efficient, brain-like approach to computation. Most current neuromorphic chips are themselves built from conventional silicon transistors. As research progresses, they are therefore likely to work alongside conventional processors rather than replace them, together providing the power and flexibility needed for AGI.
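Many neuromorphic designs build on spiking neuron models. The following is a minimal sketch of the textbook leaky integrate-and-fire (LIF) model. In this model, a neuron's membrane potential leaks toward a resting value and integrates incoming current. When the potential crosses a threshold, the neuron emits a spike and resets. The function name `simulate_lif` and all parameter values are illustrative assumptions and are not taken from any particular chip.

```python
def simulate_lif(input_current, dt=1.0, tau=20.0,
                 v_rest=0.0, v_threshold=1.0, v_reset=0.0):
    """Simulate one leaky integrate-and-fire neuron.

    input_current: sequence of input values, one per time step (arbitrary units).
    Returns the list of time steps at which the neuron spiked.
    """
    v = v_rest
    spikes = []
    for t, i_in in enumerate(input_current):
        # Euler step of dv/dt = (-(v - v_rest) + i_in) / tau:
        # the potential leaks toward v_rest while integrating the input.
        v += dt * (-(v - v_rest) + i_in) / tau
        if v >= v_threshold:
            spikes.append(t)  # emit a spike event...
            v = v_reset       # ...and reset the membrane potential
    return spikes


silent = simulate_lif([0.5] * 200)  # potential settles below threshold: no spikes
weak = simulate_lif([1.5] * 200)    # fires periodically
strong = simulate_lif([3.0] * 200)  # fires more often
```

With a constant input of 0.5, the potential settles below the threshold and the neuron stays silent. With 1.5, it fires at regular intervals, and stronger input makes it fire more often. The output is a sparse train of spike events rather than a continuous value. Hardware that does work only when spikes occur is one commonly cited reason neuromorphic chips are described as energy-efficient.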

The Ethical and Societal Implications of AI and AGI

9.1. The Role of Technology in Shaping Society

Throughout history, technology has played a central role in shaping human society. From the invention of the wheel to the advent of the printing press, each breakthrough has altered the way we live, communicate, and interact with one another. In recent decades, artificial intelligence (AI) has begun to transform the fabric of society at an unprecedented pace. Its more advanced and still hypothetical counterpart, artificial general intelligence (AGI), could extend that transformation much further. These technologies promise to revolutionize industries, healthcare, transportation, education, and nearly every other aspect of modern life.

AI systems, powered by transistors and advanced computing hardware, have already begun influencing how we interact with technology daily, from smartphones and voice assistants to predictive analytics and automated customer service. As AI continues to evolve, it will increasingly affect decision-making, personal autonomy, and social structures.

AGI, which has the potential to replicate human cognitive abilities across a broad spectrum of tasks, promises to reshape society even more fundamentally. Its impact on economic systems, social structures, and human relationships will depend on how these technologies are developed, regulated, and integrated into society. As AI and AGI systems become more autonomous and capable, they will challenge traditional notions of work, governance, and human rights. These technologies will not only change how we interact with the world, but also raise profound questions about who holds power, how knowledge is controlled, and what it means to be human.

9.2. Ethical Considerations in AI Development

As AI and AGI technologies become more embedded in society, they raise significant ethical questions that must be addressed. Key concerns include:

Bias and Fairness:

AI systems are often trained on large datasets that reflect historical inequalities and biases in society. If these datasets contain biased information, the AI models built on them can perpetuate those biases, leading to unfair outcomes. For example, AI used in hiring, criminal justice, or loan approval systems can inadvertently discriminate against marginalized groups if the underlying data is biased.

Ensuring that AI is fair and unbiased requires careful consideration of the data used to train AI systems, as well as ongoing monitoring to identify and correct any unintended discriminatory effects.
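One common way to monitor a deployed system for unintended discriminatory effects is to compare outcome rates across groups. The sketch below computes each group's selection (approval) rate and the ratio of the lowest rate to the highest. It flags the system for review when that ratio falls below 0.8, the "four-fifths" rule of thumb used in US employment-selection guidelines. The function names, the `(group, approved)` data format, and the sample decisions are hypothetical. A single ratio is only one of many fairness measures and is not a complete audit.

```python
from collections import defaultdict


def selection_rates(decisions):
    """decisions: iterable of (group, approved) pairs -> {group: approval rate}."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        if ok:
            approved[group] += 1
    return {g: approved[g] / totals[g] for g in totals}


def disparate_impact_ratio(decisions):
    """Lowest group selection rate divided by the highest group selection rate."""
    rates = selection_rates(decisions)
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no one was selected, so rates do not differ between groups
    return min(rates.values()) / highest


def flag_for_review(decisions, threshold=0.8):
    """True if the ratio falls below the (assumed) four-fifths threshold."""
    return disparate_impact_ratio(decisions) < threshold


# Hypothetical loan decisions: (group, approved?)
decisions = ([("A", True)] * 8 + [("A", False)] * 2
             + [("B", True)] * 4 + [("B", False)] * 6)

print(selection_rates(decisions))         # {'A': 0.8, 'B': 0.4}
print(disparate_impact_ratio(decisions))  # 0.5
print(flag_for_review(decisions))         # True
```

In practice, a check like this would run repeatedly on fresh decisions. A low ratio does not prove discrimination. It signals that the data and the model deserve the closer human scrutiny described above.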

Transparency and Accountability:

As AI systems are increasingly relied upon to make critical decisions, from medical diagnoses to legal judgments, it becomes essential that these systems are transparent and accountable. Decisions made by AI algorithms should be explainable to the people affected by them, and there should be clear accountability when AI systems make mistakes or cause harm.

The black-box nature of some AI models, where decisions are made without human-understandable explanations, raises significant concerns about trust and liability. As AI moves closer to AGI, ensuring that these systems can be understood and scrutinized will be crucial for preventing abuses of power and ensuring public confidence in AI.

Autonomy and Control:

One of the greatest ethical challenges in AGI development is the question of control. If AGI becomes capable of making its own decisions and taking actions independently of human oversight, who is responsible for its actions? Ensuring that AGI systems are developed in a way that maintains human control and aligns with human values will be crucial for avoiding harmful consequences.

Ethical discussions around autonomy also involve the question of AI rights and whether, as AI becomes more advanced, it should be granted some form of legal or ethical status. These discussions will require careful consideration of the potential risks and benefits of highly autonomous AI.

9.3. The Future of Work: Automation, Transistors, and Human Labor

One of the most profound impacts of AI and AGI will be on the future of work. AI-driven automation is already transforming industries by handing repetitive, manual tasks to machines that can perform them more efficiently and accurately. As AGI systems approach the ability to perform human-like cognitive tasks, they could eventually replace jobs that require not just physical labor but intellectual work as well.

Job Displacement:

As AI systems take over more tasks in manufacturing, service industries, and even creative fields, there is growing concern about widespread job displacement. Transistor-powered AI systems can already perform or assist with tasks once considered inherently human, such as writing reports, diagnosing medical conditions, or managing investment portfolios.

The question of how society will adapt to this disruption in the labor market is crucial. Will there be a shift towards universal basic income or other social safety nets? How will workers transition from jobs that are replaced by machines to new roles created by the evolving economy?

The Changing Nature of Work:

While automation will undoubtedly displace some jobs, it is also likely to create new opportunities, especially in sectors that involve AI development, robotics, data science, and human-machine collaboration. AI systems can augment human capabilities, leading to a shift in the nature of work rather than its complete elimination.

However, these new jobs will require different skills and education, emphasizing the need for retraining programs to help workers transition into new roles. The challenge will be ensuring that workers are empowered to thrive in an increasingly automated world and that the benefits of AI-driven productivity are shared across society.

9.4. Preparing for AGI: Social, Political, and Economic Impact

The development of AGI will likely have disruptive consequences across multiple dimensions of society, with far-reaching implications for social structures, politics, and the economy.

Social Impact:

AGI has the potential to redefine human relationships and human identity. As AGI systems become more autonomous, there are ethical questions about the role of humans in a world where machines can perform almost all cognitive tasks. Will AGI become an extension of human capabilities, or will it challenge the fundamental nature of what it means to be human?

AGI could also exacerbate social inequalities if access to AGI-driven benefits is restricted to wealthy individuals or countries. Ensuring that AGI technologies are distributed equitably will be essential to prevent further social stratification.

Political Impact:

AGI will likely reshape the political landscape in profound ways. Governments may find themselves facing new challenges in terms of regulating and controlling AGI development. Should AGI systems be subject to the same laws and ethics as humans? Who controls AGI systems, and how can their actions be monitored and held accountable?

Additionally, AGI has the potential to change the balance of global power. Countries or companies that control advanced AGI technologies could gain enormous political and economic power, creating geopolitical tensions and potentially sparking AI arms races.

Economic Impact:

Economically, AGI could be a game-changer. It could lead to massive productivity increases and potentially solve complex problems in areas like climate change, medicine, and energy production. However, it could also cause economic dislocation as traditional jobs are automated and industries are disrupted.

The redistribution of wealth will be a major issue. If AGI can generate massive economic value, how will that value be shared? Will society embrace new economic models to ensure that the benefits of AGI are distributed fairly, or will there be rising tension as the divide between the wealthy and the underprivileged grows?