AI-Driven Simulated Patient Interactions for Medical & Healthcare Training

1. Executive Summary

Large Language Models (LLMs) such as ChatGPT are shifting from optional study companions to core infrastructure in medical education. Eight recent sources, a mix of peer-reviewed studies and educator-facing analyses, point to the same conclusion: virtual standardized patients powered by ChatGPT can accelerate communication, history-taking, decision-making, and empathy training, while reducing reliance on expensive physical simulations.

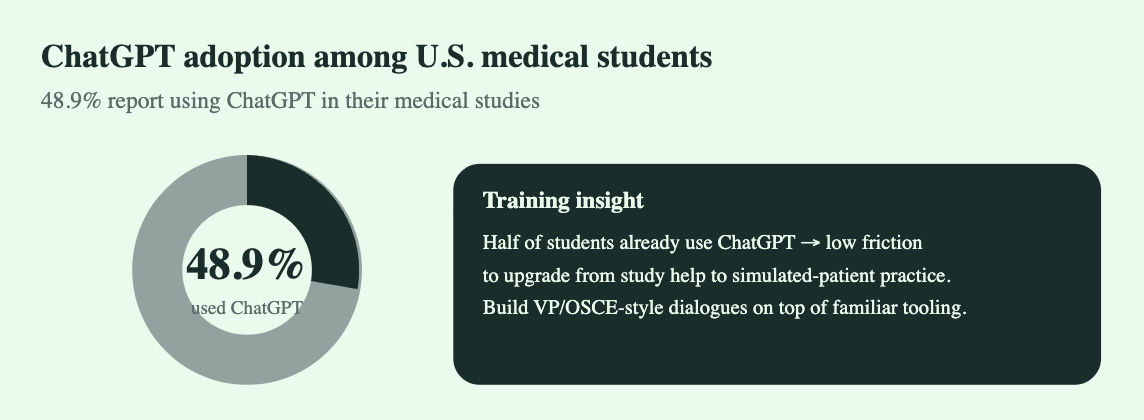

48.9% of U.S. medical students surveyed said they have used ChatGPT in their medical studies

In a survey of 415 students across 28 North American med schools, 52% reported using ChatGPT for medical school coursework

This whitepaper distills what the research shows and what it does not, where AI-driven simulated patients perform well and where they fail, and how institutions can implement them safely.

2. Background: Why Healthcare Training Is Shifting to LLMs

Traditional simulation training depends on:

Human standardized patients (expensive, inconsistent)

Faculty time (limited)

Physical simulation centers (high capital costs)

Modern constraints — rising class sizes, shrinking budgets, increasing competency expectations — make scaling traditional patient simulations unsustainable.

LLMs, when configured correctly, can:

Role-play realistic patient personas

Provide branching interviews

Respond empathetically in text or voice

Offer immediate feedback on technique

Adapt difficulty to student level

Simulate rare or complex conditions on demand

This creates effectively unlimited, low-cost, high-fidelity practice loops.

3. Literature Review & Evidence Synthesis

3.1 AI as a Virtual Standardized Patient (VSP)

Source: “Clinical Simulation with ChatGPT: A Revolution in Medical Education?”

A GPT-4-based system simulated an acute coronary syndrome case.

Key finding: Medical students rated the interaction highly realistic, particularly the model’s emotional responses and adaptive questioning.

Implication: ChatGPT can replicate many of the teaching benefits that traditionally require human actors.

3.2 Virtual Bedside Teaching Across Regions

Source: “Using ChatGPT for Medical Education: The Technical Perspective” (HKU/NUS)

Explored ChatGPT as the backend for virtual bedside encounters.

Key finding: Students reported improved confidence in history-taking and clinical reasoning.

Implication: LLM-based patients appear to transfer across culturally diverse teaching contexts and can make bedside teaching more accessible.

3.3 Global Adoption & Attitudes

Source: “Current Status of ChatGPT Use in Medical Education”

Comprehensive review of 2023–2025 research.

Key finding: Students overwhelmingly view ChatGPT as beneficial for learning, with simulation activities ranked among top use cases.

Implication: Institutional adoption is accelerating due to bottom-up student pressure and top-down cost efficiency.

3.4 LLM Standardized Patients for Intern Physicians

Source: “AI-powered Standardised Patients: ChatGPT-4o’s Impact on Interns”

Evaluated performance on clinical case management tasks.

Key finding: Interns using the AI VSP demonstrated improved diagnostic reasoning and structured clinical thinking.

Implication: VSPs are not only for early training; they can also strengthen higher-level clinical judgment.

3.5 Mixed Reality + ChatGPT Virtual Patients

Source: JMIR Research “Integrating GPT-Based AI into Virtual Patients (MFR Training)”

Used GPT as the conversational engine inside a mixed-reality trauma scenario.

Key finding: Realistic patient communication significantly increased scenario immersion.

Implication: LLMs are becoming foundational for next-gen XR clinical simulations.

3.6 Empathy & Communication Training

Source: Springer “ChatGPT as a Virtual Patient: Empathic Expressions During History Taking”

Based on 659 interactions.

Key finding: ChatGPT demonstrated high consistency in empathetic, patient-centered dialogue. Students found it useful for practicing rapport and autonomy-supportive communication.

Implication: LLMs can make empathy-training quality more consistent across a cohort without requiring trained actors.

3.7 Risks & Limitations Review

Source: “ChatGPT in Healthcare Education: A Double-Edged Sword”

Key finding: Major challenges include hallucinations, over-trust, lack of source transparency, variable clinical accuracy, and ethical concerns.

Implication: Without strong guardrails, VSP deployments can mislead or mis-train students.

3.8 Educator Adoption Perspective

Source: Lecturio “Using ChatGPT for Virtual Patients & Cases”

Key finding: Educators adopt LLM virtual patients primarily for convenience and consistency, particularly for case creation and OSCE prep.

Implication: Faculty see LLMs as extensions of traditional case-writing, not replacements.

4. Key Benefits Identified Across All Studies

4.1 Skill Acceleration

Faster improvement in history-taking

Better structure in clinical interviews

Higher confidence in differential diagnosis

4.2 Repeatability & Accessibility

Effectively unlimited practice cycles

On-demand cases (common or rare)

Suitable for early and late-stage learners

4.3 Emotional Realism

Studies consistently report:

Natural empathetic expressions

Realistic patient personas

Adaptable tone based on context

4.4 Cost Efficiency

Traditional simulation centers can cost millions annually to run.

LLM-driven simulation reduces:

Faculty load

Standardized patient expenses

Infrastructure requirements

4.5 Scalability

Supports:

Massive cohorts

Remote learning

Cross-university collaboration

5. Risks & Ethical Considerations

5.1 Clinical Accuracy & Hallucinations

LLMs can produce incorrect medical facts.

Mitigation: Clinical guardrails, curated prompts, retrieval systems.
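Retrieval-based grounding can be sketched as follows: before the model answers, look up the faculty-curated case facts most relevant to the student's question and instruct the model to answer only from them. This is a minimal sketch under stated assumptions: `CaseFact`, `retrieve_facts`, and `grounded_prompt` are hypothetical names, plain word overlap stands in for a production retrieval system (such as embedding search), and the resulting prompt would be passed to whichever model the institution uses. No model API is called here.

```python
import re
from dataclasses import dataclass

# Small, illustrative stopword list so filler words do not count as matches.
STOPWORDS = {"a", "an", "and", "any", "did", "do", "does", "for", "have", "i",
             "in", "is", "it", "my", "of", "on", "or", "the", "to", "was",
             "what", "when", "you", "your"}

@dataclass(frozen=True)
class CaseFact:
    topic: str  # short label, e.g. "chest pain onset" (hypothetical case content)
    text: str   # faculty-approved wording the patient may disclose

def tokenize(text: str) -> set[str]:
    """Lowercase word tokens minus stopwords (a deliberately simple lexical tokenizer)."""
    return set(re.findall(r"[a-z]+", text.lower())) - STOPWORDS

def retrieve_facts(question: str, facts: list[CaseFact], k: int = 2) -> list[CaseFact]:
    """Return up to k facts ranked by word overlap with the question."""
    q = tokenize(question)
    scored = [(len(q & tokenize(f.topic + " " + f.text)), f) for f in facts]
    scored = [pair for pair in scored if pair[0] > 0]      # drop facts with no overlap
    scored.sort(key=lambda pair: pair[0], reverse=True)    # stable: ties keep case order
    return [f for _, f in scored[:k]]

def grounded_prompt(question: str, facts: list[CaseFact]) -> str:
    """Build a prompt that restricts the patient model to the retrieved, approved facts."""
    retrieved = retrieve_facts(question, facts)
    context = "\n".join(f"- {f.text}" for f in retrieved) or "- (no matching case fact)"
    return (
        "You are the patient. Answer ONLY from the case facts below; "
        "if they do not cover the question, say you are not sure.\n"
        f"Case facts:\n{context}\n\nStudent: {question}"
    )
```

Because the model only sees curated facts for each turn, an unsupported question produces an explicit "no matching case fact" context rather than an invitation to improvise clinical details.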

5.2 Over-Reliance

Students may mistake LLM replies for gold-standard medical behavior.

Mitigation: Faculty-reviewed rubrics and structured debriefs.

5.3 Bias & Cultural Blind Spots

LLMs inherit training data biases.

Mitigation: Diverse prompting, demographic parameter controls.
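Demographic parameter controls can mean setting persona attributes explicitly and balancing them across a cohort, rather than leaving the mix to the model's defaults. The sketch below assumes a hypothetical attribute set (`DEMOGRAPHICS`) and hypothetical helpers `balanced_personas` and `persona_instruction`; the categories shown are placeholders, not a recommended taxonomy.

```python
import itertools
import random

# Hypothetical demographic parameters; an institution would choose its own
# categories with faculty and community input.
DEMOGRAPHICS = {
    "age_band": ["18-34", "35-59", "60+"],
    "sex": ["female", "male"],
    "primary_language": ["English", "Spanish", "Mandarin"],
}

def balanced_personas(n: int, seed: int = 0) -> list[dict[str, str]]:
    """Return n persona parameter sets that cycle through every attribute combination.

    Shuffling once (reproducibly, via the seed) and then cycling means each of the
    C combinations appears floor(n / C) or ceil(n / C) times.
    """
    keys = list(DEMOGRAPHICS)
    combos = [dict(zip(keys, values)) for values in itertools.product(*DEMOGRAPHICS.values())]
    random.Random(seed).shuffle(combos)
    return [dict(combos[i % len(combos)]) for i in range(n)]  # copies, so callers can edit safely

def persona_instruction(params: dict[str, str], condition: str) -> str:
    """Turn explicit parameters into a persona instruction for the patient model."""
    return (
        f"Play a patient with {condition}. Age band: {params['age_band']}; "
        f"sex: {params['sex']}; preferred language: {params['primary_language']}. "
        "Do not add stereotypes or traits beyond these parameters."
    )
```

Making the parameters explicit also makes them auditable: faculty can review exactly which personas a cohort encountered instead of inferring them from transcripts.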

5.4 Data Privacy

Any student-entered patient data must be synthetic or anonymized.

Mitigation: Strict privacy guidelines inside educational deployments.
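One practical guideline is to screen student-entered text for obvious identifiers before it reaches the model or is logged. The sketch below is illustrative only: the regular expressions are assumptions that catch formats such as email addresses, US-style phone numbers, slash-separated dates, and "MRN" record numbers, and they do not amount to formal de-identification. Names and street addresses, for example, pass straight through.

```python
import re

# Illustrative patterns only; they catch obvious identifier formats and are
# NOT a complete de-identification method.
IDENTIFIER_PATTERNS = {
    "email": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "phone": re.compile(r"\b\d{3}[-.\s]?\d{3}[-.\s]?\d{4}\b"),
    "date": re.compile(r"\b\d{1,2}/\d{1,2}/\d{2,4}\b"),
    "record_number": re.compile(r"\bMRN[:\s]*\d+\b", re.IGNORECASE),
}

def find_identifiers(text: str) -> dict[str, list[str]]:
    """Map each pattern name to the matches found in text (empty dict if none)."""
    hits = {}
    for name, pattern in IDENTIFIER_PATTERNS.items():
        found = pattern.findall(text)
        if found:
            hits[name] = found
    return hits

def redact(text: str) -> str:
    """Replace every match with a [REDACTED-<TYPE>] placeholder."""
    for name, pattern in IDENTIFIER_PATTERNS.items():
        text = pattern.sub(f"[REDACTED-{name.upper()}]", text)
    return text
```

A deployment might block submission when `find_identifiers` returns anything, rather than silently redacting, so students learn to work only with synthetic cases.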

6. Implementation Framework for Institutions

6.1 Phase 1 — Foundation

Select primary use case: history-taking, empathy, diagnostic reasoning

Set clinical accuracy constraints

Use a capable model such as GPT-4.1 or GPT-4o, adapted with medically focused instructions or fine-tuning

6.2 Phase 2 — Virtual Patient Engine

Core capabilities:

Realistic persona behaviors

Emotionally adaptive responses

Multi-turn branching interview logic

Difficulty scaling

OSCE-mapped competencies

Safety + hallucination mitigation layer
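Several of these capabilities (multi-turn branching, difficulty scaling, and a safety layer) can be separated from the language model itself. A minimal sketch, assuming a hypothetical design in which a deterministic layer decides which faculty-approved findings the patient may reveal and the LLM only phrases them in character: `HiddenFinding` and `VirtualPatient` are illustrative names, and substring matching stands in for the intent classification a real engine would need.

```python
from dataclasses import dataclass, field

@dataclass(frozen=True)
class HiddenFinding:
    keywords: tuple[str, ...]  # substrings in a student question that unlock this finding
    disclosure: str            # faculty-approved content the LLM may then voice in character

@dataclass
class VirtualPatient:
    findings: list[HiddenFinding]
    difficulty: int = 1  # 1 = patient volunteers one extra finding per turn; 2+ = answers only what is asked
    disclosed: set[int] = field(default_factory=set)  # indices of findings already revealed

    def respond(self, student_turn: str) -> str:
        """Return the findings this turn unlocks (the branching step of the interview)."""
        turn = student_turn.lower()
        replies = []
        for i, finding in enumerate(self.findings):
            if i not in self.disclosed and any(k in turn for k in finding.keywords):
                self.disclosed.add(i)
                replies.append(finding.disclosure)
        if self.difficulty == 1:
            # Easiest level: volunteer the next undisclosed finding without being asked.
            for i, finding in enumerate(self.findings):
                if i not in self.disclosed:
                    self.disclosed.add(i)
                    replies.append(finding.disclosure)
                    break
        return " ".join(replies) or "I'm not sure what you mean."

    def coverage(self) -> float:
        """Fraction of case findings disclosed so far, usable as a feedback signal."""
        return len(self.disclosed) / len(self.findings) if self.findings else 0.0
```

Keeping disclosure rules outside the model keeps case content fixed across students, and `coverage` gives a simple input for feedback on how thoroughly a student explored the history.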

6.3 Phase 3 — Integration

LMS integration (Moodle, Canvas)

Simulation lab workflows

Scoring & feedback dashboards

Faculty oversight modules
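A scoring and feedback dashboard ultimately needs per-student, per-competency aggregates, plus a way to route weak areas to faculty. The sketch below assumes sessions arrive as hypothetical `(student_id, competency, score)` tuples with scores on a 0 to 1 scale; the function names and the 0.6 review threshold are illustrative and are not drawn from Moodle, Canvas, or any other LMS.

```python
from collections import defaultdict
from statistics import mean

def competency_summary(sessions: list[tuple[str, str, float]]) -> dict[str, dict[str, float]]:
    """Average each student's scores per competency, rounded to two decimals for display."""
    grouped: dict[str, dict[str, list[float]]] = defaultdict(lambda: defaultdict(list))
    for student, competency, score in sessions:
        grouped[student][competency].append(score)
    return {
        student: {comp: round(mean(scores), 2) for comp, scores in comps.items()}
        for student, comps in grouped.items()
    }

def flag_for_review(summary: dict[str, dict[str, float]], threshold: float = 0.6) -> list[tuple[str, str]]:
    """List (student, competency) pairs averaging below threshold, for a faculty oversight queue."""
    return [
        (student, comp)
        for student, comps in summary.items()
        for comp, avg in comps.items()
        if avg < threshold
    ]
```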

6.4 Phase 4 — Assessment

Use standard frameworks:

Calgary–Cambridge model

AAMC EPAs

OSCE checklists

Communication scoring rubrics
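As an illustration of mapping a transcript onto a structured framework, the sketch below scores a student's turns against a small checklist grouped by Calgary–Cambridge stages. The checklist items and cue phrases are hypothetical, and keyword matching is only a crude proxy: validated OSCE checklists and communication rubrics would still need faculty or trained raters, or a separately validated model-based grader.

```python
# Hypothetical checklist: (Calgary-Cambridge stage, checklist item, cue phrases).
CHECKLIST = [
    ("Initiating the session", "Introduces self and role", ("my name is", "medical student")),
    ("Initiating the session", "Elicits the main concern", ("what brings you", "how can i help")),
    ("Gathering information", "Asks about onset", ("when did", "how long")),
    ("Gathering information", "Asks about current medications", ("medication", "tablets", "pills")),
    ("Closing the session", "Checks understanding", ("does that make sense", "any questions")),
]

def score_transcript(student_turns: list[str]) -> dict[str, tuple[int, int]]:
    """Return {stage: (items met, items total)}; an item is met if any cue appears in the turns."""
    text = " ".join(student_turns).lower()
    totals: dict[str, tuple[int, int]] = {}
    for stage, _item, cues in CHECKLIST:
        met, total = totals.get(stage, (0, 0))
        hit = any(cue in text for cue in cues)
        totals[stage] = (met + int(hit), total + 1)
    return totals

def missed_items(student_turns: list[str]) -> list[str]:
    """Checklist items with no matching cue, as prompts for the structured debrief."""
    text = " ".join(student_turns).lower()
    return [item for _stage, item, cues in CHECKLIST if not any(cue in text for cue in cues)]
```

Reporting results per stage rather than as a single total mirrors how the Calgary–Cambridge model structures a consultation, and the missed items give faculty concrete talking points for debriefs.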

7. Global Adoption Outlook (2025–2028)

Near-Term (12 months)

Most major medical schools are likely to adopt some form of LLM simulation

Hybrid OSCEs combining human and AI standardized patients become standard

XR + LLM training modules rise

Mid-Term (24–48 months)

AI Standardized Patients become a required curricular component

National exams integrate LLM-based practice modules

Research shifts from feasibility → optimization → accreditation readiness

8. Conclusion

The evidence is consistent and encouraging: LLM-powered virtual patients are becoming one of the most impactful innovations in medical education since simulation centers were introduced. The technology is not a replacement for clinical training; it is an amplifier that lets every student practice more, practice earlier, and practice more safely.

The institutions that adopt this now will gain:

Better-skilled graduates

Reduced training costs

Scalable simulation infrastructure

A competitive edge in medical education quality

This is a structural shift, not a temporary trend.