AI VISIBILITY AUDITS & SCORING

Measure. Optimize. Amplify. Turn AI outputs into measurable impact.

Pain Point

Tracking AI visibility can feel like a guessing game. Different teams measure success differently, and it’s hard to know which AI outputs are truly driving relevance, authority, and conversion. Without a standard approach, you risk wasted effort, inconsistent results, and missed opportunities.

Our Solution

AI Visibility Scoring Frameworks give you a standardized, data-driven way to measure and optimize your AI content and outputs. With a clear framework, every team can speak the same language and focus on what actually drives impact.

How We Do It

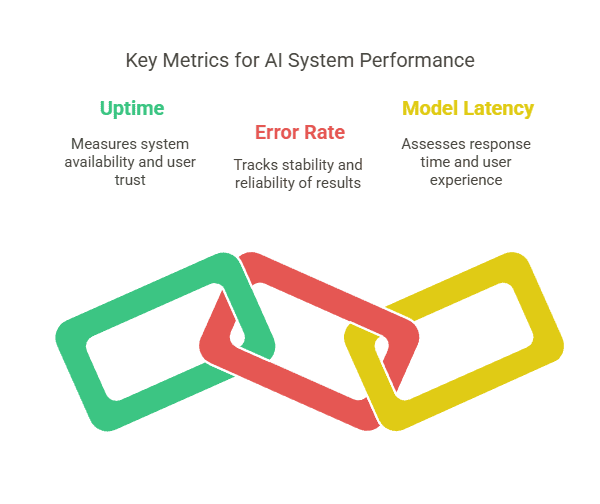

Define Your Scores: We create a composite scoring system across relevance, authority, and conversion, tailored to your business priorities.

Audit Existing AI Outputs: We evaluate every AI-generated content piece, listing, and recommendation against the framework.

Optimize & Iterate: Using insights from the scoring, we refine your AI outputs to boost relevance, enhance credibility, and increase conversions.

Automate & Monitor: We automate scoring and measure continuously, so you can track how AI visibility changes over time.
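To make the scoring and audit steps concrete, here is a minimal sketch of a composite score. It assumes each output already has relevance, authority, and conversion sub-scores on a 0-100 scale, and it uses illustrative weights; the names `ScoredOutput`, `WEIGHTS`, `composite_score`, and `audit` are hypothetical and not part of any delivered tooling.

```python
from dataclasses import dataclass

# Hypothetical integer weights; real weights would reflect business priorities.
WEIGHTS = {"relevance": 4, "authority": 3, "conversion": 3}


@dataclass
class ScoredOutput:
    name: str
    relevance: float   # sub-scores assumed to be on a 0-100 scale
    authority: float
    conversion: float


def composite_score(item, weights=WEIGHTS):
    """Weighted average of the three sub-scores."""
    total_weight = sum(weights.values())
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive number")
    weighted_sum = sum(getattr(item, dim) * w for dim, w in weights.items())
    return weighted_sum / total_weight


def audit(items, weights=WEIGHTS):
    """Return (name, score) pairs, lowest score first, as a priority list."""
    scored = [(item.name, composite_score(item, weights)) for item in items]
    return sorted(scored, key=lambda pair: pair[1])
```

Sorting lowest score first is one simple way to turn an audit into a priority list; a real framework might also weigh traffic or business value when ranking what to fix.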

What You Get

A single, unified visibility score for all AI outputs.

Clear visibility into where your AI excels and where it underperforms.

Prioritized, data-based action plans for optimization.

A repeatable, scalable system to monitor and improve AI visibility across teams.

The Outcome

Transform AI visibility from guesswork into measurable growth. Your AI outputs become more relevant, authoritative, and conversion-focused—driving real business impact while giving your teams confidence in every decision.

AI VISIBILITY DASHBOARDS

Unified AI visibility across every major LLM.

Pain Point

In today’s AI-driven world, your brand and content can appear across multiple large language models—ChatGPT, Gemini, Perplexity, Copilot—but tracking performance is scattered, inconsistent, and time-consuming. Without a unified view, you’re left guessing which content works, which prompts drive results, and where opportunities are slipping away.

Our Solution

Cross-LLM Insights centralizes your AI visibility, giving you a single source of truth across all major LLMs. We aggregate, standardize, and analyze metrics so you can understand exactly how your content performs—anywhere AI engages your audience.

How We Do It

Aggregate Metrics: Pull data from ChatGPT, Gemini, Perplexity, Copilot, and other emerging LLMs.

Standardize Visibility: Normalize outputs across platforms for easy comparison.

Analyze Patterns: Identify top-performing prompts, content formats, and visibility gaps.

Actionable Dashboards: Provide real-time insights, alerts, and recommendations.
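One possible way to standardize visibility across platforms is to convert raw observations into a mention rate, that is, the share of sampled prompts in which the brand appeared. The sketch below assumes the data arrives as `(platform, prompt, mentioned)` records; that record shape and the function name `mention_rates` are illustrative assumptions, not a description of how any platform exposes data.

```python
from collections import defaultdict


def mention_rates(records):
    """Compute the per-platform share of sampled prompts that mention the brand.

    records: iterable of (platform, prompt, mentioned) tuples, where
    `mentioned` is True if the brand appeared in the response (assumed shape).
    """
    sampled = defaultdict(int)
    mentioned = defaultdict(int)
    for platform, _prompt, was_mentioned in records:
        sampled[platform] += 1
        if was_mentioned:
            mentioned[platform] += 1
    # Dividing by sample size makes platforms with different volumes comparable.
    return {platform: mentioned[platform] / sampled[platform] for platform in sampled}
```

Because each rate falls between 0 and 1 regardless of how many prompts were sampled, platforms with very different volumes can be placed side by side on one dashboard.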

What You Get

A centralized dashboard of AI visibility metrics across all major LLMs.

Performance reports highlighting trends, opportunities, and risks.

Data-driven insights to optimize content and prompts for maximum reach.

Alerts and notifications for sudden shifts in AI visibility.
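As an illustration of how an alert on a sudden shift might work, the sketch below compares the latest visibility value with the average of recent history and flags a relative change above a threshold. The 20% default threshold and the function name `detect_shift` are assumptions chosen for the example, not product settings.

```python
from statistics import mean


def detect_shift(history, latest, threshold=0.2):
    """Return True if `latest` deviates from the mean of `history`
    by more than `threshold`, measured relative to that mean."""
    if not history:
        return False  # no baseline yet, so nothing to compare against
    baseline = mean(history)
    if baseline == 0:
        return latest != 0  # any visibility after none counts as a shift
    return abs(latest - baseline) / baseline > threshold
```

A relative threshold keeps the rule comparable across metrics of different sizes; a production system would likely add smoothing or a minimum sample size to avoid noisy alerts.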

The Outcome

With Cross-LLM Insights, you move from fragmented, reactive tracking to proactive, strategic AI visibility management. You’ll know what’s working, make informed decisions faster, and ensure your brand and content consistently appear where it matters—across every major LLM.