LLM-Driven Fraud Detection in Banking: How ChatGPT-Class Systems Transform Anomaly Analysis & Investigation Workflows

Executive Summary

Financial institutions worldwide are under intensifying pressure to counter increasingly sophisticated fraud schemes, including transaction-pattern anomalies, identity theft, mule accounts, synthetic identity fraud, account takeovers, and real-time social-engineering attacks.

Traditional machine-learning fraud systems remain effective for high-volume anomaly detection but often lack explainability, contextual reasoning, and human-readable narratives. ChatGPT-style Large Language Models (LLMs) are becoming central to fraud teams because they fill this gap.

Across industry, surveys and research show:

73% of financial institutions use AI for fraud detection (IBM, ECB sources).

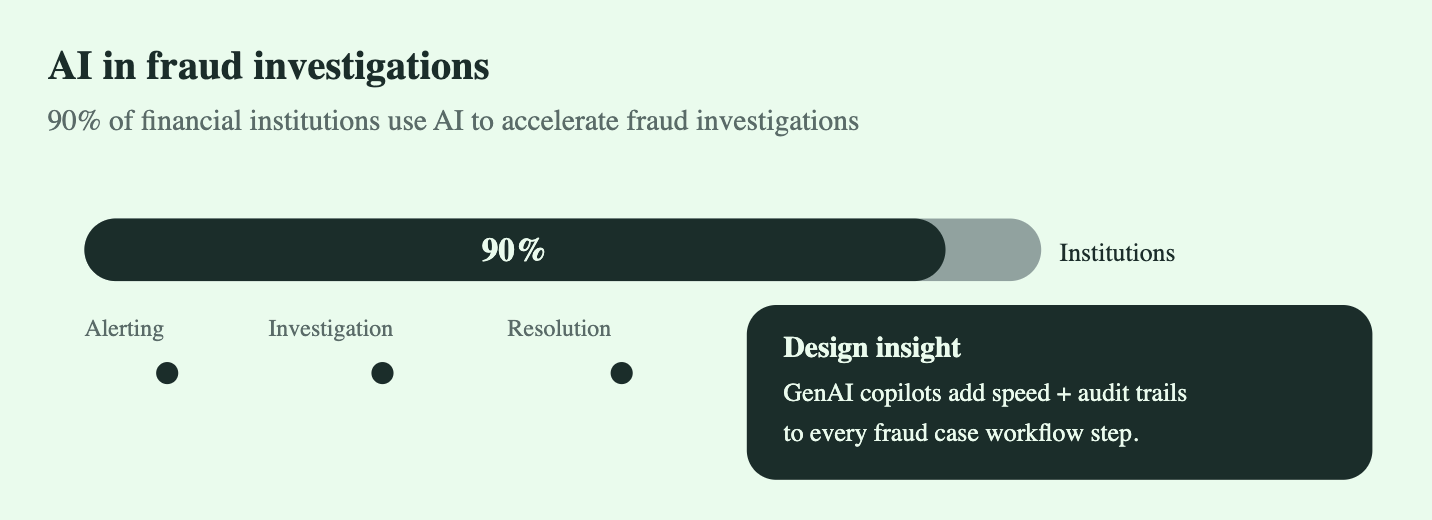

90% leverage AI to accelerate fraud investigations and detect new fraud patterns in real time.

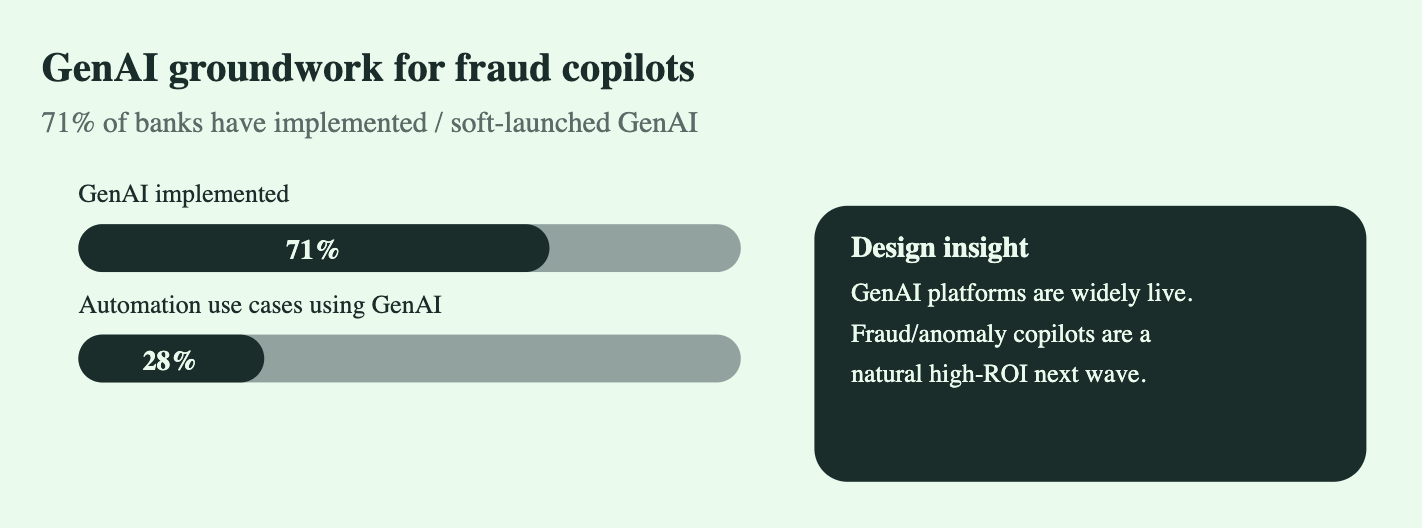

71% of banks have already implemented or soft-launched GenAI solutions, with fraud and risk among the top three workloads.

This whitepaper synthesizes insights from industry research, academic reviews, and regulatory analyses to explain how LLMs elevate fraud detection systems—especially in anomaly analysis, investigator workflows, and narrative generation.

A 2024 global survey found that 73% of financial institutions are already using AI for fraud detection (and 74% for broader financial-crime detection).

A 2025 industry report states that 90% of financial institutions use AI to speed up fraud investigations and detect new fraud tactics in real time.

A 2025 EY banking survey reports 71% of banks have implemented or soft-launched GenAI capabilities, and 28% of current automation use cases now leverage GenAI/agentic AI.

1. Introduction: The Shift Toward AI-Enhanced Fraud Detection

Fraud in financial services has become increasingly pattern-driven, multi-channel, and behavioural, making rules-based systems insufficient.

The reviewed articles converge on three realities:

Fraud operations have become too complex for static rules or human triage alone.

AI is already embedded in major banks’ fraud infrastructure.

LLMs now serve as the cognitive interface between ML models and investigators, making decisions more transparent and faster to act upon.

ChatGPT-class models are not replacing fraud scoring engines; they are augmenting them by:

Explaining anomalies

Summarizing cases

Linking cross-account behaviour

Drafting SAR/STR narratives

Surfacing hidden patterns across unstructured logs

This is why the financial industry is rapidly adopting them.

2. Current Landscape of AI in Fraud Detection

2.1 AI Adoption Across Financial Institutions

Based on IBM, ECB, and systematic reviews, AI adoption is now:

Mainstream in transaction-monitoring systems

Expanding into behavioural biometrics

Integrated with AML for unified fraud-risk frameworks

The literature notes that AI’s strengths include:

Real-time processing of millions of events

Pattern detection that adapts to new fraud strategies

Multi-source data ingestion (transactions, devices, user behaviour, session analytics)

2.2 Drivers Behind AI Adoption

Across regions (UAE, Qatar, U.S., EU), banks adopt AI for:

Speed: Faster alert triage, especially during peaks

Accuracy: Fewer false positives reduce investigator fatigue

Scalability: Handling exponential transaction growth

Regulatory pressure: Expectation of modern surveillance systems

Despite its power, conventional AI still leaves interpretation gaps, and this is where LLMs come in.

3. Rise of LLMs in Fraud Detection

3.1 Why LLMs are Ideal for Fraud Workflows

Based on Taktile, InvestGlass, and SSRN findings, LLMs address a long-standing issue in fraud operations:

Traditional ML detects fraud; LLMs explain it.

LLMs enhance workflows through:

Natural-language summaries of suspicious patterns

Cross-case linking (e.g., “These 4 accounts share the same device signature”)

Contextual reasoning across structured + unstructured logs

Conversational querying (“Why did model M42 flag this account?”)

Drafting SAR/STR compliance reports

Auto-generating investigator notes

Together, these capabilities can substantially reduce investigation time.
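Cross-case linking is the most mechanical of these capabilities: before an LLM can tell an investigator that "these 4 accounts share the same device signature", something has to compute that grouping from the logs. The following is a minimal Python sketch, assuming login events arrive as hypothetical `(account_id, device_id)` pairs; in a copilot, the LLM would phrase and reason over this output rather than derive it from raw logs itself.

```python
from collections import defaultdict

def link_accounts_by_device(events):
    """Group account IDs by device fingerprint; keep only devices used by 2+ accounts."""
    accounts_per_device = defaultdict(set)
    for account_id, device_id in events:
        accounts_per_device[device_id].add(account_id)
    return {
        device: sorted(accounts)
        for device, accounts in accounts_per_device.items()
        if len(accounts) >= 2
    }

def describe_links(links):
    """Turn shared-device groups into short sentences for an investigator or an LLM prompt."""
    return [
        f"These {len(accounts)} accounts share device signature {device}: {', '.join(accounts)}"
        for device, accounts in sorted(links.items())
    ]

# Hypothetical login events: (account_id, device_id)
events = [
    ("ACC-1", "dev-A"), ("ACC-2", "dev-A"), ("ACC-3", "dev-B"),
    ("ACC-4", "dev-A"), ("ACC-5", "dev-A"), ("ACC-3", "dev-B"),
]
for line in describe_links(link_accounts_by_device(events)):
    print(line)
```

Only `dev-A` is reported here; `dev-B` is dropped because a single account used it, even though it appears twice.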

3.2 Key Capabilities Noted Across Articles

4. Techniques & Approaches in AI/LLM-Driven Fraud Detection

A cross-study synthesis from arXiv, IJSRA, ECB, and IBM reveals three major layers:

4.1 Layer 1 — Core Machine Learning Detection Models

(Traditional AI foundation)

Supervised learning (XGBoost, LightGBM, CatBoost)

Unsupervised anomaly detection (Autoencoders, Isolation Forest)

Neural sequence models for transaction timelines

Behavioural biometrics (session velocity, mouse patterns, device fingerprinting)

These models generate the initial detection signals but, on their own, cannot explain them in terms an investigator can act on.
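To make the Layer 1 idea concrete without pulling in an ML library, the sketch below scores one customer's transaction amounts with a robust (modified) z-score built from the median and the median absolute deviation. This is a deliberately simpler statistical baseline swapped in for illustration, not an autoencoder or Isolation Forest; the 3.5 cut-off is a commonly cited default for this score, and the sample amounts are invented.

```python
import statistics

def robust_z_scores(amounts):
    """Modified z-scores based on the median and median absolute deviation (MAD)."""
    median = statistics.median(amounts)
    mad = statistics.median([abs(a - median) for a in amounts])
    if mad == 0:
        # Degenerate history (e.g. most amounts identical); a real system needs a fallback here
        return [0.0] * len(amounts)
    # 0.6745 rescales MAD so scores are comparable to standard z-scores for normal data
    return [0.6745 * (a - median) / mad for a in amounts]

def flag_anomalies(amounts, threshold=3.5):
    """Indices of amounts whose absolute modified z-score exceeds the threshold."""
    return [i for i, z in enumerate(robust_z_scores(amounts)) if abs(z) > threshold]

# Invented card history for one customer; the last payment is the outlier
history = [42.0, 38.5, 40.0, 45.0, 39.0, 41.0, 1200.0]
print(flag_anomalies(history))
```

Like the models listed above, this only says *which* transactions look unusual, not *why*; that is the gap the next layer is meant to fill.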

4.2 Layer 2 — LLM “Cognition & Explanation Layer”

(Where ChatGPT-class models shine)

LLMs interpret detected anomalies and add meaning by:

Reading transaction histories

Detecting suspicious chains of events

Explaining why behaviour deviates from normal patterns

Generating next-step recommendations for investigators

Producing narrative summaries for audit/regulatory teams

This “interpretation layer” is the missing link in many current systems.
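A common pattern for this layer is to pass the LLM only structured facts produced by Layer 1 (for example, the model score and per-feature attributions such as SHAP values) and instruct it not to go beyond them. The sketch below only assembles such a prompt; the alert fields are hypothetical, and sending the prompt to a model is left to whichever LLM client the bank has approved.

```python
import json

def build_explanation_prompt(alert, max_factors=3):
    """Build a grounded prompt asking an LLM to explain an ML fraud alert.

    `alert` is a hypothetical dict with 'alert_id', 'score', and 'contributions'
    (feature name -> signed contribution to the score, e.g. SHAP values).
    """
    # Rank factors by absolute influence, whether they raised or lowered the score
    ranked = sorted(alert["contributions"].items(), key=lambda kv: abs(kv[1]), reverse=True)
    facts = {
        "alert_id": alert["alert_id"],
        "model_score": alert["score"],
        "top_factors": [
            {"feature": name, "contribution": round(value, 3)}
            for name, value in ranked[:max_factors]
        ],
    }
    return (
        "You are assisting a bank fraud investigator.\n"
        "Using ONLY the facts in the JSON below, explain in plain language why this alert "
        "was raised, list the factors in order of importance, and suggest next investigative "
        "steps. If the facts are insufficient, say so instead of guessing.\n\n"
        "FACTS:\n" + json.dumps(facts, indent=2)
    )

alert = {
    "alert_id": "A-1001",
    "score": 0.91,
    "contributions": {"amount_vs_avg": 0.42, "new_device": 0.31,
                      "payee_age_days": -0.12, "night_time": 0.05},
}
print(build_explanation_prompt(alert))
```

Restricting the prompt to a small, explicit fact set makes the resulting narrative easier to audit than one produced from raw transaction dumps.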

4.3 Layer 3 — Investigator Copilot Interfaces

Articles describe emerging interfaces such as:

Chat-based investigation consoles

Fraud-agent copilots with search + summarization

Automated SAR/STR drafting modules

Interactive timelines with LLM-generated annotations

Banks are shifting from dashboards to copilots.
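An automated SAR/STR drafting module typically starts from structured case facts rather than free generation. The sketch below, using hypothetical field names, fills a who/what/when/where/why skeleton and refuses to draft if a core field is empty; an LLM could then polish the wording, but the output remains a draft for investigator review, not a filing.

```python
from dataclasses import dataclass, field
from typing import List

@dataclass
class CaseFacts:
    """Hypothetical structured case record collected by the investigation console."""
    subject: str
    activity: str
    period: str
    channel: str
    reason: str
    transaction_ids: List[str] = field(default_factory=list)

REQUIRED = ("subject", "activity", "period", "channel", "reason")

def draft_sar_narrative(case: CaseFacts) -> str:
    """Fill a fixed who/what/when/where/why skeleton from case facts."""
    missing = [name for name in REQUIRED if not getattr(case, name).strip()]
    if missing:
        # Refuse rather than let a generator invent the missing facts
        raise ValueError("cannot draft narrative; missing: " + ", ".join(missing))
    return "\n".join([
        f"WHO: {case.subject}",
        f"WHAT: {case.activity}",
        f"WHEN: {case.period}",
        f"WHERE: {case.channel}",
        f"WHY SUSPICIOUS: {case.reason}",
        "SUPPORTING TRANSACTIONS: " + (", ".join(case.transaction_ids) or "none listed"),
        "STATUS: DRAFT, requires investigator review before filing",
    ])

case = CaseFacts(
    subject="Customer C-77 and three linked accounts",
    activity="Rapid in-and-out transfers totalling AED 48,000",
    period="2025-03-01 to 2025-03-09",
    channel="Mobile banking",
    reason="Funds forwarded within minutes to newly added payees",
    transaction_ids=["T-101", "T-102", "T-107"],
)
print(draft_sar_narrative(case))
```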

5. Regulatory, Ethical & Operational Considerations

Research (ECB, UAE/Qatar academic studies) identifies the major constraints:

5.1 Transparency and Explainability

Regulators demand “model explainability.”

LLMs help satisfy this by:

Converting anomalies into explainable narratives

Clarifying decision paths

Highlighting risk-factors contributing to fraud scores
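Highlighting the risk factors behind a score is often done with reason codes: per-feature attributions are ranked and mapped to fixed, pre-approved sentences. The sketch below assumes attributions are already available (for example from SHAP or a scorecard); the feature names and wording are hypothetical. A deterministic list like this also gives any LLM-written narrative something concrete to be checked against.

```python
# Hypothetical feature names mapped to pre-approved, plain-language reasons
REASON_TEXT = {
    "amount_vs_avg": "Amount is far above this customer's usual spend",
    "new_device": "Session came from a device not previously seen on this account",
    "payee_age_days": "Payee was added very recently",
}

def reason_codes(contributions, top_n=3):
    """Rank risk-increasing attributions and map them to readable reasons."""
    # Only factors that pushed the fraud score up, largest first
    risk_up = sorted(
        ((name, value) for name, value in contributions.items() if value > 0),
        key=lambda item: item[1],
        reverse=True,
    )
    reasons = []
    for name, value in risk_up[:top_n]:
        text = REASON_TEXT.get(name, f"Feature '{name}' increased the score")
        reasons.append(f"{text} (+{value:.2f})")
    return reasons

for line in reason_codes({"new_device": 0.31, "amount_vs_avg": 0.42,
                          "payee_age_days": -0.12, "device_os_rare": 0.08}):
    print(line)
```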

5.2 Fairness & Bias

Studies outline risks when AI models:

Over-flag certain demographics

Learn biases from historical fraud labels

LLMs must be trained and governed with strong guardrails.
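A first governance check for over-flagging is simply to compare alert rates across groups. The sketch below assumes a hypothetical audit extract of `(group, was_flagged)` pairs with invented counts; a disparity ratio well above 1.0 is a prompt for investigation, not proof of bias on its own, since underlying fraud rates can legitimately differ.

```python
from collections import Counter

def flag_rates(records):
    """Share of cases flagged per group; records are (group_label, was_flagged) pairs."""
    totals, flagged = Counter(), Counter()
    for group, was_flagged in records:
        totals[group] += 1
        if was_flagged:
            flagged[group] += 1
    return {group: flagged[group] / totals[group] for group in totals}

def max_disparity_ratio(rates):
    """Highest group flag rate divided by the lowest; 1.0 means identical rates."""
    highest, lowest = max(rates.values()), min(rates.values())
    if highest == 0:
        return 1.0  # nobody flagged in any group
    if lowest == 0:
        return float("inf")  # some groups flagged, at least one never flagged
    return highest / lowest

# Hypothetical audit sample: 100 reviewed customers per group
records = (
    [("group_a", True)] * 2 + [("group_a", False)] * 98
    + [("group_b", True)] * 6 + [("group_b", False)] * 94
)
rates = flag_rates(records)
print(rates, round(max_disparity_ratio(rates), 2))
```

In this invented sample, `group_b` is flagged at three times the rate of `group_a`, which would warrant a closer look at the features and historical labels driving those alerts.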

5.3 Data Privacy & Security

LLMs should operate only with:

Encrypted context

Strict data-minimisation

Region-specific compliance (GDPR, MAS TRM, RBI norms)
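Strict data-minimisation can be enforced in code before any prompt leaves the bank's boundary: drop every field not on an allowlist, then mask identifiers that leak into free text. The following is a minimal Python sketch; the allowlist and field names are hypothetical, and the regular expressions are deliberately simple illustrations, not a complete PII detector.

```python
import re

# Hypothetical allowlist: only these fields may be sent to the LLM at all
ALLOWED_FIELDS = {"alert_id", "amount", "currency", "channel", "notes"}

# Deliberately simple patterns for illustration, not a complete PII detector
EMAIL_RE = re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b")
IBAN_RE = re.compile(r"\b[A-Z]{2}\d{2}[A-Z0-9]{11,30}\b")
CARD_RE = re.compile(r"\b\d(?:[ -]?\d){12,18}\b")

def mask_text(text):
    """Replace e-mail addresses, IBAN-like strings, and card-like numbers with placeholders."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    text = IBAN_RE.sub("[IBAN]", text)
    return CARD_RE.sub("[CARD]", text)

def minimise_for_llm(record):
    """Keep allowlisted fields only and mask identifiers inside free-text values."""
    return {
        key: mask_text(value) if isinstance(value, str) else value
        for key, value in record.items()
        if key in ALLOWED_FIELDS
    }

record = {
    "alert_id": "A-1001",
    "customer_name": "Jane Doe",
    "date_of_birth": "1984-02-11",
    "amount": 4500,
    "currency": "AED",
    "notes": "Contact j.doe@example.com; paid from GB82WEST12345698765432 "
             "using card 4111 1111 1111 1111",
}
print(minimise_for_llm(record))
```

Allowlisting fields is the stronger control of the two: masking can miss unusual formats, whereas a field that is never copied into the prompt cannot leak through it.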

5.4 Operational Reliability

Banks must mitigate:

Hallucinations

Misinterpretation of fraudulent vs legitimate customer behaviour

Over-reliance on AI narratives without human review

Most articles recommend “AI + human” hybrid investigation models.
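In a hybrid model, one cheap guardrail against hallucinated narratives is to check every amount and account ID the LLM mentions against the case record and route any mismatch to a human reviewer. The sketch below assumes amounts are written with an ISO currency code and that account IDs follow a hypothetical `ACC-<digits>` format; real narratives would need broader parsing.

```python
import re

# Amounts written with an ISO currency code, e.g. "AED 4,500.00" (simplified pattern)
CURRENCY_AMOUNT_RE = re.compile(r"\b(?:USD|EUR|GBP|AED|QAR)\s?(\d+(?:,\d{3})*(?:\.\d+)?)")
# Hypothetical internal account-ID format
ACCOUNT_ID_RE = re.compile(r"\bACC-\d+\b")

def unsupported_claims(narrative, case_amounts, case_accounts):
    """List amounts and account IDs in an LLM narrative that are absent from the case record."""
    known_amounts = {round(float(a), 2) for a in case_amounts}
    known_accounts = set(case_accounts)
    issues = []
    for raw in CURRENCY_AMOUNT_RE.findall(narrative):
        if round(float(raw.replace(",", "")), 2) not in known_amounts:
            issues.append(f"amount not in case data: {raw}")
    for account in ACCOUNT_ID_RE.findall(narrative):
        if account not in known_accounts:
            issues.append(f"account not in case data: {account}")
    return issues

narrative = (
    "ACC-1 received AED 4,500.00 and forwarded AED 4,400 to ACC-9 within minutes; "
    "a further AED 12,000 followed."
)
issues = unsupported_claims(narrative, case_amounts=[4500, 4400], case_accounts=["ACC-1", "ACC-2"])
print(issues or "narrative consistent with case data")
```

A non-empty result does not prove the narrative is wrong, only that it states something the case data cannot confirm, which is exactly the situation that calls for human review.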

6. Challenges in Deploying LLM-Driven Fraud Systems

Across articles, the main challenges are:

Integration with legacy fraud engines

High governance requirements

Latency constraints in real-time fraud systems

Need for secure on-prem or VPC-hosted LLMs

Lack of labelled datasets for fine-tuning

Risk of regulatory non-compliance without explanation layers

LLMs address many of these challenges, but not all.
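For the latency constraint in particular, one option is to keep the real-time decision with the ML scoring layer and give the LLM explanation step a hard deadline, falling back to a deterministic template when the model is too slow or fails. The following is a minimal Python sketch; `slow_llm` and `template` are hypothetical stand-ins, and the 0.5-second default budget is illustrative rather than a recommended value.

```python
import concurrent.futures
import time

# Shared pool so a slow LLM call never blocks the caller beyond the deadline
_EXECUTOR = concurrent.futures.ThreadPoolExecutor(max_workers=4)

def explain_with_deadline(explain_fn, alert, fallback_fn, timeout_s=0.5):
    """Try the (hypothetical) LLM explainer; fall back to a template if it is slow or fails."""
    future = _EXECUTOR.submit(explain_fn, alert)
    try:
        return future.result(timeout=timeout_s), "llm"
    except concurrent.futures.TimeoutError:
        # The worker thread keeps running in the background; its result is discarded
        return fallback_fn(alert), "fallback:timeout"
    except Exception:
        return fallback_fn(alert), "fallback:error"

def slow_llm(alert):
    time.sleep(0.3)  # stands in for a slow model call
    return f"LLM narrative for {alert['alert_id']}"

def template(alert):
    return f"Alert {alert['alert_id']}: score {alert['score']:.2f}; see reason codes."

print(explain_with_deadline(slow_llm, {"alert_id": "A-7", "score": 0.93}, template, timeout_s=0.05))
```

The second element of the returned tuple records which path produced the text, so downstream audit logs can distinguish LLM narratives from template fallbacks.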

7. Future Outlook (2025–2028)

All sources predict accelerating adoption, with four major shifts:

7.1 Fraud Investigators Will Use AI First, Then Act

LLM copilots will become the default entry point for fraud analysts.

7.2 Unified AML + Fraud + Risk Copilots

Banks will shift away from siloed platforms and toward unified GenAI copilots.

7.3 Agentic AI in Fraud Workflows

Next-generation systems will:

Pull evidence from multiple systems

Cross-reference case history

Suggest optimal action

Create audit trails automatically
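Automatically created audit trails are most useful when later edits are detectable. A common technique is a hash chain, where each record embeds the SHA-256 hash of the previous one. The sketch below is tamper-evident rather than tamper-proof (anyone able to rewrite the whole log could recompute every hash), and the actor and action names are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64

def _digest(body):
    # Canonical JSON (sorted keys) so the same content always hashes the same way
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()

def append_entry(trail, actor, action, details):
    """Append an audit record that embeds the hash of the previous record."""
    body = {
        "seq": len(trail),
        "actor": actor,
        "action": action,
        "details": details,
        "prev_hash": trail[-1]["hash"] if trail else GENESIS,
    }
    trail.append({**body, "hash": _digest(body)})

def verify_trail(trail):
    """Recompute every hash; editing any earlier entry breaks the chain."""
    prev_hash = GENESIS
    for i, entry in enumerate(trail):
        body = {k: v for k, v in entry.items() if k != "hash"}
        if body["seq"] != i or body["prev_hash"] != prev_hash or entry["hash"] != _digest(body):
            return False
        prev_hash = entry["hash"]
    return True

trail = []
append_entry(trail, "agent", "fetch_evidence", {"system": "core_banking", "case": "C-77"})
append_entry(trail, "agent", "suggest_action", {"action": "freeze_card"})
append_entry(trail, "analyst:jdoe", "approve", {"action": "freeze_card"})
print(verify_trail(trail))
```

Recording the agent's suggestion and the analyst's approval as separate entries keeps the human decision point visible in the trail.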

7.4 Multi-modal Fraud Detection

Future LLMs will analyze:

Voice phishing calls

Screenshots

Video KYC

Biometrics

Behavioural telemetry

This should make fraud harder for attackers to commit and easier for banks to detect.

Conclusion

Fraud detection is one of the highest-ROI applications of AI and LLMs in financial services.

The existing ML layer is now enhanced by a new LLM cognitive layer, enabling deeper reasoning, faster investigations, and far more interpretable outputs.

Banks that combine:

ML anomaly detection

LLM-based explanation + workflow automation

Regulatory-grade transparency

…will lead the next generation of fraud-resilient financial infrastructure.

This is the direction nearly all reviewed articles indicate, with 2025 marking the tipping point for large-scale GenAI deployment in fraud and AML.