Battle of the Beauty Brands – Comparative AI Visibility Analysis

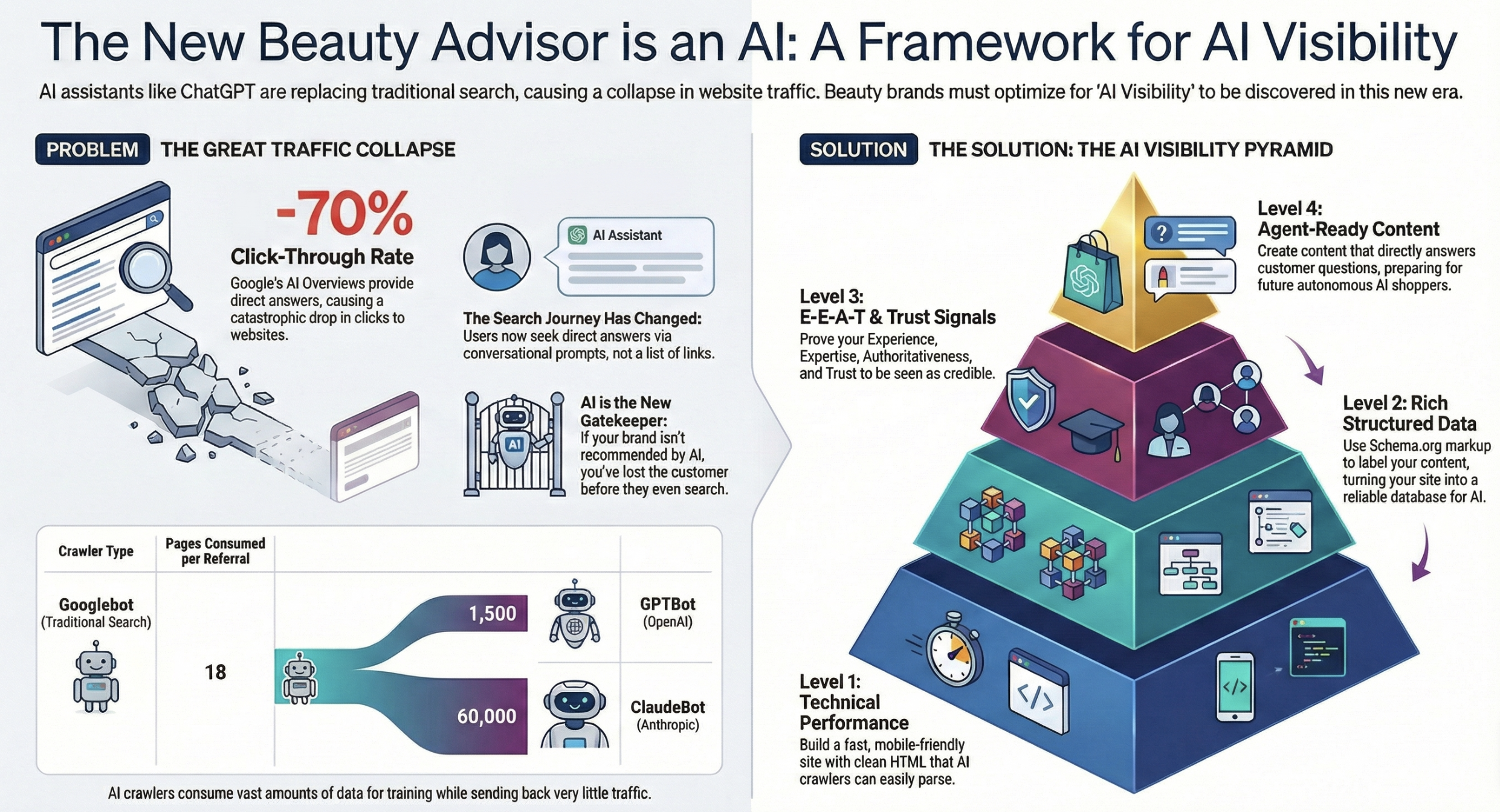

The beauty industry is experiencing a "tectonic shift" in consumer discovery. The traditional search funnel has collapsed into a "zero-click economy" where AI platforms like ChatGPT, Google Gemini, and Perplexity serve as the primary beauty advisors. In this environment, traditional SEO is no longer sufficient; brands must optimize for AI Visibility: the ability to be cited, recommended, and prioritized by Large Language Models (LLMs).

This report analyzes the AI visibility performance of major beauty brands. The data reveals a critical "Visibility Paradox": while many heritage brands achieve high recognition when searched for by name (Mention Rate), they frequently fail to dominate broader, generic category conversations (Share of Voice), often ceding ground to competitors who have mastered the "trust stack" of AI citations.

The $743 billion figure refers to the staggering financial loss the U.S. fashion industry incurred in 2023 due to returns, representing 14.6% of all purchases.

This figure quantifies a massive "confidence gap" in digital commerce—the disconnect between what a consumer hopes they are buying and what actually arrives. In the absence of physical interaction (the "dressing room" experience), consumers lack confidence in fit, texture, and suitability, leading to a crisis of reverse logistics.

Here is a breakdown of this $743 billion gap and how AI is being deployed to close it:

1. The Scale of the Crisis

The gap in purchase confidence is most visible in the disparity between physical and digital return rates. While return rates for brick-and-mortar stores average 8-10%, online fashion return rates have soared to 30-40%.

• The Cost of "Maybe": When consumers lack the confidence that an item is right for them, they over-order and return. Handling these returns costs retailers up to 66% of the original item’s price, covering transport, inspection, repackaging, and resale.

• Devaluation: The time lag in processing these returns creates "blind spots" where stock sits in warehouses depreciating. Delayed processing results in stock markdowns of 25-50%, further eroding profitability.
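The two cost figures above can be combined into a rough per-item calculation. The sketch below is a simplified model, not taken from the source reports: it assumes both percentages apply to the original price and that the item is eventually resold at the marked-down price. The function name and the 30% handling rate in the milder case are hypothetical.

```python
def recovered_value(price: float, handling_rate: float, markdown_rate: float) -> float:
    """Net value recovered from one returned item (simplified, assumed model).

    handling_rate: share of the original price spent on transport,
                   inspection, repackaging, and resale.
    markdown_rate: share of the original price lost to markdown on resale.
    """
    resale_price = price * (1 - markdown_rate)
    handling_cost = price * handling_rate
    return resale_price - handling_cost


# Worst case using the upper bounds quoted above: 66% handling, 50% markdown.
worst = recovered_value(100.0, 0.66, 0.50)

# Milder case: hypothetical 30% handling, 25% markdown (the quoted lower bound).
mild = recovered_value(100.0, 0.30, 0.25)

print(round(worst, 2), round(mild, 2))  # -16.0 45.0
```

Under these assumptions, a $100 item returned in the worst case loses money even after it is resold, which is why reducing return volume matters more than speeding up any single step.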

2. Operational Consequences

The $743 billion loss is not just in lost sales, but in the operational chaos required to bridge the gap between a return and a resale:

• Repair Requirements: Approximately 50-60% of returned goods require repairs before they can be resold, driving up labor costs.

• Environmental Impact: This gap also represents an environmental crisis. Reverse logistics accounts for 750,000 tonnes of CO2 emissions annually in the UK alone, with transport contributing nearly 50% of total emissions in the returns process.

3. Closing the Gap with AI

To recover this $743 billion, brands are utilizing AI to increase consumer confidence before the purchase and optimize logistics after the return.

A. Pre-Purchase Confidence (Virtual Try-On)

In the beauty sector, AI tools are actively narrowing this gap by simulating the physical trial.

• Virtual Try-On: Consumers who engage with AI makeup software (virtual try-ons) are around 40% more likely to buy the product, effectively bridging the gap between virtual trials and physical sales.

• Reducing Price Sensitivity: These tools are particularly effective for consumers who are price-sensitive or have body image concerns, providing the confidence needed to commit to a purchase without the ability to physically test the product.

B. Post-Purchase Optimization (Predictive Analytics)

Brands are using AI to predict and mitigate the volume of returns before they happen.

• Forecasting Returns: AI-driven demand planning can predict which products are most likely to be returned, allowing brands to adjust stock levels accordingly. This technology has helped brands cut inventory waste by up to 20%.

• Automated Recovery: Automated warehouses using RFID and blockchain can now process 500-600 returned items per hour, ensuring goods are resold quickly before they lose value or require markdowns.


The Collapse of the Referral Economy

The "Old Web" was built on a foundational, implicit contract: creators and businesses produced high-quality content, and in exchange, search engines (primarily Google) delivered qualified traffic to their websites. This symbiotic relationship—the engine of the referral economy—has fundamentally broken.

We are witnessing a systemic failure of this model, replaced by an environment where AI systems ingest content to retain users rather than refer them.

1. The Rise of the "Zero-Click" Economy

The primary symptom of this collapse is the "Zero-Click" Search Engine Results Page (SERP). The goal of traditional SEO was to rank high enough to earn a click. Today, that currency has been devalued because the majority of searches no longer result in a visit to an external website.

• The Stats: Nearly 60% of all searches now end without a click, as users get their answers directly on the search page or within an AI chat interface.

• The Consequence: When AI provides a synthesized answer (e.g., Google’s AI Overviews), click-through rates for organic results plummet. Studies show drops in click-through rates ranging from 18% to over 50% for top-ranking positions when an AI overview is present.

• The Dead End: Users are significantly more likely to end their search session entirely after reading an AI-generated summary, perceiving the answer as "good enough" and terminating the journey of discovery that used to drive traffic to brand sites.

2. The Asymmetry of Extraction

The relationship between content creators and digital platforms has shifted from partnership to extraction.

• New Crawlers: Unlike traditional search bots (like Googlebot) that indexed content to rank links, modern AI crawlers (like OpenAI’s GPTBot or Anthropic’s ClaudeBot) harvest data to train models.

• The Ratio Imbalance: In the old economy, a bot might crawl 18 pages to send one visitor. Today, AI crawlers operate on an industrial data-mining scale, with some models consuming an estimated 1,500 to 60,000 pages for every single referral they send back to a publisher.

• The Result: Brands and publishers are effectively subsidizing the intelligence of the very platforms that are displacing them, creating a "lose-lose" scenario where value is extracted without compensation in the form of traffic.
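The ratio imbalance described above can be tracked from a site's own logs. The following is a minimal sketch assuming a publisher can count, per crawler, the pages fetched and the visits referred back; the crawler labels and counts are hypothetical and chosen only to reproduce the 18:1 and 1,500:1 ratios quoted above.

```python
def crawl_to_referral_ratio(pages_crawled: int, referrals: int) -> float:
    """Pages fetched by a crawler for every visitor it sends back."""
    if referrals == 0:
        # No traffic returned at all: the ratio is unbounded.
        return float("inf")
    return pages_crawled / referrals


# Hypothetical monthly counts from server logs and analytics.
log_counts = {
    "search-bot": {"crawled": 18_000, "referrals": 1_000},
    "ai-bot": {"crawled": 1_500_000, "referrals": 1_000},
}

ratios = {
    name: crawl_to_referral_ratio(c["crawled"], c["referrals"])
    for name, c in log_counts.items()
}

for name, ratio in ratios.items():
    print(f"{name}: {ratio:,.0f} pages crawled per referral")
```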

3. The "AI Shelf": Visibility vs. Invisibility

In the old web, being on "Page 2" of Google was bad; in the new AI web, if you aren't the primary answer, you don't exist.

• The Collapse of Choice: AI-driven search compresses the traditional list of "10 blue links" into a single, curated answer or a short list of 3–5 recommendations.

• The Binary Outcome: There is no "Page 2" in a Large Language Model (LLM). You are either the trusted answer cited by the AI, or you are invisible.

• Commerce Displacement: This shift creates a "commerce displacement risk." An AI assistant can now guide a consumer through the entire purchase funnel—from discovery to comparison to a "buy" link—without the consumer ever visiting the brand's actual website or reading their marketing copy.

4. The New Gatekeepers: From Keywords to "Trust Stacks"

The mechanism for being found has shifted from keyword matching to "Generative Engine Optimization" (GEO). AI does not simply look for keywords; it looks for a "Trust Stack" to verify information.

• The Verification Hierarchy: To be cited, a brand must be present in the sources AI trusts. For beauty and retail, this stack includes Community Validation (Reddit), Transactional Data (Amazon/Sephora reviews), and Editorial Authority (major publications).

• The Unknown Void: A significant portion of AI citations now come from "Unknown" sources or aggregated data, making it harder for brands to track where their reputation is being formed.

• Structural Requirements: To survive, brands must make their data "machine-readable" using tools like llms.txt files and JSON-LD schema markup. If the AI cannot parse the data structure, the brand is ignored.
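As a rough illustration of the llms.txt idea mentioned above, the sketch below assembles such a file. It assumes the layout used in the public llms.txt proposal (a title heading, a short quoted summary, then sections of annotated links). The brand name, URLs, and the `build_llms_txt` helper are hypothetical.

```python
def build_llms_txt(title: str, summary: str, sections: dict) -> str:
    """Render an llms.txt-style Markdown document.

    sections maps a section heading to a list of (name, url, note) tuples.
    """
    lines = [f"# {title}", "", f"> {summary}", ""]
    for heading, links in sections.items():
        lines.append(f"## {heading}")
        lines.append("")
        for name, url, note in links:
            lines.append(f"- [{name}]({url}): {note}")
        lines.append("")
    # Collapse trailing blank lines into a single final newline.
    return "\n".join(lines).rstrip() + "\n"


# Hypothetical brand and placeholder URLs.
llms_txt = build_llms_txt(
    title="Example Beauty Co",
    summary="Skincare brand; product facts, ingredient lists, and usage guides.",
    sections={
        "Products": [
            ("Vitamin C Moisturizer",
             "https://example.com/products/vitamin-c-moisturizer",
             "Ingredients, usage, and skin-type guidance"),
        ],
        "Guides": [
            ("Sensitive skin FAQ",
             "https://example.com/guides/sensitive-skin",
             "Direct answers to common questions"),
        ],
    },
)
print(llms_txt)
```

The resulting text would typically be served from the site root as `/llms.txt`; whether a given AI crawler actually reads it is not something this sketch can guarantee.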

1. Introduction: The End of "Search" as We Know It

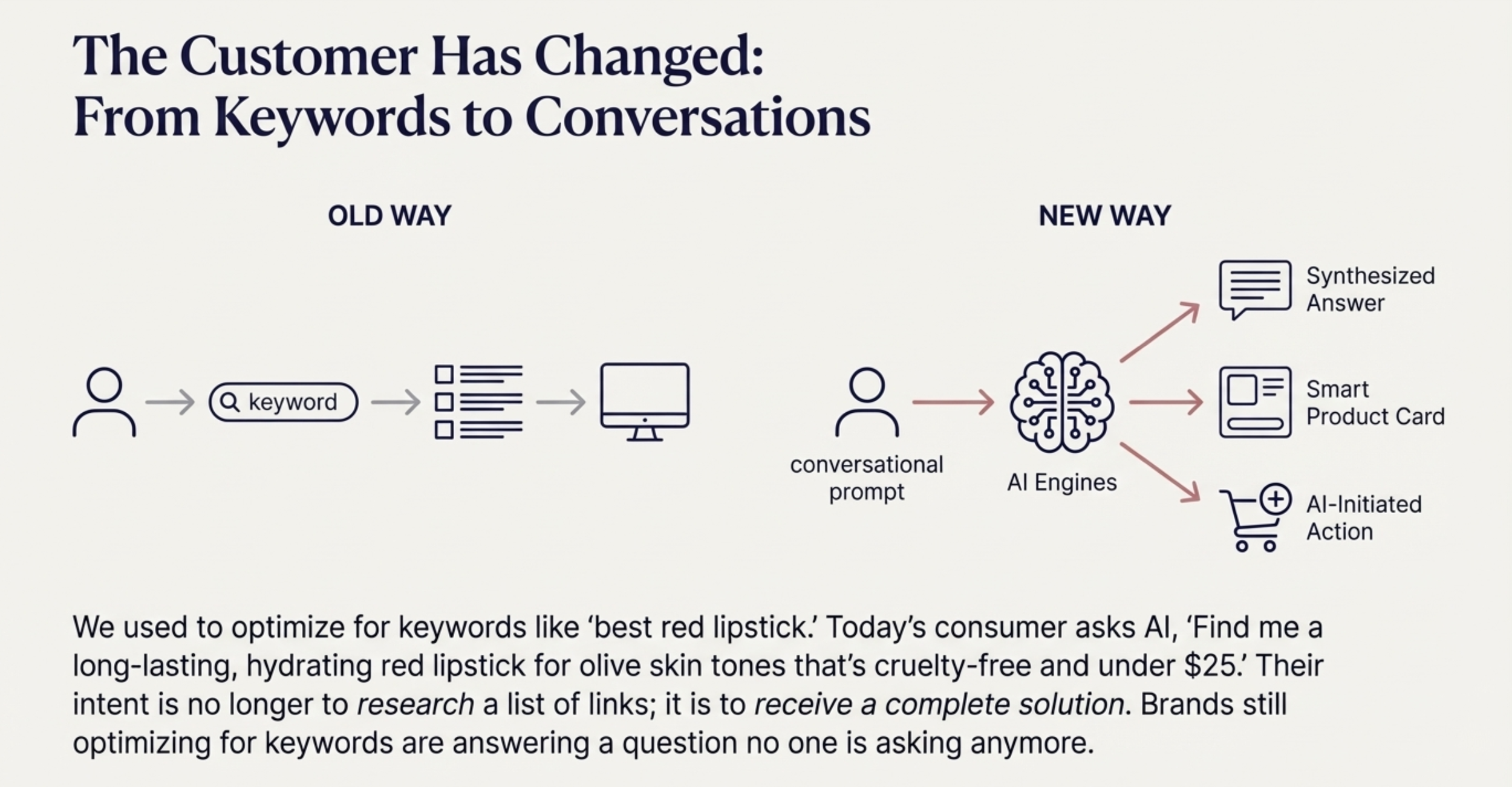

The digital economy is witnessing a fundamental collapse of the traditional "referral economy" and the rise of a new paradigm: Conversational Discovery. For two decades, the internet was organized around keywords—stilted, abbreviated phrases like "best face cream" or "cheap flights Italy" that users typed into search bars to find a list of links.

That era is ending. Today, consumers are transitioning from "searching" (hunting for links) to "asking" (demanding synthesized answers). Platforms like ChatGPT, Google Gemini, and Perplexity are not merely search engines; they act as intelligent consultants, capable of processing complex, natural language queries and providing a single, definitive answer. This shift is reshaping the marketing funnel, as nearly 60% of adults now research products on generative AI platforms before ever visiting a brand website or search engine.

2. The Behavioral Shift: Natural Language and Nuance

A. Complexity Over Brevity

The foundational unit of discovery has shifted from the keyword to the prompt. Users are no longer constrained by "keyword-ese"; they engage in dialogue.

• The Shift: Instead of searching for "Italy travel guide," a user now asks, "Plan me a 5-day Italy itinerary with budget tips and local eats".

• The Data: Search queries containing five or more words are growing 1.5 times faster than shorter queries. These long-form, conversational inputs trigger AI-generated answers rather than traditional link lists.

B. The Demand for Synthesis

Consumers no longer want to click through ten blue links to piece together an answer. They expect the AI to do the work for them.

• Zero-Click Economy: Approximately 60% of all searches now end without a click, as users receive a satisfactory summary directly on the results page or chat interface.

• The "Good Enough" Answer: Users are significantly more likely to end their session after reading an AI overview (26% of the time) compared to a traditional search result (16%), indicating that the AI's synthesis is often perceived as a complete solution.

C. The Rise of the AI Advisor

In sectors like beauty and retail, AI has evolved from a tool into an advisor.

• 24/7 Consultation: AI platforms act like personal consultants. A user can ask, "My skin feels dull and flaky. What should I try?" and receive a tailored regimen rather than a generic product list.

• Contextual Understanding: Unlike a keyword search, AI understands context. If a user asks about "cold weather golf gear," the AI doesn't just list golf clubs; it dynamically bundles base layers, gloves, and jackets suited for the specific weather conditions.

3. The Trust Architecture: Who Do Conversations Trust?

If keywords are no longer the primary currency, what determines which brands appear in these conversations? AI models do not rank based on backlinks alone; they rely on a "Trust Stack" to verify information.

• Community Validation (The "Social Truth"): AI models heavily weight information from forums where real humans discuss products. Reddit has become the number-one cited source in ChatGPT’s beauty answers, appearing in approximately 40% of citations. If a brand isn't being discussed authentically on Reddit, it risks invisibility.

• The "Unknown" & Retailer Data: A massive portion of AI citations comes from "Unknown" sources (up to 33% in some audits) or major retailers like Sephora and Amazon. This indicates that AI relies on the Transactional Layer (reviews, specs, availability) to verify if a product is real and purchase-worthy.

• Generational Trust: Trust in AI is skyrocketing. 79% of users now trust AI advice as much as Google search results. For Gen Z, the preference is even starker: over half prefer asking AI for recommendations over using Google or Amazon.

4. The New Technical Imperative: Speaking "Machine"

To survive the shift from keywords to conversations, brands must adopt Generative Engine Optimization (GEO). This involves structuring data so that Large Language Models (LLMs) can "read" and "understand" the brand.

• From Keywords to Entities: AI doesn't just look for text matches; it looks for entities (facts about a product). Brands must use structured data (schema markup) to explicitly tell the AI, "This is a moisturizer, it contains Vitamin C, and it is cruelty-free".

• The llms.txt Standard: Forward-thinking brands are implementing llms.txt files—a "smart sitemap" specifically for AI crawlers—to guide algorithms directly to their most authoritative content.

• Question-Led Content: Content must be rewritten to answer questions directly. Instead of a generic article, brands need specific Q&A formats (e.g., "Is this safe for sensitive skin?") that AI can easily scrape and quote.
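To make the "entities" point above concrete, here is a minimal sketch of the moisturizer example expressed as schema.org `Product` markup in JSON-LD. The product and brand names are hypothetical, and representing ingredients and claims through `additionalProperty` is one possible modeling choice, not a requirement of any AI platform.

```python
import json


def product_jsonld(name: str, brand: str, category: str,
                   ingredients: list, claims: list) -> dict:
    """Build a schema.org Product description as a JSON-LD dictionary."""
    properties = [
        {"@type": "PropertyValue", "name": "Key ingredient", "value": item}
        for item in ingredients
    ] + [
        {"@type": "PropertyValue", "name": "Claim", "value": claim}
        for claim in claims
    ]
    return {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "brand": {"@type": "Brand", "name": brand},
        "category": category,
        "additionalProperty": properties,
    }


# Hypothetical product used purely for illustration.
markup = product_jsonld(
    name="Daily Glow Moisturizer",
    brand="Example Beauty Co",
    category="Moisturizer",
    ingredients=["Vitamin C"],
    claims=["Cruelty-free"],
)

# JSON-LD is usually embedded in the page inside a script tag like this.
script_tag = (
    '<script type="application/ld+json">'
    + json.dumps(markup, indent=2)
    + "</script>"
)
print(script_tag)
```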

1. The Great Digital Exodus

For two decades, the internet had a single front door: the Google search bar. That era has officially ended. A profound generational shift has occurred, with Generation Z abandoning traditional search engines in favor of conversational AI and social platforms.

Data confirms that the "Google-first" reflex is dead among digital natives. Over half of Gen Z shoppers now prefer asking AI for recommendations over using Google or Amazon. Furthermore, 53% of Gen Z users turn to platforms like TikTok, Reddit, or YouTube before Google when looking for information. For this demographic, Google is no longer the starting point of discovery; it is often merely a backup or a tool for navigating to a specific URL.

2. The Behavioral Shift: From "Searching" to "Asking"

The decline of the traditional search engine is driven by a fundamental change in how users query the web. Gen Z does not speak in "keywords"; they speak in natural language.

• Conversational Discovery: The foundational unit of discovery has shifted from the keyword to the prompt. Instead of searching for "best acne cream," a user asks, "My skin feels dull and flaky — what should I try?".

• Complexity: Search queries containing five or more words are growing 1.5 times faster than shorter queries. Gen Z expects the platform to understand context, nuance, and intent, effectively demanding a consultant rather than a directory.

• The Demand for Synthesis: This generation views the act of sifting through ten blue links as inefficient. They want the answer, not the homework. Platforms like ChatGPT and Perplexity synthesize information into a single, trusted response, compressing the traditional "awareness-consideration-conversion" funnel into a single interaction.

3. The "Zero-Click" Reality

The displacement of Google as the front door has birthed the "Zero-Click Economy." The goal of traditional SEO was to earn a click; today, that currency has collapsed because the majority of searches no longer result in a visit to a brand's website.

• The Statistic: Nearly 60% of all searches now end without a click, as users receive a satisfactory summary directly on the results page or chat interface.

• The Dead End: Users are significantly more likely to end their session after reading an AI-generated overview (26% of the time) compared to a traditional search result (16%), indicating that the AI's synthesis is perceived as the final destination.

• Commerce Displacement: This poses a massive risk for brands. If an AI assistant recommends a product and provides a "buy" link, the consumer may complete the transaction without ever seeing the brand’s marketing copy or visiting their homepage.

4. The New Gatekeepers: Trust and the "AI Shelf"

If Google is no longer the gatekeeper, who is? Trust has migrated to AI Algorithms and Social Truths.

• High Trust in Machines: Surprisingly, trust in AI advisors is skyrocketing. 79% of users now trust AI advice as much as Google search results.

• The "AI Shelf": In a physical store, visibility is determined by shelf placement. In the AI era, there is no "Page 2." An AI model typically recommends only 3–5 options. If a brand is not in that short list, it is effectively invisible.

• The Trust Stack: To appear on this "AI Shelf," brands must exist within the "Trust Stack" that LLMs rely on. This includes Community Validation (Reddit is the #1 cited source in beauty AI answers), Transactional Data (retailer reviews), and Editorial Authority.

2. The Leaderboard: AI Visibility Metrics Comparison

The brand profiles below are assessed against the following key performance indicators, drawn from brand-specific AI audits.

• ChatGPT Visibility: How often the brand appears in relevant queries.

• Share of Voice (SOV): The percentage of the conversation the brand dominates compared to competitors.

• Website AI Visibility: How well the brand's own domain is optimized for AI crawling.
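The audits do not publish their exact formulas, so the following is only a sketch of one plausible way to compute such metrics from a set of AI answers. It assumes each answer has been reduced to a ranked list of brand names with no repeats, that Mention Rate is the share of answers containing the brand, and that Share of Voice is the brand's mentions divided by all brand mentions. The brand names and data are hypothetical.

```python
from collections import Counter


def visibility_metrics(answers: list, brand: str) -> tuple:
    """Return (mention_rate, share_of_voice, average_position) for a brand.

    answers: one ranked list of brand names per AI response,
             each brand appearing at most once per list (assumed).
    """
    if not answers:
        raise ValueError("at least one answer is required")

    mentioned = [a for a in answers if brand in a]
    mention_rate = len(mentioned) / len(answers)

    # Count every brand mention across all answers.
    all_mentions = Counter(b for a in answers for b in a)
    total = sum(all_mentions.values())
    share_of_voice = all_mentions[brand] / total if total else 0.0

    # Positions are 1-based; None when the brand never appears.
    average_position = (
        sum(a.index(brand) + 1 for a in mentioned) / len(mentioned)
        if mentioned else None
    )
    return mention_rate, share_of_voice, average_position


# Hypothetical answers to three category queries.
answers = [
    ["BrandA", "BrandB", "BrandC"],
    ["BrandA", "BrandC", "BrandD"],
    ["BrandB", "BrandA", "BrandD"],
]
rate, sov, position = visibility_metrics(answers, "BrandA")
print(f"mention rate {rate:.0%}, share of voice {sov:.0%}, avg position {position:.2f}")
```

In this sample, BrandA appears in every answer (a 100% mention rate) yet holds only a third of all mentions, because each answer also lists two competitors. Under this definition, a perfect Mention Rate and a modest Share of Voice are not contradictory.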

AI Visibility WINNERS

Garnier

Garnier – The AI Visibility Contender

1. Introduction: A Strong Technical Foundation

In the race for visibility within AI-driven search, Garnier has emerged with a "Good" Overall AI Visibility Score of 62%, positioning it as a capable contender in the zero-click economy. While not dominating every category, Garnier distinguishes itself through a robust technical infrastructure and video strategy. The brand achieves a Website SEO score of 70%, reflecting a well-optimized platform that allows search engines and AI crawlers to index its core content effectively. This strong foundation stands in contrast to many competitors who struggle with basic site accessibility for AI agents.

2. The Video Advantage: YouTube AI Visibility

Garnier’s standout performance lies in its video content. The brand achieves a YouTube AI Visibility score of 70%, indicating a highly effective strategy for engaging audiences through visual media.

• Engagement: This high score suggests that Garnier’s video content is well-structured to be picked up by AI algorithms that increasingly integrate video results into answers.

• Optimization Opportunities: Despite this success, audits suggest Garnier can further leverage this strength by optimizing video metadata (specifically titles, descriptions, and tags) to better align with AI discovery trends and capture search intent around keywords like "hair dye" and "gray coverage".

3. The ChatGPT Performance Paradox

Garnier’s performance on conversational platforms reveals a complex picture of high recognition but low dominance.

• High Mention Rate: On ChatGPT, Garnier boasts a Mention Rate of 70%, meaning the brand is frequently included in relevant beauty discussions. It performs exceptionally well in brand-led queries, consistently ranking #1 for questions such as "Is Garnier good for sensitive skin?" and "Are Garnier's anti-aging products worth trying?".

• Low Share of Voice: Despite being mentioned often, Garnier commands only an 18% Share of Voice. This indicates that while the brand is present, it rarely leads the conversation or crowds out competitors. In broader, non-branded queries like "best hair care brands for dry hair," Garnier is often absent, losing ground to competitors like Moroccanoil and Olaplex.

• Comparison Strength: Garnier finds success in the "Consideration" stage of the funnel, particularly in comparison queries. For example, it secures the top spot when users ask AI to compare Garnier's micellar water to Bioderma, or its sunscreen to Neutrogena.

4. The Content and Commerce Gap

Despite its technical strengths, Garnier faces significant hurdles in content depth and transactional visibility.

• Weak Article Visibility: The brand’s Article AI Visibility score is a low 52%. Audits reveal that Garnier’s articles often lack comprehensive coverage, schema markup, and direct answers to user questions, making them less likely to be cited by AI agents as authoritative sources.

• The "Amazon Void": A critical weakness in Garnier’s AI strategy is its absence from Amazon in AI-cited sources. AI assistants frequently rely on Amazon data to verify product availability and recommend purchasable items. By lacking a detected presence here, Garnier misses out on the "shoppability" factor that drives conversion in AI-assisted user journeys.

• Missing Social Proof: The brand also lacks citations from Reddit, a key source of "social truth" for AI models. This deprives Garnier of the user-generated consensus and authentic reviews that AI algorithms prioritize when evaluating brand credibility.
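The article gap noted above (missing schema markup and direct answers) is the kind of issue that question-and-answer markup is meant to address. As a hedged sketch, the snippet below builds schema.org `FAQPage` JSON-LD from question and answer pairs. The question is a generic illustration and the answer text is a placeholder, not content from any brand's site.

```python
import json


def faq_jsonld(pairs: list) -> dict:
    """Build schema.org FAQPage JSON-LD from (question, answer) pairs."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }


# Placeholder answer: real copy should come from the brand's reviewed content.
faq = faq_jsonld([
    ("Is this safe for sensitive skin?",
     "Placeholder: replace with the brand's reviewed answer."),
])
print(json.dumps(faq, indent=2))
```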

Head & Shoulders

1. Introduction: Dominating the Algorithmic Shelf

In the emerging "zero-click economy," where AI assistants like ChatGPT and Google Gemini act as the primary gatekeepers of product discovery, legacy brands often struggle to translate their physical shelf dominance into digital visibility. Head & Shoulders, however, stands out as a notable exception. With an Overall AI Visibility Score of 67%, the brand has successfully positioned itself as a primary answer for hair care queries, outperforming many competitors in the algorithmic landscape.

While many beauty brands struggle with "invisibility"—where AI agents fail to recommend them despite their market size—Head & Shoulders has achieved a 100% Mention Rate on ChatGPT. This means that in relevant conversational queries regarding dandruff and scalp care, the AI includes the brand in the conversation every single time.

2. The Metrics of Success: How Head & Shoulders Wins

Head & Shoulders’ success is not accidental; it is built on a foundation of strong technical SEO and high brand recognition that aligns perfectly with how Large Language Models (LLMs) retrieve information.

• ChatGPT Dominance: The brand boasts an 80% Visibility Score on ChatGPT. When mentioned, it secures an impressive average position of #1.2, meaning it is almost always the first or second recommendation provided to the user.

• Website Optimization: Unlike many competitors with low technical scores, Head & Shoulders has achieved an 85% Website SEO score. This robust technical infrastructure ensures that AI crawlers can easily access, parse, and understand the brand's content, translating directly into a Website AI Visibility score of 70%.

• Problem-Solution Fit: AI agents optimize for "resolution"—they seek to solve specific problems. Head & Shoulders dominates because it owns the specific problem of "dandruff." For queries like "Is Head & Shoulders effective for dandruff control?" and "Compare Head & Shoulders to Neutrogena T/Gel," the brand consistently ranks #1, validating its status as the definitive solution in the AI's knowledge graph.

3. The "Mention Rate" vs. "Share of Voice" Paradox

While Head & Shoulders is an AI visibility winner, the audit reveals a critical nuance in its performance.

• The Perfect Mention Rate: The brand has a 100% Mention Rate, meaning it is never excluded from relevant conversations.

• The Share of Voice Gap: Despite being present in every conversation, it holds only a 34% Share of Voice (SOV). This indicates that while the AI always acknowledges Head & Shoulders, it often recommends a wide variety of competitors alongside it, such as Nizoral, Selsun Blue, and Neutrogena.

This suggests that while Head & Shoulders is the "default" answer, it is facing stiff competition from specialized or clinical alternatives that are eroding its dominance in decision-stage queries.

4. Areas for Optimization: The Shoppability Gap

To transition from a "visibility winner" to a "revenue leader" in the AI space, Head & Shoulders must address specific gaps identified in the audit:

• The Amazon Void: The audit detected no Amazon presence for Head & Shoulders in the context of AI citations. This is a critical vulnerability because AI agents often prioritize products with structured transactional data (availability, price, reviews) from major retailers to verify "shoppability". Without this data connection, the AI may recommend the product but fail to provide a direct path to purchase.

• Video Content: While the brand has a YouTube AI Visibility score of 60%, its YouTube SEO score is lower at 55%. Optimizing video titles and descriptions for problem-based queries (e.g., "scalp care routines") could help the brand capture more traffic from multimodal AI models that integrate video results.

Dermalogica

Based on the Dermalogica AI Visibility Audit, Dermalogica stands out as a significant "winner" in the landscape of AI-driven commerce, particularly regarding its technical foundation and brand authority. With an Overall AI Visibility Score of 70%, the brand demonstrates a robust ability to be found and recommended by AI assistants, though distinct opportunities for growth remain.

Here is an analysis of why Dermalogica is an AI visibility winner and where it dominates.

1. The SEO Powerhouse: A Technical Victory

Dermalogica’s primary strength lies in its exceptional technical infrastructure. The brand achieved a Website SEO score of 85%, which is classified as "Excellent".

• Why this matters: AI models prioritize content they can easily read and verify. Dermalogica’s high score indicates that its website is technically optimized (fast, crawlable, and structured), making it a reliable source of "ground truth" for AI algorithms. Unlike many competitors that struggle with "ghost content" hidden behind scripts, Dermalogica’s data is accessible to the machine.

2. Dominating the "Brand-Led" Conversation

When a consumer specifically asks about Dermalogica, the AI responds with high confidence and favorability.

• The Metrics:

◦ ChatGPT Visibility: 79% (Good).

◦ Mention Rate: 90%.

◦ Average Position: #1.

• The Winner's Edge: If a user asks a specific question like "Is Dermalogica good for sensitive skin?" or "Compare Dermalogica to Paula's Choice," the brand consistently secures the #1 position in the AI's response. This indicates that Dermalogica has successfully established high brand recognition and trust within the AI's training data for awareness and consideration stage queries.

3. Strong Video Engagement

Unlike many legacy brands that struggle with multimedia visibility, Dermalogica performs well on video platforms, which are increasingly fed into multimodal AI models.

• YouTube AI Visibility: 70%.

• Strategy: The brand successfully leverages video content for engagement, which helps capture audiences who use AI tools that integrate video tutorials and reviews into their answers.

4. The "Winner's Paradox": High Rank, Lower Reach

Despite these winning metrics, Dermalogica faces a "Share of Voice" challenge. While it ranks #1 when mentioned, its Share of Voice (SOV) is only 43%.

• The Gap: Dermalogica wins on "Brand-Led" queries (questions about Dermalogica) but loses on "Problem-First" queries (questions about acne or aging in general).

• The Evidence: In generic queries like "What are the best skincare brands for acne-prone skin?", Dermalogica is notably absent, while competitors like CeraVe and La Roche-Posay dominate the conversation. This suggests that while the AI trusts Dermalogica, it does not yet view it as the default answer for general skincare problems unless the user explicitly prompts for the brand.

5. The Frontier for Growth: Amazon and Reddit

To cement its status as the undisputed AI visibility winner, Dermalogica must address its "channel gaps."

• Amazon Absence: The audit noted a "Not Detected" status for Amazon presence in the context of AI citations. Since AI agents like Rufus rely on Amazon data for transactional verification, this limits Dermalogica's ability to close the sale in agentic commerce scenarios.

• Social Proof Gap: The brand lacks citations from Reddit. As Reddit is the #1 cited source for "Community Validation" in the AI Trust Stack, increasing organic discussion there would boost Dermalogica’s visibility in non-branded, discovery-phase queries.

AI Visibility Losers

Pureology

1. Introduction: A Critical Disconnect

In the rapidly evolving landscape of Agentic Commerce, where AI assistants act as gatekeepers between brands and consumers, Pureology currently stands as a cautionary tale. With an Overall AI Visibility Score of just 24%, the brand is classified as "Poor" and faces significant risks of becoming invisible in the zero-click economy.

While legacy reputation keeps the brand in the conversation to a degree, Pureology’s digital infrastructure is fundamentally failing to communicate with machine algorithms. The brand is suffering from self-inflicted technical wounds and a lack of strategic content optimization, leaving it vulnerable to competitors like Redken and Olaplex.

2. The Content Black Hole: 0% Visibility

The most alarming finding in the audit is Pureology’s Article AI Visibility score of 0% and Article SEO score of 2%.

• Self-Inflicted Invisibility: The audit reveals that Pureology’s article content (e.g., holiday hairstyle guides) is often blocked by security verification screens or "Access Denied" errors. This means that when AI crawlers attempt to index the brand's educational content, they are met with a digital wall rather than readable text.

• The Empty Library: Because AI agents cannot access this content, Pureology has zero authority signals in the "knowledge layer." The brand provides no structured data, no answers to user questions, and no facts for the AI to extract. Effectively, to an AI, Pureology’s educational content does not exist.
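A brand can run a basic version of this check itself. The sketch below classifies an already-fetched response as readable or blocked from a crawler's point of view. It is not the audit's method: the marker phrases, the sample responses, and the `is_readable_by_crawler` helper are all assumptions for illustration.

```python
# Phrases that often indicate a block or challenge page (assumed list).
BLOCK_MARKERS = ("access denied", "verify you are human", "security check")


def is_readable_by_crawler(status_code: int, body: str) -> bool:
    """Return True if the response looks like real content, not a block page."""
    if status_code != 200:
        # 403, 429, 503 and similar codes mean the crawler got no content.
        return False
    text = body.lower()
    return not any(marker in text for marker in BLOCK_MARKERS)


# Hypothetical responses as a crawler might receive them.
samples = {
    "guide page": (200, "<h1>Holiday hairstyle guide</h1><p>Step one...</p>"),
    "blocked page": (403, "<h1>Access Denied</h1>"),
    "challenge page": (200, "<p>Please verify you are human to continue.</p>"),
}

results = {
    name: is_readable_by_crawler(status, body)
    for name, (status, body) in samples.items()
}
print(results)
```

Note that a challenge page can return HTTP 200 and still contain no usable content, which is why the body text is checked as well as the status code.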

3. The "Ghost" Website

Pureology’s main website performance further compounds the issue, scoring only 15% in Website SEO and 10% in Website AI Visibility.

• Missing Infrastructure: The site lacks the fundamental "lingua franca" of AI: Schema Markup. Without JSON-LD structured data, AI agents struggle to interpret entities, products, and brand information clearly.

• No Guidance for Machines: The brand has not implemented an llms.txt file. This file acts as a roadmap for AI crawlers, telling them exactly what content is most important. By missing this, Pureology fails to guide AI agents to its high-value pages, leaving indexing to chance.
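A quick way to test for the schema gap described above is to check whether a page exposes any JSON-LD at all. The following sketch scans HTML for `application/ld+json` script blocks using Python's standard-library parser and reports the schema types found. The sample pages and the `schema_types` helper are hypothetical, and this is not how the audit itself was performed.

```python
import json
from html.parser import HTMLParser


class JsonLdFinder(HTMLParser):
    """Collect the parsed contents of JSON-LD script blocks in a page."""

    def __init__(self):
        super().__init__()
        self._in_jsonld = False
        self._buffer = []
        self.blocks = []

    def handle_starttag(self, tag, attrs):
        if tag == "script" and dict(attrs).get("type") == "application/ld+json":
            self._in_jsonld = True
            self._buffer = []

    def handle_data(self, data):
        if self._in_jsonld:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == "script" and self._in_jsonld:
            self._in_jsonld = False
            try:
                self.blocks.append(json.loads("".join(self._buffer)))
            except json.JSONDecodeError:
                pass  # Malformed JSON-LD is skipped, as a machine reader might do.


def schema_types(html: str) -> list:
    """Return the @type of each top-level JSON-LD object found in the HTML."""
    finder = JsonLdFinder()
    finder.feed(html)
    finder.close()
    return [b.get("@type") for b in finder.blocks if isinstance(b, dict)]


# Hypothetical pages: one with structured data, one without.
page_with_schema = (
    '<html><head><script type="application/ld+json">'
    '{"@context": "https://schema.org", "@type": "Product", "name": "Sample"}'
    "</script></head><body><p>Sample product</p></body></html>"
)
page_without_schema = "<html><body><p>Sample product</p></body></html>"

print(schema_types(page_with_schema), schema_types(page_without_schema))
```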

4. The Share of Voice Paradox

There is a deceptive bright spot in Pureology’s data: a ChatGPT Visibility score of 66% and a Mention Rate of 80%.

• Present but Passive: While the brand is frequently mentioned when specifically queried, it holds a Share of Voice (SOV) of only 18%. This indicates that while Pureology is on the roster, it rarely leads the conversation.

• The "Vegan" Gap: Despite its brand identity being rooted in vegan formulations, Pureology is invisible in high-intent queries like "What are the best brands for vegan hair care products?". Instead, competitors like Aveda and Paul Mitchell are recommended. This represents a catastrophic failure to defend the brand's core value proposition in the AI space.

5. The Commerce Gap: Amazon and Reddit

AI agents rely on a "Trust Stack" that includes transactional data and community validation. Pureology is failing in both:

• No Amazon Presence: The audit returned a "Not Detected" status for Pureology’s presence on Amazon in AI contexts. Without optimized Amazon product cards, AI agents cannot verify "shoppability" or pull real-time pricing and availability, leading them to recommend competitors who are integrated into the transactional layer.

• Missing Social Proof: The brand also lacks citations from Reddit. In the absence of community-driven discussions and authentic user testimonials feeding the AI, the brand lacks the "social truth" required to build algorithmic trust.

Estée Lauder Co.

1. Introduction: The Giant in the Shadow

While The Estée Lauder Companies (ELC) remains a titan of the physical beauty industry, recent data indicates it is failing to adapt to the new digital reality of Agentic Commerce. According to a comprehensive AI Visibility Audit, ELC holds an Overall AI Visibility Score of just 24%, classifying its performance as "Poor".

In an era where consumers ask AI agents for recommendations rather than browsing store aisles, ELC’s lack of technical optimization has rendered it virtually invisible. The brand suffers from a 0% Mention Rate and 0% Share of Voice in tested ChatGPT queries, meaning that when consumers ask generic questions about skincare or makeup, AI agents rarely, if ever, proactively recommend Estée Lauder products.

2. The Anatomy of Invisibility

The audit reveals systemic failures across the "Data" and "Content" pillars of the AI Visibility Pyramid.

• The 0% Baseline: ELC scored 0% in Website SEO and 0% in Article AI Visibility. This suggests that the brand’s digital content is structurally unreadable to Large Language Models (LLMs). The absence of an llms.txt file and effective Schema markup prevents AI crawlers from parsing the brand's authority or product details.

• The "Access Denied" Barrier: A critical technical failure identified in the audit involves content accessibility. Specific pages intended to provide advice (e.g., articles on thinning hair under the Aveda domain) returned "Access Denied" errors during crawling. If an AI bot cannot access the content, it cannot learn from it, resulting in the brand being excluded from the knowledge graph.

• The Amazon Gap: ELC lacks a detected presence on Amazon in the context of AI citations. Because AI agents heavily weigh "Transactional" data from major retailers to verify product availability and popularity, this absence severs a critical link between discovery and purchase.

3. The Subsidiary Struggle: Clinique

The visibility crisis extends to ELC's portfolio brands. Clinique, a flagship subsidiary, scored a "Poor" 28% Overall AI Visibility.

• High Recognition, Low Dominance: While Clinique has a high "Mention Rate" (it appears when specifically asked for), its Share of Voice is only 40%. This indicates that even when Clinique is part of the conversation, it is frequently overshadowed by competitors like Neutrogena and CeraVe, which AI agents recommend more enthusiastically due to their superior data structure and community validation.

• Missing from the Trust Stack: Clinique lacks citations from Reddit, a platform that serves as a primary source of "social truth" for AI models. Without this community validation, the AI views the brand as having less social proof than its competitors.

4. Losing the "Generic" War

The most alarming finding is ELC's performance in non-branded queries. AI visibility is won by answering problems (e.g., "best foundation for oily skin"), not just by having brand recognition.

• Competitive Displacement: In high-impact decision queries like "What are the best foundations?", ELC is often absent. Instead, AI agents recommend specific sub-products or competitors like Fenty Beauty and Lancôme.

• Internal Cannibalization: The audit noted instances where Aveda (an ELC brand) appeared in queries regarding hair products, but the parent company itself remained invisible, missing cross-promotional opportunities.

TRESemmé

1. Introduction: A Legacy Brand in the Digital Dark

In the rapidly evolving landscape of Agentic Commerce, where AI assistants curate consumer choices, TRESemmé serves as a cautionary case study. Despite being a household name in mass-market haircare, the brand has received an Overall AI Visibility Score of just 27%, classifying it as "Poor".

While competitors like Head & Shoulders and CeraVe have successfully engineered their digital presence to be machine-readable, TRESemmé is currently failing to translate its physical shelf dominance into the algorithmic shelf. The brand is suffering from critical technical failures and content gaps that render it largely invisible to the AI agents guiding consumer decisions.

2. The "Access Denied" Crisis: Why the AI Can't See TRESemmé

The most alarming finding in the audit is the total collapse of TRESemmé's content visibility.

• 0% Article Visibility: The brand scored 0% in both Article SEO and Article AI Visibility.

• The Technical Barrier: The audit revealed that key informational pages (e.g., FAQ pages) were returning "Access Denied" errors to crawlers. Because AI agents like ChatGPT and Google Gemini rely on crawling accessible, text-based content to learn about a brand, these errors effectively blind the AI. To the algorithm, TRESemmé’s educational content does not exist.

• The Consequences: Without accessible articles, the brand cannot answer user questions. When a consumer asks an AI, "How do I protect natural hair from heat damage?", TRESemmé’s content is not there to provide the answer, leaving the floor open for competitors who have optimized their content.
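The "Access Denied" check described above can be approximated with a small audit loop. This is a minimal sketch, not the audit's actual tooling: the function names, the example.com URLs, the simplified user-agent tokens, and the stubbed `fake_fetch` are all assumptions made for illustration. In practice `fetch_status` would issue a real HTTP request with the given user agent and return the response status code.

```python
def classify(status):
    """Map an HTTP status code to a coarse crawlability label."""
    if status == 200:
        return "readable"
    if status in (401, 403):
        return "access denied"
    if status == 404:
        return "missing"
    return "other"


def audit_access(fetch_status, urls, user_agents):
    """Return {(url, agent): label} for every URL and user-agent pair.

    fetch_status is any callable (url, agent) -> int status code, so the
    audit logic can be exercised without network access.
    """
    report = {}
    for url in urls:
        for agent in user_agents:
            report[(url, agent)] = classify(fetch_status(url, agent))
    return report


def fake_fetch(url, agent):
    """Hypothetical stub: the FAQ page rejects every non-browser agent."""
    if "faq" in url and agent != "Mozilla/5.0":
        return 403
    return 200


urls = ["https://example.com/faq", "https://example.com/products"]
agents = ["Mozilla/5.0", "GPTBot", "ClaudeBot"]  # simplified tokens
report = audit_access(fake_fetch, urls, agents)

for (url, agent), label in sorted(report.items()):
    print(f"{agent:12} {url:32} {label}")
```

A page that is "readable" for a browser user agent but "access denied" for AI crawler agents matches the failure pattern the audit describes.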

3. The ChatGPT Performance Gap: Recognized but Ignored

TRESemmé’s performance on ChatGPT illustrates a specific type of failure: high recognition, low recommendation.

• High Mention Rate (80%): The AI knows who TRESemmé is. In 80% of relevant conversations, the brand is acknowledged.

• Low Share of Voice (17%): Despite being mentioned, the brand rarely dominates the conversation. It holds only a 17% Share of Voice, meaning that in the vast majority of recommendations, the AI is talking about competitors.

• Poor Ranking Position: When TRESemmé does appear, its Average Position is #4.4. In the "zero-click" economy where users rarely look past the top three suggestions, ranking 4th or 5th is equivalent to being invisible. The brand is being overshadowed by competitors like Moroccanoil, Paul Mitchell, and Olaplex.
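To make the three metrics above concrete, here is a minimal sketch of how they could be computed from a set of AI answers. The report does not publish its formulas, so the definitions used here are assumptions: Mention Rate is the fraction of answers that name the brand, Share of Voice is the brand's mentions divided by all brand mentions, and Average Position is the mean rank in answers where the brand appears. The sample answers are invented for illustration and are not audit data.

```python
from collections import Counter


def visibility_metrics(responses, brand):
    """Compute (mention_rate, share_of_voice, avg_position) for a brand.

    responses: list of ranked brand lists, one list per AI answer.
    """
    total = len(responses)
    hits = [r for r in responses if brand in r]
    mention_rate = len(hits) / total if total else 0.0

    # Share of Voice: this brand's mentions over all brand mentions.
    counts = Counter(b for r in responses for b in r)
    all_mentions = sum(counts.values())
    share_of_voice = counts[brand] / all_mentions if all_mentions else 0.0

    # Average Position: 1-based rank, only where the brand appears.
    positions = [r.index(brand) + 1 for r in hits]
    avg_position = sum(positions) / len(positions) if positions else None
    return mention_rate, share_of_voice, avg_position


# Hypothetical answers, invented for this example.
sample = [
    ["Olaplex", "Moroccanoil", "Paul Mitchell", "TRESemmé"],
    ["Moroccanoil", "Olaplex", "Paul Mitchell", "Biolage", "TRESemmé"],
    ["Paul Mitchell", "Olaplex", "Moroccanoil"],
]
rate, sov, pos = visibility_metrics(sample, "TRESemmé")
print(f"mention rate {rate:.0%}, share of voice {sov:.0%}, avg position {pos}")
```

Under these assumed definitions, a brand can be named in most answers and still hold a small Share of Voice, because every competitor listed alongside it dilutes its share.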

4. Missing from the "Trust Stack"

To recommend a product, AI agents look for validation across a "Trust Stack" of retailers and community forums. TRESemmé has critical gaps in this infrastructure.

• The Amazon Void: The audit found TRESemmé to be "Not Detected" on Amazon in the context of AI citations. Since AI shopping agents often rely on Amazon structured data (availability, reviews, pricing) to verify a product is "shoppable," this absence prevents the AI from closing the sale.

• No Social Proof: The brand also lacked citations from Reddit. Without organic community discussion feeding the AI's training data, TRESemmé lacks the "social truth" signals that validate product efficacy to algorithms.

5. The Verdict: Losing the Generic Query

TRESemmé performs well only when the user explicitly asks about the brand (e.g., "Is TRESemmé good?"). However, it fails completely in "Problem-First" queries—the most valuable type of search where a user has a need but no brand in mind.

• The Gap: In queries like "What hair products should I use for oily scalp and dry ends?" or "Top brands for professional-quality hair products," TRESemmé is absent.

• The Replacement: Instead of TRESemmé, the AI recommends Neutrogena, Paul Mitchell, and Biolage.

3. The Battlegrounds: Comparative Analysis

A. The "Invisibility" Crisis: Estée Lauder

The most alarming finding in the dataset is the performance of The Estée Lauder Companies.

• Performance: The brand has a 0% Share of Voice and a 0% Mention Rate on ChatGPT queries tested.

• Implication: When users ask AI for "best skincare brands" or "luxury beauty," Estée Lauder is absent from the conversation. This represents a "commerce displacement risk," where the brand loses potential sales before a user ever visits a retailer. The brand's website also scored 0% for SEO and Article Visibility, effectively making it a digital ghost to AI crawlers.

B. The Paradox of Recognition (Mention Rate vs. Share of Voice)

A consistent trend across the audit is that heritage brands have high Mention Rates (they appear when asked about directly) but low Share of Voice (they are absent from generic "Best of" queries).

• Maybelline New York: While it has excellent Website SEO (85%), its ChatGPT Share of Voice is only 13%. It is notably absent from generic queries like "best drugstore mascara," where competitors like L'Oréal and CoverGirl dominate.

• Dove: Despite being a household name, Dove holds only a 17% SOV on ChatGPT. It is frequently absent from decision-stage queries like "best moisturizing lotions for dry skin," losing ground to CeraVe and Eucerin.

• Gillette: Achieving an 80% Website SEO score, Gillette still suffers from a low SOV (26%). In queries like "razor for preventing razor burn," it is overshadowed by Schick and Merkur.

C. The CeraVe Effect: The Universal Competitor

CeraVe appears as a top competitor in almost every audit, including those for Dove, Olay, Garnier, La Roche-Posay, and The Ordinary.

• Why CeraVe Wins: CeraVe has mastered the "Trust Stack". It is cited by dermatologists (Medical Verification), has massive presence on Reddit (Community Validation), and is optimized on retailers like Amazon (Transactional Layer). Even though its website AI visibility is currently low (35%), its external citation network keeps it dominant.

D. The Video Gap: YouTube vs. Website

Many brands have strong YouTube presence but fail to optimize their owned websites for text-based AI.

• La Roche-Posay: Scores 75% on YouTube SEO but only 15% on Website SEO. The brand is effective at video engagement but fails to provide the structured text data AI crawlers need to verify claims on its own site.

• Kérastase: Similarly, Kérastase has a 70% AI Visibility on YouTube but only 10% on its website. This imbalance suggests the brand is relying too heavily on video without supporting it with machine-readable website content.

4. Critical Gaps: Why Brands are Losing

A. The Amazon Black Hole:

◦ Almost every audited brand, including Clinique, Olay, Garnier, Dermalogica, and Redken, had "No Amazon Presence Detected" in the context of AI citations.

◦ Consequence: AI assistants prioritize the "Transactional Layer." Without structured data on Amazon (reviews, availability), AI agents cannot verify stock or recommend a purchase link, cutting the conversion path.

B. The "Unknown" Source Problem:

◦ For brands like Yves Rocher and Simple, a massive portion of their citations (up to 33-42%) comes from "Unknown" sources rather than authoritative media or retailer sites. This dilutes brand authority and makes AI recommendations less stable.

C. Missing "Social Truth":

◦ Brands like Head & Shoulders and Vaseline lack citations from Reddit.

◦ Why it matters: Reddit is the #1 cited source in beauty AI answers (appearing in ~40% of citations). If a brand isn't being discussed authentically on Reddit, the AI lacks the "Community Validation" required to recommend it as a "holy grail" product.

5. Strategic Recommendations: Winning the AI Shelf

1. Introduction: The Death of the "Ten Blue Links"

For twenty years, the gatekeepers of digital commerce were search engine algorithms designed to rank lists of links. The "deal" was simple: brands produced content, and Google sent traffic. That era has collapsed. We have entered the age of Agentic Commerce, where the new gatekeepers are not search engines that refer traffic, but AI agents that synthesize answers and make decisions on behalf of the consumer.

In this "zero-click economy," nearly 60% of searches now end without a click. Consumers are no longer browsing; they are delegating. They ask platforms like ChatGPT, Perplexity, or Amazon’s Rufus to "find the best moisturizer for dry skin," and the AI provides a single, synthesized recommendation. If a brand is not the answer, it is invisible.

2. The Primary Gatekeeper: The AI "Answer Engine"

The new digital shelf is not a static list of products; it is a dynamic, conversationally generated response.

• The Shift from Search to Synthesis: Traditional search engines offered a list of possibilities (the "Consideration Set"). AI agents offer a solution (the "Selection"). This compresses the marketing funnel into a single interaction.

• The "AI Shelf": Unlike a physical shelf with limited space, the AI shelf is infinite yet highly exclusive. An AI might analyze thousands of products but only recommend three. Placement here is not bought with ad spend but earned through "Generative Engine Optimization" (GEO)—the ability to be cited by the machine as the most relevant, trustworthy answer.

• Commerce Displacement: The greatest risk for brands today is "commerce displacement." An AI agent can guide a consumer from discovery to purchase without the consumer ever visiting the brand's website. If the AI doesn't recommend you, the sale is lost before the customer even starts browsing.

3. The "Trust Stack": The Hidden Arbiters of Truth

AI models do not have opinions; they have training data. To determine which brands to recommend, they rely on a hierarchy of sources known as the "Trust Stack". A brand's visibility depends entirely on its standing within these four layers:

1. Community Validation (The "Social Truth"):

◦ The Gatekeeper: Reddit and forums.

◦ Why it matters: Reddit is the #1 cited source in beauty AI answers, appearing in approximately 40% of citations. AI models crave "authentic" human discussion to verify if a product actually works. Brands like CeraVe dominate because they have massive, organic footprints in these communities. Conversely, brands absent from Reddit risk being ignored by the algorithm.

2. The Transactional Layer (The "Data Truth"):

◦ The Gatekeeper: Amazon, Sephora, Ulta.

◦ Why it matters: AI agents like Amazon’s Rufus rely on structured retailer data (reviews, ingredient lists, stock status) to verify product viability. If a brand has "No Amazon Presence" (a common failure for luxury brands like Estée Lauder in AI audits), the AI cannot validate the product for purchase recommendations.

3. Editorial Authority (The "Expert Truth"):

◦ The Gatekeeper: High-authority publications (Allure, Vogue, Byrdie).

◦ Why it matters: AI models prioritize citations from established media outlets to ground their answers in "fact". Being listed in an "Allure Best of Beauty" article is no longer just PR; it is data ingestion for the algorithm.

4. Medical Verification (The "Scientific Truth"):

◦ The Gatekeeper: Healthline, DermNet, Clinical Studies.

◦ Why it matters: For skincare and wellness, AI filters for safety and efficacy using medical sources. Brands that publish science-backed whitepapers or are cited in dermatology journals gain a "trust halo" that prevents hallucinations and boosts recommendation frequency.
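One way to see where a brand stands across these four layers is to bucket the URLs an AI answer cites by layer and compute each layer's share. The sketch below is illustrative only: the domain-to-layer mapping is an assumption based on the gatekeepers named above, the exact domains are guesses, and the sample citation URLs are invented.

```python
from collections import Counter
from urllib.parse import urlparse

# Assumed mapping from domain to Trust Stack layer (illustrative only).
TRUST_STACK = {
    "reddit.com": "community",
    "amazon.com": "transactional",
    "sephora.com": "transactional",
    "ulta.com": "transactional",
    "allure.com": "editorial",
    "vogue.com": "editorial",
    "byrdie.com": "editorial",
    "healthline.com": "medical",
}


def layer_for(url):
    """Return the Trust Stack layer for a cited URL, or 'unknown'."""
    host = (urlparse(url).hostname or "").lower()
    for domain, layer in TRUST_STACK.items():
        # Match the bare domain and any subdomain (www., old., etc.).
        if host == domain or host.endswith("." + domain):
            return layer
    return "unknown"


def citation_shares(urls):
    """Return {layer: fraction of citations} for a list of cited URLs."""
    counts = Counter(layer_for(u) for u in urls)
    total = sum(counts.values())
    return {layer: n / total for layer, n in counts.items()} if total else {}


# Hypothetical citations, invented for this example.
sample_citations = [
    "https://www.reddit.com/r/example/comments/1",
    "https://old.reddit.com/r/example/comments/2",
    "https://www.sephora.com/product/example",
    "https://www.allure.com/story/example",
    "https://blog.example.net/post",
]
shares = citation_shares(sample_citations)
for layer, share in sorted(shares.items()):
    print(f"{layer:14} {share:.0%}")
```

A large "unknown" share in such a breakdown corresponds to the "Unknown" source problem described earlier in the report.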

4. The Technical Bouncers: llms.txt and Schema

Even if a brand is popular, it may be locked out by the new gatekeepers if it does not speak their language.

• The New "Robots.txt": The llms.txt file is emerging as the standard for proactively guiding AI crawlers. It acts as a "smart sitemap," telling AI agents exactly which content is most authoritative and how to interpret it. Brands without this are leaving their AI representation to chance.

• Schema Markup: This is the "barcode" of the AI web. Brands must use JSON-LD schema to explicitly tag ingredients, prices, and stock levels. Without this structured data, an AI sees unstructured text rather than a verified product entity.

1. Introduction: The Algorithmic Jury

In the era of AI-driven discovery, the mechanism of brand evaluation has shifted from keyword relevance to algorithmic trust. When a consumer asks ChatGPT, "What is the best serum for sensitive skin?", the AI does not merely look for the words "best serum"; it acts as a jury, weighing evidence from across the internet to determine which brand is credible enough to recommend.

AI models do not have feelings or intuition; they have metrics. They determine credibility by triangulating data from specific, high-authority sources, creating a "Trust Stack" that separates verified solutions from marketing noise. For brands, visibility is no longer about buying ads—it is about programming trust into the digital ecosystem so that Large Language Models (LLMs) view them as the definitive answer.

2. The "Trust Stack": The Hierarchy of Credibility

AI models do not treat all information equally. Analysis of millions of AI-generated answers reveals a specific hierarchy of sources that LLMs rely on to verify claims. A brand must exist within these layers to be deemed credible.

• Layer 1: Community Validation (The "Social Truth"): At the base of the stack is the voice of real users. Reddit has effectively become the "catch-all source of truth" for beauty AI, appearing in approximately 40% of ChatGPT’s citations. If a brand claims to be "holy grail" status but is absent from Reddit threads or has poor sentiment there, the AI views the claim with skepticism.

• Layer 2: The Transactional Layer (Retail Reality): AI agents cross-reference claims against retailer data (e.g., Sephora, Ulta, Amazon) to verify existence, price, and availability. A massive portion of AI citations comes from these platforms because they contain structured data and verified purchase reviews. Brands absent from Amazon often fail to appear in AI recommendations because the "transactional proof" is missing.

• Layer 3: Editorial Authority (Expert Curation): High-authority publications like Allure, Byrdie, and Cosmopolitan serve as primary training data for LLMs. Being cited in a "Best of" list on these domains is a powerful trust signal that overrides brand-owned marketing copy.

• Layer 4: Medical Verification (The Science): At the top of the stack are scientific and medical references (e.g., Healthline, dermatology journals). For skincare, AI prioritizes products backed by clinical data found on these sites.

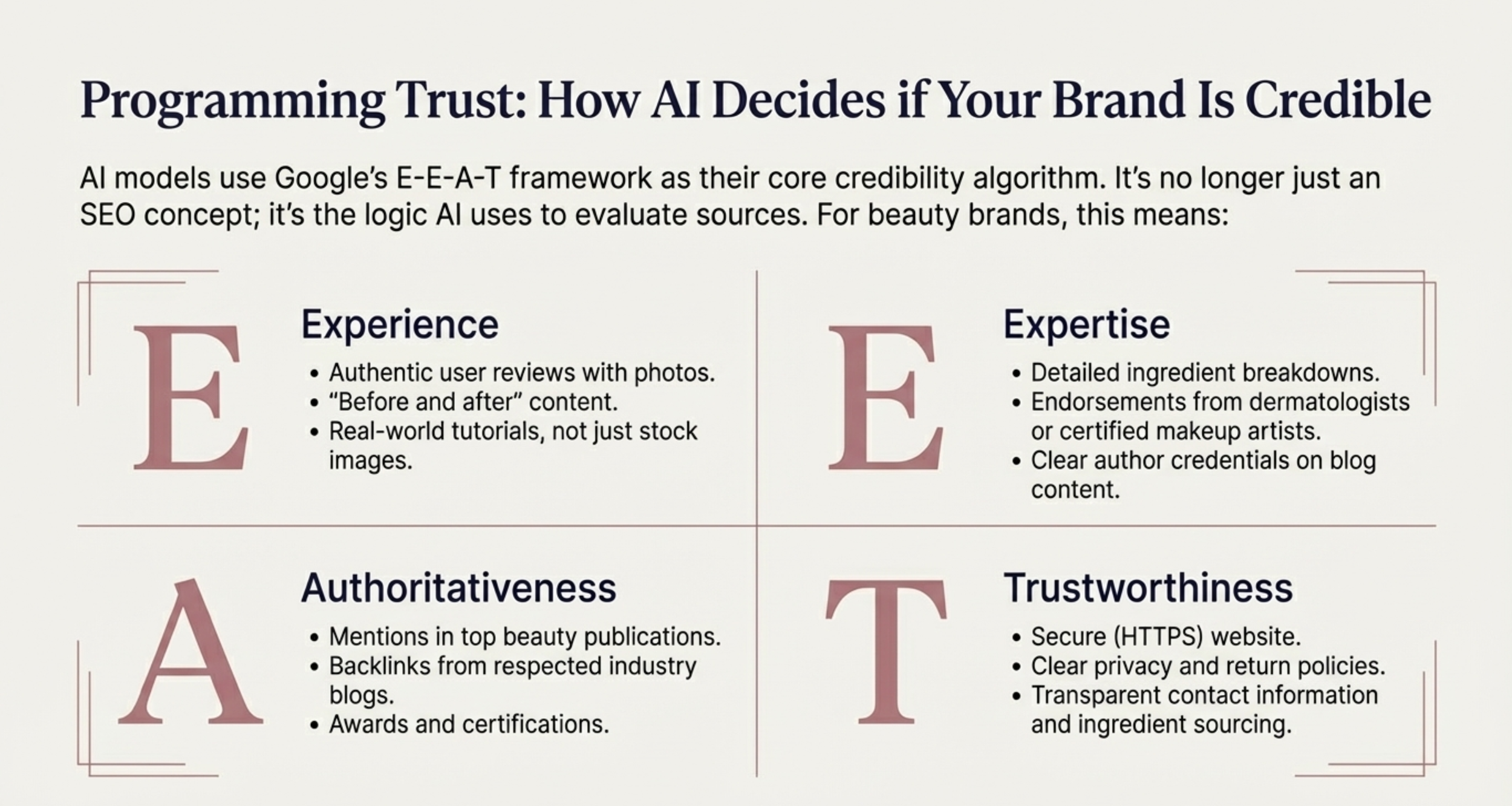

3. The Language of Trust: E-E-A-T and Structured Data

AI models utilize Google’s E-E-A-T framework (Experience, Expertise, Authoritativeness, and Trustworthiness) as a foundational algorithm for evaluating quality. To "program" this trust, brands must translate their authority into machine-readable formats.

A. Structured Data as "Ground Truth"

AI crawlers struggle with vague marketing language. They prefer structured data (Schema markup) that explicitly labels information as fact.

• The Mechanism: By implementing JSON-LD schema, a brand can explicitly tell the AI, "This product contains 10% Niacinamide" or "This product is Cruelty-Free". This turns a webpage into a database of facts that the AI can confidently quote.

• The Consequence: Brands that fail to structure their data risk having their claims ignored. For example, if a product is "vegan" but this is hidden in a PDF or image rather than structured text, the AI may fail to recognize the attribute.
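The mechanism above can be sketched as a small script that emits a JSON-LD block for a product page. This is an assumption-laden illustration rather than a prescribed markup: the product and brand names are placeholders, and expressing claims such as concentration, cruelty-free, and vegan through schema.org's generic `additionalProperty` / `PropertyValue` pair is one possible modeling choice, not something the audit specifies.

```python
import json

# Placeholder product; names and values are invented for illustration.
product = {
    "@context": "https://schema.org",
    "@type": "Product",
    "name": "Example Niacinamide Serum",
    "brand": {"@type": "Brand", "name": "Example Brand"},
    # Generic name/value pairs for attributes without a dedicated property.
    "additionalProperty": [
        {
            "@type": "PropertyValue",
            "name": "Niacinamide concentration",
            "value": 10,
            "unitText": "percent",
        },
        {"@type": "PropertyValue", "name": "Cruelty-free", "value": True},
        {"@type": "PropertyValue", "name": "Vegan", "value": True},
    ],
}

# Wrap the JSON in the script tag that would be placed in the page HTML.
snippet = (
    '<script type="application/ld+json">\n'
    + json.dumps(product, indent=2)
    + "\n</script>"
)
print(snippet)
```

Because the attributes live in text-based markup rather than a PDF or image, a crawler that reads only raw HTML can still pick them up.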

B. Transparency as a Trust Signal

Modern AI models are tuned to prioritize transparency. Brands that openly disclose full ingredient lists, sourcing details, and clinical study parameters are favored over those using "black box" proprietary blends.

• Case Study: The Ordinary: The brand’s radical transparency regarding ingredient percentages (e.g., "Niacinamide 10% + Zinc 1%") aligns perfectly with how AI processes information. Because the product name itself contains the data, The Ordinary dominates AI conversations about ingredients.

• Case Study: Paula’s Choice: By publishing an extensive "Ingredient Dictionary" (Beautypedia) that cites scientific research, Paula's Choice has built an "authority moat." AI systems frequently cite this dictionary as a source of truth, effectively allowing the brand to define the rules of skincare credibility.

4. The Visibility Paradox: Why Big Brands Fail the Trust Test

A critical finding in AI audits is the "Visibility Paradox." Huge heritage brands often have high Mention Rates (they are known) but low Share of Voice (they are not recommended for specific problems).

• The Estée Lauder Example: Despite being a market giant, Estée Lauder Companies scored 0% Share of Voice in some AI audits. This is often because they rely on legacy prestige marketing rather than the "Trust Stack" of Reddit validation and structured data that AI utilizes.

• The CeraVe Effect: Conversely, CeraVe dominates AI recommendations because it has mastered the Trust Stack. It has massive Reddit presence (Community), clear ingredient lists (Transparency), and is widely cited by dermatologists (Medical Verification).

5. Action Plan: How to Engineer Credibility

To ensure an AI decides your brand is credible, you must move from Search Engine Optimization (SEO) to Generative Engine Optimization (GEO).

1. Publish a "Smart Sitemap" (llms.txt): Create a text file specifically for AI crawlers that highlights your most authoritative content, such as ingredient glossaries and clinical study results.

2. Validate via Third Parties: Stop relying on your own website to tell your story. Aggressively seed products to dermatologists and Reddit communities to generate the external citations that AI uses to cross-verify your claims.

3. Structure Your Claims: Do not just say "Eco-Friendly." Use structured data to link your product to specific certifications (e.g., Leaping Bunny), making the claim machine-verifiable.

4. Answer, Don't Advertise: Rewrite content to directly answer user questions (e.g., "Is retinol safe for sensitive skin?") in a Q&A format. AI prioritizes direct, factual answers over marketing fluff.

1. Introduction: The Tower of Babel

In the traditional web economy, content was designed for human eyes. Layout, typography, and visual hierarchy helped users distinguish a product price from a phone number. However, in the age of Agentic Commerce, the primary customer is no longer a human browsing a page, but a Large Language Model (LLM) parsing code.

For these AI agents, the web is a chaotic stream of unstructured HTML. Without a translator, an AI crawler sees text but lacks context. As noted in the AI Visibility Blueprint, structured data (specifically Schema markup) has become the "lingua franca" of this new era, a universal language that translates human-readable content into machine-readable facts.

2. From Inference to Explicit Knowledge

The fundamental difference between how a human and a machine reads a website lies in ambiguity vs. certainty.

• The Old Way (Inference): Without structured data, an AI crawler must use complex Natural Language Processing (NLP) to guess that a string of numbers ($45.00) is a price, or that a paragraph of text is a product description. This process is computationally expensive and prone to error.

• The New Way (Annotation): Structured data acts as a set of explicit annotations embedded in the code (typically using JSON-LD). It tells the AI definitively: "This text is the product name," "This number is the price," and "This ingredient list is INCI-compliant".

This shift transforms a website from a "document that requires interpretation" into a "structured, actionable data source". For AI models whose primary directive is to avoid "hallucination" (making up facts), structured data provides the "ground truth" they desperately need to confidently answer user queries.
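The contrast between inference and annotation can be shown with a toy page. In this sketch, a regular expression has to guess which dollar amount is the price, while a JSON-LD block states it outright. The page content, the class name `JsonLdExtractor`, and the regex are all illustrative assumptions; real crawlers are far more sophisticated than a single pattern match.

```python
import json
import re
from html.parser import HTMLParser


class JsonLdExtractor(HTMLParser):
    """Collect every <script type="application/ld+json"> block as a dict."""

    def __init__(self):
        super().__init__()
        self._in_jsonld = False
        self._buffer = []
        self.items = []

    def handle_starttag(self, tag, attrs):
        if tag == "script" and dict(attrs).get("type") == "application/ld+json":
            self._in_jsonld = True
            self._buffer = []

    def handle_data(self, data):
        if self._in_jsonld:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == "script" and self._in_jsonld:
            self.items.append(json.loads("".join(self._buffer)))
            self._in_jsonld = False


# Invented page: two dollar amounts in prose, one explicit price in JSON-LD.
PAGE = """
<html><body>
<h1>Hydrating Serum</h1>
<p>Was $60.00, now $45.00. Call 555-0100 for help.</p>
<script type="application/ld+json">
{"@context": "https://schema.org", "@type": "Product",
 "name": "Hydrating Serum",
 "offers": {"@type": "Offer", "price": "45.00", "priceCurrency": "USD"}}
</script>
</body></html>
"""

# Inference: the pattern finds two candidates and cannot tell which is current.
guesses = re.findall(r"\$(\d+\.\d{2})", PAGE)

# Annotation: the structured block names the price directly.
extractor = JsonLdExtractor()
extractor.feed(PAGE)
explicit_price = extractor.items[0]["offers"]["price"]

print("guessed from text:", guesses)
print("stated in JSON-LD:", explicit_price)
```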

3. The Vocabulary of Visibility: Essential Schemas

To speak "machine," brands must implement specific schemas that map to the queries AI agents try to answer. The AI Visibility Blueprint identifies several non-negotiable schemas for e-commerce:

• Product & Offer Schema: This is the digital identity card. Crucially, it must include Availability (InStock/OutOfStock). An AI agent will not recommend a product if it cannot verify stock status through structured data, leading to what audits call the "Amazon Black Hole", where brands are invisible because the AI cannot confirm the "Transactional Layer".

• Ingredient Schema: In the beauty industry, transparency is currency. Using structured data to list ingredients (INCI names) and their benefits allows AI to answer complex queries like "Find me a serum with Niacinamide for redness". Without this markup, the AI may miss the connection between the ingredient and its benefit.

• FAQPage Schema: This structures content into Question/Answer pairs. When a user asks an AI a specific question (e.g., "Is this safe for pregnancy?"), the model can extract the pre-formatted answer directly from the schema, often citing the brand as the source.
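As an illustration of the FAQPage structure described above, the following sketch builds the markup from a list of question/answer pairs. The helper name `faq_jsonld` is hypothetical, and the answer strings are deliberate placeholders: real answers would come from the brand's reviewed content.

```python
import json


def faq_jsonld(pairs):
    """Build a schema.org FAQPage dict from (question, answer) pairs."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }


# Placeholder answers; the questions echo examples used in this report.
faq = faq_jsonld(
    [
        ("Is this safe for pregnancy?", "Placeholder answer from the brand."),
        ("Is this safe for sensitive skin?", "Placeholder answer from the brand."),
    ]
)
print(json.dumps(faq, indent=2))
```

Generating the markup from the same source that renders the visible FAQ keeps the structured answers and the on-page text from drifting apart.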

4. The llms.txt Revolution: The New Sitemap

While robots.txt has long told crawlers where they cannot go, a new standard called llms.txt tells AI agents where they should go.

• The Smart Guide: llms.txt acts as a "proactive playbook" or a "smart sitemap" specifically for LLMs. It highlights the most context-rich, authoritative files on a website (e.g., definitive buying guides, ingredient glossaries, API documentation).

• Computational Efficiency: By directing AI crawlers straight to high-value content, brands reduce the computational cost for the AI to "understand" them. This increases the likelihood that the brand's preferred content is used to generate answers.

• Strategic Hedge: While Google has been slow to adopt llms.txt (as it relies on its proprietary indexing), other major AI players like Anthropic are embracing it. Implementing this file is a strategic move to secure a first-mover advantage in the non-Google AI ecosystem.
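For a sense of what such a file might contain, here is a minimal sketch that generates one. It assumes the layout used by the public llms.txt proposal (a title heading, a short blockquote summary, then sections of annotated links); the site name, section names, and example.com URLs are invented placeholders, and `build_llms_txt` is a hypothetical helper.

```python
def build_llms_txt(site_name, summary, sections):
    """Render an llms.txt document.

    sections: {heading: [(title, url, note), ...]} in display order.
    """
    lines = [f"# {site_name}", "", f"> {summary}", ""]
    for heading, links in sections.items():
        lines.append(f"## {heading}")
        lines.append("")
        for title, url, note in links:
            lines.append(f"- [{title}]({url}): {note}")
        lines.append("")
    # End the file with exactly one trailing newline.
    return "\n".join(lines).rstrip() + "\n"


# Placeholder content; none of these pages are real.
llms_txt = build_llms_txt(
    "Example Beauty",
    "Skincare brand publishing ingredient references and buying guides.",
    {
        "Guides": [
            (
                "Serum buying guide",
                "https://www.example.com/guides/serums.md",
                "How to choose a serum by skin concern",
            ),
        ],
        "Reference": [
            (
                "Ingredient glossary",
                "https://www.example.com/ingredients.md",
                "Definitions and usage notes for each ingredient",
            ),
        ],
    },
)
print(llms_txt)
```

The output would be served as a plain-text file from the site root, which is the location the proposal suggests.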

5. Trust as Code: E-E-A-T

Structured data is also the mechanism by which brands prove their E-E-A-T (Experience, Expertise, Authoritativeness, and Trustworthiness) to algorithms.

• Authoritativeness: Using Organization schema to link a website to its social profiles, founder bios, and parent companies (e.g., linking CeraVe to L'Oréal) establishes entity authority.

• Medical Verification: For skincare, linking claims to clinical studies via structured citations allows AI to verify efficacy. This creates a "trust halo" that prevents the AI from flagging the content as marketing fluff.
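The entity-linking idea under Authoritativeness can be sketched with schema.org's Organization type, using its `sameAs`, `founder`, and `parentOrganization` properties. Every name and URL below is a placeholder chosen for illustration; a real implementation would list the brand's actual profiles and parent company.

```python
import json

# Placeholder entity; all names and URLs are invented for illustration.
organization = {
    "@context": "https://schema.org",
    "@type": "Organization",
    "name": "Example Skincare",
    "url": "https://www.example.com",
    # Official profiles that identify the same entity elsewhere on the web.
    "sameAs": [
        "https://www.instagram.com/example",
        "https://www.youtube.com/@example",
    ],
    "founder": {"@type": "Person", "name": "Jane Doe"},
    # Link from the brand to the company that owns it.
    "parentOrganization": {
        "@type": "Organization",
        "name": "Example Parent Group",
        "url": "https://www.example.org",
    },
}

org_jsonld = json.dumps(organization, indent=2)
print(org_jsonld)
```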

1. Introduction: The Hierarchy of Algorithmic Needs

In the emerging era of "Agentic Commerce," the traditional SEO playbook—built on keywords and backlinks—is becoming obsolete. A new framework has emerged to guide brands through the shift from "search" (finding links) to "synthesis" (getting answers). This framework is the AI Visibility Pyramid.

Just as Maslow’s hierarchy outlines human needs, the AI Visibility Pyramid outlines the "hierarchy of needs" for Large Language Models (LLMs). To be recommended by an AI agent like ChatGPT, Google Gemini, or Amazon’s Rufus, a brand must build a foundation of technical access, translate its data for machines, validate its authority, and finally, deliver content formatted for agents.

Level 1: The Foundation – Technical SEO & Site Performance

The "Access" Layer

At the base of the pyramid lies the technical infrastructure required for an AI to even perceive a brand's existence.

• The Challenge: Traditional web crawlers (like Googlebot) render JavaScript to see a page. However, many AI crawlers (like OpenAI’s GPTBot or Anthropic’s ClaudeBot) often prioritize raw HTML and text extraction to save computational power. If a site relies heavily on client-side rendering, it may be invisible to AI.

• The New Standard (llms.txt): Beyond the traditional robots.txt (which tells bots what not to do), the new framework demands a llms.txt file. This acts as a "smart sitemap" specifically for AI, proactively guiding LLMs to a brand's most important, authoritative, and context-rich content. It serves as a curated guide, reducing the computational cost for the AI to find relevant information.

Level 2: Rich Structured Data (Schema Markup)

The "Translation" Layer

Once an AI accesses a site, it must understand what it is seeing without ambiguity. Structured data is the "lingua franca" of the AI era.

• From Text to Truth: An AI sees a block of text; structured data (JSON-LD) tells the AI, "This is a product, this is its price, and this is an ingredient list." This transforms a webpage into a machine-readable knowledge graph.

• Preventing Hallucinations: AI models are prone to making up facts (hallucinations). By explicitly marking up data (e.g., using Offer Schema to tag stock status or Ingredient Schema for skincare), brands provide "ground truth" that minimizes the risk of the AI giving incorrect information.

• Critical Schemas for Beauty/Retail:

◦ Product Schema: The digital identity card (Name, SKU, Description).

◦ Offer Schema: The most critical for sales—verifying price and availability. If an AI cannot confirm a product is "In Stock," it will not recommend it.
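A minimal sketch of the Product and Offer pairing follows. The helper names, the product details, and the price are hypothetical; the availability values are the schema.org `InStock` and `OutOfStock` terms. The `has_verifiable_stock` check only illustrates the kind of test an agent could run, not how any particular AI system actually decides.

```python
import json

IN_STOCK = "https://schema.org/InStock"
OUT_OF_STOCK = "https://schema.org/OutOfStock"


def product_with_offer(name, sku, description, price, currency, in_stock):
    """Build a schema.org Product with a nested Offer."""
    return {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "sku": sku,
        "description": description,
        "offers": {
            "@type": "Offer",
            "price": f"{price:.2f}",
            "priceCurrency": currency,
            "availability": IN_STOCK if in_stock else OUT_OF_STOCK,
        },
    }


def has_verifiable_stock(product):
    """True only when the markup explicitly states the item is in stock."""
    return product.get("offers", {}).get("availability") == IN_STOCK


# Placeholder product, invented for illustration.
serum = product_with_offer(
    "Example Hydrating Serum", "EX-001", "Placeholder description.",
    45, "USD", in_stock=True,
)
print(json.dumps(serum, indent=2))
print("stock verifiable:", has_verifiable_stock(serum))
```

A Product block with no `offers` at all fails the same check, which mirrors the report's point that missing availability data is treated like missing stock.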

Level 3: E-E-A-T & Trust Signals

The "Validation" Layer

An AI might understand a product, but it needs to know if it should trust it. This layer focuses on E-E-A-T (Experience, Expertise, Authoritativeness, and Trustworthiness).

• The Trust Stack: AI models do not evaluate brands in a vacuum; they triangulate data from a "Trust Stack" of sources.

◦ Community Validation: Citations from Reddit and forums serve as "social truth".

◦ Editorial Authority: Mentions in high-authority publications (e.g., Allure, Vogue) act as expert verification.

◦ Medical/Scientific Verification: For beauty brands, this means citations in dermatological journals or ingredient databases that confirm efficacy and safety.

• The Citation Economy: In the AI era, getting a brand mentioned by these credible sources is the new link-building. A brand must be present where the AI looks for truth to be considered a viable recommendation.

Level 4: Agent-Ready Content (The Apex)

The "Engagement" Layer

At the top of the pyramid is content designed specifically for synthesis—content that answers questions rather than targets keywords.

• Optimizing for Questions: Users now ask complex, natural language questions (e.g., "What is the best routine for dry, sensitive skin?"). Brands must create "answer-ready" content formatted with clear headings, bullet points, and Q&A structures that AI can easily scrape and summarize.

• Smart Product Cards: This involves "fusing" knowledge and commerce. Instead of a blog post with a text link, brands must embed "Smart Product Cards" that combine the answer (knowledge) with the product (solution) in a structured format. This makes the product part of the answer itself, increasing the likelihood of it being featured in the AI's response.

• Commerce Displacement: The goal at this level is to prevent "commerce displacement"—where an AI answers the user's need without sending them to the brand's site. By providing agent-ready content, the brand ensures it is the answer provided.

1. Introduction: The Infrastructure of Visibility

In the era of Agentic Commerce, marketing is splitting into two distinct approaches: one that speaks to humans (emotion, storytelling) and one that speaks to machines (data, structure). While brands have spent decades optimizing for the former, the infrastructure required for the latter is often missing or broken. To be visible to AI agents like ChatGPT, Google Gemini, and Amazon’s Rufus, brands must move beyond traditional SEO and build a foundation based on machine-readability.

This report outlines the three critical components of this foundation: Structured Data, Technical Performance, and the specific challenges posed by the Shopify Gap.

2. The Data Foundation: Speaking "Machine"

AI models do not "read" websites like humans; they parse code to extract entities and facts. If a brand's data is unstructured, the AI must guess its meaning, which increases the risk of hallucination or omission. To secure visibility, brands must translate their content into a machine-readable language.

• The Lingua Franca (Schema Markup): Structured data (specifically JSON-LD Schema) is the universal language of AI visibility. It transforms a webpage from a document requiring interpretation into a database of facts.

◦ Product Schema: Essential for defining price, availability (InStock/OutOfStock), and SKU. AI agents will not recommend a product if they cannot verify it is purchasable.

◦ Ingredient Schema: For beauty brands, this is critical. It allows AI to answer queries like "Find a serum with Niacinamide" by explicitly linking ingredients to benefits.

◦ FAQ Schema: Structures content into Question/Answer pairs, allowing AI to extract direct answers for queries like "Is this safe for sensitive skin?".

• Data Consistency: AI "answer engines" cross-verify facts across the web. If a product’s ingredients differ between a brand’s website and Amazon, the AI lowers its trust score for that entity. Brands must ensure data integrity across their Direct-to-Consumer (DTC) sites and retailer feeds.
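As a rough illustration of the Product markup described above, the sketch below builds a JSON-LD block in Python. The product name, SKU, price, and the helper `build_product_jsonld` are hypothetical. Expressing ingredients through the general-purpose `additionalProperty` field is an assumption of this sketch, not a convention prescribed by this report.

```python
import json

def build_product_jsonld(name, sku, price, currency, in_stock, ingredients):
    """Return a schema.org Product description as a JSON-LD string."""
    availability = (
        "https://schema.org/InStock" if in_stock else "https://schema.org/OutOfStock"
    )
    data = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "sku": sku,
        "offers": {
            "@type": "Offer",
            "price": f"{price:.2f}",
            "priceCurrency": currency,
            "availability": availability,
        },
        # Assumption: ingredients are modelled as generic PropertyValue entries.
        "additionalProperty": [
            {"@type": "PropertyValue", "name": "ingredient", "value": ingredient}
            for ingredient in ingredients
        ],
    }
    return json.dumps(data, indent=2)

# Hypothetical product used only for illustration.
markup = build_product_jsonld(
    "Example Niacinamide Serum", "SERUM-001", 12.5, "USD", True,
    ["Niacinamide", "Zinc PCA"],
)
print(markup)
```

The resulting string would typically be embedded in the page inside a `<script type="application/ld+json">` element, and the same record can feed retailer listings so that the facts stay consistent across channels.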

3. Performance & Crawlability: The New Gatekeepers

The behavior of AI crawlers (like OpenAI’s GPTBot or Anthropic’s ClaudeBot) differs fundamentally from traditional search bots like Googlebot. They are not indexing links; they are harvesting knowledge.

• The JavaScript Barrier: While Googlebot renders JavaScript efficiently, many AI crawlers primarily parse raw HTML to save computational costs. If critical content—such as ingredients or reviews—is loaded dynamically via JavaScript, it may be invisible to AI agents. Brands must ensure server-side rendering for essential data.

• The llms.txt Standard: A proposed technical standard, the llms.txt file, has emerged. This acts as a "smart sitemap" specifically for AI, curating high-value content (e.g., buying guides, ingredient glossaries) and directing AI models to the most authoritative information. Unlike robots.txt, which restricts access, llms.txt proactively guides the AI.
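One way to approximate what a crawler that does not execute JavaScript would see is to inspect the raw HTML alone. The sketch below uses Python's standard `html.parser` module to do this. The helper `missing_from_raw_html` and the sample page are hypothetical, and the sketch does not reproduce how any particular AI crawler behaves.

```python
from html.parser import HTMLParser

class TextExtractor(HTMLParser):
    """Collect text from raw HTML, skipping the bodies of script and style tags."""

    def __init__(self):
        super().__init__()
        self._skip_depth = 0
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        if self._skip_depth == 0:
            self.chunks.append(data)

def missing_from_raw_html(html, required_terms):
    """Return the required terms that do not appear in the static page text."""
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    text = " ".join(parser.chunks).lower()
    return [term for term in required_terms if term.lower() not in text]

# Hypothetical page: the ingredient is only injected by JavaScript at runtime.
raw_html = """
<html><body>
  <h1>Example Serum</h1>
  <div id="ingredients"></div>
  <script>document.getElementById("ingredients").textContent = "Niacinamide";</script>
</body></html>
"""
missing = missing_from_raw_html(raw_html, ["Example Serum", "Niacinamide"])
print(missing)  # ['Niacinamide']
```

Any term reported as missing is a candidate for server-side rendering.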

4. The Shopify Gap: A Strategic Paradox

For the millions of beauty brands built on Shopify, there is a critical disconnect known as the "Shopify Gap." While Shopify has partnered with OpenAI at a platform level, individual merchants often face technical architectures that are misaligned with AI visibility requirements.

A. The Technical Hurdles

• No Native llms.txt: Shopify’s architecture does not natively allow merchants to upload or host an llms.txt file at the root domain level. This effectively cuts off Shopify merchants from proactively guiding non-Google AI systems.

• robots.txt Rigidity: Historically, Shopify merchants had no control over their robots.txt file. While this has improved, editing it requires working in Liquid code (the robots.txt.liquid theme template), making it difficult for brands to granularly manage AI crawlers (e.g., allowing OAI-SearchBot while blocking training bots).

• Schema Fragmentation: Most Shopify themes rely on incomplete or buggy schema implementations. Essential properties like "Offer availability" or detailed "Review" markup are frequently missing, which can render products invisible to AI shopping agents.
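The granular crawler control mentioned above can be sketched with plain robots.txt directives, checked here with Python's standard `urllib.robotparser`. The rule set, the `example.com` URL, and the helper `is_allowed` are illustrative assumptions. The sketch covers only the directives themselves, not the Liquid templating a Shopify store would need to emit them.

```python
from urllib.robotparser import RobotFileParser

# Illustrative policy: allow the search crawler, block the training crawler.
ROBOTS_TXT = """\
User-agent: OAI-SearchBot
Allow: /

User-agent: GPTBot
Disallow: /

User-agent: *
Allow: /
"""

def is_allowed(robots_txt, user_agent, url):
    """Report whether the given user agent may fetch the URL under these rules."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)

page = "https://example.com/products/item"
search_ok = is_allowed(ROBOTS_TXT, "OAI-SearchBot", page)
training_ok = is_allowed(ROBOTS_TXT, "GPTBot", page)
print(search_ok, training_ok)  # True False
```

Running a check like this against the store's published robots.txt is a quick way to confirm that a theme change has not silently blocked a crawler the brand wants to admit.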

B. The Duplicate Content Trap

Shopify’s rigid URL structure often creates multiple URLs for the same product (e.g., /products/item vs. /collections/name/products/item). While canonical tags help Google, they can confuse AI models trying to build a single "knowledge graph" entry for a product, diluting the brand's authority signals.
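A minimal sketch of collapsing the two URL patterns above into a single canonical form follows. The function name `canonical_product_url` is hypothetical, and dropping the query string and fragment is an assumption of this sketch that would need review against a store's actual URL scheme.

```python
import re
from urllib.parse import urlsplit, urlunsplit

# Matches /collections/<name>/products/<item> and captures the /products/<item> part.
_COLLECTION_PRODUCT = re.compile(r"^/collections/[^/]+(/products/[^/]+)/?$")

def canonical_product_url(url):
    """Map collection-scoped product URLs onto the plain /products/<item> URL."""
    parts = urlsplit(url)
    match = _COLLECTION_PRODUCT.match(parts.path)
    if match:
        path = match.group(1)
    else:
        path = parts.path.rstrip("/") or "/"
    # Assumption: query strings and fragments do not identify distinct products.
    return urlunsplit((parts.scheme, parts.netloc, path, "", ""))

a = canonical_product_url("https://example.com/collections/serums/products/item?variant=1")
b = canonical_product_url("https://example.com/products/item")
print(a == b)  # True
```

Using one canonical URL consistently in structured data, internal links, and feeds gives every system the same identifier for the product.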

5. Closing the Gap: The Solution Framework

To overcome these platform limitations and build a robust foundation, brands must adopt an AI Activation strategy rather than passive monitoring.

• Automated Infrastructure: Brands need tools that dynamically generate and host llms.txt files and optimized robots.txt directives, bypassing platform limitations.

• Smart Product Cards: Moving beyond simple links, brands should embed "Smart Product Cards" into their blog content. This fuses knowledge (e.g., "How to fix dry skin") with commerce (the specific product solution) in a format AI agents prefer to cite.

• Knowledge Graph Construction: Brands must organize their data into a knowledge graph that links products, ingredients, benefits, and skin concerns. This allows AI to "reason" that a specific moisturizer is the correct answer for "dry, sensitive skin".
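The knowledge graph idea can be sketched as a small set of subject-relation-object links. Everything in the example below is hypothetical: the class, the product names, and the ingredient-to-concern links are placeholders rather than dermatological claims. For brevity the sketch links ingredients directly to skin concerns instead of modelling benefits as a separate node type.

```python
from collections import defaultdict

class KnowledgeGraph:
    """Tiny in-memory graph keyed by (subject, relation)."""

    def __init__(self):
        self._edges = defaultdict(set)

    def add(self, subject, relation, obj):
        self._edges[(subject, relation)].add(obj)

    def objects(self, subject, relation):
        return self._edges.get((subject, relation), set())

    def products_for(self, concerns):
        """Return products whose ingredients together address every concern."""
        wanted = set(concerns)
        products = sorted({s for (s, r) in self._edges if r == "contains"})
        results = []
        for product in products:
            addressed = set()
            for ingredient in self.objects(product, "contains"):
                addressed |= self.objects(ingredient, "addresses")
            if wanted <= addressed:
                results.append(product)
        return results

# Placeholder data for illustration only.
kg = KnowledgeGraph()
kg.add("Example Barrier Cream", "contains", "Ceramides")
kg.add("Example Barrier Cream", "contains", "Colloidal Oatmeal")
kg.add("Example Clarifying Gel", "contains", "Salicylic Acid")
kg.add("Ceramides", "addresses", "dry skin")
kg.add("Colloidal Oatmeal", "addresses", "sensitive skin")
kg.add("Salicylic Acid", "addresses", "acne")

matches = kg.products_for(["dry skin", "sensitive skin"])
print(matches)  # ['Example Barrier Cream']
```

A production system would more likely use a graph database or linked JSON-LD entities, but the lookup pattern is the same: follow product to ingredient to concern.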

Reaching the Apex represents the final and most sophisticated stage of the AI Visibility Pyramid. It is the transition from simply being indexed by a search engine (Level 1) to becoming the definitive, synthesized "answer" provided by an AI agent like ChatGPT, Gemini, or Perplexity.

At this level, a brand does not merely compete for a click; it competes to be the sole recommendation in a zero-click environment. To achieve this, a brand must master Agent-Ready Content and Trust Engineering.

1. The Goal: From Option to Solution

In traditional SEO, success meant appearing in a list of "10 blue links" (the Consideration Set). At the Apex of AI visibility, the goal is to be the single, synthesized answer (the Selection).

• The Shift: AI agents function as "Answer Engines." When a user asks, "What is the best serum for acne scars?", they do not want a list of links; they want a solution.

• Commerce Displacement: When an AI recommends a product and provides a "buy" link, the consumer may complete the transaction without ever visiting a brand's website or seeing a competitor. A brand that reaches the Apex mitigates this "commerce displacement risk" because it is the product being recommended.

• The Mechanism: To become the answer, brands must move from "marketing to humans" (emotional storytelling) to "teaching machines" (structured, factual data).

2. Demonstrating Trust: The "Trust Stack"

AI models do not have intuition; they have probability. To select a brand as the "answer," the AI must verify the brand's credibility by triangulating data across the Trust Stack. A brand must be present and verified in four specific layers to reach the Apex:

• Layer 1: Medical Verification (The Science): For beauty and wellness, AI prioritizes claims backed by clinical data found on high-authority medical sites (e.g., Healthline, dermatology journals). Brands must publish whitepapers and secure citations in scientific literature to prevent AI "hallucinations" about product safety.

• Layer 2: Editorial Authority (The Expert): AI models weigh mentions from established publications (e.g., Allure, Vogue) heavily. A product cited in a "Best of" list by a trusted editor is treated as a verified fact by the algorithm.

• Layer 3: Community Validation (The Social Truth): Reddit has become the "catch-all source of truth" for AI. If a brand claims to be "the best," but Reddit threads discuss it negatively or not at all, the AI will likely downgrade the recommendation. Authentic, positive sentiment on forums is a critical trust signal.

• Layer 4: The Transactional Layer (The Data): AI agents verify product existence and availability via retailers like Amazon and Sephora. If structured data (price, stock status) is missing here, the AI cannot recommend the product as a viable solution.

3. Agent-Ready Content: Writing for Synthesis

Reaching the Apex requires a fundamental change in how content is written. It must be optimized for Generative Engine Optimization (GEO)—designed to be easily scraped, understood, and summarized by an LLM.

• Question-Led Formatting: Content should be structured as direct answers to specific questions. Instead of a generic blog post, brands should use FAQPage schema to explicitly pair questions (e.g., "Is retinol safe for sensitive skin?") with concise, factual answers. This increases the likelihood of the AI quoting the brand directly.

• Smart Product Cards: This strategy involves "fusing" knowledge and commerce. Instead of separating educational blog posts from product pages, the Apex strategy embeds "Smart Product Cards" directly into informational content. This creates a cohesive unit of "Knowledge + Solution" that AI agents prefer to recommend because it solves the user's problem completely.

• Radical Transparency: Brands like The Ordinary and Paula's Choice have reached the Apex by providing granular, scientific data (e.g., specific ingredient percentages). This "radical transparency" feeds the AI the hard data it craves, allowing it to categorize and recommend products with high confidence.
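The question-led formatting described above can be sketched as FAQPage markup generated from question and answer pairs. The helper `build_faq_jsonld` is hypothetical, and the answer text is a placeholder that a brand would replace with its own verified copy.

```python
import json

def build_faq_jsonld(qa_pairs):
    """Return schema.org FAQPage markup for a list of (question, answer) pairs."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in qa_pairs
        ],
    }
    return json.dumps(data, indent=2)

faq_markup = build_faq_jsonld([
    (
        "Is retinol safe for sensitive skin?",
        "Placeholder answer supplied and verified by the brand.",
    ),
])
print(faq_markup)
```

Keeping each answer short and self-contained makes it easier for a model to quote the answer without rewording it.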

4. The Technical Requirement: llms.txt

To guide AI agents to this high-value content, brands must implement an llms.txt file.

• The "Smart Sitemap": Unlike robots.txt, which tells crawlers where not to go, llms.txt acts as a curated guide, pointing AI agents directly to the brand's most authoritative, "agent-ready" content (e.g., ingredient glossaries, buying guides). This reduces the computational effort for the AI to understand the brand, increasing the probability of citation.
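A minimal sketch of generating such a file is shown below. It assumes the Markdown layout used by the public llms.txt proposal: a title, a short summary in a blockquote, and sections of annotated links. The site name, URLs, and the helper `build_llms_txt` are hypothetical.

```python
def build_llms_txt(site_name, summary, sections):
    """Render an llms.txt document from a mapping of section heading to links.

    Each link is a (title, url, note) tuple.
    """
    lines = [f"# {site_name}", "", f"> {summary}", ""]
    for heading, links in sections.items():
        lines.append(f"## {heading}")
        lines.append("")
        for title, url, note in links:
            lines.append(f"- [{title}]({url}): {note}")
        lines.append("")
    return "\n".join(lines).rstrip() + "\n"

# Hypothetical brand content used only for illustration.
llms_txt = build_llms_txt(
    "Example Beauty Co",
    "Skincare brand publishing ingredient data and buying guides.",
    {
        "Guides": [
            ("Ingredient Glossary", "https://example.com/ingredients",
             "Definitions and concentrations for each ingredient"),
            ("Serum Buying Guide", "https://example.com/guides/serums",
             "How to choose a serum by skin concern"),
        ],
    },
)
print(llms_txt)
```

The file is served from the site root as `/llms.txt`; whether a given AI system reads it depends on that system.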

1. Introduction: The Rise of Agentic Commerce

We are currently witnessing the birth of a market that is projected to grow from $5.40 billion in 2024 to $50.31 billion by 2030. While the current phase of AI adoption focuses on Conversational AI (chatbots that answer questions and synthesize data), the next phase is Agentic Commerce. In this era, AI does not just recommend products; it actively researches, negotiates, and purchases them on behalf of the consumer.

Within the next 1,000 days, AI agents are expected to commandeer the buyer’s journey, making purchase decisions for millions of consumers. This shift represents a fundamental bifurcation of marketing: brands must now develop two distinct strategies—one that speaks to humans through emotion and storytelling, and one that speaks to machines through data and logic.

2. The Shift: From Browsing to Delegating

The transition from conversational discovery to autonomous shopping changes the definition of "customer effort."

• The Old Way (Browsing): A consumer spends hours comparing lawn mower blades, reading reviews, and checking prices across multiple tabs.

• The New Way (Delegating): A consumer issues a single command: "Find and purchase a replacement blade that arrives in 3 days or less." The total human effort is 15 seconds; the AI agent handles the research, selection, and transaction.

This shift is driven by a new consumer segment known as "The Delegators." While "Nostalgics" cling to traditional websites and "Hybrids" use AI for assistance, Delegators fully embrace AI agents as the "future kings of commerce," trusting them to execute decisions autonomously.

3. The "Invisible Customer" Crisis

The most disruptive aspect of the autonomous shopper is the "Invisible Customer Crisis." In traditional e-commerce, marketers rely on digital breadcrumbs—page views, time on site, and abandoned carts—to understand intent.

• The Silent Rejection: When an AI agent evaluates a brand's product and rejects it based on price, availability, or specifications, the brand receives no data. There is no page view and no email to retarget. The brand is "eliminated from consideration in complete silence," effectively being ghosted by the market.

• The Query Tsunami: AI agents do not browse; they bombard. Infrastructure must be prepared to handle 100x to 1,000x the volume of current queries, as agents scan thousands of sites and competitor prices in milliseconds to make a decision.

4. Optimizing for the Machine Customer

To survive the transition to agentic commerce, brands must move from Search Engine Optimization (SEO) to AI Agent Optimization (AIO). Marketing to a machine requires a fundamentally different approach than marketing to a human.

A. Logic Over Emotion

Algorithms do not respond to emotional storytelling, visual design, or brand narratives. They prioritize structured data, real-time availability, and objective specifications. Product descriptions must be stripped of marketing "fluff" and spin, focusing instead on raw, unfiltered performance data and specifications.

B. Infrastructure as Marketing

Data infrastructure is the primary differentiator in this new era. Brands need systems that can ingest real-time behavioral signals and deliver product data in standardized, machine-readable formats like JSON or XML.

• Real-Time Syncing: Inventory and pricing must be updated in near real-time. If an agent cannot verify stock status instantly, it will move to a competitor that can.

• Contextual Knowledge: Vector databases and knowledge graphs are essential to help AI agents "understand" the relationships between products (e.g., matching a specific running shoe to "trail gear").
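The real-time syncing requirement can be illustrated with a JSON feed record that carries its own timestamp, so that an agent (or the brand's own monitoring) can tell whether price and stock data are current. The field names, the five-minute threshold, and both helper functions are assumptions made for this sketch.

```python
import json
from datetime import datetime, timedelta, timezone

def to_feed_record(sku, price, currency, in_stock, updated_at):
    """Serialize one product's price and stock status as a JSON string."""
    return json.dumps({
        "sku": sku,
        "price": price,
        "currency": currency,
        "in_stock": in_stock,
        "updated_at": updated_at.isoformat(),
    })

def is_fresh(record_json, now, max_age=timedelta(minutes=5)):
    """Return True if the record was updated within max_age of now."""
    record = json.loads(record_json)
    updated_at = datetime.fromisoformat(record["updated_at"])
    return now - updated_at <= max_age

# Fixed reference time so the example is reproducible.
now = datetime(2025, 1, 1, 12, 0, tzinfo=timezone.utc)
fresh = to_feed_record("SERUM-001", 12.5, "USD", True, now - timedelta(minutes=2))
stale = to_feed_record("SERUM-001", 12.5, "USD", True, now - timedelta(hours=3))
print(is_fresh(fresh, now), is_fresh(stale, now))  # True False
```

The acceptable age of a record is a business decision; the point is that the timestamp makes staleness checkable by a machine.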

C. Programmatic Trust

For an AI agent to complete a purchase, it must verify the brand's credibility programmatically. This involves providing clear, consistent data on supply chain practices and sustainability in formats that automated systems can easily read. Trust is no longer a feeling; it is a verifiable data point.

1. Introduction: The Disruption of Dignity

The beauty and retail industries are witnessing a brutal inversion of power. For decades, the "Old Guard"—legacy giants like Estée Lauder, Clinique, and Maybelline—relied on a trifecta of dominance: massive ad spend, prestige packaging, and physical shelf space. This era is ending.

We have entered a new war defined by Agentic Commerce, where the battlefield is not a store aisle or a Google results page, but a synthesized answer generated by an AI. In this environment, heritage and brand equity offer little protection. As noted in recent audits, multi-billion dollar conglomerates are finding themselves "invisible" to the AI algorithms that now curate consumer choices, often losing out to agile, data-savvy upstarts that know how to speak "machine."

2. The Visibility Paradox: Famous but Hidden

The most alarming symptom of the Old Guard's unpreparedness is the Visibility Paradox. A brand can be a household name yet be functionally non-existent in the AI ecosystem.

• The Estée Lauder Case: Despite being a titan of the industry, an AI visibility audit reveals that The Estée Lauder Companies holds an Overall AI Visibility Score of just 24%, with a 0% score in both website SEO and article visibility for AI contexts. When AI agents traverse the web to answer skincare queries, the brand's digital infrastructure is effectively silent.

• Clinique's Struggle: Similarly, Clinique achieves a visibility score of only 28%. While it has high mention rates when specifically searched for, its Share of Voice (SOV) is only 40%, meaning that even in conversations about its own product categories, competitors like Neutrogena and CeraVe are frequently recommended over it.