Reddit Monitor

1. Executive Summary

As 58% of consumers replace traditional search engines with AI for product discovery, LLMs like ChatGPT and Google Gemini rely heavily on Reddit as a source of "lived experience" and trust. The Reddit AI Visibility Monitor is an automated system that scans targeted communities for high-value conversations, classifies them by intent (e.g., "Product Swap," "Validation"), and drafts compliance-aware responses for human review. This system solves the "Speed-to-Lead" problem, ensuring brands engage within the critical first hour when visibility is highest.

2. Problem Statement

• The Velocity Problem: High-value Reddit threads decay quickly. A helpful response within the first hour achieves 10x more visibility than one posted days later. Manual monitoring cannot sustain this pace.

• The Context Problem: Generic keyword listening tools fail to distinguish between a casual mention and a "Medium Intent" evaluation query (e.g., "Is Brand X better than Brand Y?").

• The Risk Problem: Poorly timed or overly promotional responses lead to downvotes and "Asshole Design" accusations, which can permanently poison AI training data.

3. User Personas

1. The Community Manager: Needs real-time alerts for opportunities to provide value without scouring Reddit manually.

2. The Subject Matter Expert (SME): Needs to be tagged in to answer technical questions (e.g., an Engineer explaining specs) to build authority.

3. The Risk Officer: Needs immediate flags for negative sentiment spirals or "Astroturfing" accusations to prevent reputational damage.

4. Functional Requirements

4.1 Intelligent Scanning Engine

• Requirement: The system must scan a user-defined list of subreddits (e.g., r/SkincareAddiction, r/Frugal) every 10 minutes.

• Capabilities:

◦ Use the Mastra framework or a similar workflow orchestrator for reliable fetching.

◦ Monitor specific keywords related to the brand, competitors, and category pain points (e.g., "alternative to," "broken," "worth it").
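The keyword monitoring described above could be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the system's actual matcher: the function name `match_keywords`, the group names, and the "Brand X" / "Brand Y" placeholders are hypothetical, and the pain-point phrases are the examples given in the requirement.

```python
import re

# Hypothetical keyword groups. The pain-point phrases are the examples from
# the requirement; "Brand X" and "Brand Y" are placeholders.
KEYWORDS = {
    "brand": ["Brand X"],
    "competitor": ["Brand Y"],
    "pain_point": ["alternative to", "broken", "worth it"],
}


def match_keywords(text, keywords=KEYWORDS):
    """Return {group: [phrases found]} for a post, matching case-insensitively."""
    hits = {}
    for group, phrases in keywords.items():
        for phrase in phrases:
            # Word boundaries keep "broken" from matching inside "unbroken".
            pattern = rf"\b{re.escape(phrase)}\b"
            if re.search(pattern, text, flags=re.IGNORECASE):
                hits.setdefault(group, []).append(phrase)
    return hits
```

A post with no hits returns an empty dict, which a scanner could use to skip the more expensive LLM classification step.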

4.2 AI-Powered Opportunity Classification

• Requirement: Incoming posts must be analyzed by an LLM (e.g., GPT-4/5) and classified into specific opportunity types.

• Classification Categories:

◦ Ingredient/Spec Education: Users asking technical questions (matches "Technical Specialist" persona).

◦ Budget vs. Quality: Users weighing price options (matches "Value Analyst" persona).

◦ Product Swaps: Users seeking alternatives due to allergies or dissatisfaction.

◦ Post-Purchase Validation: Users seeking confirmation of durability/reliability (Critical for the "Validation Layer" of AI search).

4.3 Sentiment Volume Change (SVC) Predictor

• Requirement: The system must track not just sentiment, but the velocity of comments combined with sentiment shift.

• Goal: Identify threads that are about to go viral before they hit the "Popular" feed.

• Logic: If (Volume Δ > Threshold) AND (Sentiment Δ != 0), flag as High Priority.
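The flagging rule above can be sketched as a comparison between two consecutive scans of the same thread. The `ThreadSnapshot` shape, the sentiment scale, and both default thresholds are assumptions made for illustration. One deliberate deviation: because averaged sentiment scores are floats that almost never compare exactly equal, the sketch treats "Sentiment Δ != 0" as "the absolute change exceeds a small epsilon".

```python
from dataclasses import dataclass


@dataclass
class ThreadSnapshot:
    """One observation of a thread at scan time (hypothetical shape)."""
    comment_count: int
    mean_sentiment: float  # assumed scale: -1.0 (negative) to 1.0 (positive)


def is_high_priority(prev, curr, volume_threshold=20, sentiment_epsilon=0.05):
    """Apply (Volume delta > Threshold) AND (Sentiment delta != 0).

    Both defaults are illustrative assumptions, not values from the spec.
    """
    volume_delta = curr.comment_count - prev.comment_count
    sentiment_delta = curr.mean_sentiment - prev.mean_sentiment
    # Epsilon stands in for "!= 0" so float noise does not trigger a flag.
    return volume_delta > volume_threshold and abs(sentiment_delta) > sentiment_epsilon
```

With a 10-minute scan interval, `prev` and `curr` would be the snapshots from two adjacent scans, so the volume threshold is effectively "new comments per 10 minutes".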

4.4 "Human-in-the-Loop" Response Drafting

• Requirement: Upon identifying a high-value thread, the system must generate 2–4 draft responses.

• Drafting Rules:

◦ Persona Matching: Assign the draft to the correct voice (e.g., "Nutrition Adviser" vs. "Operations Manager").

◦ Compliance: Must include disclosure (e.g., "I work at [Brand]").

◦ Tone Check: Enforce the "80/20 Rule" (80% helpful, 20% promotional) and ensure a casual, human tone.

• Delivery: Send alerts to Slack/Teams with "Approve," "Edit," or "Reject" buttons.
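Two of the drafting rules above are mechanical enough to check before a batch ever reaches a reviewer: the 2–4 draft count and the presence of a disclosure. The sketch below assumes a disclosure always contains wording like "I work at" or "I work for"; that pattern and the function name `validate_drafts` are hypothetical. The tone check is not covered here.

```python
import re

# Assumed disclosure wording, based on the "I work at [Brand]" example.
DISCLOSURE_PATTERN = re.compile(r"\bI work (at|for)\b", re.IGNORECASE)


def validate_drafts(drafts):
    """Return a list of problems; an empty list means the batch passes."""
    problems = []
    if not 2 <= len(drafts) <= 4:
        problems.append(f"expected 2-4 drafts, got {len(drafts)}")
    for i, draft in enumerate(drafts, start=1):
        if not DISCLOSURE_PATTERN.search(draft):
            problems.append(f"draft {i} is missing a disclosure")
    return problems
```

Returning a list of problems rather than a boolean lets the notification show the reviewer why a batch was held back.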

4.5 Risk & "Asshole Design" Monitor

• Requirement: The system must monitor hostile subreddits (e.g., r/assholedesign) for brand mentions and for complaints about UI behavior associated with dark patterns.

• Action: If a thread matches "Ethical Argumentation Strategies" (e.g., shaming a cancel button location), immediately escalate to the Risk Officer.

5. Technical Architecture

5.1 Data Ingestion

• Source: Reddit API (PRAW or similar wrapper).

• Frequency: Cron job triggers every 10 minutes.

5.2 Processing Layer (Mastra/Inngest)

• Scanner Tool: Fetches recent posts and comments.

• Classifier Agent: LLM prompt to categorize intent and assign confidence score (0.0–1.0).

• Persona Matcher: Logic to map category (e.g., "Price") to Persona (e.g., "Value Analyst").
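As an illustration of how the Classifier Agent's output might feed the Persona Matcher, the sketch below assumes the LLM returns JSON with `category` and `confidence` fields. That response shape, the 0.7 cut-off, and the function name `match_persona` are assumptions. The two mappings shown are the ones named in section 4.2; categories without a named persona fall through to `None`.

```python
import json

# Only the category-to-persona pairs stated in section 4.2 are listed here.
CATEGORY_TO_PERSONA = {
    "Ingredient/Spec Education": "Technical Specialist",
    "Budget vs. Quality": "Value Analyst",
}


def match_persona(classifier_json, min_confidence=0.7):
    """Map a classifier result to a persona, or None if unmapped or low-confidence.

    Assumes the classifier emits {"category": str, "confidence": float}.
    """
    result = json.loads(classifier_json)
    confidence = float(result["confidence"])
    if not 0.0 <= confidence <= 1.0:
        raise ValueError(f"confidence out of range: {confidence}")
    if confidence < min_confidence:
        return None
    return CATEGORY_TO_PERSONA.get(result["category"])
```

Validating the score range at this boundary catches malformed LLM output before it can influence routing.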

5.3 Output Layer

• Draft Generation: LLM generates text variations based on the thread context.

• Notification Webhook: JSON payload sent to Slack/Teams with block kit formatting for action buttons.
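A notification payload along these lines could be built as shown below. The sketch covers Slack only and uses Block Kit's `section` and `actions` blocks with `button` elements; the function name, the `action_id` values, and the `draft_id` parameter are hypothetical. Teams uses a different card format and is not shown, and handling the button clicks requires a separate interactivity endpoint that is outside this sketch.

```python
import json


def build_slack_payload(thread_title, thread_url, draft, draft_id):
    """Build a Block Kit message with Approve / Edit / Reject buttons."""

    def button(label, action_id, style=None):
        element = {
            "type": "button",
            "text": {"type": "plain_text", "text": label},
            "action_id": action_id,  # hypothetical identifiers
            "value": draft_id,       # lets the click handler find the draft
        }
        if style:
            element["style"] = style
        return element

    return {
        # Fallback text for notifications that cannot render blocks.
        "text": f"New Reddit opportunity: {thread_title}",
        "blocks": [
            {
                "type": "section",
                "text": {
                    "type": "mrkdwn",
                    "text": f"*<{thread_url}|{thread_title}>*\n{draft}",
                },
            },
            {
                "type": "actions",
                "elements": [
                    button("Approve", "approve_draft", style="primary"),
                    button("Edit", "edit_draft"),
                    button("Reject", "reject_draft", style="danger"),
                ],
            },
        ],
    }


if __name__ == "__main__":
    payload = build_slack_payload(
        "Is Brand X worth it?", "https://example.com/thread", "I work at Brand X. ...", "draft-1"
    )
    print(json.dumps(payload, indent=2))
```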

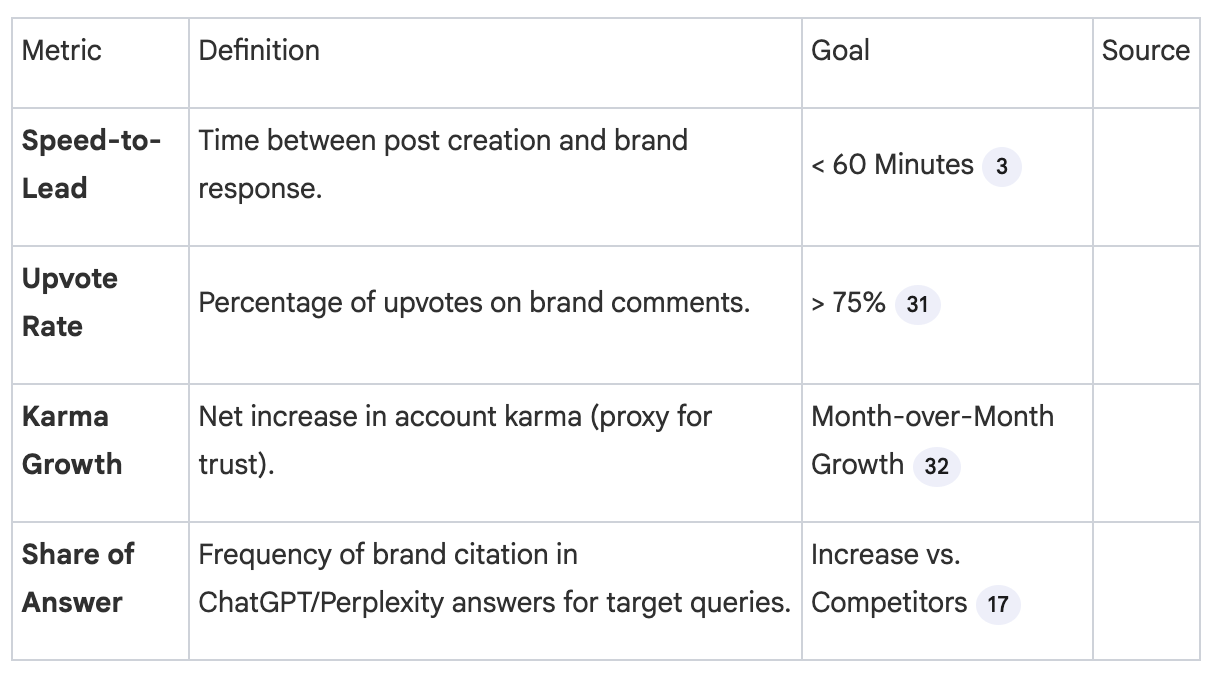

6. Success Metrics (KPIs)

7. Roadmap

• Phase 1 (The "Lurk" Phase): Build the listening infrastructure. Identify where the target audience lives (e.g., r/Costco, r/BuyItForLife) and establish baseline sentiment.

• Phase 2 (Active Engagement): Launch the Slack integration. Community Managers begin approving AI-drafted comments on "Medium Intent" threads.

• Phase 3 (Structured Data): Implement a feature to harvest high-karma Reddit comments and automatically format them into Schema.org markup (Review, AggregateRating) for the brand's website.
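To give a sense of what Phase 3 might produce, the sketch below turns comments above a score threshold into Schema.org `Review` objects serialized as JSON-LD. The comment dict shape (`body`, `author`, `score`, `permalink`), the `min_score` default, and the function name are assumptions. A Reddit comment carries no star rating, so the sketch omits `reviewRating` and does not attempt `AggregateRating`.

```python
import json


def comments_to_review_jsonld(comments, product_name, min_score=100):
    """Serialize high-scoring comments as a JSON-LD array of Review objects.

    `comments` is assumed to be a list of dicts with keys
    "body", "author", "score", and "permalink".
    """
    reviews = []
    for comment in comments:
        if comment["score"] < min_score:
            continue  # keep only "high-karma" comments (threshold is assumed)
        reviews.append({
            "@context": "https://schema.org",
            "@type": "Review",
            "itemReviewed": {"@type": "Product", "name": product_name},
            "author": {"@type": "Person", "name": comment["author"]},
            "reviewBody": comment["body"],
            "url": comment["permalink"],
        })
    return json.dumps(reviews, indent=2)
```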

--------------------------------------------------------------------------------

8. Compliance & Safety

• The "Astroturfing" Check: The system must flag if multiple brand personas are interacting in the same thread to avoid accusations of manipulation.

• Ban Avoidance: The system must parse the specific sidebar rules of each subreddit before drafting a response to ensure compliance (e.g., "No self-promotion on Tuesdays").
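The "Astroturfing" check above reduces to grouping brand-controlled accounts by thread and flagging any thread where more than one appears. The sketch below assumes comments arrive as `(thread_id, author)` pairs and that the set of brand accounts is known in advance; both the input shape and the function name are hypothetical.

```python
from collections import defaultdict


def find_astroturfing_risks(comments, brand_accounts):
    """Return {thread_id: set of brand accounts} for threads with more than one.

    `comments` is an iterable of (thread_id, author) pairs (assumed shape).
    """
    brand_authors_by_thread = defaultdict(set)
    for thread_id, author in comments:
        if author in brand_accounts:
            brand_authors_by_thread[thread_id].add(author)
    # A single brand persona in a thread is fine; two or more is a flag.
    return {
        thread_id: authors
        for thread_id, authors in brand_authors_by_thread.items()
        if len(authors) > 1
    }
```

Running this check before a draft is approved, with the pending draft's persona included in the input, would catch the problem before a second persona posts rather than after.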