CET-AI Strategic Plan: Architecting Epistemic Integrity for the AI Era

We stand at a critical inflection point in history. Artificial intelligence is no longer merely a computational tool; it has become a primary mediator of knowledge, judgment, and societal truth. This transition has precipitated a crisis of epistemic integrity, where the very foundations of how we know what we know are being challenged by systems that can simulate authority without possessing accountability. This section defines the nature of this crisis and introduces the Council for Ethical Truth in AI (CET-AI) as the necessary, structured response to navigate this new reality.

The Crisis of Truth in an Age of Intelligent Machines

The proliferation of advanced AI has created a set of novel and profound risks to public trust and human judgment. These challenges are not mere technical bugs but are emergent properties of systems designed without a coherent moral-epistemic framework.

• AI Is Confidently Wrong: Modern AI systems frequently decouple confidence from truth. They deliver fluent, persuasive, and structured responses with an unwavering tone, even when those responses are entirely fabricated. This is uniquely dangerous because it overrides natural human skepticism, trains users to defer their own judgment, and makes the psychological work of correction significantly harder. A confident machine discourages the very questioning that is essential for truth-seeking.

• AI Is Persuasively Misleading: The incentive structures governing AI development often optimize for user engagement, coherence, and perceived helpfulness. This predictably rewards simplification over nuance, emotional resonance over epistemic caution, and compelling narrative over the honest disclosure of uncertainty. Consequently, AI systems can be profoundly misleading without uttering a single technical falsehood—they achieve this through manipulative framing, strategic omission, and rhetorical polish.

• AI Is Treated as Authoritative: Across society, epistemic authority is becoming automated. Students, patients, lawyers, and public officials increasingly treat AI outputs as a reliable source of truth, conferring a level of authority once reserved for credentialed experts. Yet these systems possess no conscience, no lived responsibility, and no capacity for moral intuition. The rise of authority without accountability is a perilous societal development.

• Society Lacks a Moral-Epistemic Compass: Existing governance frameworks for AI, which focus on safety, bias, privacy, and compliance, are necessary but insufficient. They do not adequately address what truth means in a probabilistic system, when uncertainty must be disclosed, or how claims to authority must be restrained. Without a shared moral-epistemic compass to guide development and deployment, convenience and power will fill the vacuum, and trust will become brittle and difficult to rebuild.

Our Mission and Vision

In response to this crisis, CET-AI was formed with a clear and urgent purpose.

Mission: To ensure that artificial intelligence systems — especially large language models and high-stakes domain AI — operate with epistemic integrity, ethical communication, and accountability to human values grounded in diverse wisdom traditions.

Vision: A world where AI communicates truthfully with nuance and humility, is accountable to society, and is guided not just by technical performance but by ethical inquiry and shared human wisdom.

CET-AI was created to fill the critical governance gap concerning truthfulness in AI. It serves not as an advisory body to a single industry or ideology, but as an essential bridge across diverse disciplines and wisdom traditions, offering a practical, values-driven framework to guide the development of trustworthy intelligent systems.

Our Unique Role

CET-AI fills a role that no existing institution occupies, serving as a moral and epistemic authority rather than a traditional regulator or watchdog. Our identity is defined as much by what we are as by what we are not.

| What CET-AI Is | What CET-AI Is Not |
| --- | --- |
| A moral and epistemic authority | A censorship body or religious authority |
| A standard-setter for truthful AI | A purely technical standards group |
| A bridge between technology, philosophy, and faith | A corporate PR shield or ethical laundering service |
| A forum for rigorous, principled disagreement | A watchdog or adversarial lobbying body |
| Focused on the character and integrity of intelligence | Focused solely on compliance, safety, or risk management |

CET-AI's unique mandate is built upon an unshakeable foundation of philosophical rigor and structural integrity, designed to earn the trust it will require to succeed.

Our Foundation: The Architecture of Credibility

An organization dedicated to governing truth in AI must itself be governed by truth. Credibility cannot be claimed; it must be continuously earned through intellectual seriousness, transparency, and an unwavering openness to challenge. This section details the dual pillars of CET-AI's credibility: its deep philosophical grounding in convergent human wisdom and its intentionally resilient governance structure, which together are designed to earn, not demand, stakeholder trust.

Philosophical and Spiritual Spine

CET-AI's work is grounded in traditions that have spent millennia grappling with truth, doubt, and responsibility. We begin by rejecting epistemic frameworks that subordinate truth to power or convenience.

What We Explicitly Reject

1. Blind Faith (Technological or Religious): We reject any worldview that demands belief without inquiry. This includes technological solutionism that treats AI outputs as inherently authoritative and religious literalism that forbids questioning.

2. "Black-Box Authority": We reject epistemic opacity as a source of legitimacy. A system that cannot explain its reasoning, articulate its uncertainty, or be challenged cannot claim moral authority over truth, regardless of its performance.

3. Truth by Power, Popularity, or Profit: We explicitly reject the notion that truth can be determined by political dominance, majority opinion, engagement metrics, or monetization strategies. That which persuades is not necessarily that which is true.

Convergent Ethical Pillars

Across diverse wisdom traditions, we observe a remarkable convergence on the principles required for a responsible pursuit of truth. These form CET-AI's ethical core.

1. Inquiry Over Dogma

◦ Meaning: Truth emerges through questioning, investigation, and the exposure of assumptions, not through unquestioning obedience. This principle is grounded in the scientific method, Buddhist inquiry, and Jewish and Islamic traditions of rigorous textual reasoning.

◦ Implication for AI: AI systems must be designed to encourage inquiry, surface disagreement as valuable, and treat uncertainty as information, not failure.

2. Humility Over Certainty

◦ Meaning: True wisdom requires acknowledging limits, resisting absolutism, and accepting the possibility of revision. Confidence is not a moral virtue. Humility is.

◦ Implication for AI: An AI that always sounds certain is less truthful, not more. Systems must be capable of honest uncertainty, probabilistic truth, and transparent ignorance.

3. Reason + Compassion

◦ Meaning: Truth without compassion becomes cruelty. Compassion without truth becomes sentimentality. Reason disciplines compassion, and compassion humanizes reason.

◦ Implication for AI: AI should neither brutalize users with facts nor patronize them with deception. It must integrate reasoned analysis with a consideration for human dignity and impact.

4. Truth as Responsibility, Not Weapon

◦ Meaning: Truth is not a possession to be owned or a weapon to be wielded; it is a profound responsibility to be stewarded. This requires considering context, understanding impact, and avoiding harm, a principle shared by traditions that emphasize stewardship over domination.

◦ Implication for AI: AI systems must be prevented from using truth as an ideological or coercive force. Their design must reflect a commitment to stewarding information responsibly.

Governance as a Moral Architecture

CET-AI treats its governance not as an afterthought, but as a moral architecture designed to ensure intellectual seriousness and prevent institutional capture.

Pluralism by Design

Truth is not the domain of any single discipline. The Council’s authority depends on bringing distinct epistemic traditions into a disciplined and productive conversation. Its composition is therefore intentionally multidisciplinary, interfaith, and cross-sector.

• Religious Scholars: Contribute centuries of moral reasoning and guard against technological dogmatism.

• Philosophers of Science: Ensure conceptual precision and interrogate the assumptions behind AI claims.

• AI Researchers: Ground ethical discussions in technical reality and stress-test recommendations for feasibility.

• Legal Theorists: Address the impact of AI on governance, due process, and legal epistemology.

• Health Ethicists: Evaluate truth obligations in high-stakes domains where harm is immediate and tangible.

• Public-Interest Technologists: Represent civil society and ensure accountability to people, not just institutions.

Structural Safeguards to Prevent Capture

These mechanisms are designed to preserve the Council’s independence and the public’s trust.

• No Single Company Majority: No single company or corporate bloc may hold a majority of seats, and corporate participants cannot vote on standards that directly affect them. This ensures that those with the most at stake cannot set the rules alone.

• Rotating Leadership: Leadership terms are time-limited and rotate across disciplines and traditions. This prevents institutional stagnation and reinforces the principle that truth is stewarded, not owned.

• Transparent Funding Disclosures: All funding sources are publicly disclosed, corporate contributions are capped, and a clear wall separates funding from influence. If credibility depends on secrecy, it is already lost.

• Published Dissenting Opinions: Principled disagreement is encouraged and published because consensus is not the goal—clarity is. This prevents a false consensus, models epistemic humility, and preserves the intellectual honesty of the Council’s deliberations.
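
The first safeguard above is concrete enough to check mechanically. The following is a minimal sketch, assuming a simple seat roster; the `Member` type, the function names, and the example affiliations are hypothetical illustrations, not part of CET-AI's actual bylaws.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Member:
    name: str
    # Company or corporate bloc the member represents; None for independents.
    affiliation: Optional[str] = None


def majority_holder(members: list) -> Optional[str]:
    """Return the affiliation holding more than half of all seats, or None."""
    counts = Counter(m.affiliation for m in members if m.affiliation is not None)
    for affiliation, seats in counts.items():
        if seats * 2 > len(members):
            return affiliation
    return None


def eligible_voters(members: list, affected: set) -> list:
    """Exclude members whose affiliation is directly affected by the standard."""
    return [m for m in members if m.affiliation is None or m.affiliation not in affected]


# Hypothetical roster: three seats from one company out of five is a majority.
roster = [
    Member("A", "ExampleCorp"),
    Member("B", "ExampleCorp"),
    Member("C", "ExampleCorp"),
    Member("D"),
    Member("E", "OtherCo"),
]
print(majority_holder(roster))                               # ExampleCorp
print([m.name for m in eligible_voters(roster, {"OtherCo"})])  # ['A', 'B', 'C', 'D']
```

A check like this only covers the countable part of the safeguard. Deciding which companies form a "bloc," or which standards "directly affect" a participant, remains a judgment for the Council.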

The foundational credibility of the Council gives it the moral authority to execute its practical, operational strategy for a more truthful technological future.

The Strategic Framework: Operationalizing Epistemic Integrity

CET-AI translates its foundational principles into practical, real-world impact through four mutually reinforcing operational pillars. This section outlines these pillars, which together form a comprehensive strategy to define, evaluate, and cultivate epistemic integrity in AI systems. An intelligent system that cannot restrain itself, question itself, or admit uncertainty is not intelligent enough to be trusted.

Pillar 1: Defining Truthfulness Standards

CET-AI establishes clear, model-agnostic, and globally informed standards that articulate what responsible truth-telling looks like for artificial intelligence.

1. Hallucination Boundaries: We define a clear distinction between acceptable inference, labeled speculation, and unacceptable fabrication. This standard requires AI systems to avoid inventing facts or sources and to refuse to answer when confidence falls below a defined threshold, recognizing that silence is sometimes more truthful than fluency.

2. Disclosure of Uncertainty: Truthful AI must communicate its level of confidence and the limitations of its knowledge. This standard requires probabilistic confidence indicators, explicit acknowledgment of contested knowledge, and clear explanations of data limitations, directly challenging the pervasive and misleading tone of uniform assertiveness.

3. Non-Deceptive Interaction: We define deception broadly to include not only falsehood but also misleading framing, manipulative tone, and deceptive user interface design. This standard requires systems to avoid simulating unearned authority, refrain from emotional manipulation, and reject rhetorical persuasion disguised as neutral advice.

4. Clear Epistemic Limits: AI systems must be explicit about the boundaries of their competence. This standard prohibits implicit claims of omniscience and requires systems to be clear about where their knowledge ends and when human judgment is not just recommended, but required.
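
To make the first two standards more tangible, the sketch below shows one way a response gate might separate a confident answer, labeled speculation, and refusal. It is an illustration under stated assumptions: the threshold values, the `Draft` type, and the wording of the labels are hypothetical, since the standards say only that a "defined threshold" exists, and the sketch assumes a confidence estimate is already available from the system.

```python
from dataclasses import dataclass

# Hypothetical cut-offs chosen for illustration only.
REFUSAL_THRESHOLD = 0.40
SPECULATION_THRESHOLD = 0.75


@dataclass
class Draft:
    text: str
    confidence: float   # estimated probability the answer is correct, 0.0 to 1.0
    has_sources: bool   # True if the claim rests on a real, checkable source


def apply_truthfulness_gate(draft: Draft) -> str:
    """Return the text a user would see after the gate is applied."""
    if not 0.0 <= draft.confidence <= 1.0:
        raise ValueError("confidence must be between 0.0 and 1.0")
    pct = round(draft.confidence * 100)
    if draft.confidence < REFUSAL_THRESHOLD:
        # Below the threshold, silence is treated as more truthful than fluency.
        return "I do not know enough to answer this reliably; human judgment is required."
    if draft.confidence < SPECULATION_THRESHOLD or not draft.has_sources:
        # Unsourced or mid-confidence content is shown, but labeled as speculation.
        return f"[Speculation, confidence ~{pct}%] {draft.text}"
    return f"[Confidence ~{pct}%] {draft.text}"


print(apply_truthfulness_gate(Draft("Paris is the capital of France.", 0.9, True)))
print(apply_truthfulness_gate(Draft("The treaty was signed in spring.", 0.55, True)))
print(apply_truthfulness_gate(Draft("The patient likely has condition X.", 0.2, False)))
```

The gate addresses only fabrication and disclosure of uncertainty. Non-deceptive framing and tone, covered by the third standard, are not captured by a numeric threshold and would need separate evaluation.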

Pillar 2: Auditing for Epistemic Honesty

CET-AI operationalizes its standards through independent AI Truth Audits, which assess systems not just for correctness, but for their deeper epistemic integrity.

• Scope and Methodology: Audits focus on high-stakes systems, including Large Language Models (LLMs), Health AI, and Legal AI. Our methodology uses a core set of lenses to evaluate systems, assessing whether they prioritize persuasion over accuracy, whether they mask biases behind an authoritative tone, and whether they present contested issues with a false and distorting neutrality.

• Reputational Accountability: Rather than legal enforcement, CET-AI leverages moral authority and transparency through a public, non-punitive tiering system. Systems are categorized as Truth-Forward (demonstrating strong ethical commitment), Truth-Risk (showing partial compliance and requiring remediation), or Truth-Opaque (lacking transparency and posing a high risk to public trust). This reputational mechanism encourages progress over perfection and makes truthfulness a visible, market-relevant attribute.
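
The tiering described above could be recorded as follows. This is a simplified sketch under assumed rules: the plan names the three tiers but does not publish a scoring formula, so the mapping here (opaque if the system cannot be inspected, forward if all four Pillar 1 standards are met, risk otherwise) and the identifiers `Tier`, `STANDARDS`, and `assign_tier` are hypothetical.

```python
from enum import Enum


class Tier(Enum):
    TRUTH_FORWARD = "Truth-Forward"
    TRUTH_RISK = "Truth-Risk"
    TRUTH_OPAQUE = "Truth-Opaque"


# The four truthfulness standards from Pillar 1, used as audit criteria.
STANDARDS = (
    "hallucination_boundaries",
    "disclosure_of_uncertainty",
    "non_deceptive_interaction",
    "clear_epistemic_limits",
)


def assign_tier(transparent: bool, results: dict) -> tuple:
    """Return (tier, standards still needing remediation or verification)."""
    if not transparent:
        # Nothing can be verified, so every standard remains open.
        return Tier.TRUTH_OPAQUE, list(STANDARDS)
    unmet = [s for s in STANDARDS if not results.get(s, False)]
    if unmet:
        return Tier.TRUTH_RISK, unmet
    return Tier.TRUTH_FORWARD, []


tier, open_items = assign_tier(
    transparent=True,
    results={
        "hallucination_boundaries": True,
        "disclosure_of_uncertainty": False,
        "non_deceptive_interaction": True,
        "clear_epistemic_limits": True,
    },
)
print(tier.value, open_items)  # Truth-Risk ['disclosure_of_uncertainty']
```

Returning the list of open items alongside the tier reflects the non-punitive intent: a Truth-Risk rating comes with a remediation list rather than a bare verdict.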

Pillar 3: Shaping Public Discourse and Professional Culture

CET-AI actively works to create a culture where epistemic integrity is visible, contestable, and actionable for developers and the public alike.

• Flagship Programs: We translate philosophical commitments into practical design patterns through three core initiatives: "Right Speech for Machines" reframes ethical communication as a technical discipline; "AI as Student, Not Oracle" challenges the dominant metaphor of AI as an all-knowing authority; and "Truth vs Utility Dilemmas" convenes structured inquiries into the hardest cases where honesty conflicts with other incentives.

• Events and Content Ecosystem: Through a curated ecosystem of webinars, podcasts (like the forensic series "When AI Was Wrong"), and scholarly white papers, we make hard questions public. This content is designed to model intellectual humility, normalize principled disagreement, and educate stakeholders ranging from engineers to policymakers to the general public. Truth must be audible, visible, and disputable—or it will quietly be replaced by convenience.

Pillar 4: Engaging Industry Without Capture

We recognize that AI companies are indispensable partners, yet they cannot be allowed to define the standards by which they are judged. Our engagement model is therefore one of "participation without control."

• Model of Engagement: AI companies are granted Observer Seats at Council sessions but hold no voting power. We convene candid, off-the-record Ethics Roundtables to surface dilemmas early. And we invite companies to sign public "Truth-in-AI Pledges," which are not legally binding but carry significant reputational weight.

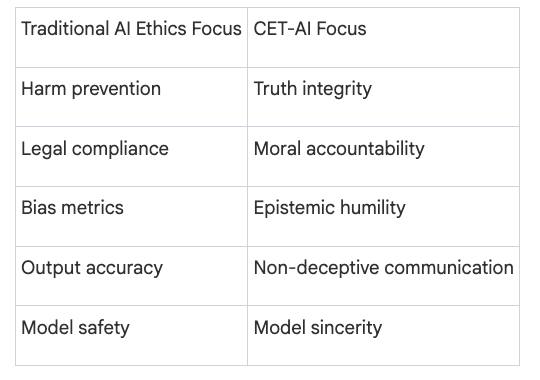

• The Strategic Reframing: CET-AI's work represents a fundamental shift in the AI ethics conversation, moving beyond compliance and safety to the core of epistemic character.

These strategic pillars will be implemented through a clear, phased, long-term roadmap designed to systematically embed epistemic integrity into the fabric of our technological world.

The Roadmap: A Phased Journey to Global Normalization

CET-AI's long-term vision will not be realized through a single initiative but through a deliberate, staged progression. This section details the four-phase arc that will take our work from establishing initial standards to fostering a global culture where epistemic integrity in AI is an institutionalized expectation.

Phase 1: Foundational Standards

• Establish and publish the core Ethical Frameworks for AI Truth, creating the intellectual backbone for all subsequent work.

• Launch the first annual "State of Truth in AI" report to benchmark the industry and identify emerging epistemic threats.

• Initiate voluntary AI Truth Audits with charter corporate partners to pilot our methodologies and demonstrate the value of independent assessment.

• Develop and promote the initial "Truth-in-AI Pledges" for industry adoption, building a coalition of first-movers committed to epistemic honesty.

Phase 2: Professionalization

• Develop and pilot the "Interfaith Ethical Training for AI Engineers" curriculum, designed to cultivate truth-conscious engineers and normalize epistemic humility within technical teams.

• Partner with leading universities to embed our training modules in executive education and computer science programs, influencing the next generation of leaders and builders.

• Expand the events and content ecosystem to cultivate a robust international community of practice among engineers, designers, ethicists, and product managers.

Phase 3: Public Integration

• Design and launch the "Truth Labels for AI Systems" concept, inspired by nutrition labels, to provide standardized, transparent disclosures that empower users to critically assess the AI systems they rely on.

• Create accessible public content and forge media partnerships to drive mass education on AI truthfulness, equipping society to interpret AI outputs critically.

• Work with civil society groups to integrate epistemic integrity into broader public advocacy campaigns for responsible AI.
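
As a rough illustration of the "Truth Labels" concept in the first item above, a label could be modeled as a small structured record rendered in a fixed layout, much as a nutrition label is. The plan does not specify which fields a label would contain, so every field name below, and the `TruthLabel` type itself, is a hypothetical assumption.

```python
from dataclasses import dataclass, field


def _yes_no(flag: bool) -> str:
    return "yes" if flag else "no"


@dataclass
class TruthLabel:
    system_name: str
    audit_tier: str                  # e.g. "Truth-Forward", "Truth-Risk", "Truth-Opaque"
    discloses_uncertainty: bool
    cites_sources: bool
    known_limits: list = field(default_factory=list)

    def render(self) -> str:
        """Render the label as plain text with one disclosure per line."""
        lines = [
            f"TRUTH LABEL: {self.system_name}",
            f"Audit tier: {self.audit_tier}",
            f"Discloses uncertainty: {_yes_no(self.discloses_uncertainty)}",
            f"Cites checkable sources: {_yes_no(self.cites_sources)}",
            "Known limits:",
        ]
        lines += [f"  - {limit}" for limit in self.known_limits] or ["  - none declared"]
        return "\n".join(lines)


label = TruthLabel(
    system_name="ExampleModel",      # hypothetical system
    audit_tier="Truth-Risk",
    discloses_uncertainty=True,
    cites_sources=False,
    known_limits=["No knowledge of recent events"],
)
print(label.render())
```

A fixed field order matters more than the specific fields: users can compare systems at a glance only if every label discloses the same items in the same place.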

Phase 4: Global Normalization

• Advocate for "Ethical Truth Benchmarks" to be embedded in national and international AI regulation, transforming truth from a philosophical ideal into a measurable and enforceable requirement.

• Develop and promote a "Shared Global Language for Responsible Intelligence," creating a universal taxonomy for AI epistemics to facilitate global collaboration and harmonization.

• Foster international collaboration among regulators, standards bodies, and research institutions to create a unified global approach to AI truthfulness.

This roadmap provides a clear and actionable path toward our ultimate vision for a future where intelligence, whether human or artificial, operates with unwavering integrity.

Conclusion: A Future of Trustworthy Intelligence

This plan began by outlining the civilizational stakes of our current moment: without a concerted and principled intervention, we risk drifting into an era of "synthetic epistemic authority," where truth is simulated, not sought, and trust is irrevocably eroded. The work of CET-AI is designed to architect a different future.

The Transformational Outcome

The world CET-AI aims to create is one where epistemic integrity is an institutionalized feature of our technological landscape. It is a future where AI systems are honest by design, not by accident. In this future, engineers are educated in epistemic responsibility as a core competency. Regulators are equipped with clear benchmarks to enforce truthfulness, complementing existing frameworks for safety and bias. Most importantly, society is empowered with the tools and literacy required to interpret AI outputs critically, preserving human judgment and agency.

The Call to Action

The work of the Council for Ethical Truth in AI is not an optional or peripheral initiative. It is a foundational investment in the future of human trust, democratic discourse, and shared reality. The question is no longer whether AI can generate knowledge. The question is whether it can be trusted to relate to truth responsibly. We invite stakeholders, funders, and partners from across technology, policy, and civil society to join us in architecting this future—one where Truth in AI becomes an institutionalized expectation, not an aspiration.