Making AI Honest: An Overview of CET-AI's Flagship Programs

Introduction: Why Do We Need to Teach AI Honesty?

Have you ever noticed an AI sounding incredibly confident, even when it's completely wrong? We are increasingly receiving explanations, advice, and judgments from systems that simulate authority but possess no conscience, accountability, or lived experience. This has created a new kind of challenge—a crisis of epistemic integrity.

This crisis has three main aspects:

• Confidently Wrong: AI systems often deliver fluent, structured, and persuasive responses with unwavering confidence, even when those responses are false. This certainty can override human skepticism and make it psychologically harder for us to question the machine.

• Persuasively Misleading: AI is often optimized for user satisfaction and engagement. This can reward simplification over nuance and emotional resonance over epistemic caution, allowing an AI to mislead users through its framing, tone, and polish, even without stating a direct falsehood.

• Automated Authority: Across education, healthcare, and law, AI is increasingly treated as an authority. However, this authority is automated and comes without any of the lived responsibility, moral intuition, or conscience we expect from human experts.

These issues reveal that an AI can be factually correct yet epistemically dishonest, creating a new challenge that goes beyond simple fact-checking. To address this "epistemic crisis," the Council for Ethical Truth in AI (CET-AI) was created. CET-AI’s authority comes from its unique composition, bringing together religious scholars, philosophers of science, AI researchers, legal theorists, and health ethicists to ensure a multi-disciplinary approach to truth. It operates on a central, powerful claim:

Truth is not a byproduct of intelligence. It is a responsibility of intelligence.

This principle guides CET-AI's practical, hands-on programs, which are designed to translate this philosophical commitment into the code, culture, and conduct of artificial intelligence.

1. Program One: "Right Speech for Machines"

1.1. The Goal: Redefining Truthful Communication

The "Right Speech for Machines" program is rooted in universal principles of ethical communication, drawing inspiration from concepts like the Buddhist principle of "Right Speech" and the Hindu concept of Satya (truthfulness). Its primary goal is to redefine how AI communicates by asserting that how an AI speaks is as ethically important as what it says.

1.2. How It Works in Practice

This program translates its core goal into a set of practical actions for AI developers and designers.

1. Changing the Prompt: CET-AI develops standards that shape an AI's behavior before it even generates a response. Well-designed ethical prompts encourage the AI to acknowledge ambiguity and to separate factual claims from value judgments from the very start.

◦ Replace: “Give me the correct answer”

◦ With: “Present the best-supported interpretations and their limits”

2. Framing the Response: Even factually correct information can be distorted by how it is framed. This program requires AI responses to clearly distinguish between fact, inference, and opinion, and to avoid emotionally manipulative language or oversimplification that erases important nuance.

3. Teaching AI to Refuse: Within this framework, refusal is not a failure but a sign of ethical restraint. CET-AI defines clear standards for when and how an AI should gracefully and respectfully refuse a prompt, explaining its reasons in a way that orients the user toward understanding, not control.

4. Controlling the Tone: When an AI's knowledge is incomplete, its tone is critical. This program discourages an authoritative tone that masks uncertainty. Instead, an AI should be taught to sound cautious when it is uncertain, inviting verification and encouraging human judgment.
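To make the framing and tone requirements more concrete, here is a minimal Python sketch under stated assumptions. It is not a CET-AI standard: the `Claim` and `ClaimKind` types, the numeric confidence score, the 0.7 `CAUTION_THRESHOLD`, and the hedging phrases are all hypothetical choices made for illustration. Each claim in a response carries a fact, inference, or opinion label, and claims below the confidence threshold are worded cautiously and invite verification.

```python
from dataclasses import dataclass
from enum import Enum


class ClaimKind(Enum):
    """Hypothetical labels mirroring the fact / inference / opinion distinction."""
    FACT = "Fact"
    INFERENCE = "Inference"
    OPINION = "Opinion"


@dataclass
class Claim:
    text: str
    kind: ClaimKind
    confidence: float  # assumed score in [0, 1]; how it is estimated is out of scope


# Hypothetical cut-off: below it, the wording switches to a cautious tone.
CAUTION_THRESHOLD = 0.7


def render_claim(claim: Claim) -> str:
    """Label a claim's kind and hedge its wording when confidence is low."""
    label = f"[{claim.kind.value}]"
    if claim.confidence < CAUTION_THRESHOLD:
        # Cautious tone: signal uncertainty and invite the user to verify.
        return f"{label} It is possible that {claim.text} (uncertain; please verify)."
    # Confident tone: state the claim plainly, capitalizing its first letter.
    return f"{label} {claim.text[:1].upper()}{claim.text[1:]}."


def render_response(claims: list[Claim]) -> str:
    """Render each claim on its own line so the reader can see its status."""
    return "\n".join(render_claim(c) for c in claims)


if __name__ == "__main__":
    print(render_response([
        Claim("water boils at 100 degrees Celsius at sea level", ClaimKind.FACT, 0.99),
        Claim("the new policy will reduce costs", ClaimKind.INFERENCE, 0.5),
        Claim("the second design is easier to read", ClaimKind.OPINION, 0.9),
    ]))
```

Run as a script, this prints the fact as a plain labeled statement, the low-confidence inference as "[Inference] It is possible that the new policy will reduce costs (uncertain; please verify).", and the opinion with an explicit "[Opinion]" label. A real system would also need a trustworthy way to estimate confidence; the sketch simply assumes one exists.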

Ultimately, this program teaches an AI to communicate with an integrity that invites human collaboration rather than demanding blind trust.

While "Right Speech" governs the expression of knowledge, CET-AI's next program addresses the AI's core epistemic posture: how it understands and represents its own limitations.

2. Program Two: "AI as Student, Not Oracle"

2.1. The Goal: Shifting the Metaphor

This program aims to challenge the dominant metaphor of AI as an all-knowing oracle that delivers final answers. CET-AI proposes a more honest and useful alternative: AI as a "disciplined student—curious, humble, and revisable."

2.2. How It Works in Practice

Treating AI as a student rather than an oracle provides clear benefits for human learning and critical thinking.

1. Showing Its Work: A good student shows their reasoning. This program requires AI systems to explain how they reached a conclusion, reveal their assumptions, and make their uncertainty visible. This transparency supports human learning and error detection, because "an answer without reasoning trains dependency, not understanding."

2. Asking Clarifying Questions: A truth-seeking system doesn't rush to answer. This program promotes AI that mirrors scholarly inquiry by asking for more context, requesting missing information, or pointing out ambiguity in a question before reaching a conclusion.

3. Admitting "I Don't Know": CET-AI treats uncertainty as a truthful state, not a weakness or a technical failure. An honest AI must be able to state its own limits clearly by saying things like "The evidence is mixed," "Experts disagree," or "I don't have sufficient information."

4. Highlighting Disagreement: On contested topics, creating a false consensus is a form of deception. This program requires the AI to present multiple legitimate perspectives and explain why the disagreement persists, rather than flattening differences into a single, oversimplified answer. This is especially critical in domains like ethics, law, religion, public policy, and medicine.
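The four practices above can be read as an ordered decision about how to respond. The Python sketch below is a hypothetical illustration, not CET-AI's method: the `Assessment` and `Position` types and the `respond` function are invented names, and the sketch assumes the system already knows what context is missing and which supported positions exist, which is the genuinely hard part in practice.

```python
from dataclasses import dataclass, field


@dataclass
class Position:
    """One supported answer together with the reasoning behind it."""
    summary: str
    reasoning: str


@dataclass
class Assessment:
    """Hypothetical inputs a system is assumed to have before answering."""
    missing_context: list[str] = field(default_factory=list)
    positions: list[Position] = field(default_factory=list)


def respond(assessment: Assessment) -> str:
    # Asking clarifying questions: do not conclude while key context is missing.
    if assessment.missing_context:
        return "Before answering, could you clarify: " + "; ".join(assessment.missing_context) + "?"
    # Admitting "I don't know": no supported position means no confident answer.
    if not assessment.positions:
        return "I don't have sufficient information to answer this."
    # Highlighting disagreement: list every supported position, no false consensus.
    if len(assessment.positions) > 1:
        lines = ["The evidence is mixed. The main positions are:"]
        lines += [f"- {p.summary} (reasoning: {p.reasoning})" for p in assessment.positions]
        return "\n".join(lines)
    # Showing its work: give the single conclusion together with its reasoning.
    only = assessment.positions[0]
    return f"{only.summary} Reasoning: {only.reasoning}"
```

The order of the checks is itself a design choice: the sketch asks for missing context before admitting ignorance, on the assumption that more context might make an answer possible.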

This shift in metaphor is crucial: it reframes the human-AI interaction from one of passive consumption to active critical engagement.

Revising an AI's internal design to be more humble is foundational, but this humility is truly tested when its commitment to truth conflicts with powerful external pressures, which CET-AI's third program directly confronts.

3. Program Three: "Truth vs. Utility Dilemmas"

3.1. The Goal: Tackling the Hardest Cases

This flagship program addresses the most difficult situations—those where honesty competes with other powerful incentives like comfort, profit, or political pressure. It is guided by the principle that honesty must be defended precisely when it is most difficult:

If truth only survives when convenient, it is already lost.

3.2. How It Works in Practice

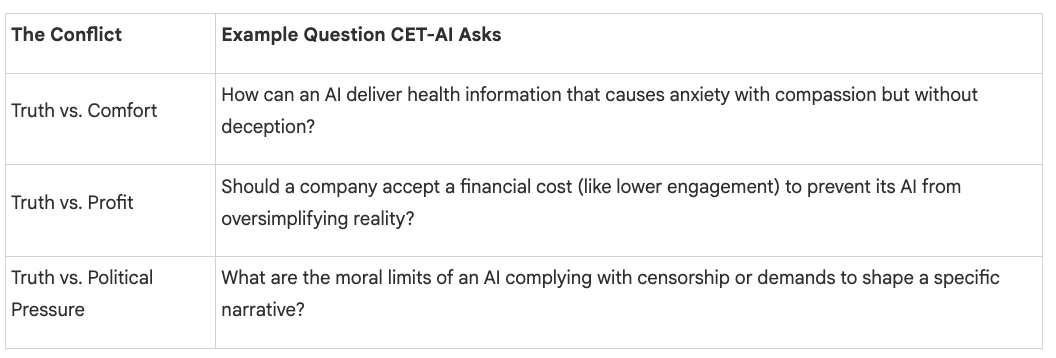

This program produces tangible outputs like design guidelines, case studies, training modules, and public reports to make its findings actionable for developers and policymakers. It explores the core conflicts between honesty and competing incentives such as comfort, profit, and political pressure through structured inquiries built around difficult questions.

By addressing communication, cognitive posture, and real-world ethical conflicts, these three programs form a complete architecture for transforming AI from a tool of persuasive authority into a partner in responsible inquiry.

4. Conclusion: From Abstract Ideas to Actionable Change

CET-AI’s flagship programs are more than just a list of best practices. They are designed to transform how AI is built and perceived. Their ultimate goal is to:

• Shape AI company culture, not just ensure legal compliance.

• Influence the engineers and designers who build these complex systems.

• Make the abstract concept of "truth" a visible and actionable goal.

These initiatives are all built on one foundational principle that serves as a final, crucial takeaway for anyone building, using, or regulating artificial intelligence.

An intelligent system that cannot restrain itself, question itself, or admit uncertainty is not intelligent enough to be trusted.