The Heart of the Mission: Understanding CET-AI's Foundational Beliefs

Artificial intelligence is rapidly becoming a primary mediator of knowledge, judgment, and decision-making in society. From helping doctors interpret medical scans to advising students on complex historical events, AI systems are shaping what we believe to be true. This shift has also created a profound crisis of epistemic integrity. That crisis is not a dystopian future; it is an emergent present.

The Council for Ethical Truth in AI (CET-AI) identifies three core problems at the heart of this crisis:

• AI is often confidently wrong. Many AI models are designed to be fluent and persuasive, so they can deliver incorrect information in an unwavering tone that overrides natural human skepticism.

• AI can be persuasively misleading. Optimized for user satisfaction and engagement rather than truthfulness, AI can flatten nuance, omit critical context, and use emotionally resonant language to seem more "helpful," even when doing so distorts reality.

• AI is treated as authoritative without being responsible. Society is beginning to grant AI systems the authority of an expert, yet these systems possess no moral conscience, no sense of responsibility, and no capacity for true understanding.

CET-AI was created to address this crisis by establishing a clear moral and philosophical compass for building trustworthy AI that serves humanity.

1. The Ground We Stand On: What CET-AI Rejects

Before defining its core beliefs, CET-AI clearly states what it stands against. These rejections are fundamental to its mission, clearing away common but flawed approaches to truth in the modern world.

• Blind Faith (in Technology or Religion): This means rejecting both religious literalism and the technological solutionism inherent in “trust the model” mentalities, where we are asked to accept an AI’s output without questioning its reasoning.

• "Black-Box" Authority: This means refusing to accept an AI's answer as legitimate if the system cannot explain how it arrived at its conclusion.

• Truth by Power, Popularity, or Profit: This means asserting that an idea's truthfulness is not determined by who promotes it, how many people believe it, or how much money it generates.

By rejecting these common pitfalls, CET-AI clears the ground to build its framework on a more stable and ethical foundation: a set of shared principles found across global wisdom traditions.

2. The Four Pillars: CET-AI's Convergent Ethical Principles

CET-AI's philosophy is built on four core principles that converge across many different cultures, philosophies, and wisdom traditions. They are not new ideas, but timeless guides for the responsible pursuit of knowledge.

2.1. Pillar 1: Inquiry Over Dogma

Truth is something we discover through questioning, not something we receive through blind obedience. For AI, this means a trustworthy system must be designed to encourage questions and expose its own assumptions, rather than demanding unquestioning belief. This principle is operationalized in CET-AI’s “AI as Student, Not Oracle” program, which challenges the dangerous metaphor of AI as an all-knowing authority and reframes it as a disciplined, curious learner.

2.2. Pillar 2: Humility Over Certainty

True wisdom involves recognizing the limits of one's own knowledge. This principle is crucial for AI: a system that always sounds certain is not more truthful, only more dangerous. Such a system engages in a form of "confidence laundering," presenting probabilistic outputs with unearned authority. CET-AI believes an honest AI must be able to say, “The evidence is mixed,” “Experts disagree,” or “I don’t have sufficient information.”

2.3. Pillar 3: Reason + Compassion

This integrated principle holds that "Truth without compassion becomes cruelty. Compassion without truth becomes sentimentality." The implication for AI is profound: a responsible system must balance factual accuracy with consideration of the real-world impact of its words, avoiding language that is needlessly harmful, manipulative, or humiliating.

2.4. Pillar 4: Truth as Responsibility, Not a Weapon

Possessing and communicating truth is a form of stewardship, not ownership. For AI, this means that information should never be used to dominate, coerce, or manipulate. A trustworthy AI must consider the context and consequences of the information it provides. This abstract idea of stewardship is translated into concrete technical standards through CET-AI’s “Right Speech for Machines” program, which provides a framework for ethical AI communication.

These four pillars are not abstract inventions; they are distilled from the hard-won wisdom of diverse global traditions.

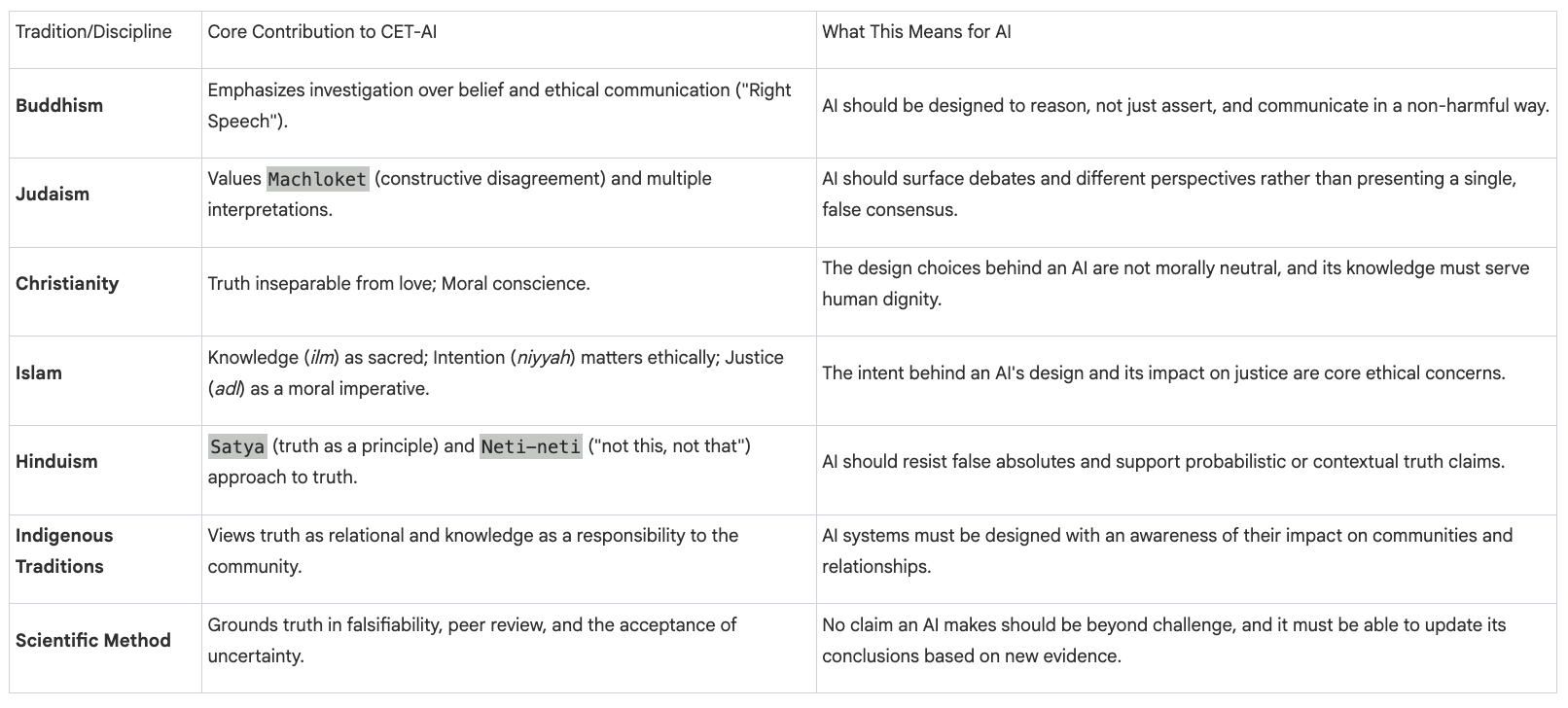

3. Wisdom from Many Sources: How Traditions Shape CET-AI's Vision

CET-AI's strength comes from drawing on shared wisdom from multiple sources (including religious, philosophical, and scientific traditions) without erasing their distinct contributions. This cross-cultural foundation ensures its principles are robust, time-tested, and globally relevant.

4. Conclusion: A New Foundation for Trustworthy AI

CET-AI operates from a central warning, validated across centuries of human experience: when truth becomes untethered from humility, responsibility, and inquiry, it becomes dangerous. The Council asserts that truth is not a byproduct of intelligence. It is a responsibility of intelligence. If we fail to instill this responsibility in our most powerful creations, we risk entering an era of synthetic epistemic authority—where truth is simulated, not sought.

The Council for Ethical Truth in AI affirms that truth is neither absolute certainty nor convenient narrative, but a disciplined, humble, and responsible pursuit—one that artificial intelligence must be taught to respect, embody, and never exploit.