Launching AI Products in 2026: The Definitive Guide

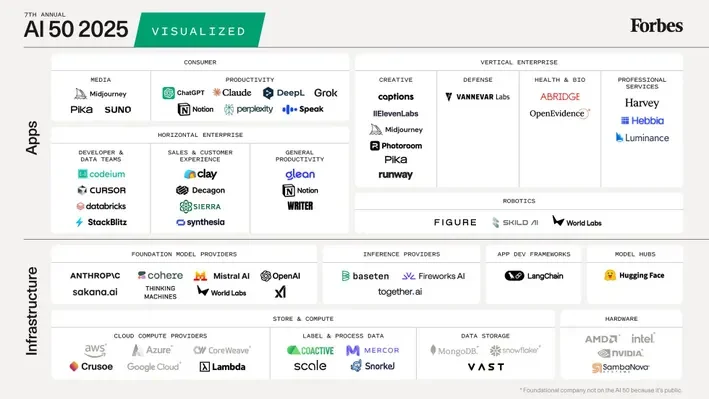

The 2025 edition of the Forbes AI 50 list shows how companies are using agents and reasoning models to start replacing, at scale, work previously done by people.

Artificial intelligence entered a new phase in 2025. After years of AI tools mostly answering questions or generating content on command, the year's innovations were about AI actually getting work done. The 2025 Forbes AI 50 list illustrates this pivotal shift: the startups on it signal a move from AI that merely responds to prompts to AI that solves problems and completes entire workflows. For all the buzz around big AI model makers like OpenAI, Anthropic, and xAI, the biggest changes to the story in 2025 came from application-layer tools that use AI to produce real business results.

Now imagine you were responsible for marketing these AI products to developers, executives, and general consumers. How would you go about it? This essay aims to demystify go-to-market (GTM) strategy for each of these audiences and to outline the key differences between SaaS products and AI products, with the goal of avoiding the pitfalls of vanity metrics and creating truly viral products.

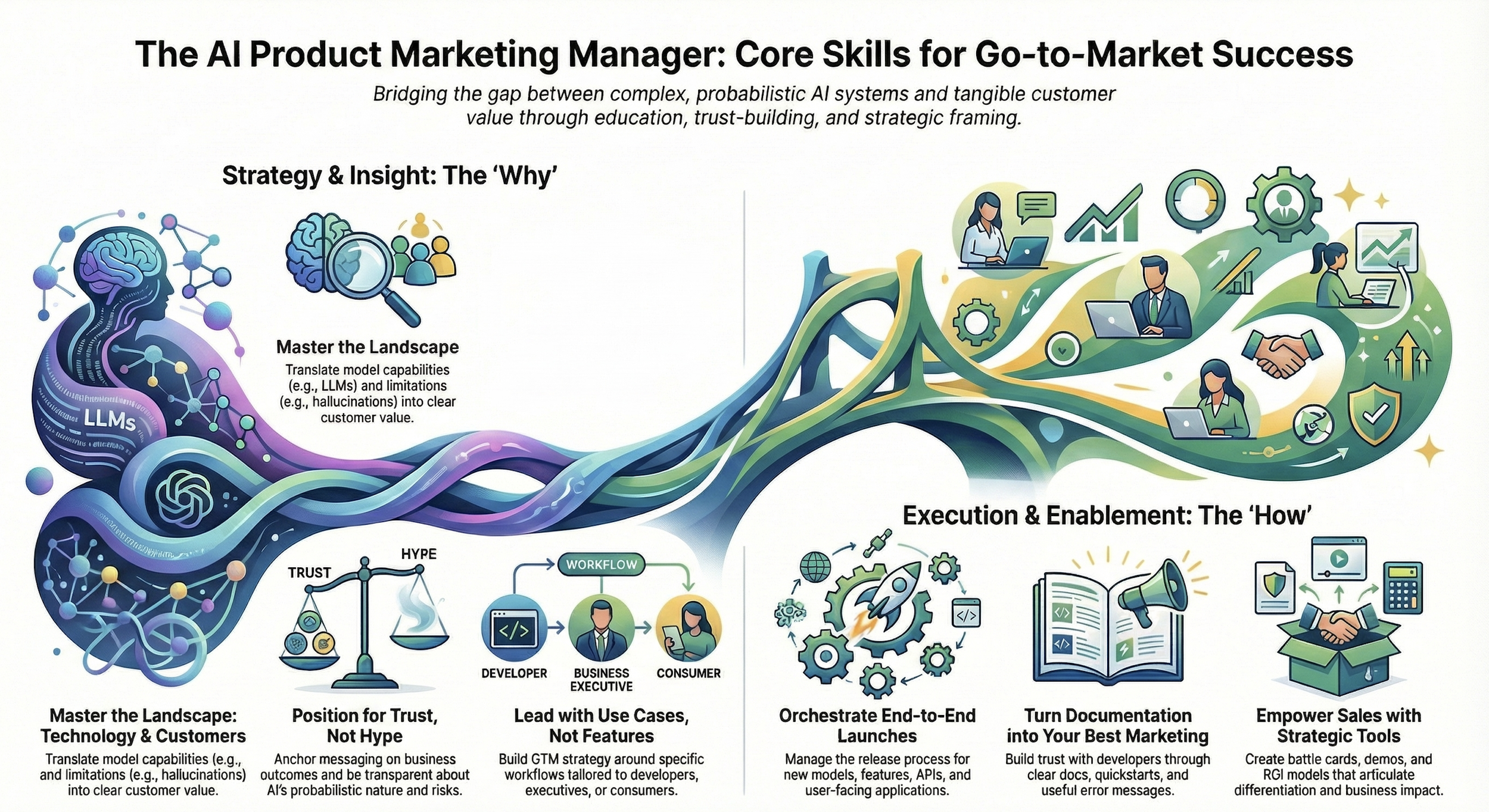

Product marketing has always sat at the intersection of product, market, and growth. In AI products, that intersection becomes sharper—and more fragile.

AI PMMs are not just responsible for messaging and launches. They are responsible for translation: translating probabilistic systems into customer value, translating research progress into market readiness, and translating usage signals into meaningful measures of success.

This essay explores what product marketing looks like in AI products, how go-to-market (GTM) strategy must adapt, and which metrics actually indicate adoption and value.

1. Why Product Marketing Is Harder in AI

AI products differ from traditional SaaS in several fundamental ways:

They are probabilistic, not deterministic

The same input can produce different outputs, which complicates expectations and trust.

They are capability-dense

A single model can support dozens of use cases, making positioning harder.

They evolve continuously

Models, prompts, and system behavior change faster than typical feature cycles.

They require user education

Value depends heavily on how well users understand how to interact with the system.

Because of this, AI product marketing is less about persuasion and more about sense-making.

The PMM’s job is not to convince customers that the product is powerful—but to help them understand when, how, and why it is useful.

2. Core Responsibilities of Product Marketing in AI

While the foundational PMM responsibilities remain—positioning, launches, enablement, adoption—the emphasis shifts.

1. Positioning Under Uncertainty

In AI, positioning cannot be built on absolutes (“accurate,” “autonomous,” “human-level”). Those claims break trust quickly.

Instead, strong AI positioning:

Anchors on outcomes, not capabilities

Makes constraints explicit

Differentiates on reliability, workflow fit, and trust

Effective positioning follows a Value → Capability → Proof structure:

Value: What meaningful outcome does the customer achieve?

Capability: What does the AI system do to enable that outcome?

Proof: Evidence—benchmarks, pilots, customer results—that the system performs in context.

This structure prevents overclaiming while maintaining credibility.

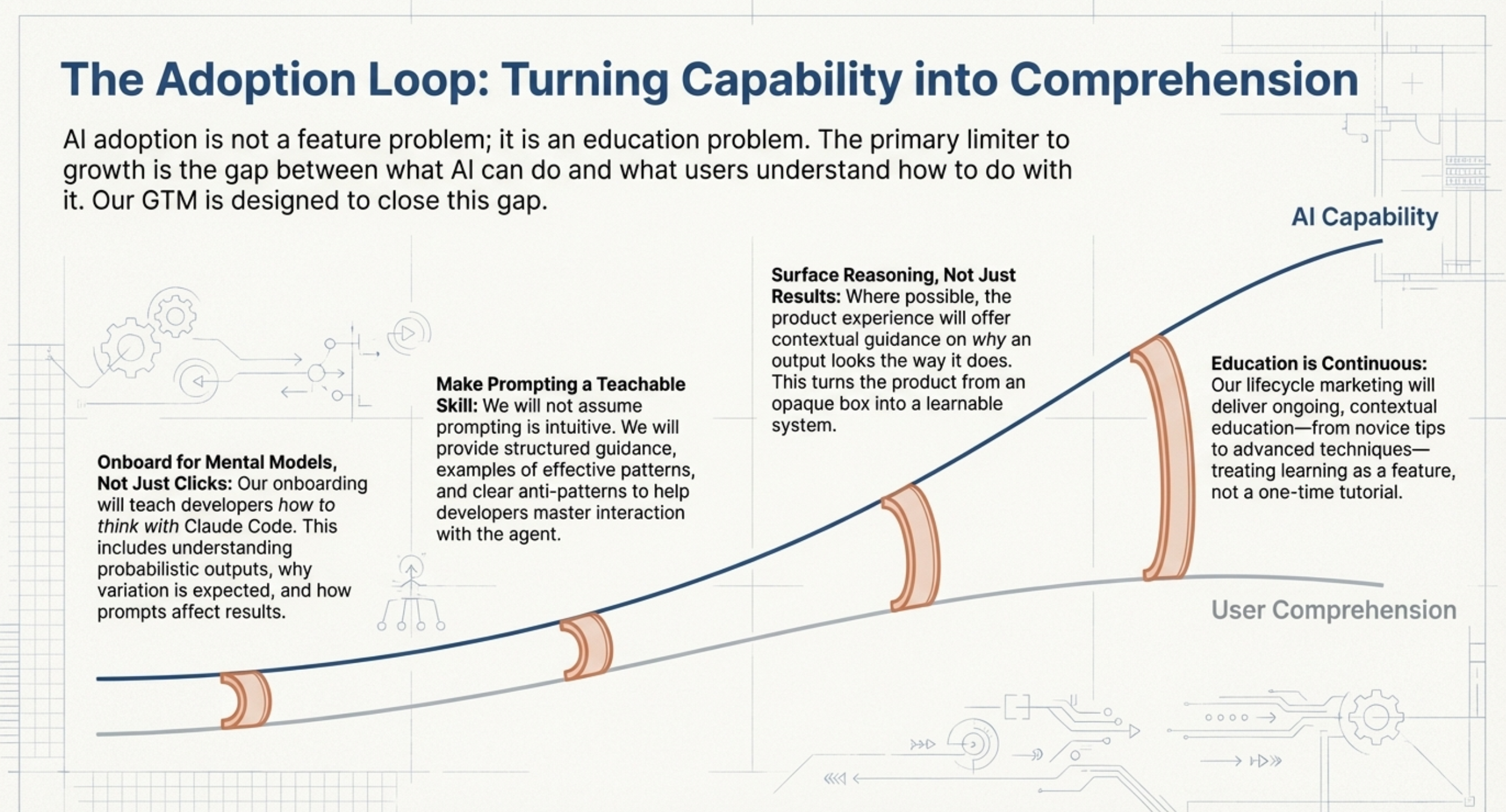

2. Education as a GTM Lever

In AI, adoption is limited less by features than by understanding.

Product marketing must own:

Mental model education (how the AI behaves)

Prompting guidance and examples

Explanation of variability and failure modes

Clear articulation of when human judgment is required

Education is not documentation alone—it is:

Onboarding flows

Demos

Sales narratives

Use-case playbooks

AI PMMs who treat education as a first-class GTM strategy outperform those who treat it as support.

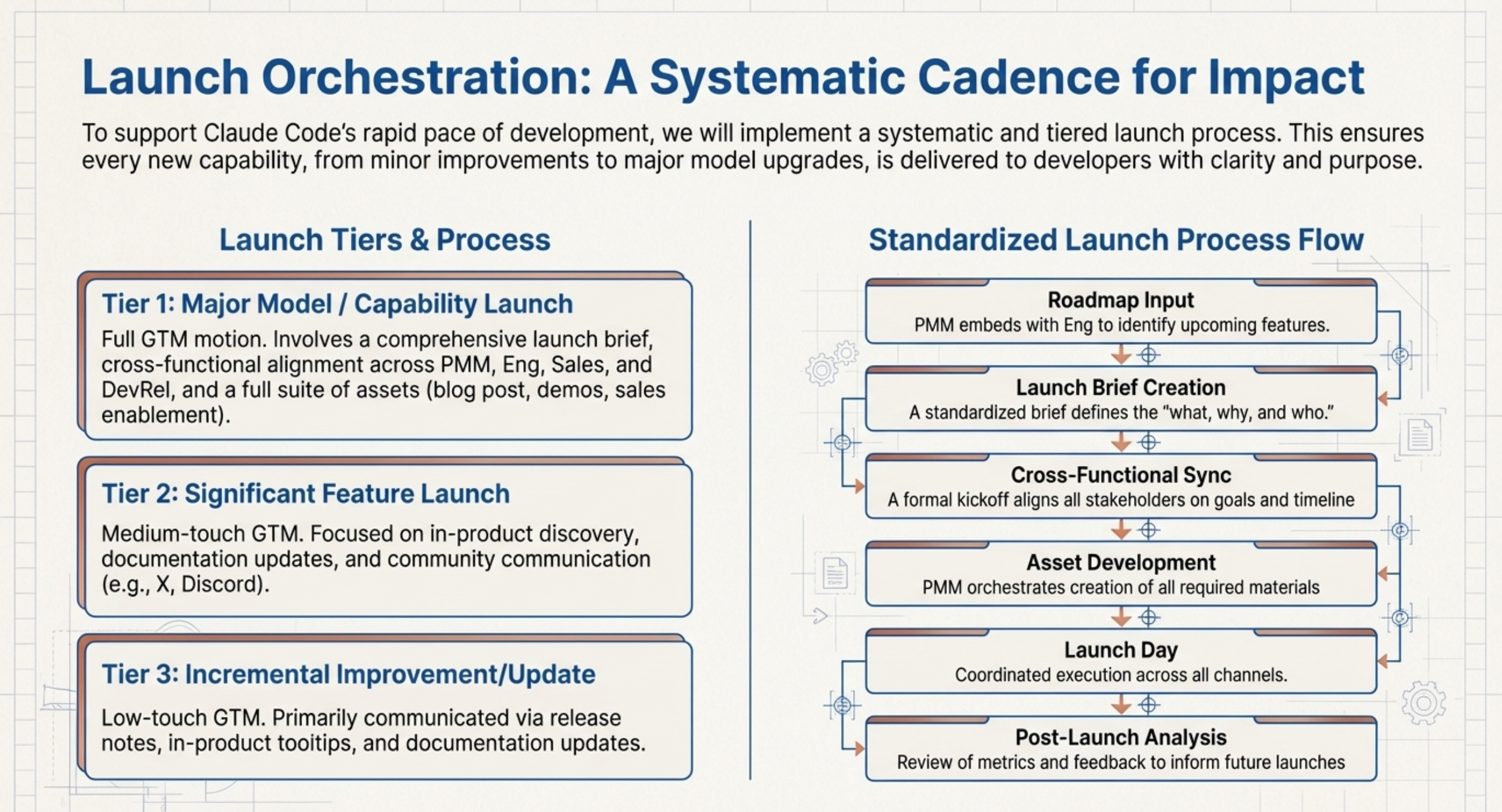

3. Launches in a World of Continuous Change

AI launches are rarely “big bang” events. More often, they are:

Model upgrades

New workflows

Expanded context windows

Reliability or cost improvements

This requires PMMs to:

Reframe launches around user impact, not technical change

Segment messaging by audience (developers, operators, buyers)

Prepare internal teams to explain what did not change as much as what did

A successful AI launch answers:

“What is now possible that wasn’t before—and for whom?”

3. Go-to-Market Strategy for AI Products

AI GTM strategy must reflect how AI is discovered, evaluated, and adopted.

1. Bottom-Up Meets Top-Down

Most AI products follow a hybrid GTM motion:

Bottom-up: Developers or practitioners experiment, test, and prototype

Top-down: Buyers and executives approve spend, risk, and scale

Product marketing must serve both paths:

Clear docs, examples, and quickstarts for builders

Outcome-driven narratives, risk framing, and ROI for buyers

Misalignment between these layers is a common failure mode.

2. Use-Case-Led GTM

Because AI systems are flexible, customers struggle to see relevance unless use cases are concrete.

Strong AI GTM strategies lead with:

Specific workflows (“ticket triage,” “contract review”)

Clear before/after comparisons

Evidence of repeatability

This reduces cognitive load and accelerates time-to-value.

3. Trust as a GTM Advantage

In crowded AI markets, trust becomes a differentiator.

PMMs must:

Avoid inflated claims

Communicate uncertainty honestly

Explain safeguards and governance clearly

Trust compounds adoption—especially in enterprise and regulated environments.

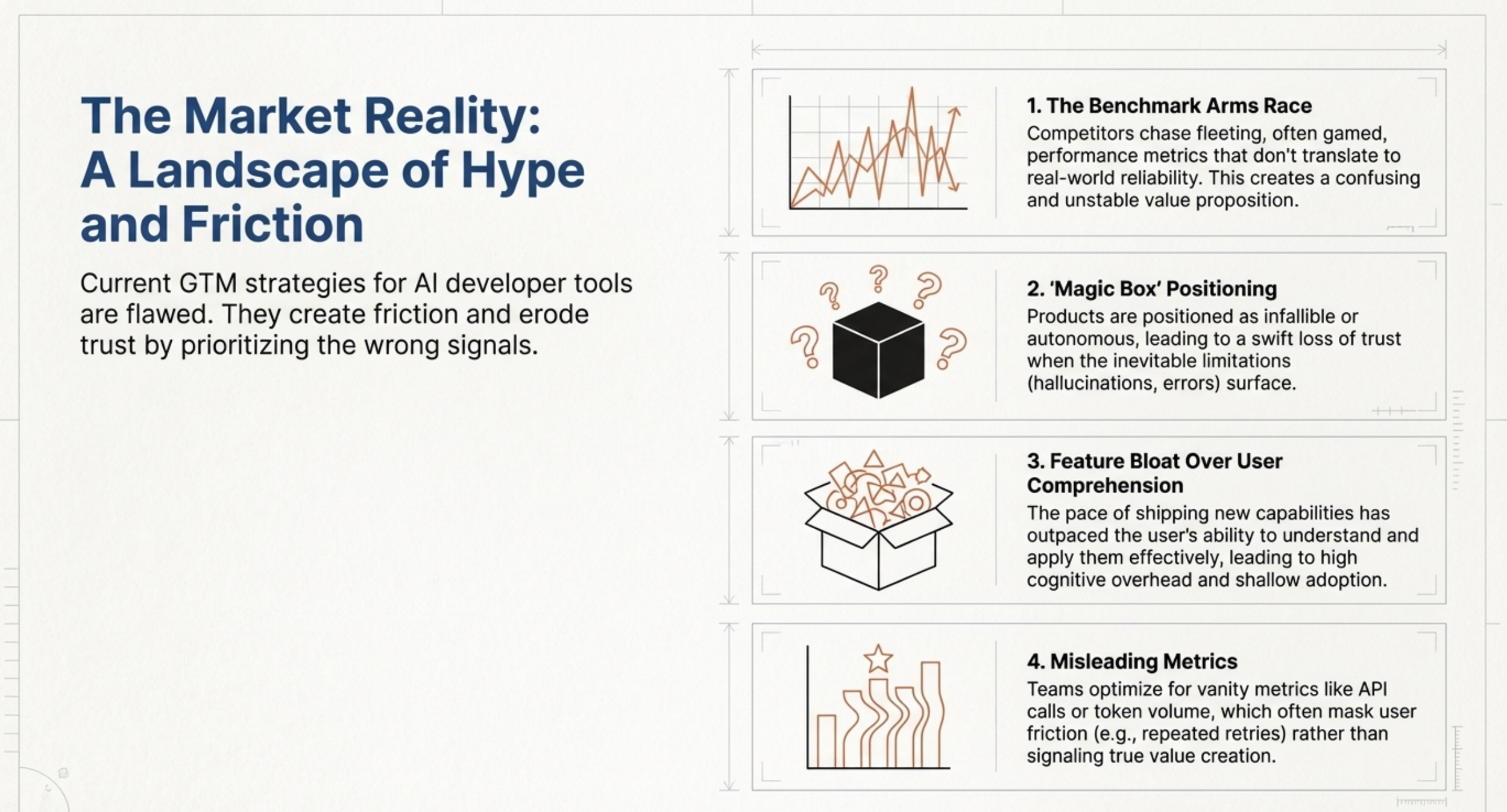

4. Metrics: Measuring What Actually Matters in AI

One of the biggest mistakes AI teams make is measuring success the same way they measure SaaS.

Model usage is not customer value.

AI PMMs must help redefine success metrics around outcomes, confidence, and learning.

1. Activation Metrics in AI

Activation is not “first use.” It is first reliable value.

Good activation signals include:

First accepted output

Time to first useful result

Reduction in retries

Evidence the user understands how to reproduce success

If a user interacts but does not understand why the output worked, they are not activated.
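As an illustration of how these activation signals might be instrumented, here is a minimal Python sketch that computes time to first accepted output, retries before that first acceptance, and whether the user repeated the success. The `Event` schema, its `"request"`/`"accept"` kinds, and the rule that two accepted outputs indicate reproducible success are hypothetical assumptions, not a standard analytics format.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class Event:
    """One hypothetical product event for a single user."""
    timestamp: datetime
    kind: str  # assumed values: "request" (user asks the AI) or "accept" (user keeps the output)


@dataclass
class Activation:
    time_to_first_accept: Optional[timedelta]  # None if nothing was ever accepted
    retries_before_first_accept: int
    reproduced_success: bool  # assumption: 2+ accepted outputs means success was repeatable


def activation_metrics(events: list[Event]) -> Activation:
    """Derive activation signals from one user's event log."""
    events = sorted(events, key=lambda e: e.timestamp)
    if not events:
        return Activation(None, 0, False)

    start = events[0].timestamp
    requests_before_accept = 0
    first_accept: Optional[datetime] = None
    for event in events:
        if event.kind == "accept":
            first_accept = event.timestamp
            break
        if event.kind == "request":
            requests_before_accept += 1

    accepted_count = sum(1 for e in events if e.kind == "accept")
    return Activation(
        time_to_first_accept=first_accept - start if first_accept is not None else None,
        # Every request after the first one, up to the first accept, counts as a retry.
        retries_before_first_accept=max(requests_before_accept - 1, 0),
        reproduced_success=accepted_count >= 2,
    )


# Example: two attempts, then an accepted output; the user repeats the success a day later.
t0 = datetime(2026, 1, 5, 9, 0)
log = [
    Event(t0, "request"),
    Event(t0 + timedelta(minutes=2), "request"),
    Event(t0 + timedelta(minutes=3), "accept"),
    Event(t0 + timedelta(days=1), "request"),
    Event(t0 + timedelta(days=1, minutes=1), "accept"),
]
print(activation_metrics(log))
```

Measuring from the user's first event rather than from sign-up is a deliberate simplification; a team could just as reasonably start the clock at account creation.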

2. Retention Metrics in AI

Retention is not frequency—it is repeat reliance.

Better retention signals include:

Repeated use of the same use case

Reduced verification or manual override

Increased automation

Expansion into adjacent tasks

A highly retained AI product may show lower usage over time as workflows become efficient.

3. Churn Signals in AI

AI churn is often silent and trust-based.

Early churn indicators include:

Increased retries without improvement

Avoidance of high-impact tasks

Declining output acceptance

Continued use without commitment or automation

PMMs should treat these as leading indicators long before accounts cancel.
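These indicators can be combined into a simple early-warning rule. The Python sketch below assumes hypothetical weekly per-account aggregates (requests, retries, accepted outputs) and compares the latest week with the first week of the window; the 10-point thresholds are arbitrary placeholders that a team would need to calibrate against accounts that actually churned.

```python
from dataclasses import dataclass


@dataclass
class WeekStats:
    """Hypothetical weekly aggregates for one account."""
    requests: int
    retries: int
    accepted: int

    @property
    def acceptance_rate(self) -> float:
        return self.accepted / self.requests if self.requests else 0.0

    @property
    def retry_rate(self) -> float:
        return self.retries / self.requests if self.requests else 0.0


def churn_warnings(weeks: list[WeekStats],
                   max_acceptance_drop: float = 0.10,
                   max_retry_rise: float = 0.10) -> list[str]:
    """Compare the latest week with the first week of the window.

    The default thresholds are illustrative assumptions, not benchmarks.
    """
    if len(weeks) < 2:
        return []
    first, last = weeks[0], weeks[-1]
    warnings = []
    if first.acceptance_rate - last.acceptance_rate > max_acceptance_drop:
        warnings.append("declining output acceptance")
    # Retries rising while acceptance fails to improve: users are struggling, not iterating productively.
    if (last.retry_rate - first.retry_rate > max_retry_rise
            and last.acceptance_rate <= first.acceptance_rate):
        warnings.append("increased retries without improvement")
    return warnings


history = [WeekStats(100, 10, 60), WeekStats(90, 20, 45), WeekStats(80, 30, 36)]
print(churn_warnings(history))
```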

4. Business Value Metrics

Ultimately, AI PMMs must connect product usage to business outcomes:

Time saved

Cost reduced

Errors prevented

Decisions improved

Revenue influenced

These metrics matter far more than tokens, sessions, or clicks.

5. The PMM as the Translator-in-Chief

In AI organizations, PMMs play a uniquely critical role.

They translate:

Research into relevance

Capabilities into outcomes

Metrics into meaning

They help teams avoid:

Overclaiming

Misaligned GTM

Vanity metrics

And they ensure that as models improve, customers actually benefit.

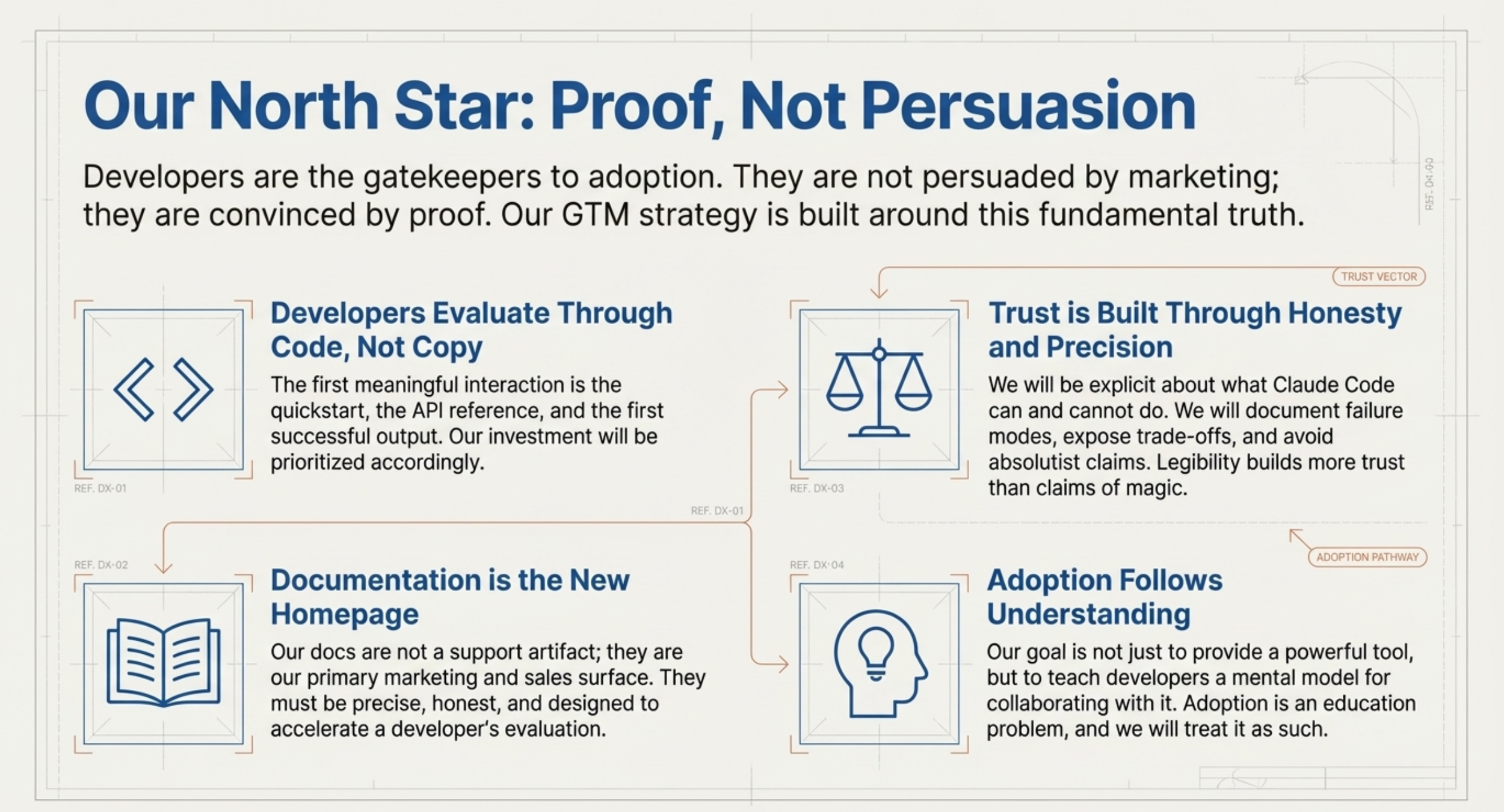

TARGETING Developers

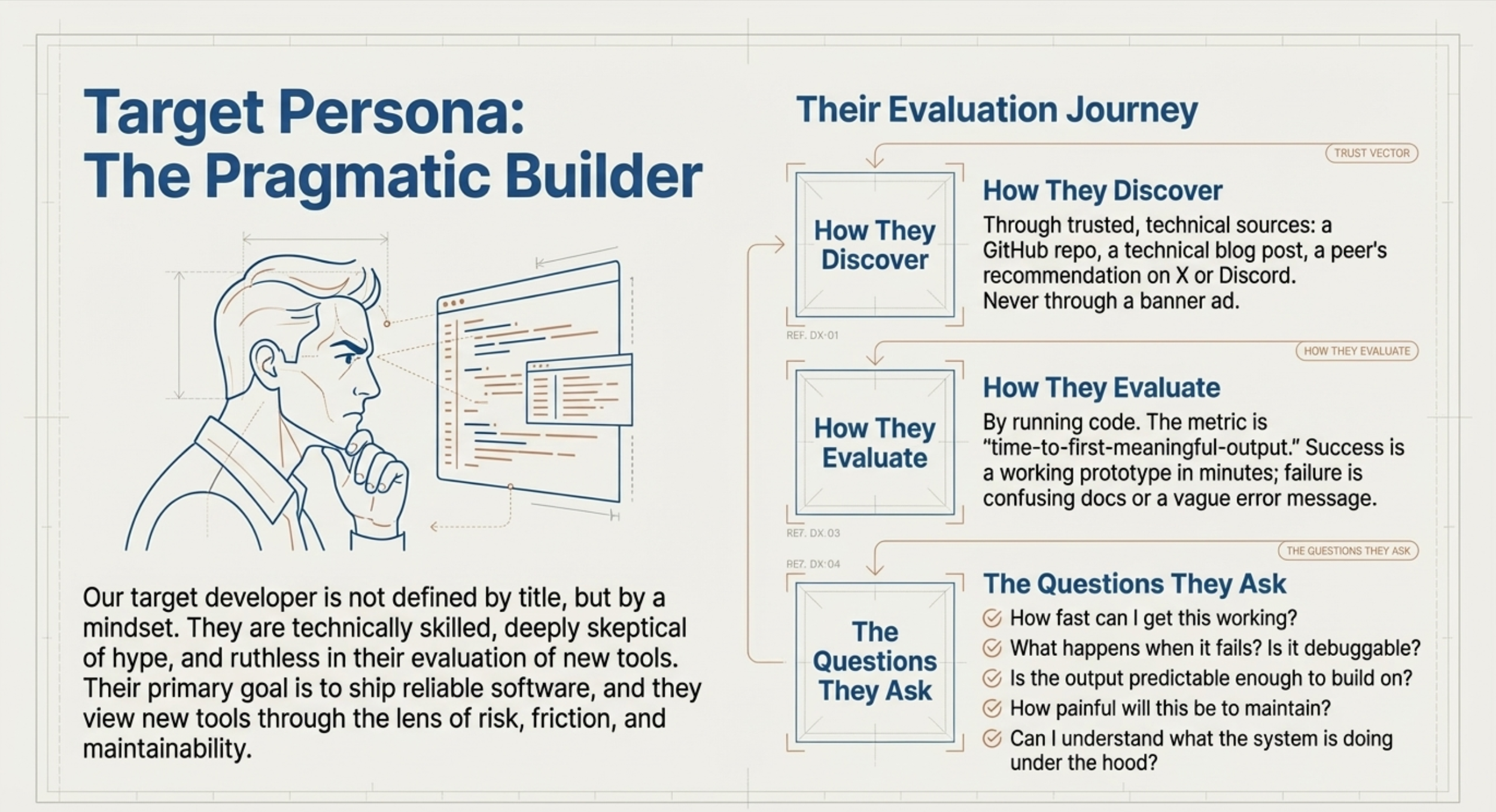

When developers are the ideal customer profile (ICP), go-to-market strategy stops looking like marketing and starts looking like product design.

This is especially true in AI.

AI products are not adopted because of persuasion. They are adopted because a developer tries something, sees that it works, trusts it enough to depend on it, and then builds real systems on top of it. Every step in that sequence is technical, experiential, and unforgiving.

When developers are the ICP, GTM is not a funnel—it is a confidence-building pipeline.

This essay explains how GTM strategy must change when developers are the primary buyers, users, and advocates of AI products—and why most traditional SaaS GTM playbooks fail in this context.

1. Developers Do Not “Buy” AI Products—They Validate Them

In developer-led AI GTM, the first sale does not happen through a contract. It happens through a successful experiment.

A developer asks:

Can I get this working quickly?

Does it behave the way I expect?

Can I debug it when it fails?

Does it fit my architecture and constraints?

If the answer to any of those is “no,” the GTM motion ends immediately—often without feedback.

This is why developer GTM for AI must prioritize:

Speed to first meaningful output

Predictability of behavior

Clarity of failure modes

Ease of iteration

Traditional GTM optimizes for awareness and conversion. Developer GTM optimizes for verification.

2. In AI, Trust Is Earned Through Interfaces, Not Messaging

AI systems are probabilistic. Outputs vary. Edge cases exist. Behavior changes as models evolve.

Developers are acutely sensitive to this.

They do not expect perfection—but they do expect legibility.

For developers, trust is built when:

Inputs map clearly to outputs

Parameters have understandable effects

Errors are explicit and actionable

Constraints are documented honestly

Performance trade-offs are visible

This makes GTM surfaces like:

API docs

Quickstarts

SDKs

Example repos

Error messages

far more important than:

Landing pages

Value propositions

Vision statements

When developers are the ICP, documentation is the primary GTM surface.
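To make “errors are explicit and actionable” concrete, here is a minimal Python sketch of an SDK-style exception that carries a stable error code, the numbers involved, and a suggested next step. The class name, error code, and 8,000-token limit are hypothetical and do not describe any particular vendor's SDK.

```python
class ContextLengthError(Exception):
    """Hypothetical SDK error raised before sending a request that cannot fit."""

    code = "context_length_exceeded"  # stable identifier that callers can branch on

    def __init__(self, prompt_tokens: int, limit: int) -> None:
        self.prompt_tokens = prompt_tokens
        self.limit = limit
        # The message states what happened, the numbers involved, and what to do next.
        super().__init__(
            f"[{self.code}] Prompt is {prompt_tokens} tokens but the limit is {limit}. "
            f"Remove at least {prompt_tokens - limit} tokens, for example by summarizing "
            "earlier turns or splitting the input into chunks."
        )


def check_prompt(prompt_tokens: int, limit: int = 8_000) -> None:
    """Fail fast and explicitly instead of silently truncating the input."""
    if prompt_tokens > limit:
        raise ContextLengthError(prompt_tokens, limit)


try:
    check_prompt(9_500)
except ContextLengthError as err:
    print(err)
```

The point of the design is that a developer reading the error never has to guess: the code is greppable, and the message says how far over the limit they are and what to try.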

3. Discovery Is Bottom-Up and Accidental

Developer-led AI GTM rarely begins with “campaigns.”

It begins with:

A GitHub repo

A code snippet shared on X

A blog post with real examples

A mention in a Discord or Slack

A benchmark comparison

This discovery is:

Non-linear

Peer-driven

Skeptical by default

As a result, GTM strategy must focus less on controlling the narrative and more on making the product easy to evaluate in isolation.

Developers assume:

“If this is good, I should be able to tell quickly.”

Your GTM either enables that judgment—or blocks it.

4. Use-Case Clarity Matters More Than Feature Breadth

AI products often have broad capability surfaces:

One model, many tasks

One API, many workflows

One system, many use cases

Developers do not experience this as flexibility. They experience it as ambiguity.

Strong GTM for developer ICPs is use-case-led, not capability-led.

That means:

Leading with concrete problems (“classify support tickets,” not “advanced NLP”)

Providing end-to-end examples, not isolated endpoints

Showing how the AI fits into a real system, not just how it works

The question developers ask is not:

“What can this model do?”

It is:

“What can I build with this today that will still work in six months?”

5. Friction Is the GTM Enemy—Especially in AI

AI GTM lives or dies by friction.

Common friction points that kill adoption:

Complicated authentication

Poor defaults

Unclear parameter interactions

Hidden costs (tokens, latency)

Vague performance guarantees

Silent failure modes

Every one of these is a GTM issue, not just a product issue.

When developers are the ICP:

Onboarding is GTM

API design is GTM

Error handling is GTM

Rate limits are GTM

Pricing transparency is GTM

You do not “message” your way out of friction. You remove it.

6. Pricing and Packaging Must Match Developer Mental Models

Developers reason about cost differently than buyers.

They care about:

Predictability

Marginal cost

Worst-case scenarios

How cost scales with usage

Whether experimentation is safe

Opaque or surprising pricing kills trust quickly—especially in AI, where token-based or usage-based pricing can escalate fast.

Effective developer GTM:

Makes pricing legible at the API call level

Provides cost estimation tools

Aligns pricing units with real workloads

Avoids forcing early commitments

If developers cannot safely experiment, they will not advocate.
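A cost estimation tool can start very small. The Python sketch below computes per-call cost and a worst-case monthly estimate from token counts; the per-million-token prices are placeholder assumptions rather than any provider's actual rates, and real pricing may add tiers, caching discounts, or minimums that this ignores.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    """Placeholder prices in USD per million tokens (assumed values, not real rates)."""
    input_per_million: float
    output_per_million: float


def cost_per_call(pricing: Pricing, input_tokens: int, output_tokens: int) -> float:
    """Cost of a single API call in USD."""
    return (input_tokens * pricing.input_per_million
            + output_tokens * pricing.output_per_million) / 1_000_000


def monthly_estimate(pricing: Pricing, calls_per_day: int, avg_input_tokens: int,
                     max_output_tokens: int, days: int = 30) -> float:
    """Worst-case monthly cost: assumes every call generates the maximum output length."""
    return cost_per_call(pricing, avg_input_tokens, max_output_tokens) * calls_per_day * days


pricing = Pricing(input_per_million=3.0, output_per_million=15.0)  # hypothetical rates
print(f"per call: ${cost_per_call(pricing, 2_000, 500):.4f}")
print(f"worst-case month at 1,000 calls/day: ${monthly_estimate(pricing, 1_000, 2_000, 500):,.2f}")
```

With these placeholder rates, a 2,000-token prompt with a 500-token output cap costs about $0.0135 per call, or about $405 in a worst-case month at 1,000 calls per day. Showing developers the worst case, rather than the average, is what makes experimentation feel safe.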

7. Adoption Is Earned Through Learning, Not Exposure

In AI products, adoption is not a function of exposure. It is a function of learning.

Developers must learn:

How to frame inputs

How to interpret outputs

How to mitigate variability

How to detect and handle failure

How to test and monitor behavior

GTM strategy must therefore include:

Educational docs

Example prompts and patterns

Anti-patterns and “what not to do”

Migration guides as models evolve

Clear explanations of breaking changes

This is why the best AI developer GTM feels closer to teaching than selling.

8. Metrics: What Matters When Developers Are the ICP

Traditional GTM metrics mislead in developer-first AI products.

What doesn’t work:

Raw API call volume

Token consumption

Session counts

Feature usage

These often measure confusion, retries, or inefficiency.

What does work:

Time to first successful build

First accepted output

Repeat use of the same workflow

Reduction in retries over time

Willingness to automate or depend on outputs

Expansion into additional use cases

The strongest signal of developer adoption is not activity—it is confidence.

When developers trust the system, usage often becomes quieter, not louder.

9. Bottom-Up GTM Still Needs Top-Down Support

Even when developers are the ICP, successful AI GTM eventually intersects with:

Security

Compliance

Procurement

Architecture review

GTM strategy must anticipate this transition.

That means:

Clear security documentation

Transparent data handling policies

Enterprise-ready deployment options

Honest discussion of limitations

Developers who trust your product become your strongest internal champions—but only if you give them the tools to defend it.

Developer GTM Is Earned, Not Broadcast

When developers are the ICP, GTM strategy must change at a foundational level.

You are not persuading.

You are not “creating demand.”

You are enabling judgment.

The best GTM strategy for AI products aimed at developers:

Reduces friction

Increases clarity

Teaches mental models

Exposes reality honestly

Respects skepticism

Developers do not want to be sold to.

They want something that works—and keeps working when it matters.

If your GTM helps them discover that truth quickly, adoption will follow.

TARGETING Enterprise Executives

When enterprise executives are the ideal customer profile (ICP), go-to-market strategy for AI products must change fundamentally.

Executives do not buy AI because it is impressive.

They buy it because it changes outcomes without introducing unacceptable risk.

Most AI GTM strategies fail at the executive level because they over-index on capability and under-index on decision-making psychology: accountability, risk exposure, organizational complexity, and trust. Executives are not evaluating whether an AI model is “state of the art.” They are evaluating whether adopting it will help them hit objectives without creating new liabilities.

This essay outlines how GTM strategy must be designed when AI products are sold to enterprise executives—what they care about, how they evaluate value, and how AI companies must structure positioning, sales motions, and metrics accordingly.

1. Executives Do Not Buy AI—They Buy Change

Enterprise executives do not purchase tools. They authorize organizational change.

When an executive evaluates an AI product, they are implicitly asking:

Will this materially improve a business outcome I am accountable for?

What risks does this introduce—technical, legal, reputational, operational?

How hard will this be to roll out across teams?

Who will be blamed if this fails?

Can this scale without constant intervention?

This framing matters. AI GTM to executives is not about feature adoption—it is about decision safety.

If your GTM narrative does not explicitly reduce perceived risk, no amount of technical differentiation will matter.

2. Capability-Based Positioning Fails at the Executive Level

A common AI GTM mistake is leading with capabilities:

Model size

Accuracy metrics

Benchmarks

Architecture

“Powered by X”

Executives do not have the context—or patience—to interpret these meaningfully. More importantly, capability-based positioning shifts risk onto them. If they greenlight a technically impressive product that later fails, they own the decision.

Effective executive GTM replaces capability-first messaging with outcome-first framing.

Instead of:

“Our AI model achieves 95% accuracy on benchmark Y”

Executives need:

“This reduces manual review time by 40% while maintaining auditability.”

The former invites scrutiny.

The latter invites discussion.

3. The Executive GTM Hierarchy: Outcome → Risk → Proof

When executives evaluate AI products, they follow a predictable mental sequence:

1. Outcome

What concrete business result does this drive?

Cost reduction

Revenue lift

Risk mitigation

Speed to decision

Capacity unlock

2. Risk

What could go wrong?

Incorrect outputs

Regulatory exposure

Data leakage

Brand damage

Organizational failure

3. Proof

Why should I believe this will work here?

Reference customers

Pilots

Metrics tied to real workflows

Guardrails and governance

AI GTM strategy must align to this hierarchy. Skipping or reordering it almost always fails.

4. Trust Is the Core Differentiator in Enterprise AI

In enterprise markets, trust is not a brand attribute—it is a buying criterion.

Executives have lived through:

Overhyped analytics tools

Failed digital transformations

Vendors that disappeared after contract signing

AI increases this skepticism because:

Outputs are probabilistic

Failures can be subtle

Errors may only surface after decisions are made

Effective GTM to executives therefore emphasizes:

What the AI will not do

Where human oversight remains

How uncertainty is handled

How errors are detected and mitigated

Ironically, honesty about limitations increases executive confidence. Overclaiming does the opposite.

5. Enterprise Executives Buy Systems, Not Features

Executives are not thinking in terms of “using a tool.” They are thinking in terms of embedding a system into their organization.

This means GTM must address:

Integration with existing workflows

Compatibility with governance and compliance processes

Change management and adoption

Ongoing operational ownership

AI GTM that ignores organizational reality is dismissed quickly.

Strong executive-facing GTM frames AI as:

An augmentation layer, not a replacement

A decision support system, not an autonomous actor

A controllable asset, not a black box

This reduces perceived disruption and increases the likelihood of buy-in.

6. The Buying Committee Is the Real ICP

When executives are the ICP, the buyer is plural.

Even if a C-level executive sponsors the purchase, decisions involve:

Legal

Security

IT

Compliance

Operations

Sometimes labor or ethics committees

Effective GTM anticipates this and equips the executive champion with:

Clear narratives for each stakeholder

Preemptive answers to common objections

Documentation that supports internal justification

AI GTM fails when it assumes executive authority overrides internal scrutiny. In reality, executives rely on evidence to defend their decisions internally.

7. Enterprise AI GTM Is Slow by Design

Unlike developer-led GTM, enterprise executive GTM is intentionally slow.

Executives value:

Predictability over speed

Pilot validation over demos

Incremental rollout over big bets

A strong GTM strategy embraces this by:

Designing pilots with clear success criteria

Structuring phased rollouts

Aligning milestones with executive reporting cycles

Setting expectations around timelines and learning

Trying to accelerate enterprise AI GTM through pressure or hype backfires. Executives interpret urgency as immaturity.
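Pilot success criteria work best when they are agreed before the pilot starts and checked mechanically afterward. The Python sketch below shows one way to encode that; the metric names and target values are hypothetical and would in practice come from the executive sponsor's KPIs.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Criterion:
    """One pre-agreed pilot success criterion (metric names below are hypothetical)."""
    metric: str
    target: float
    higher_is_better: bool = True

    def met(self, observed: float) -> bool:
        return observed >= self.target if self.higher_is_better else observed <= self.target


def evaluate_pilot(criteria: list[Criterion], results: dict[str, float]) -> dict[str, bool]:
    """Pass/fail per criterion; a metric that was never measured counts as a fail."""
    return {c.metric: (c.metric in results and c.met(results[c.metric])) for c in criteria}


# Criteria agreed with the executive sponsor before the pilot starts (illustrative values).
criteria = [
    Criterion("review_hours_reduction_pct", 25.0),
    Criterion("false_positive_rate_pct", 5.0, higher_is_better=False),
    Criterion("reviewer_acceptance_pct", 80.0),
]
results = {"review_hours_reduction_pct": 31.0, "false_positive_rate_pct": 6.2}
print(evaluate_pilot(criteria, results))
```

Treating an unmeasured metric as a failure is a deliberate choice: it forces the pilot plan to instrument every criterion up front.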

8. Metrics Executives Care About (and Those They Don’t)

Executives do not care about:

Model usage

Token counts

Session frequency

Feature adoption rates

They care about:

Time saved

Costs avoided

Errors reduced

Throughput increased

Decisions improved

Risk exposure lowered

AI GTM must translate product metrics into business metrics that align with executive KPIs.

For example:

“Reduced manual review hours by X per quarter”

“Lowered false positives by Y% in compliance checks”

“Accelerated reporting cycles by Z days”

If your GTM cannot tie AI performance to executive scorecards, it will stall.
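Translating product metrics into executive metrics is often plain arithmetic. The Python sketch below converts per-item review times into quarterly hours saved and a percentage reduction, the form of the first example above; all inputs are hypothetical pilot numbers.

```python
def quarterly_review_savings(items_per_quarter: int,
                             baseline_minutes_per_item: float,
                             assisted_minutes_per_item: float) -> tuple[float, float]:
    """Return (hours saved per quarter, percent reduction in review time).

    assisted_minutes_per_item should include the time spent checking the AI's output;
    otherwise the savings are overstated.
    """
    baseline_hours = items_per_quarter * baseline_minutes_per_item / 60
    assisted_hours = items_per_quarter * assisted_minutes_per_item / 60
    hours_saved = baseline_hours - assisted_hours
    pct_reduction = 100 * hours_saved / baseline_hours if baseline_hours else 0.0
    return hours_saved, pct_reduction


# Hypothetical pilot numbers: 6,000 documents per quarter, 10 min each before, 6 min with AI assistance.
saved, pct = quarterly_review_savings(6_000, 10, 6)
print(f"Reduced manual review hours by {saved:,.0f} per quarter ({pct:.0f}% less review time)")
```

With these inputs the function returns 400 hours saved per quarter and a 40% reduction in review time. Including verification time in the assisted figure keeps the claim defensible when the buying committee checks it.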

9. The Role of the PMM in Executive AI GTM

When selling to enterprise executives, PMMs play a critical role as narrative architects.

They must:

Shape outcome-oriented positioning

Ensure claims are defensible

Balance ambition with restraint

Translate technical reality into executive language

Protect long-term trust over short-term excitement

This is not traditional messaging. It is decision support.

The PMM’s success is measured not by how compelling the story sounds—but by how confidently executives can repeat it in a boardroom.

10. Why Many AI Products Stall After the First Deal

A common failure pattern in enterprise AI GTM:

Executive approves pilot

Initial excitement

Inconsistent results surface

Risk concerns escalate

Expansion stalls

This happens when GTM oversells early success and undersells ongoing operational complexity.

Sustainable enterprise GTM:

Sets expectations correctly

Frames AI as a learning system

Plans for iteration

Treats trust as cumulative, not assumed

Executives are willing to tolerate imperfection—but not surprises.

Executive GTM in AI Is About Reducing Decision Risk

When enterprise executives are the ICP, AI GTM strategy must center on decision confidence.

Executives are not asking:

“Is this AI impressive?”

They are asking:

“Can I responsibly stand behind this decision?”

The AI companies that succeed at the enterprise level are not the ones with the flashiest demos or the largest models.

They are the ones that:

Lead with outcomes

Acknowledge limitations

Make risk legible

Provide proof in context

Earn trust incrementally

In enterprise AI, GTM is not about convincing executives to believe.

It is about giving them the confidence to decide.

TARGETING General Consumers

When general consumers are the ideal customer profile (ICP), go-to-market (GTM) strategy for AI products becomes less about distribution and more about interpretation.

Consumers do not adopt AI products because they understand them.

They adopt them because they believe they know what will happen when they use them.

This distinction is critical.

Unlike enterprise buyers or developers, consumers lack both the technical context and the institutional safeguards that make AI’s uncertainty manageable. For consumer AI products, adoption hinges on whether people can form accurate mental models of what the product does, when it helps, and when it does not.

As a result, education is not a support function or a nice-to-have.

It is the core GTM strategy.

1. Consumer AI Is Evaluated Emotionally, Not Technically

General consumers do not evaluate AI products based on:

Benchmarks

Model architecture

Training data scale

Accuracy metrics

They evaluate based on:

Whether the product feels helpful

Whether it feels reliable

Whether it feels safe

Whether it makes them feel competent or confused

Consumer AI adoption is driven by perceived agency, not raw capability.

If users feel:

Misled by outputs

Confused by variability

Embarrassed by mistakes

Anxious about misuse

They churn quickly—and often permanently.

This is why GTM for consumer AI must focus less on what the AI can do and more on how it behaves and how users should relate to it.

2. The Central GTM Challenge: AI Violates Everyday Intuition

Most consumer products align with existing intuition.

A calculator gives the same answer every time.

A map app shows the same route unless conditions change.

A camera captures what is in front of it.

AI products break this intuition.

They:

Generate different outputs for similar inputs

Sound confident even when wrong

Require experimentation to improve results

Behave inconsistently across contexts

For consumers, this feels less like software and more like unpredictability.

Without education, users interpret this as:

“The product is broken”

“I’m using it wrong”

“I can’t trust this”

This is why consumer AI GTM must actively reshape user expectations.

3. Education Is the First Conversion Event

In consumer AI, the most important GTM milestone is not sign-up or first use.

It is first correct expectation.

A user is meaningfully “converted” when they understand:

What kinds of tasks the AI is good at

What kinds of tasks it struggles with

Why outputs may vary

How to improve results

When to rely on it—and when not to

Without this understanding:

Early delight turns into frustration

Novelty turns into disappointment

Usage turns into churn

Education is what turns curiosity into confidence.

4. Onboarding Must Teach a Mental Model, Not a Feature Set

Traditional consumer onboarding explains what buttons do.

AI onboarding must explain how the system thinks—at least at a conceptual level.

Effective consumer AI onboarding:

Explains that outputs are generated, not retrieved

Normalizes variation instead of hiding it

Demonstrates iteration (“try refining your input”)

Shows examples of both good and bad outputs

Frames the AI as assistive, not authoritative

This does not require technical language. It requires honest framing.

For example:

“This tool helps you draft ideas quickly. It won’t always be right, but it’s great for getting started.”

That single sentence prevents dozens of downstream trust failures.

5. Feature Discovery Without Education Backfires

Many consumer AI products ship rapidly expanding feature sets:

Image generation

Text generation

Summarization

Voice

Agents

Automation

From a GTM perspective, this looks like progress.

From a consumer perspective, it often looks like chaos.

Without education:

Users don’t know which feature to use when

Outputs feel inconsistent

Product value feels scattered

Confidence erodes

In consumer AI, more features increase cognitive load unless paired with guidance.

Effective GTM introduces capabilities:

Gradually

In context

With clear “when to use this” framing

Anchored to familiar use cases

Education is what turns feature breadth into perceived versatility rather than confusion.

6. Trust Is Built by Explaining Limitations Early

Consumer AI products often fail by overselling intelligence and underselling constraints.

This creates a predictable cycle:

High expectations

Impressive first output

Confident wrong output

Loss of trust

Churn

Education interrupts this cycle.

When consumers are told:

“This might be wrong sometimes”

“You should double-check important outputs”

“This is best for drafts, ideas, and assistance”

They do not feel disappointed when limitations appear—they feel informed.

Paradoxically, disclosing limitations increases consumer trust, because it aligns experience with expectation.

7. Education Reduces Fear as Well as Friction

Consumer AI adoption is constrained not just by usability, but by anxiety.

Common fears include:

“Is this replacing me?”

“Is this spying on me?”

“Is this safe to rely on?”

“Will this make me look stupid if it’s wrong?”

Education addresses these fears directly.

Clear GTM messaging and in-product education should explain:

What data is and isn’t used

Whether outputs are stored or shared

What control the user has

How mistakes can be corrected

Fear is a silent churn driver. Education is its antidote.

8. Consumer AI Retention Depends on Learning, Not Habit

Traditional consumer apps rely on habit loops.

Consumer AI relies on skill acquisition.

Users stay when they feel:

They are getting better at using the product

They understand how to get consistent value

The AI feels more predictable over time

This means GTM must extend beyond acquisition into:

Ongoing tips

Progressive guidance

Contextual suggestions

Gentle correction of misuse

Retention grows as confidence grows.

9. Metrics Must Measure Understanding, Not Just Engagement

Consumer AI teams often over-index on:

Daily active users

Session length

Interaction counts

These metrics are dangerously misleading.

High engagement may indicate:

Confusion

Retry behavior

Frustration

Lack of resolution

More meaningful consumer AI GTM metrics include:

First successful outcome

Reduction in retries

Output acceptance

Willingness to rely on results

Return to the same task

These metrics reflect value realization, not activity.
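“Return to the same task” is straightforward to compute once usage is tagged by task type. The Python sketch below assumes hypothetical `(user_id, task_type, day_number)` records and an arbitrary 7-day window, and reports the share of users who came back to a task they had used before.

```python
from collections import defaultdict


def return_to_task_rate(records: list[tuple[str, str, int]], window_days: int = 7) -> float:
    """Share of users who come back to a task type within `window_days` of a previous use.

    records: (user_id, task_type, day_number) tuples; this schema is a hypothetical assumption.
    Same-day repeats are ignored because they are more likely retries than genuine returns.
    """
    days_by_user_task: dict[tuple[str, str], list[int]] = defaultdict(list)
    for user, task, day in records:
        days_by_user_task[(user, task)].append(day)

    users = {user for user, _, _ in records}
    returning = set()
    for (user, _task), days in days_by_user_task.items():
        days.sort()
        gaps = (later - earlier for earlier, later in zip(days, days[1:]))
        if any(0 < gap <= window_days for gap in gaps):
            returning.add(user)
    return len(returning) / len(users) if users else 0.0


sample = [
    ("ana", "summarize", 1), ("ana", "summarize", 4),   # returns after 3 days
    ("ben", "draft", 1), ("ben", "draft", 1),           # same-day retry only
    ("cho", "translate", 2), ("cho", "translate", 20),  # returns, but outside the window
]
print(return_to_task_rate(sample))
```

In the sample, only the first user counts as returning, so the rate is one in three.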

10. Education Is the Only Scalable Consumer AI Moat

In consumer markets, distribution advantages are fleeting.

What compounds is user understanding.

A consumer who:

Understands what the AI is for

Knows how to use it well

Trusts it appropriately

Is far more likely to:

Stick with the product

Expand usage

Recommend it accurately

Defend it socially

Education creates informed users.

Informed users create durable adoption.

Consumer AI Wins by Teaching, Not Impressing

When general consumers are the ICP, GTM strategy must be built around education.

Not tutorials.

Not documentation.

Not FAQs.

But expectation-setting, mental model building, and trust formation.

The consumer AI products that win long-term will not be the ones that feel the most magical on day one.

They will be the ones that:

Teach users what to expect

Make uncertainty understandable

Reduce fear and confusion

Help people feel capable—not replaced

In consumer AI, adoption follows understanding.

And understanding must be designed, not assumed.

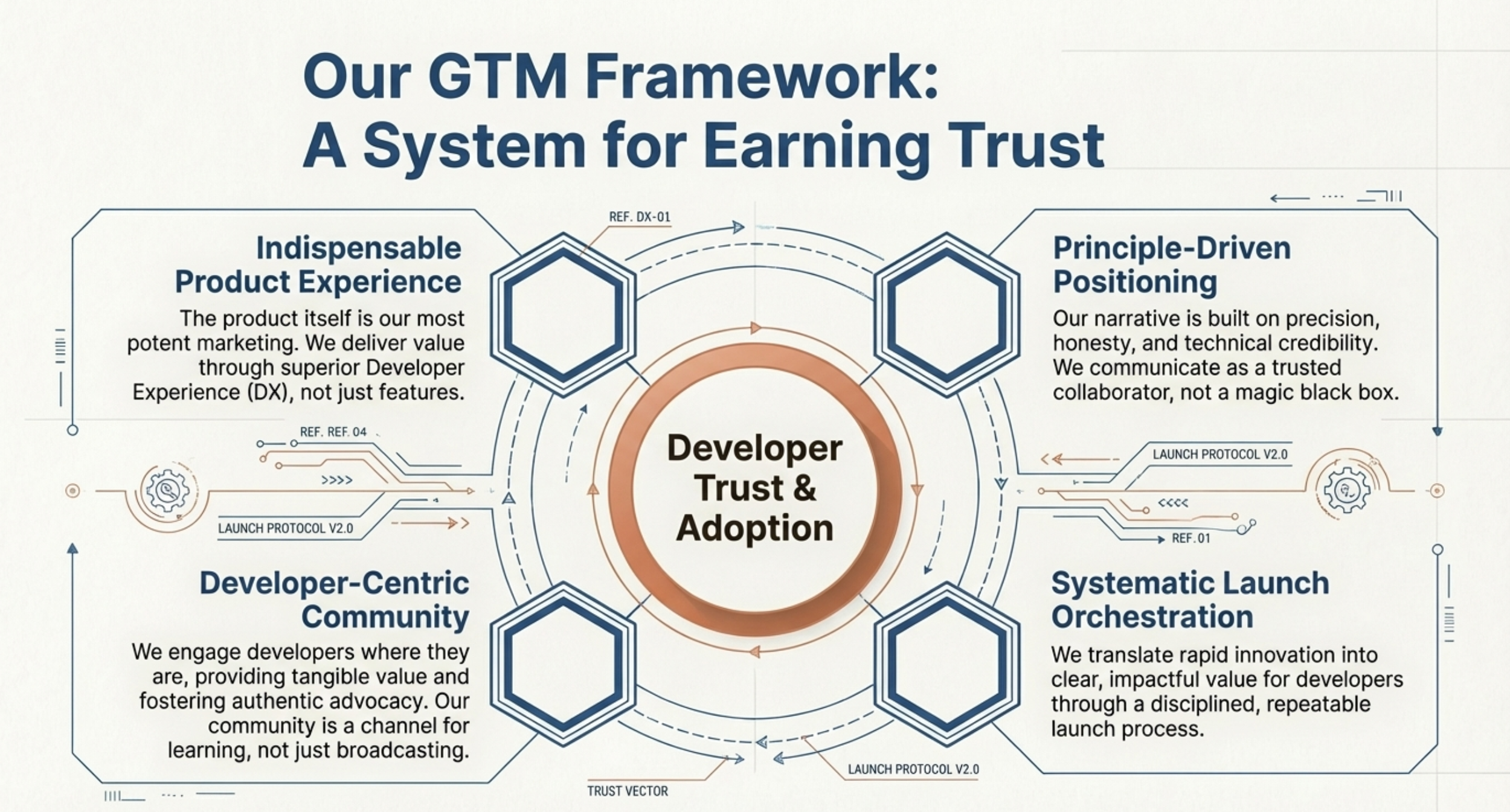

EXAMPLE GTM STRATEGY FOR Claude Code

1.0 Introduction: The Strategic Imperative for Claude Code

This document outlines the go-to-market (GTM) strategy for Claude Code, our new AI coding agent. The launch of Claude Code is more than a product release; it represents a strategic initiative to establish a leadership position in the rapidly expanding market for AI-powered developer tools. By leveraging our unique strengths, we aim not only to capture significant market share but also to set a new standard for how developers collaborate with AI.

Our vision is to make Claude Code the indispensable AI partner for developers, establishing a new frontier where development velocity and code integrity are not a trade-off, but a unified outcome. This approach is a direct extension of Anthropic's deep research background in foundational areas like Circuit-Based Interpretability, Concrete Problems in AI Safety, and Learning from Human Preferences. We are not just building another coding assistant; we are creating a reliable, transparent, and highly capable partner for professional software development teams.

This plan provides a comprehensive roadmap for internal stakeholders, detailing our strategy from initial market analysis through sustained, long-term growth. It begins with a thorough assessment of the competitive landscape, which informs our unique positioning and messaging framework. From there, we define our target audience and detail a multi-phased launch plan designed for maximum impact. Finally, we outline our strategies for post-launch growth and establish a clear framework for measuring success.

To succeed, we must first understand the environment in which we will compete. The following analysis of the market landscape will illuminate the opportunities and challenges ahead, allowing us to carve out a distinct and defensible position for Claude Code.

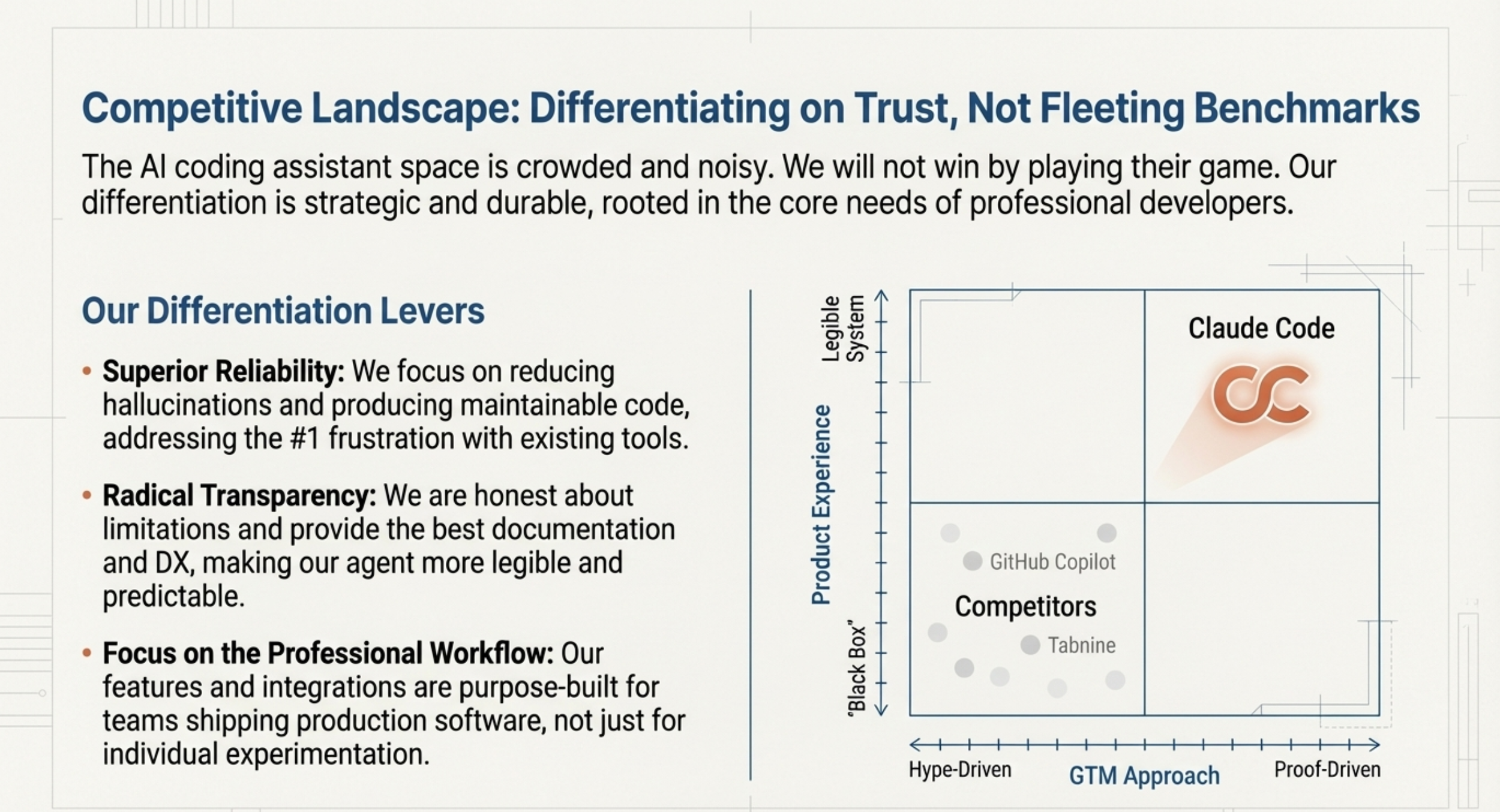

2.0 Market & Competitive Landscape Analysis

The market for AI coding assistants is dynamic and increasingly crowded, with a new baseline of expectation set by powerful foundation models like GPT-4. In this environment, simply matching baseline capabilities is insufficient for success. Sophisticated and meaningful differentiation is no longer a luxury but a critical necessity. Our strategy must be rooted in a deep understanding of developer workflows, the relentless pressure for efficiency, and the growing complexity of modern software development.

The opportunity is clear: developers are actively seeking tools that do more than just generate code snippets. They need intelligent partners that can help them navigate complex codebases, improve code quality, and accelerate the entire development lifecycle. Our analysis indicates that the competitive landscape can be effectively understood not as a list of individual products, but as distinct tiers of solutions, each addressing a different underlying pain point for developers.

Competitive Tiers

| Tier | Core Pain Point Addressed | Strategic Implications for Claude Code |
|---|---|---|
| Tier 1: Incumbent Platforms | Workflow Integration & Breadth: Large, integrated platforms (e.g., GitHub Copilot) that offer AI assistance directly within an existing, widely adopted developer ecosystem. Their primary value is convenience and low adoption friction. | Our opportunity is to flank incumbents by focusing on high-stakes domains like security and compliance, where our model's verifiable correctness and auditable code provenance offer a defensible moat. |
| Tier 2: Specialized AI Agents | Niche Task Automation: Point solutions designed to excel at a specific, high-value task, such as automated testing, debugging, or code refactoring. Their value is rooted in depth of capability for a narrow use case. | These tools validate the market demand for specialized AI assistance. We can differentiate by offering a cohesive agent that combines multiple high-value specializations while maintaining a consistent and trustworthy user experience. |
| Tier 3: Open-Source Frameworks | Customization & Control: Open-source models and libraries that allow organizations to build their own custom AI coding solutions. They address the need for maximum control, transparency, and data privacy. | This tier validates the enterprise need for trust. We will position against them by offering the auditability of a custom solution without the prohibitive TCO of building, fine-tuning, and maintaining a bespoke AI model. |

Based on this analysis, Claude Code's core strategic differentiation is a direct result of our research ethos: we deliver an AI partner that excels in high-stakes environments where code correctness, security, and auditability are non-negotiable. While competitors focus on feature velocity and breadth, our approach is grounded in solving a specific class of customer problems where trust and technical nuance are paramount. This allows us to build a loyal following among professional developers who value reliability as much as speed.

Understanding this competitive landscape is the foundation upon which we will build a precise and compelling positioning strategy.

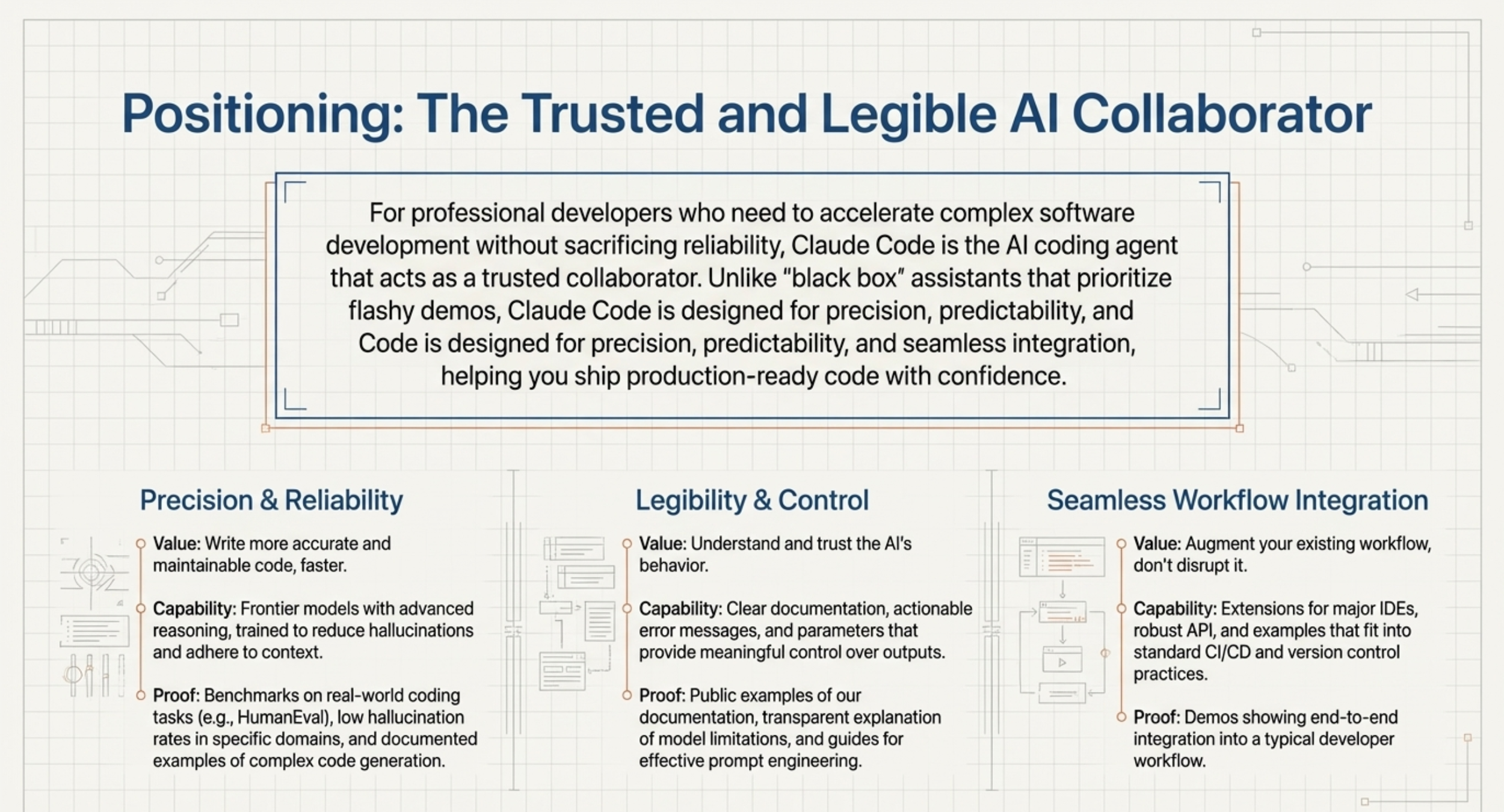

3.0 Positioning & Messaging Framework

A clear and defensible messaging framework is essential for cutting through the noise of a crowded market. Our positioning will be fundamentally outcome-based, moving the conversation away from a commoditized list of AI features and toward the measurable value we deliver to developers and their organizations. This framework will ensure all internal teams—from product and engineering to sales and marketing—are aligned on a single, powerful narrative.

Claude Code empowers professional development teams who demand performance without compromising on reliability. By combining frontier AI capabilities with a deep commitment to responsible design, we help teams accelerate development, enhance code quality, and build with confidence.

This internal statement will guide the development of our external messaging, which is structured around three core pillars. Each pillar follows a simple but powerful framework: Value → Capability → Proof.

Pillar 1: Accelerate Development Velocity

Value: Significantly reduce the time and friction involved in complex coding tasks, allowing developers to focus on high-impact problem-solving rather than routine implementation.

Capability: Claude Code is powered by domain-tuned large language models, specifically optimized for code generation, complex logic implementation, and analysis of large codebases.

Proof: We will substantiate our claims with quantitative evidence, such as "Beta study results showing 40% time savings on common development tasks" and a measurable reduction in manual coding efforts.
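One way to make a time-savings proof point defensible is to time the same tasks with and without the tool and report an aggregate reduction. The sketch below assumes paired task timings in minutes; the task names and numbers are hypothetical placeholders, not beta-study results, and the choice of the median as the headline statistic is an assumption.

```python
from statistics import median

def percent_time_savings(baseline_minutes, assisted_minutes):
    """Per-task percentage reduction in completion time.

    Both arguments map a task ID to minutes spent on that task; only tasks
    timed in both conditions (without and with the tool) are compared.
    """
    shared = baseline_minutes.keys() & assisted_minutes.keys()
    return {
        task: 100.0 * (baseline_minutes[task] - assisted_minutes[task]) / baseline_minutes[task]
        for task in shared
        if baseline_minutes[task] > 0
    }

def summarize_savings(baseline_minutes, assisted_minutes):
    savings = percent_time_savings(baseline_minutes, assisted_minutes)
    if not savings:
        raise ValueError("no tasks were timed in both conditions")
    # Report the median so a single outlier task cannot inflate the headline number.
    return {"tasks": len(savings), "median_pct_saved": median(savings.values())}

# Hypothetical timings, for illustration only (not beta results).
baseline = {"add-endpoint": 50, "fix-flaky-test": 40, "refactor-module": 120}
assisted = {"add-endpoint": 30, "fix-flaky-test": 30, "refactor-module": 60}
print(summarize_savings(baseline, assisted))  # {'tasks': 3, 'median_pct_saved': 40.0}
```

Publishing the task count alongside the median, rather than a single best-case task, keeps the claim bounded in the way the messaging guidelines require.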

Pillar 2: Enhance Code Quality & Reliability

Value: Empower developers to write more robust, maintainable, and secure code from the start, reducing bugs and long-term technical debt.

Capability: Features include advanced debugging assistance, automated code review with suggestions based on best practices, and provenance tracking to ensure generated code is auditable.

Proof: Our proof points will be anchored in objective metrics, such as "98% citation alignment with gold-standard code repositories" and benchmarks demonstrating a tangible reduction in common bugs and vulnerabilities.

Pillar 3: Foster Trust Through Responsible AI

Value: Provide a predictable and trustworthy AI collaboration experience, giving professional developers the confidence to integrate AI deeply into their critical workflows.

Capability: Model explainability and proactive flagging of outputs where model uncertainty is high, enabling human oversight where it matters most, all backed by a commitment to honest messaging.
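Flagging high-uncertainty outputs can be illustrated with a simple threshold rule. This is a minimal sketch that assumes each suggestion carries a confidence score between 0 and 1 and uses a hypothetical 0.7 cutoff; how Claude Code actually estimates uncertainty is not described in this plan, and the `Suggestion` type is illustrative.

```python
from dataclasses import dataclass

@dataclass
class Suggestion:
    code: str
    confidence: float  # hypothetical score in [0, 1]; higher means more confident

REVIEW_THRESHOLD = 0.7  # hypothetical cutoff; would need tuning against review outcomes

def route(suggestions, threshold=REVIEW_THRESHOLD):
    """Split suggestions into standard ones and ones flagged for closer human review."""
    standard, flagged = [], []
    for s in suggestions:
        if not 0.0 <= s.confidence <= 1.0:
            raise ValueError(f"confidence out of range: {s.confidence}")
        (standard if s.confidence >= threshold else flagged).append(s)
    return standard, flagged

standard, flagged = route([
    Suggestion("return a + b", 0.95),
    Suggestion("os.remove(path)", 0.40),
])
print(len(standard), len(flagged))  # 1 1
```

Flagged suggestions are still shown to the developer; the flag marks them for closer review, consistent with positioning AI output as advisory input rather than a final answer.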

Proof: Trust will be established through transparent documentation on model limitations, external audits demonstrating compliance with AI safety standards, and customer case studies highlighting our commitment to responsible partnership.

Guidelines for Responsible Messaging

To maintain our brand's credibility, all public-facing communication will adhere to the following principles:

DO: Use bounded, honest language that sets realistic expectations. For example, "Our AI achieves high-confidence insights within known data domains."

DO: Explain that higher confidence does not mean certainty. Position AI outputs as powerful advisory inputs that augment, rather than replace, human expertise.

DON'T: Make absolute or exaggerated claims. Avoid statements like, "Our AI always gives factually correct insights."

With a clear understanding of what we will say, we can now turn our focus to whom we will be saying it to.

4.0 Target Audience & Personas

A deep and nuanced understanding of our customer is the cornerstone of our go-to-market strategy. A precise definition of our Ideal Customer Profile (ICP) and key user personas will guide every GTM activity, from product roadmap decisions and documentation priorities to the execution of targeted marketing campaigns.

Ideal Customer Profile (ICP)

Our primary focus is on organizations where software development is a core driver of business value and where teams are sophisticated enough to appreciate the trade-offs between speed and quality.

Industry/Sector: Technology, SaaS, and Enterprise Software companies where innovation is directly tied to engineering output.

Company Size: Mid-to-large enterprises with established engineering teams (50+ developers) that feel the acute pain of complexity and scale.

Technical Context: Organizations that build on modern technology stacks (e.g., Python, Node.js) and rely heavily on cloud services. These teams are typically early adopters of new developer tools and APIs.

Primary User Persona: "Devon Integrator"

Within our target organizations, our primary user persona is the hands-on developer tasked with building and integrating new capabilities.

Title: Senior Software Engineer / Full-Stack Developer

Jobs-To-Be-Done (JTBD): "Enable natural language understanding in product workflows without reinventing the wheel."

Goals:

Rapidly integrate high-quality code generation and analysis via a reliable API.

Reduce development time and minimize the introduction of bugs.

Ship high-quality prototypes quickly to validate ideas and demonstrate progress.

Pain Points:

Poor or incomplete documentation that causes significant integration delays.

AI tools that produce unreliable, insecure, or un-auditable code.

High friction in moving from experimentation and prototyping to production-ready implementation.

Key Stakeholder Roles

Successfully selling into an enterprise requires addressing the distinct needs of multiple stakeholders within the account.

| Stakeholder Role | Key Persona | Primary Concern |
|---|---|---|
| User | Senior Software Engineer ("Devon Integrator") | Ease of use, performance, reliability, and documentation quality. |
| Champion | Engineering Manager / Lead Developer | Team productivity, adoption rates, and defending the tool's value internally. |
| Buyer | Head of Engineering / CTO | Return on investment (ROI), security, compliance, and long-term strategic fit with the company's technology roadmap. |

This clear definition of our audience provides the necessary context to design a go-to-market plan that effectively engages and activates each of these critical roles.

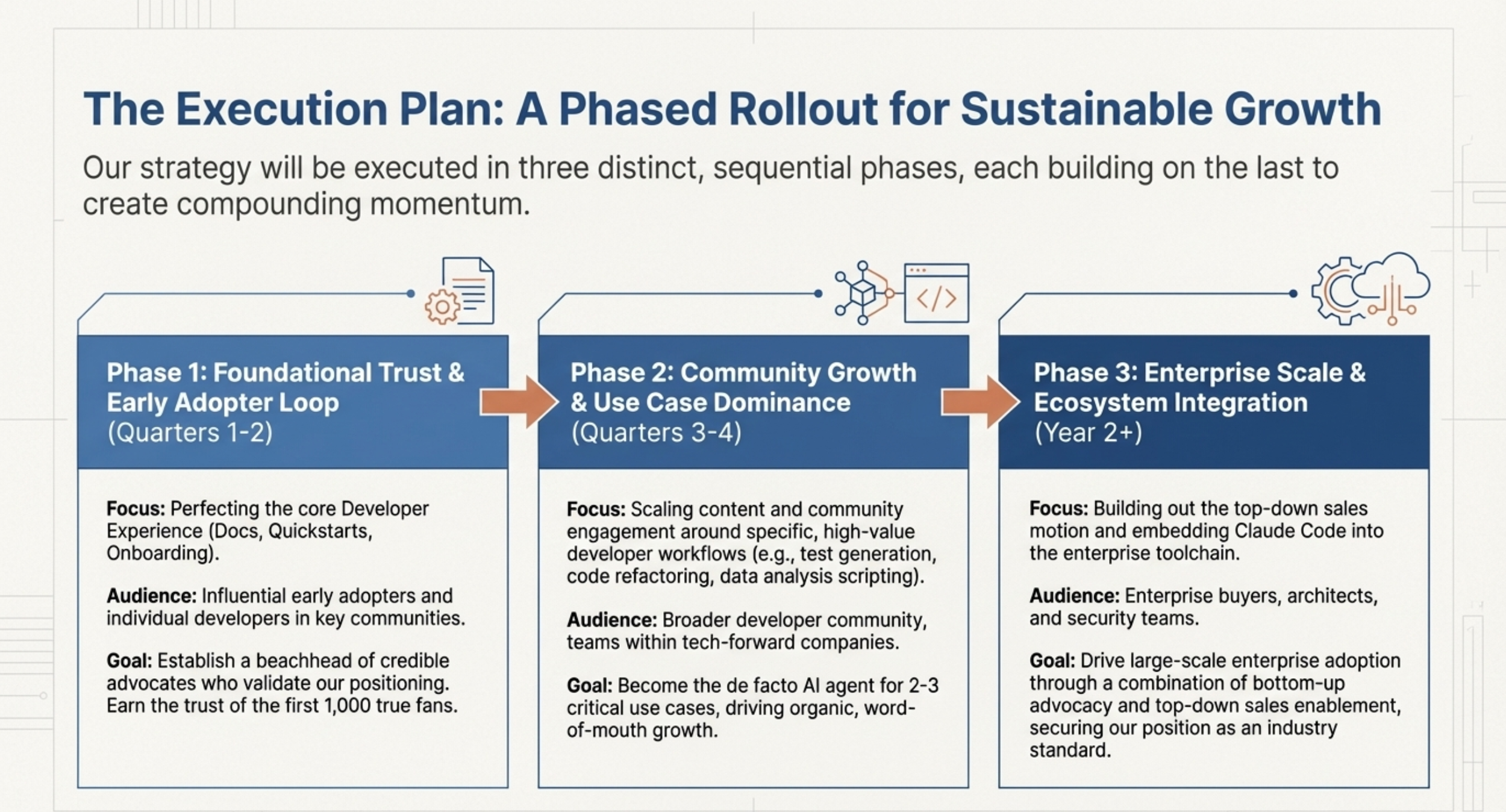

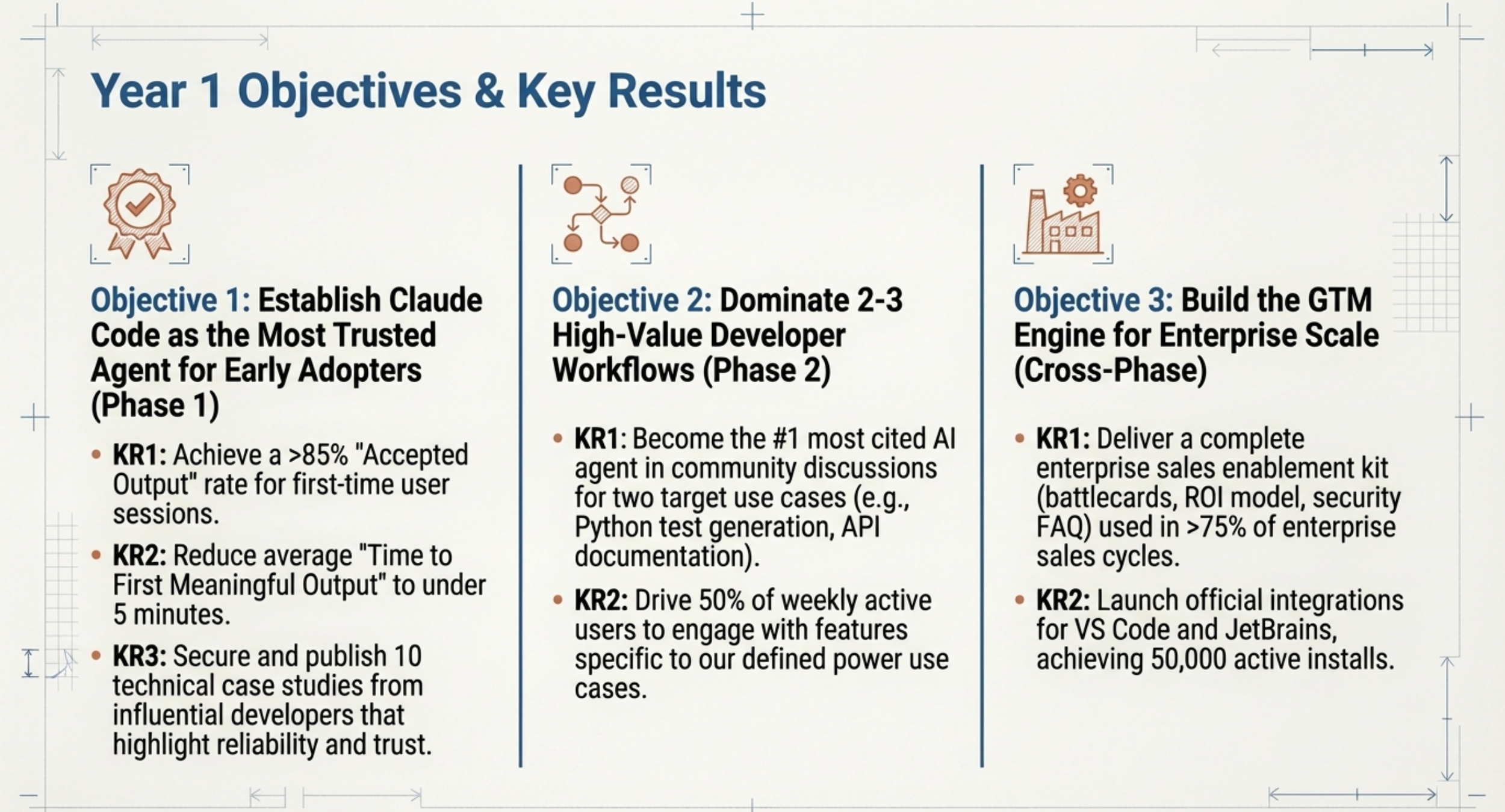

5.0 Go-to-Market Launch Plan

Our launch will follow a phased, developer-first GTM strategy. This approach is intentionally designed to build momentum sequentially, gather critical feedback from our target audience, and establish deep credibility within the technical community before a broad public announcement. Each phase has a distinct objective and set of activities.

Multi-Phased Launch Sequence

Phase 1: Alpha Pilot

Objective: Validate core use cases and gather deep qualitative feedback with a select group of trusted, high-expertise developers from our ICP.

Key Activities: Direct, high-touch engagement; user interviews to understand workflows and pain points; establish initial performance benchmarks and identify critical product gaps.

Phase 2: Closed Beta

Objective: Refine our positioning, messaging, and onboarding experience with a wider, invite-only audience of early adopters. Harden the product for broader use.

Key Activities: Test documentation for clarity and completeness; release initial GitHub starter kits to gauge their effectiveness; gather feedback on the API and overall developer experience.

Phase 3: General Availability (GA) Launch

Objective: Drive broad market awareness, establish thought leadership, and accelerate widespread adoption within our target market segments.

Key Activities: Public announcement via coordinated PR and blog posts; launch marketing campaigns targeting key developer communities; activate sales and developer relations teams for outreach.

Core Developer-First GTM Tactics

Our strategy is centered on reducing adoption friction by allowing developers to experience value immediately.

Interactive API Playground: An in-browser environment where developers can experiment with Claude Code's capabilities and test specific use cases before writing a single line of code or committing a budget. This lowers the barrier to entry and allows for rapid evaluation.

GitHub Starter Kits: A collection of high-quality, well-documented example codebases that provide a scaffold for common projects. This accelerates adoption by giving developers a functional starting point rather than a blank slate.

2-Minute Value-Proof Demos: Short, focused video demonstrations that show a clear, immediate payoff with minimal code. For example: "Analyze 10 customer reviews from raw text, extract key themes and sentiment, and output a structured JSON summary in under 30 lines of code," instantly proving the product's ability to turn unstructured data into actionable insights.

Cross-Functional Orchestration

A successful launch requires a tightly coordinated effort across more than 15 key stakeholders. Product Marketing will serve as the central hub, owning the GTM strategy. Engineering will provide technical validation for all claims and partner on benchmark development. Developer Relations will seed our GitHub starter kits and cultivate community feedback. Sales will execute the enablement plan and provide real-time feedback from the field on competitive objections.

The GA launch is not the final destination but the beginning of our journey to build a sustainable and growing business. This leads directly to our plan for continuous, post-launch growth.

6.0 Post-Launch Growth & Adoption Strategy

Our go-to-market strategy does not end at launch. We will treat our GTM as a continuous process, focusing on building a virtuous cycle of user engagement, systematic feedback collection, and rapid iteration. Sustaining momentum requires a deliberate plan to drive deeper adoption and prove our value over the long term.

Building Systematic Feedback Loops

We will establish formal processes to ensure customer insights are systematically gathered, analyzed, and integrated back into our product and GTM strategies.

Source of Feedback: We will actively monitor multiple channels, including direct user interviews, support tickets, community forums (e.g., Reddit), and quantitative product usage analytics.

Action: Insights will be triaged and translated into actionable items. This includes refining our messaging to address common questions, prioritizing features on the product roadmap to solve validated pain points, and improving our documentation.

Lifecycle Marketing Plan

We will develop a targeted communication strategy to guide users through their journey with Claude Code, driving deeper engagement and transforming initial users into long-term advocates.

Onboarding: A series of in-app prompts, tutorials, and targeted emails designed to help new users experience the "aha!" moment quickly by highlighting key features and starter use cases.

Engagement: Our engagement content will be segmented by stakeholder role, delivering API-centric deep dives for the 'Devon Integrator' persona, team productivity case studies for the 'Champion,' and ROI calculators for the 'Buyer'.

Community: Fostering an active presence in key developer communities to encourage peer-to-peer support, gather organic feedback, and build a base of vocal advocates for Claude Code.

Sales Enablement for Ongoing Success

To ensure our sales team can compete effectively, we will create and maintain a suite of strategic enablement materials.

Competitive Battle Cards: Leveraging our competitive tiers framework, we will equip sales with concise, actionable guidance on how to position Claude Code against each category of competitor.

Customer Stories & Case Studies: We will proactively develop compelling case studies that provide social proof and demonstrate validated business outcomes, shifting conversations from technical capabilities to proven ROI.

Objection Handling Guides: We will create legally vetted, factually accurate responses to common objections and competitor claims, ensuring our team can navigate difficult conversations with confidence and integrity.

The success of these ongoing efforts will be determined not by activity, but by the measurable impact they have on our business and our customers.

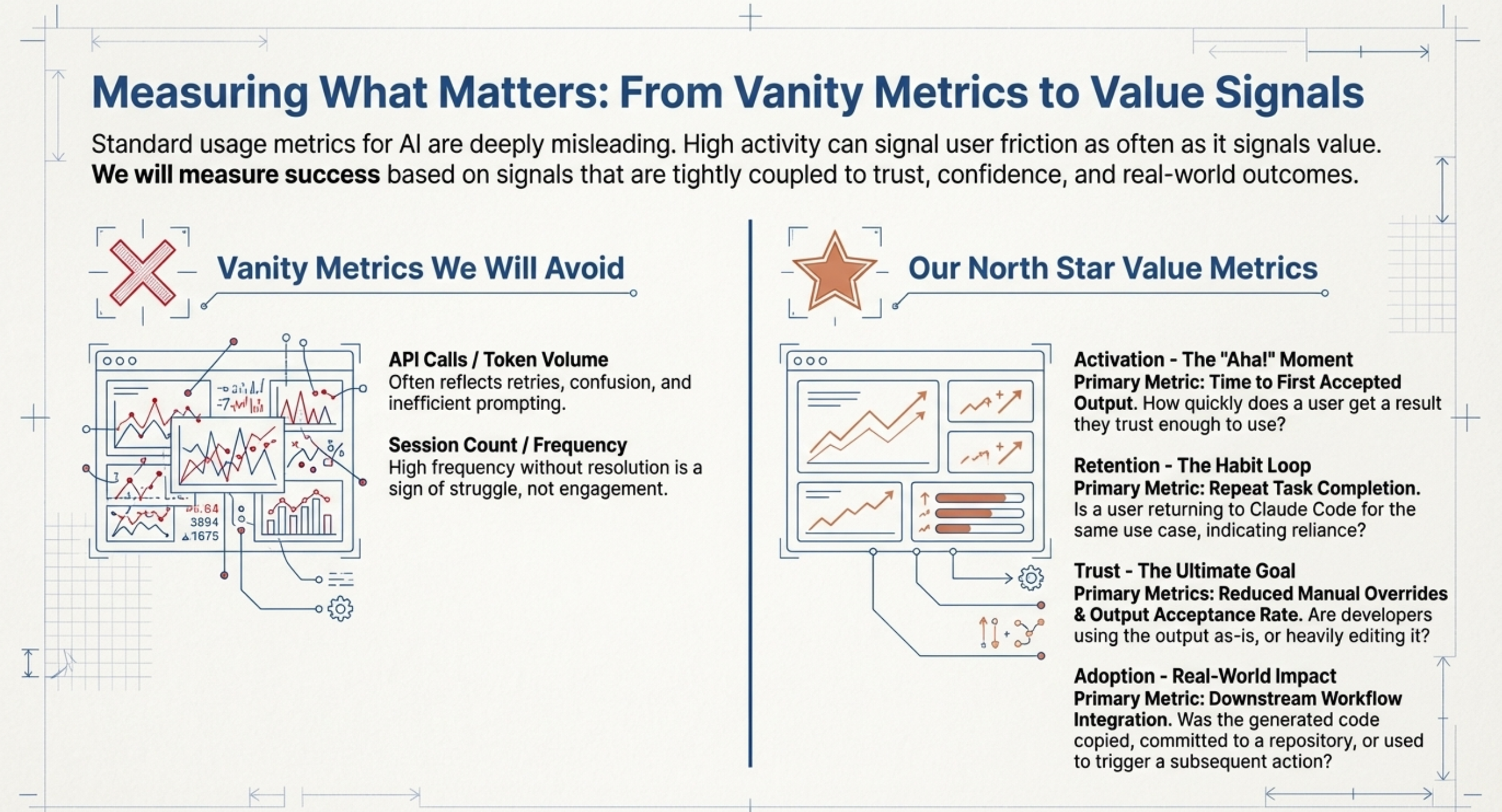

7.0 Success Metrics & Measurement Framework

Our measurement philosophy is centered on tracking realized customer value and tangible business impact, not vanity metrics. Every KPI we monitor must be directly tied to a strategic outcome, ensuring that our efforts are aligned with what truly matters: building a product that customers love and a business that can scale.

Key Performance Indicators (KPIs) Across the User Lifecycle

Activation: First successful API call that produces a valid result.

Why it matters: This initial success is the first proof point of our low-friction, developer-first GTM promise.
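Activation as defined above can be computed from an event log as the share of new sign-ups who make a valid API call soon after signing up. The sketch assumes a hypothetical log of `(user_id, timestamp, produced_valid_result)` tuples and a 7-day window; neither the schema nor the window comes from this plan.

```python
from datetime import datetime, timedelta

def activation_rate(signups, api_calls, window=timedelta(days=7)):
    """Share of signed-up users with at least one valid API call within `window` of sign-up.

    signups:   {user_id: signup_datetime}
    api_calls: iterable of (user_id, call_datetime, produced_valid_result)
    """
    activated = set()
    for user_id, called_at, valid in api_calls:
        start = signups.get(user_id)
        if start is not None and valid and start <= called_at <= start + window:
            activated.add(user_id)
    return len(activated) / len(signups) if signups else 0.0

t0 = datetime(2026, 1, 5)
signups = {"u1": t0, "u2": t0, "u3": t0, "u4": t0}
calls = [
    ("u1", t0 + timedelta(hours=1), True),
    ("u2", t0 + timedelta(hours=2), False),  # call returned an invalid result
    ("u3", t0 + timedelta(days=10), True),   # valid, but outside the 7-day window
]
print(activation_rate(signups, calls))  # 0.25
```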

Adoption & Engagement:

Weekly Engaged Users: The number of unique users performing core, value-creating actions, indicating habitual use.

User Acceptance Ratio: The proportion of AI-generated suggestions that developers adopt.

Why it matters: This is a direct proxy for the model's utility and its ability to generate code that meets professional standards, reinforcing our core 'reliability' promise.

Task Success Rate (TSR): The percentage of interactions that result in the successful completion of a developer's intended task.
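The three engagement metrics above reduce to distinct counts and ratios over an interaction log. This sketch assumes a hypothetical record with a user, an ISO week, an action type, whether a suggestion was shown and accepted, and whether the developer's intended task was completed; the set of "core" actions is an assumption each team would need to define.

```python
from dataclasses import dataclass
from typing import Optional

CORE_ACTIONS = {"generate", "review", "refactor"}  # hypothetical value-creating actions

@dataclass
class Interaction:
    user_id: str
    week: str                        # ISO week, e.g. "2026-W02"
    action: str
    suggestion_shown: bool
    accepted: bool
    task_completed: Optional[bool]   # None when the task outcome could not be determined

def weekly_engaged_users(log, week):
    # Unique users who performed at least one core action in the given week.
    return len({i.user_id for i in log if i.week == week and i.action in CORE_ACTIONS})

def acceptance_ratio(log):
    # Accepted suggestions divided by suggestions actually shown.
    shown = [i for i in log if i.suggestion_shown]
    return sum(i.accepted for i in shown) / len(shown) if shown else 0.0

def task_success_rate(log):
    # Completed tasks divided by interactions whose outcome is known.
    judged = [i for i in log if i.task_completed is not None]
    return sum(i.task_completed for i in judged) / len(judged) if judged else 0.0

log = [
    Interaction("u1", "2026-W02", "generate", True, True, True),
    Interaction("u1", "2026-W02", "review", True, False, False),
    Interaction("u2", "2026-W02", "open_settings", False, False, None),
    Interaction("u3", "2026-W02", "refactor", True, True, True),
]
print(weekly_engaged_users(log, "2026-W02"))  # 2
print(acceptance_ratio(log))                   # ≈ 0.667
print(task_success_rate(log))                  # ≈ 0.667
```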

Retention: 60-day cohort retention rates.

Why it matters: This measures our ability to deliver sustained value over time and integrate Claude Code into our users' regular workflows.
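A 60-day cohort retention rate needs a precise definition of "retained". The sketch below assumes one: a user counts as retained if they were active at least once in the 7 days starting on day 60 after sign-up, with cohorts grouped by sign-up month. The window, the monthly grouping, and the data layout are all assumptions, not definitions from this plan.

```python
from collections import defaultdict
from datetime import date, timedelta

def cohort_retention(signups, activity, day=60, window_days=7):
    """Day-`day` retention per monthly sign-up cohort (assumed definition).

    A user is retained if active at least once in
    [signup + day, signup + day + window_days).

    signups:  {user_id: signup_date}
    activity: iterable of (user_id, activity_date)
    """
    active_days = defaultdict(set)
    for user_id, d in activity:
        active_days[user_id].add(d)

    cohorts = defaultdict(lambda: [0, 0])  # cohort -> [retained, total]
    for user_id, start in signups.items():
        lo = start + timedelta(days=day)
        hi = lo + timedelta(days=window_days)
        retained = any(lo <= d < hi for d in active_days[user_id])
        cohort = start.strftime("%Y-%m")
        cohorts[cohort][0] += retained
        cohorts[cohort][1] += 1
    return {c: r / n for c, (r, n) in sorted(cohorts.items())}

signups = {"u1": date(2026, 1, 3), "u2": date(2026, 1, 10), "u3": date(2026, 2, 1)}
activity = [
    ("u1", date(2026, 3, 5)),   # 61 days after sign-up: retained
    ("u2", date(2026, 1, 20)),  # early activity only: not retained
    ("u3", date(2026, 4, 2)),   # exactly 60 days after sign-up: retained
]
print(cohort_retention(signups, activity))  # {'2026-01': 0.5, '2026-02': 1.0}
```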

Value Realization: Percentage reduction in development cycle time (measured via customer studies) and Time-to-Value, which tracks how quickly a new user achieves a meaningful outcome.
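Time-to-Value can be tracked as the median time from sign-up to each user's first meaningful outcome, reported together with the number of users who have not reached one yet. What counts as a "meaningful outcome" is an assumption each team would define; the users and timestamps below are hypothetical.

```python
from datetime import datetime
from statistics import median

def time_to_value(signups, first_outcomes):
    """Return (median hours to first meaningful outcome, users still without one).

    signups:        {user_id: signup_datetime}
    first_outcomes: {user_id: datetime of that user's first meaningful outcome}
    """
    durations = [
        (first_outcomes[u] - signups[u]).total_seconds() / 3600
        for u in signups
        if u in first_outcomes
    ]
    pending = sum(1 for u in signups if u not in first_outcomes)
    return (median(durations) if durations else None), pending

signups = {
    "u1": datetime(2026, 1, 5, 9),
    "u2": datetime(2026, 1, 5, 9),
    "u3": datetime(2026, 1, 6, 9),
}
outcomes = {"u1": datetime(2026, 1, 5, 11), "u2": datetime(2026, 1, 6, 9)}
print(time_to_value(signups, outcomes))  # (13.0, 1)
```

Reporting the pending count matters because a median computed only over successful users can look healthy while many new users never reach value at all.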

Product Quality: Model hallucination rates and accuracy vs. human benchmarks.

Why it matters: These technical metrics are essential for ensuring we are delivering on our promise of reliability and trust.
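Both quality metrics depend on graded evaluation data. This sketch assumes reviewers grade the model's output and a human baseline on the same set of tasks using a hypothetical three-way scheme, in which "hallucinated" marks output that references non-existent APIs, files, or facts. The labels and task IDs are illustrative.

```python
from collections import Counter

GRADES = {"correct", "incorrect", "hallucinated"}  # hypothetical grading scheme

def quality_report(model_grades, human_grades):
    """Compare model output with a human baseline graded on the same tasks.

    Each argument maps task_id -> one of GRADES.
    """
    tasks = model_grades.keys() & human_grades.keys()
    if not tasks:
        raise ValueError("no overlapping tasks to compare")
    for grades in (model_grades, human_grades):
        unknown = {grades[t] for t in tasks} - GRADES
        if unknown:
            raise ValueError(f"unknown grades: {unknown}")
    model = Counter(model_grades[t] for t in tasks)
    human = Counter(human_grades[t] for t in tasks)
    n = len(tasks)
    return {
        "tasks": n,
        "hallucination_rate": model["hallucinated"] / n,
        "model_accuracy": model["correct"] / n,
        "human_accuracy": human["correct"] / n,
    }

model = {"t1": "correct", "t2": "hallucinated", "t3": "correct", "t4": "incorrect"}
human = {"t1": "correct", "t2": "correct", "t3": "correct", "t4": "incorrect"}
print(quality_report(model, human))
# {'tasks': 4, 'hallucination_rate': 0.25, 'model_accuracy': 0.5, 'human_accuracy': 0.75}
```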

Aligning PMM Activities to Business OKRs

To ensure clear accountability, the product marketing team's objectives will be explicitly linked to key business results.

| PMM Objective | Key Business Result |
|---|---|
| Strengthen product messaging to drive higher deal velocity. | Increase sales team confidence in competitive deals (measured by survey). |
| Equip sales with superior enablement materials. | Improve win rates against key competitor categories. |
| Drive adoption of high-value features post-launch. | Increase weekly engaged users and cohort retention rates. |

This go-to-market plan provides a strategic, disciplined, and measurable path to establishing Claude Code as a trusted leader in the AI developer tool market. By executing this plan with focus and agility, we will not only capture the market—we will define it, empowering developers to build the future with an unprecedented combination of speed and trust.