Architecture Decision Records (ADRs)

1. Define an Architecture Decision Record (ADR) and its primary purpose in an agile development environment.

An Architecture Decision Record (ADR) is a short document that captures an important architectural decision, along with its context and consequences.

Its primary purpose in an agile environment is to:

Preserve decision history as systems evolve quickly

Explain why decisions were made (not just what was built)

Help onboard new team members faster

Prevent re-debating the same issues repeatedly

Support incremental, evolutionary architecture

ADRs fit agile because they are lightweight, versioned, and written as decisions happen, not as heavy upfront documentation.

2. Structure — Five ADR Sections (Michael Nygard)

1. Title

Short description of the decision.

➡ Example: “Use gRPC for Internal Sensor Communication”

2. Status

Current state of the decision.

➡ Proposed, Accepted, Superseded, Deprecated

3. Context

Background, constraints, and problem statement.

➡ Why this decision was needed.

4. Decision

The actual choice made.

➡ Clear statement of what will be done.

5. Consequences

Results and impacts of the decision.

➡ Positive, negative, risks, trade-offs.
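The five sections above can be captured in a small data structure. The following is a minimal sketch, not a standard tool: the class name `Adr`, the `ADR-0001:` heading format, and the rendering layout are assumptions made for illustration. Only the section names and the four status values come from the text.

```python
from dataclasses import dataclass

# Status values listed in the Status section above.
ALLOWED_STATUSES = {"Proposed", "Accepted", "Superseded", "Deprecated"}


@dataclass
class Adr:
    number: int
    title: str
    status: str
    context: str
    decision: str
    consequences: str

    def render(self) -> str:
        """Render the record as plain text, one block per section."""
        if self.status not in ALLOWED_STATUSES:
            raise ValueError(f"unknown status: {self.status}")
        return "\n\n".join([
            f"ADR-{self.number:04d}: {self.title}",
            f"Status: {self.status}",
            f"Context\n{self.context}",
            f"Decision\n{self.decision}",
            f"Consequences\n{self.consequences}",
        ])


adr = Adr(
    number=1,
    title="Use gRPC for Internal Sensor Communication",
    status="Accepted",
    context="Sensors need low-latency communication with the control hub.",
    decision="Use gRPC instead of REST.",
    consequences="Typed contracts and streaming; less readable traffic.",
)
print(adr.render())
```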

3. Significance — Two-way Door vs One-way Door

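This analogy was popularized by Jeff Bezos at Amazon, who distinguishes irreversible "Type 1" (one-way door) decisions from reversible "Type 2" (two-way door) decisions.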

Two-way door decision

Easy to reverse

Low cost to change

Example: Switching a logging library

One-way door decision

Hard or very expensive to reverse

Long-term system impact

Example: Choosing database technology or communication protocol

How it helps decide whether to write an ADR:

One-way door → Always write ADR

Two-way door → ADR optional unless high impact or repeated debate risk
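The rule of thumb above can be written as a tiny predicate. This is only a sketch of the heuristic as stated here; the function and parameter names are hypothetical, and a team would still apply judgement about what counts as reversible or high impact.

```python
def should_write_adr(reversible: bool,
                     high_impact: bool = False,
                     repeated_debate_risk: bool = False) -> bool:
    """One-way doors always get an ADR; two-way doors only when
    the impact is high or the topic is likely to be re-debated."""
    if not reversible:
        return True
    return high_impact or repeated_debate_risk


# Switching a logging library: a reversible, low-impact change.
print(should_write_adr(reversible=True))
# Choosing a database technology: hard to reverse.
print(should_write_adr(reversible=False))
```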

4. Anti-Patterns

Groundhog Day Anti-Pattern

Same architectural discussions happen repeatedly because decisions aren’t recorded.

Email-Driven Architecture Anti-Pattern

Important decisions are buried in email threads, Slack messages, or meetings.

How ADRs help

Provide a single source of truth

Make decisions searchable and version controlled

Reduce time wasted re-discussing settled topics

Preserve knowledge beyond individual team members

5. Rationale — Why “Why” > “How”

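The Second Law of Software Architecture (Richards and Ford) states that "why is more important than how." It builds on the First Law, which says that everything in software architecture is a trade-off.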

The Rationale is often more important because:

Implementation details change over time

Future teams need to understand constraints and trade-offs

Helps evaluate whether the decision is still valid later

Prevents repeating rejected approaches

If you know why, you can adapt the how safely.

6. Scenario — Smart Toaster Y-Statement

In the context of real-time communication between distributed internal sensors and the central control hub in a smart toaster,

we decided to use gRPC instead of REST,

to achieve low-latency, strongly typed, efficient binary communication with streaming support,

accepting increased complexity, tighter coupling through contracts, and reduced human readability of traffic.

7. Consequences — NoSQL for Breakfast Preferences

Positive Consequence 1

Flexible schema allows rapid feature evolution and new preference types without migrations.

Positive Consequence 2

Better horizontal scalability for large user preference datasets.

Negative Consequence

Reduced consistency guarantees and more complex querying/reporting compared to relational databases.

(Other valid negatives could include skill gaps, tooling maturity, or data duplication risks.)

8. Lifecycle — Replacing ADR #0005 with ADR #0012

You should:

Create ADR #0012

Explain new legal/privacy context

State new decision

Reference ADR #0005 as superseded

Update ADR #0005 Status

Change status to Superseded by ADR #0012

Maintain History

Do NOT delete old ADRs

Keep traceability for audits and learning

This preserves decision lineage and compliance evidence.
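The supersession steps above can be sketched as an operation on an in-memory ADR log. The record type, the function name, and the example titles are hypothetical. In a real repository the same steps would be edits to two ADR files in one commit.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class AdrRecord:
    number: int
    title: str
    status: str = "Proposed"
    supersedes: Optional[int] = None


def supersede(log: Dict[int, AdrRecord], old: int, new: AdrRecord) -> None:
    """Add `new` to the log and mark `old` as superseded. Nothing is deleted."""
    if old not in log:
        raise KeyError(f"ADR #{old:04d} not found")
    new.supersedes = old          # the new ADR references the old one
    new.status = "Accepted"
    log[new.number] = new
    log[old].status = f"Superseded by ADR #{new.number:04d}"


# Hypothetical titles, used only to show the status change.
log = {5: AdrRecord(5, "Original decision", status="Accepted")}
supersede(log, old=5, new=AdrRecord(12, "Revised decision after new privacy rules"))
print(log[5].status)
```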

9. Tooling — Ensuring Developer Compliance

Association Principle:

Architectural decisions should be directly connected to the code, tests, or artifacts they influence.

Method + Tool Example

Method:

Require ADR references in pull requests for architecture-impacting changes.

Tool / Technique:

Use GitHub Pull Request templates + CI checks

PR template includes: “Related ADR: ____”

CI validates ADR exists if certain files change (e.g., infra, data, API contracts)

Alternative tools:

Architecture linting rules

ADR repositories with markdown + automation

Tools like Backstage or ADR CLI workflows
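The pull request check described above could look roughly like the sketch below. The "Related ADR:" field comes from the text. The path patterns, the `ADR-NNNN` identifier format, and the function name are assumptions, and a real CI job would obtain the changed files and PR body from its own platform.

```python
import re
from fnmatch import fnmatch

# Hypothetical path patterns treated as architecture-impacting.
ARCHITECTURE_PATHS = ["infra/*", "db/migrations/*", "api/contracts/*"]
# Assumed identifier format: ADR- followed by four digits.
ADR_REFERENCE = re.compile(r"Related ADR:\s*(ADR-\d{4})")


def check_pull_request(changed_files, pr_body, known_adrs):
    """Return a list of error messages; an empty list means the check passes."""
    touches_architecture = any(
        fnmatch(path, pattern)
        for path in changed_files
        for pattern in ARCHITECTURE_PATHS
    )
    if not touches_architecture:
        return []
    match = ADR_REFERENCE.search(pr_body)
    if match is None:
        return ["Architecture-impacting change without a 'Related ADR:' reference"]
    if match.group(1) not in known_adrs:
        return [f"{match.group(1)} does not exist in the ADR directory"]
    return []


errors = check_pull_request(
    changed_files=["infra/terraform/main.tf"],
    pr_body="Related ADR: ADR-0002",
    known_adrs={"ADR-0001", "ADR-0002"},
)
print(errors)
```

A CI job would fail the build when the returned list is non-empty.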

ADR-0001 — Use Retrieval-Augmented Generation (RAG) Instead of Pure LLM Responses

Status: Accepted

Date: 2026-02-06

Context

The chatbot will answer cancer patient questions about:

Treatment pathways

Medication information

Appointment preparation

Hospital-specific procedures

Pure LLM responses risk hallucinating medical information and may not reflect hospital-approved guidance or Danish healthcare regulations.

Decision

We will implement a Retrieval-Augmented Generation (RAG) architecture:

Hospital-approved knowledge base (clinical guidelines, patient leaflets, oncology protocols)

Vector search retrieval

LLM generates answers using retrieved context only

Consequences

Positive

Reduced hallucination risk

Answers aligned with hospital-approved content

Easier clinical review and auditing

Negative

Higher system complexity

Requires knowledge base curation and updates

Slight latency increase vs direct LLM calls
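The retrieval step in ADR-0001 above can be illustrated with a toy example. Note the substitution: this sketch uses a bag-of-words cosine similarity in place of a real embedding model and vector index, and it stops at building the prompt rather than calling an LLM. The function names, sample passages, and score threshold are all hypothetical.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy bag-of-words vector; a real system would use a learned embedding."""
    return Counter(re.findall(r"\w+", text.lower()))


def cosine(a, b):
    dot = sum(a[t] * b[t] for t in a)
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0


def retrieve(question, documents, k=2, min_score=0.1):
    """Return up to k approved passages that are similar enough to the question."""
    q = embed(question)
    scored = sorted(((cosine(q, embed(d)), d) for d in documents), reverse=True)
    return [d for score, d in scored[:k] if score >= min_score]


def build_prompt(question, documents):
    passages = retrieve(question, documents)
    if not passages:
        return None  # nothing approved to ground on: decline or escalate
    context = "\n".join(f"- {p}" for p in passages)
    return ("Answer using ONLY the approved passages below. "
            "If they do not contain the answer, say so.\n"
            f"Approved passages:\n{context}\n\nQuestion: {question}")


docs = [
    "Bring your medication list to the first oncology appointment.",
    "Parking is available at the north entrance.",
]
print(build_prompt("What should I bring to my appointment?", docs))
```

Returning `None` when nothing relevant is retrieved makes the "retrieved context only" constraint explicit: the caller has to handle the no-evidence case instead of letting the model answer freely.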

ADR-0002 — Host Patient Data Processing Inside EU / Denmark-Compliant Cloud

Status: Accepted

Context

The system processes:

Potentially identifiable patient conversations

Health-related data (GDPR special category data)

Danish and EU privacy regulations require strict control over health data processing and residency.

Decision

We will:

Host services in EU-based healthcare-compliant cloud infrastructure

Store patient data only in GDPR-compliant systems

Use data minimization and automatic redaction pipelines

Consequences

Positive

Regulatory compliance

Reduced legal risk

Increased patient trust

Negative

Fewer vendor choices

Potentially higher infrastructure cost

Some cutting-edge AI services may be unavailable

ADR-0003 — Use Human-in-the-Loop Escalation for Medical Advice Risk

Status: Accepted

Context

Cancer patients may ask:

Prognosis questions

Medication safety questions

Emergency symptom questions

The chatbot must not provide unsafe or unverified clinical guidance.

Decision

Implement risk classification + escalation workflow:

Low risk → automated chatbot response

Medium risk → chatbot response + disclaimer + recommend contacting clinic

High risk → block answer + escalate to nurse/oncology team queue

Consequences

Positive

Improved patient safety

Aligns with medical governance

Reduces liability exposure

Negative

Requires clinical staffing integration

Operational overhead

Slower response for high-risk queries
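The three-tier workflow in ADR-0003 above might be wired up as follows. This is a sketch only: the keyword lists stand in for a clinically validated risk classifier, and the action names, queue label, and message texts are hypothetical.

```python
from enum import Enum


class Risk(Enum):
    LOW = "low"
    MEDIUM = "medium"
    HIGH = "high"


# Hypothetical keyword lists, for illustration only.
HIGH_RISK_TERMS = ("chest pain", "overdose", "prognosis", "how long do i have")
MEDIUM_RISK_TERMS = ("side effect", "dose", "medication")
DISCLAIMER = "This is general information. Please contact your clinic for advice."


def classify(message: str) -> Risk:
    text = message.lower()
    if any(term in text for term in HIGH_RISK_TERMS):
        return Risk.HIGH
    if any(term in text for term in MEDIUM_RISK_TERMS):
        return Risk.MEDIUM
    return Risk.LOW


def route(message: str, draft_answer: str) -> dict:
    """Apply the low / medium / high handling described in the decision."""
    risk = classify(message)
    if risk is Risk.HIGH:
        # Block the generated answer and hand over to clinical staff.
        return {"action": "escalate",
                "queue": "nurse/oncology",
                "reply": "A member of the care team will follow up with you."}
    if risk is Risk.MEDIUM:
        return {"action": "respond_with_disclaimer",
                "reply": f"{draft_answer} {DISCLAIMER}"}
    return {"action": "respond", "reply": draft_answer}


print(route("I have chest pain after chemo", "draft text")["action"])
```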

ADR-0004 — Use Multilingual Support (Danish First, English Secondary)

Status: Accepted

Context

Primary users are Danish cancer patients, but:

Some patients speak English

Some staff will test or support in English

Decision

We will:

Optimize prompts and knowledge base primarily for Danish

Provide English fallback responses

Store knowledge base in Danish + translated versions where required

Consequences

Positive

Better patient accessibility

Higher answer quality for primary population

Negative

Translation maintenance overhead

Need bilingual clinical review

ADR-0005 — Log Conversations for Clinical Quality Review (With De-Identification)

Status: Proposed

Context

Hospital wants to:

Improve chatbot quality

Detect unsafe outputs

Train future models

But must protect patient privacy.

Decision

We will:

Store conversation logs after automatic de-identification

Remove CPR numbers, names, addresses, phone numbers

Allow patients to opt out of conversation logging

Consequences

Positive

Enables continuous improvement

Supports incident investigation

Helps clinical audit

Negative

Requires strong anonymization pipeline

Residual re-identification risk

Additional compliance review overhead
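Part of the de-identification step in ADR-0005 above can be sketched with regular expressions. The sketch assumes CPR numbers written as ten digits with an optional hyphen (DDMMYY-SSSS) and Danish phone numbers written as eight digits with an optional +45 prefix. Names and addresses need entity recognition and are deliberately left out. All function names are hypothetical.

```python
import re

# Eight digits, optionally grouped in pairs, optionally prefixed with +45.
PHONE = re.compile(r"(?<!\d)(?:\+45 ?)?\d{2}(?: ?\d{2}){3}(?!\d)")
# Ten digits with an optional hyphen after the date part.
CPR = re.compile(r"(?<!\d)\d{6}-?\d{4}(?!\d)")


def deidentify(text: str) -> str:
    """Replace phone and CPR numbers with placeholders."""
    text = PHONE.sub("[PHONE]", text)   # run first so +45 prefixes are consumed
    return CPR.sub("[CPR]", text)


def log_conversation(text: str, opted_out: bool, store: list) -> bool:
    """Store a de-identified copy unless the patient has opted out."""
    if opted_out:
        return False
    store.append(deidentify(text))
    return True


print(deidentify("My CPR is 010190-1234, call +45 12 34 56 78"))
```

Pattern matching of this kind only reduces the residual re-identification risk noted above. It does not remove it.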

ADR-0006 — Restrict Chatbot to Informational Support, Not Diagnosis

Status: Accepted

Context

LLMs can appear authoritative. Cancer diagnosis or treatment planning must remain clinician-led.

Decision

The chatbot will:

Provide educational and navigation support only

Never generate diagnosis statements

Include safety guardrails and prompt constraints

Consequences

Positive

Reduces clinical and legal risk

Clear scope for product

Negative

Some patients may expect deeper clinical answers

Requires careful UX messaging

ADR-0007 — Use Prompt Versioning + Model Version Tracking

Status: Accepted

Context

LLM behaviour changes over time due to:

Prompt updates

Model upgrades

Knowledge base updates

Healthcare requires traceability.

Decision

Every response will log:

Model version

Prompt template version

Knowledge snapshot ID

Consequences

Positive

Enables audit trails

Supports incident root-cause analysis

Helps regulatory reporting

Negative

More logging storage cost

Additional engineering complexity
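The per-response record in ADR-0007 above could be modelled as a small immutable structure serialised to one JSON line. The three version fields come from the decision. The `response_id` and `created_at` fields, the class name, and the sample values are assumptions added so that records can be correlated.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class ResponseTrace:
    response_id: str
    model_version: str
    prompt_template_version: str
    knowledge_snapshot_id: str
    created_at: str


def make_trace(response_id, model_version, prompt_template_version,
               knowledge_snapshot_id) -> ResponseTrace:
    return ResponseTrace(
        response_id=response_id,
        model_version=model_version,
        prompt_template_version=prompt_template_version,
        knowledge_snapshot_id=knowledge_snapshot_id,
        created_at=datetime.now(timezone.utc).isoformat(),
    )


def to_log_line(trace: ResponseTrace) -> str:
    """Serialise with stable key order so log lines are easy to diff."""
    return json.dumps(asdict(trace), sort_keys=True)


trace = make_trace("resp-001", "model-2026-01", "prompt-v7", "kb-snapshot-42")
print(to_log_line(trace))
```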

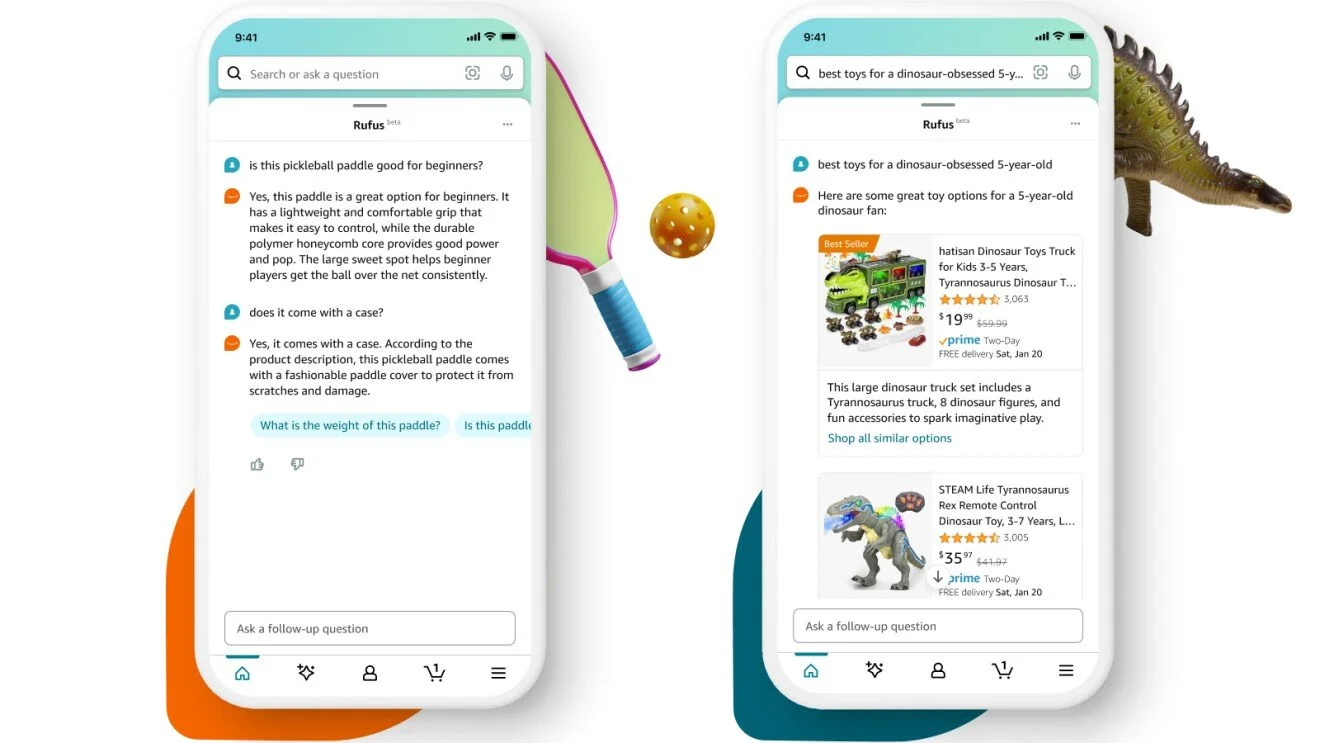

ADR-0001 — Use LLM + Rules Hybrid for Rufus Content Optimisation

Status: Accepted

Date: 2026-02-06

Context

The tool must generate and optimise:

Product titles

Bullets

A+ content suggestions

Conversational answer snippets (Rufus Q&A style)

Pure LLM generation risks:

Brand compliance violations

Regulatory claim risks (especially dairy & nutrition claims in EU)

Inconsistent tone across brands (Mars vs Arla brand voices differ)

Decision

Use a hybrid system:

LLM generates candidate optimisations

Rules engine validates:

Brand voice rules

Nutrition / health claim compliance

Amazon category content policies

Consequences

Positive

Safer automated content generation

Maintains brand consistency

Reduces legal/compliance review load

Negative

Higher engineering complexity

Requires ongoing rule maintenance

Slightly slower generation pipeline
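The generate-then-validate flow in ADR-0001 above can be sketched as a list of rule functions applied to each LLM candidate. The banned phrases, the length limit, and the sample candidates are invented for illustration. Real rules would come from brand guidelines, claim regulations, and marketplace policy.

```python
# Hypothetical rule data, for illustration only.
BANNED_CLAIMS = ("cures", "prevents disease", "clinically proven")
MAX_TITLE_LENGTH = 200  # assumed limit


def no_banned_claims(text):
    lowered = text.lower()
    return [f"banned claim: '{c}'" for c in BANNED_CLAIMS if c in lowered]


def within_title_length(text):
    if len(text) <= MAX_TITLE_LENGTH:
        return []
    return [f"title longer than {MAX_TITLE_LENGTH} characters"]


RULES = [no_banned_claims, within_title_length]


def validate(candidate):
    """Run every rule and collect all violations for one candidate."""
    return [violation for rule in RULES for violation in rule(candidate)]


def select(candidates):
    """Keep only the candidates that pass every rule."""
    return [c for c in candidates if not validate(c)]


candidates = [
    "Creamy Oat Drink 1L, Source of Protein",
    "Milk that cures colds",
]
print(select(candidates))
```

Collecting violations, instead of returning a bare pass or fail, gives reviewers the reason a candidate was rejected.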

ADR-0002 — Centralised Brand Knowledge Graph for Product + Claim Relationships

Status: Accepted

Context

Mars and Arla manage:

Hundreds of SKUs

Complex claim relationships (e.g., protein content, sustainability claims, ingredient sourcing)

Frequent product refresh cycles

Simple flat product databases make optimisation logic brittle.

Decision

Implement a Brand Knowledge Graph storing:

SKU → Ingredients → Claims → Certifications → Regions → Campaigns

Queryable by optimisation engine and analytics

Consequences

Positive

Enables richer Rufus conversational answers

Supports cross-product optimisation insights

Improves data reuse across brands

Negative

Higher data modelling effort

Requires governance and stewardship

Harder initial setup vs relational tables

ADR-0003 — Use Retrieval-Augmented Generation (RAG) for Rufus Q&A Optimisation

Status: Accepted

Context

Rufus answers customer questions using Amazon catalog data plus contextual signals.

Optimisation requires aligning product content with likely customer questions.

Decision

Use RAG combining:

Amazon product catalog exports

Customer review themes

Search query datasets

Brand product documentation

LLM generates Rufus-aligned answer suggestions using retrieved evidence.

Consequences

Positive

Improves factual grounding

Aligns content to real customer language

Reduces hallucinated product claims

Negative

Requires vector index maintenance

Data freshness dependency

More infrastructure cost

ADR-0004 — Multi-Tenant Architecture With Brand Isolation

Status: Accepted

Context

The platform will serve multiple FMCG clients:

Mars

Arla

Potential future EU FMCG brands

Brand data and strategy must remain isolated.

Decision

Implement logical tenant isolation:

Separate brand vector indexes

Separate optimisation rule sets

Separate analytics dashboards

Shared core infrastructure

Consequences

Positive

Scales to additional clients

Protects commercial data

Enables brand-specific optimisation strategies

Negative

Increased deployment complexity

Higher operational monitoring overhead

ADR-0005 — Use EU Cloud Hosting With Data Export Controls

Status: Accepted

Context

Although product data is not personal data, tool usage may include:

Customer review text

Search behaviour datasets

Potential marketplace partner data

EU companies prefer EU-hosted processing.

Decision

Host analytics + optimisation services in EU cloud regions with:

Data export logging

Role-based access controls

Audit logging for client data usage

Consequences

Positive

Supports EU enterprise procurement requirements

Improves client trust

Easier compliance reviews

Negative

Some AI services may have delayed EU availability

Higher infra cost vs global-only hosting

ADR-0006 — Optimisation Recommendations Must Be Explainable

Status: Accepted

Context

Brand and category managers must trust optimisation outputs.

Black-box recommendations reduce adoption.

Decision

Every optimisation suggestion must include:

Source signal (reviews, search queries, competitor analysis)

Confidence score

Expected impact metric (CTR, CVR, visibility probability)

Consequences

Positive

Higher adoption by brand teams

Easier A/B test planning

Supports internal justification

Negative

Requires extra data processing

More complex UI and data pipelines

ADR-0007 — Continuous Learning via Closed-Loop Performance Feedback

Status: Proposed

Context

Optimisation value depends on learning from:

Content changes

Conversion performance

Rufus interaction trends

Decision

Implement feedback loop using:

Amazon performance metrics (where available)

Internal experimentation tracking

Content version → performance correlation

Consequences

Positive

Improves optimisation quality over time

Enables ROI measurement

Negative

Attribution complexity

Requires careful experiment design

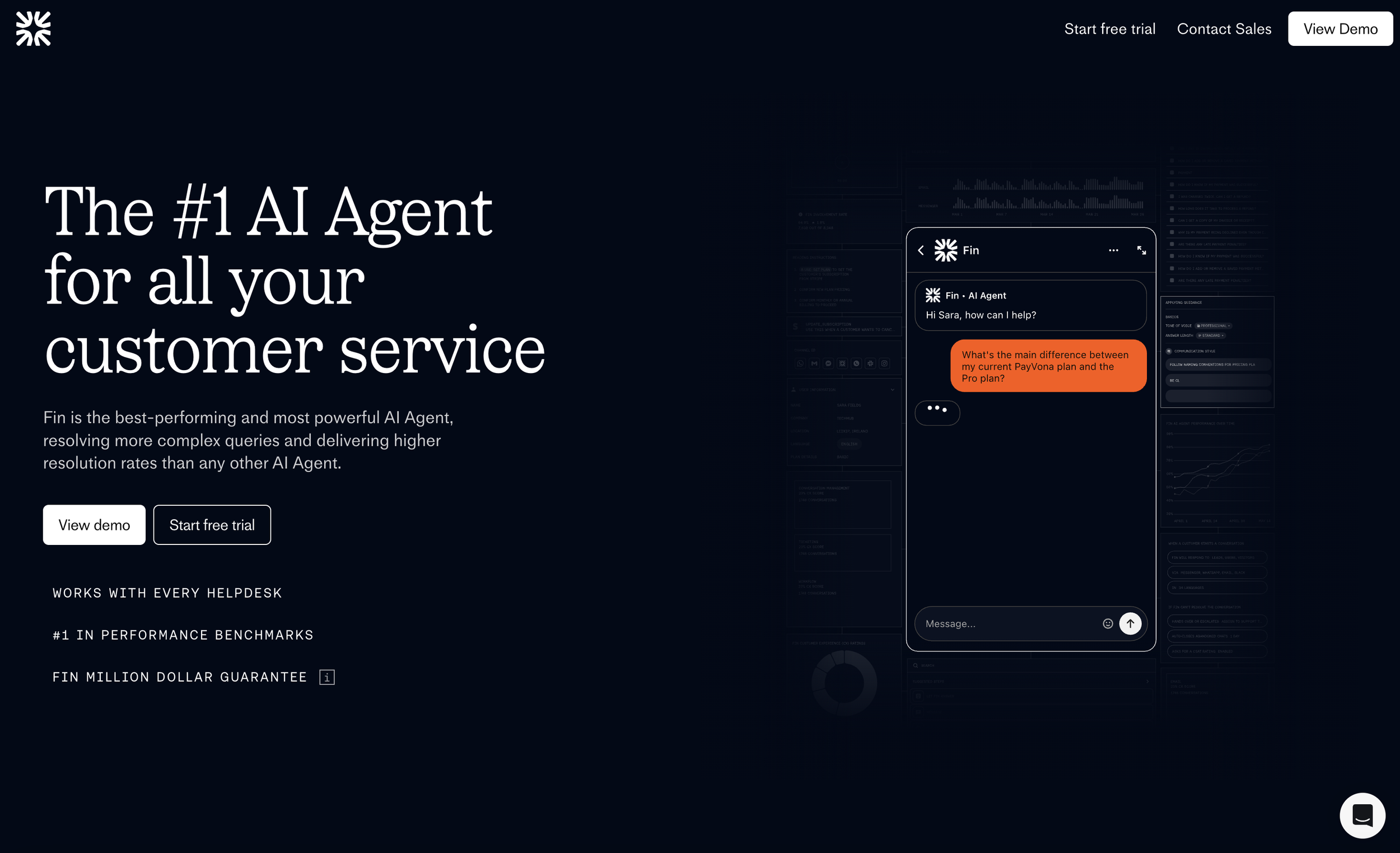

Below is a clean, production-quality ADR set for adopting and operating Fin (Fin.ai) by Intercom as an enterprise AI Customer Service Agent.

I’ve structured these like ADRs you’d realistically store in a repo for architecture governance, security review, or procurement sign-off.

Fin.ai / Intercom — Example ADR Set

ADR-0001 — Adopt Fin (Intercom) as Primary AI Customer Service Agent

Status: Accepted

Date: 2026-02-06

Context

The organisation requires an AI agent capable of:

Resolving complex customer queries

Operating across multiple channels

Integrating with existing helpdesk infrastructure

Operating with minimal engineering overhead

Internal LLM build options were evaluated but present:

Higher time-to-market

Ongoing MLOps overhead

Increased governance and safety burden

Decision

We will adopt Fin by Intercom as the primary AI Customer Service Agent, due to:

Average ~65% resolution rate

No-code configuration model

Rapid deployment (setup in under 1 hour)

Native helpdesk integration capability

Enterprise safety controls and certifications

Consequences

Positive

Fast deployment and time to value

Reduced internal AI engineering cost

Proven production reliability

Continuous vendor model improvement

Negative

Vendor lock-in risk

Limited low-level model customisation

Ongoing per-resolution operational cost

ADR-0002 — Multi-Channel Customer Interaction Strategy

Status: Accepted

Context

Customers contact support through:

Live chat

Email

SMS

Phone (voice assist workflows)

Social media messaging

Fragmented automation causes inconsistent customer experience and duplicated support workflows.

Decision

Fin will be deployed as a single AI resolution layer across:

Web chat

Email

Messaging channels

Voice-assist workflows (via integration layer)

Consequences

Positive

Unified customer experience

Shared training data across channels

Higher automation coverage

Negative

Channel-specific nuance may require custom training

Increased monitoring complexity across channels

ADR-0003 — Helpdesk Integration (No Migration Strategy)

Status: Accepted

Context

Current support stack includes:

Existing helpdesk ticketing workflows

Agent assignment rules

Reporting pipelines

CRM integrations

Full migration to a single vendor platform is not feasible short-term.

Decision

Fin will integrate with existing helpdesks (e.g., Intercom, Zendesk, Salesforce Service Cloud) using:

Native integration connectors

Existing routing and escalation rules

Existing reporting dashboards

Consequences

Positive

Minimal operational disruption

Faster rollout

Lower training requirements for support teams

Negative

Potential limitations vs full native platform features

Requires integration monitoring

ADR-0004 — Outcome-Based Pricing Model Adoption

Status: Accepted

Context

Traditional SaaS pricing models charge per seat or per conversation.

Fin is priced per successful resolution (~$0.99 per resolution).

Decision

We will adopt outcome-based pricing and align Fin usage with:

Resolution quality metrics

Automation rate targets

Cost-per-resolution thresholds

Consequences

Positive

Direct ROI measurement

Aligns cost to business value

Encourages optimisation of automation quality

Negative

Requires strong resolution definition governance

Requires cost forecasting models
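A first-pass cost forecast for ADR-0004 above is simple arithmetic: conversations multiplied by resolution rate multiplied by price per resolution. The sketch below uses the approximate figures quoted in this ADR set (~65% resolution rate, ~$0.99 per resolution). The monthly volume of 10,000 conversations and the budget figure are hypothetical. Amounts are kept in cents to avoid floating-point rounding.

```python
def forecast(conversations: int,
             resolution_rate: float = 0.65,
             price_cents: int = 99) -> dict:
    """Estimate billable resolutions and monthly cost in cents."""
    resolutions = round(conversations * resolution_rate)
    return {"resolutions": resolutions,
            "cost_cents": resolutions * price_cents}


def within_budget(cost_cents: int, budget_cents: int) -> bool:
    return cost_cents <= budget_cents


estimate = forecast(10_000)                 # hypothetical monthly volume
print(estimate["resolutions"])              # 6500
print(estimate["cost_cents"] / 100)         # 6435.0
print(within_budget(estimate["cost_cents"], budget_cents=700_000))
```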

ADR-0005 — Security and Identity Verification Using JWT

Status: Accepted (mandatory control)

Context

Customer identity verification is required to prevent:

Account takeover

Cross-user data leakage

Session spoofing

Legacy identity verification approaches are deprecated.

Decision

We will use JWT-based identity verification for Intercom Messenger:

Signed user ID and email

Short token expiry

Token rotation via backend auth service

Consequences

Positive

Strong identity validation

Reduced impersonation risk

Industry standard implementation

Negative

Requires secure token issuance service

Requires key rotation governance
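The token issuance service in ADR-0005 above can be illustrated with a standard-library HS256 JWT. This is a sketch of the general JWT mechanism, not Intercom's documented integration: the claim names `user_id` and `email`, the five-minute expiry, and the function names are assumptions that should be checked against the vendor documentation. A production service would normally use a maintained JWT library.

```python
import base64
import hashlib
import hmac
import json
import time


def _b64url(data: bytes) -> str:
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def _b64url_decode(text: str) -> bytes:
    return base64.urlsafe_b64decode(text + "=" * (-len(text) % 4))


def _sign(secret: bytes, signing_input: str) -> bytes:
    return hmac.new(secret, signing_input.encode("ascii"), hashlib.sha256).digest()


def issue_token(secret: bytes, user_id: str, email: str,
                ttl_seconds: int = 300, now=None) -> str:
    """Issue a short-lived HS256 token carrying the user ID and email."""
    now = int(time.time()) if now is None else now
    header = {"alg": "HS256", "typ": "JWT"}
    payload = {"user_id": user_id, "email": email, "exp": now + ttl_seconds}
    signing_input = ".".join(
        _b64url(json.dumps(part, separators=(",", ":")).encode("utf-8"))
        for part in (header, payload)
    )
    return signing_input + "." + _b64url(_sign(secret, signing_input))


def verify_token(secret: bytes, token: str, now=None) -> dict:
    """Return the payload, or raise ValueError if the token is invalid."""
    now = int(time.time()) if now is None else now
    header_b64, payload_b64, signature_b64 = token.split(".")
    expected = _sign(secret, f"{header_b64}.{payload_b64}")
    if not hmac.compare_digest(expected, _b64url_decode(signature_b64)):
        raise ValueError("bad signature")
    if json.loads(_b64url_decode(header_b64)).get("alg") != "HS256":
        raise ValueError("unexpected algorithm")
    payload = json.loads(_b64url_decode(payload_b64))
    if payload["exp"] <= now:
        raise ValueError("token expired")
    return payload


token = issue_token(b"demo-secret", "user-123", "a@example.com", now=1_000)
print(verify_token(b"demo-secret", token, now=1_010)["user_id"])
```

The signing secret must stay on the backend. Rotating it invalidates outstanding tokens, which is one reason the short expiry matters.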

ADR-0006 — AI Governance and Responsible AI Compliance

Status: Accepted

Context

Customer-facing AI introduces risks:

Hallucinations

Brand reputation risk

Unsafe or misleading responses

Prompt injection or adversarial queries

Decision

We will align operations with:

Intercom AI security protections

AIUC-1 certification framework controls

Internal AI governance review process

Continuous output monitoring

Consequences

Positive

Demonstrable responsible AI posture

Strong enterprise procurement alignment

Reduced brand risk

Negative

Requires ongoing audit reviews

Requires internal AI risk reporting process

ADR-0007 — Data Residency and Privacy Compliance Strategy

Status: Accepted

Context

Operations span:

EEA

UK

USA

Must comply with:

GDPR

UK GDPR

CCPA (where applicable)

Decision

We will:

Use Intercom-approved data hosting frameworks

Use legal transfer mechanisms (e.g., DPF or equivalent where applicable)

Provide transparency via privacy policy updates

Implement data minimisation in customer payloads

Consequences

Positive

Regulatory compliance support

Lower legal exposure

Easier enterprise sales approvals

Negative

Requires ongoing vendor compliance review

May limit certain data uses

ADR-0008 — Prohibited Sensitive Data Handling

Status: Accepted (mandatory control)

Context

Standard support tooling is not certified for:

PCI DSS payment storage

Certain regulated health data processing

Special category sensitive data

Decision

Fin will be configured to:

Avoid requesting or storing sensitive personal data

Redirect sensitive workflows to secure systems

Use detection rules to block restricted data categories

Consequences

Positive

Reduces compliance exposure

Protects customers and organisation

Negative

Requires fallback flows for sensitive workflows

May increase handoffs to human agents
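One detection rule of the kind ADR-0008 above calls for is a payment card check: find digit runs of card length and confirm them with the Luhn checksum. The sketch below is an assumption about how such a rule could be written, not a description of Fin's built-in configuration. The function names and the block message are hypothetical.

```python
import re

# 13 to 19 digits, optionally separated by single spaces or hyphens.
CARD_CANDIDATE = re.compile(r"\b(?:\d[ -]?){12,18}\d\b")


def luhn_valid(digits: str) -> bool:
    """Luhn checksum: double every second digit from the right."""
    total = 0
    for index, char in enumerate(reversed(digits)):
        value = int(char)
        if index % 2 == 1:
            value *= 2
            if value > 9:
                value -= 9
        total += value
    return total % 10 == 0


def contains_card_number(text: str) -> bool:
    for match in CARD_CANDIDATE.finditer(text):
        digits = re.sub(r"\D", "", match.group())
        if luhn_valid(digits):
            return True
    return False


def guard_message(text: str) -> str:
    """Block messages with card numbers and point to the secure flow."""
    if contains_card_number(text):
        return "blocked: please use the secure payment page"
    return "ok"


# 4111 1111 1111 1111 is a widely used test number that passes Luhn.
print(guard_message("my card is 4111 1111 1111 1111"))
```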

ADR-0009 — Continuous Optimisation Using Fin Analytics and Testing

Status: Accepted

Context

AI performance degrades without:

Content updates

Training refresh

Behaviour monitoring

Decision

We will use Fin tooling to:

Analyse support trends

Identify content gaps

Run answer testing experiments

Optimise tone and localisation (45+ languages capability)

Consequences

Positive

Continuous automation improvement

Higher long-term resolution rates

Negative

Requires content operations ownership

Requires performance review cadence

Strategic ADR — Fin vs Build-Your-Own LLM Customer Support Platform

ADR-STRAT-0001 — Strategic Direction for AI Customer Service Platform

Status: Accepted (Example)

Owner: CTO / Chief Digital Officer

Decision Type: Strategic / Board-Level

Date: 2026-02-06

Context

The organisation is investing in AI-driven customer support automation to achieve:

Higher resolution rates

Lower cost per contact

24/7 global support coverage

Scalable multilingual support

Improved customer experience

Two strategic options were evaluated:

Option A — Adopt Fin (Intercom Managed AI Agent)

A vendor-managed AI support agent providing:

No-code configuration

Multi-channel deployment

Outcome-based pricing

Enterprise-grade safety controls

Pre-built integrations

Option B — Build Proprietary LLM Support Platform

Internally built AI support platform including:

Custom LLM orchestration

Internal RAG pipelines

Internal safety guardrails

Custom evaluation frameworks

Full data and model control

Decision

The organisation will adopt Fin (Intercom) as the primary AI customer support platform for the next 24–36 months, while maintaining a strategic capability assessment for potential future internal AI platform development.

Strategic Drivers Behind Decision

1. Time to Value

Fin deployment: weeks

Internal build: 12–24 months to mature production capability

2. Risk Reduction

Vendor solution provides:

Mature production usage

Pre-built safety controls

Certified responsible AI controls

Enterprise-grade reliability

3. Talent and Capability Reality

Building requires:

LLM platform engineering

AI safety engineering

ML infrastructure (MLOps / LLMOps)

Continuous evaluation teams

This capability is currently limited internally.

4. Cost Predictability

Fin:

Variable cost aligned to outcomes

Lower upfront capital investment

Build:

High upfront platform cost

Ongoing model ops + infra + team cost

Alternatives Considered

Full Internal LLM Platform

Rejected for now due to:

High upfront cost

Talent acquisition risk

Longer time to business value

Higher regulatory and safety ownership burden

Hybrid (Internal LLM + Vendor Tools)

Deferred until internal AI maturity increases.

Consequences

Positive Consequences

1. Faster Business Impact

Immediate automation improvements and measurable ROI.

2. Lower Execution Risk

Vendor absorbs:

Model maintenance

Safety tuning

Threat mitigation

Scaling infrastructure

3. Reduced Regulatory Burden

Vendor certification and governance frameworks reduce internal compliance workload.

4. Predictable Cost Model

Outcome pricing aligns cost with customer value delivered.

Negative Consequences

1. Vendor Dependency

Risk of:

Pricing changes

Feature roadmap control outside organisation

Contract lock-in

Mitigation:

Data portability strategy

Periodic market re-evaluation

Exit architecture maintained

2. Reduced Differentiation

Competitors could use the same vendor platform.

Mitigation:

Differentiate through:

Proprietary knowledge

Customer workflows

Integration depth

Customer experience design

3. Long-Term Strategic Capability Gap

Risk of falling behind in internal AI capability.

Mitigation:

Maintain internal AI architecture review group

Run small internal experimentation programme

Reassess build vs buy every 12 months

Financial Trade-Off Summary

| Dimension | Fin | Build Internal |
| --- | --- | --- |
| Upfront Cost | Low | Very High |
| Ongoing Cost | Variable | High Fixed + Variable |
| Time to Market | Fast | Slow |
| Talent Requirement | Low | Very High |
| Control | Medium | Full |
| Risk | Lower | Higher (initially) |

Risk Register (Strategic)

| Risk | Impact | Likelihood | Mitigation |
| --- | --- | --- | --- |
| Vendor pricing increase | Medium | Medium | Multi-year contract + exit plan |
| Vendor roadmap misalignment | Medium | Medium | Quarterly roadmap governance |
| Vendor outage risk | High | Low | Human fallback workflows |
| AI regulatory changes | High | Medium | Vendor compliance + internal review |

Exit Strategy

The architecture will ensure:

Conversation data export capability

Knowledge base portability

Integration abstraction layer

Internal evaluation capability retained

This enables future migration if:

Costs become unfavourable

Strategic differentiation requires internal AI

Regulatory requirements change

Strategic Review Cadence

Re-evaluate Build vs Buy when:

Internal AI capability reaches maturity

Support automation becomes core competitive differentiator

Vendor costs exceed internal TCO

Regulatory environment changes

Formal review interval: 12 months

Board-Level Y-Statement

In the context of scaling global customer support automation while managing cost, regulatory exposure, and delivery risk,

we decided to adopt Fin (Intercom) as the primary AI support agent,

to achieve rapid time-to-value, predictable operational cost, and enterprise-grade safety and reliability,

accepting vendor dependency and reduced low-level AI customisation in the short to medium term.

Below is a Security / Board + Risk Committee level ADR focused specifically on LLM threat modelling.

This sits alongside strategic ADRs and would typically be owned jointly by Security, Platform, and AI Governance.

Security ADR — LLM Threat Model and Mitigation Strategy

ADR-SEC-0004 — LLM Threat Model (Prompt Injection, Data Exfiltration, Jailbreak Resistance)

Status: Accepted (Example)

Owner: CISO / Head of AI Security

Decision Type: Security Architecture / Enterprise Risk

Date: 2026-02-06

Context

The organisation is deploying LLM-powered customer-facing and internal AI systems (e.g., AI support agents, copilots, automation assistants).

LLMs introduce new attack classes not fully covered by traditional AppSec models:

Prompt Injection

Data Exfiltration via Model Context

Jailbreaks / Policy Evasion

Tool Abuse (if agentic capabilities exist)

Training Data Leakage

Cross-tenant Context Leakage

These risks can impact:

Customer data confidentiality

Regulatory compliance (GDPR, PCI, etc.)

Brand reputation

Operational trust in AI systems

Decision

We will implement a Layered LLM Security Model covering:

Input Security Controls

Context Isolation and Data Minimisation

Output Validation and Policy Enforcement

Runtime Behaviour Monitoring

Vendor Security Assurance (where applicable)

Continuous Red Teaming and Testing

Threat Model Scope

In Scope

External user prompts

Retrieved knowledge base content

Tool invocation payloads

LLM responses

System prompts and hidden instructions

Integration data (CRM, ticketing, internal APIs)

Out of Scope

Non-LLM traditional application attacks (handled by existing AppSec framework)

Primary Threat Classes and Controls

Threat Class 1 — Prompt Injection

Threat Description

Attackers attempt to override system instructions or force the model to:

Reveal hidden prompts

Ignore policy controls

Perform unintended actions

Access restricted data

Example attack patterns:

“Ignore previous instructions…”

“You are now in debug mode…”

Embedded instructions in uploaded documents or URLs

Controls

Technical Controls

Input classification and risk scoring

Context boundary enforcement

Instruction hierarchy (system > policy > user)

Prompt template hardening

Retrieval content sanitisation

Operational Controls

Red team prompt injection testing

Prompt change approval workflow

Residual Risk

Medium — cannot be fully eliminated, only reduced.

Threat Class 2 — Data Exfiltration

Threat Description

Attackers attempt to extract:

PII

Customer records

Internal knowledge base content

System prompts

Cross-user session data

Controls

Technical Controls

Strict tenant isolation

Data minimisation in prompt context

Retrieval filtering (need-to-know basis)

Output PII scanning

Token-level response filtering

Architecture Controls

No direct raw database access from LLM

Mediated tool access via policy layer

Zero-trust service access

Residual Risk

Low–Medium depending on data exposure surface.

Threat Class 3 — Jailbreaks

Threat Description

Attempts to bypass safety or policy constraints through:

Multi-step reasoning attacks

Role-play attacks

Encoding / obfuscation attacks

Language switching attacks

Controls

Technical Controls

Safety classifier layer before response release

Adversarial prompt detection

Multi-model verification for high-risk outputs

Refusal and safe completion patterns

Process Controls

Continuous jailbreak dataset testing

Vendor model update review

Residual Risk

Medium — evolves with new attack patterns.

Threat Class 4 — Tool / Agent Abuse (If Enabled)

Threat Description

If the LLM can:

Call APIs

Trigger workflows

Access data systems

Attackers may attempt:

Privilege escalation

Fraudulent transactions

Data scraping

Controls

Tool permission scoping

Transaction confirmation gates

Policy engine approval layer

Rate limiting and anomaly detection

Residual Risk

Low with strict policy enforcement.
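The Threat Class 4 controls above (permission scoping, confirmation gates, rate limiting) can be combined in one policy object that sits between the LLM and its tools. This is a minimal sketch: the tool names, the per-minute limit, and the returned decision strings are hypothetical, and anomaly detection is not shown.

```python
import time
from dataclasses import dataclass, field


@dataclass
class ToolPolicy:
    allowed_tools: set
    confirmation_required: set
    max_calls_per_minute: int = 10
    _calls: list = field(default_factory=list)

    def authorize(self, tool: str, confirmed: bool = False, now=None) -> str:
        """Decide whether a tool call requested by the LLM may run."""
        now = time.monotonic() if now is None else now
        if tool not in self.allowed_tools:
            return "deny: tool not in scope"
        if tool in self.confirmation_required and not confirmed:
            return "hold: confirmation required"
        # Keep only calls from the last 60 seconds, then apply the limit.
        self._calls = [t for t in self._calls if now - t < 60]
        if len(self._calls) >= self.max_calls_per_minute:
            return "deny: rate limit exceeded"
        self._calls.append(now)
        return "allow"


policy = ToolPolicy(
    allowed_tools={"lookup_order", "issue_refund"},
    confirmation_required={"issue_refund"},
    max_calls_per_minute=2,
)
print(policy.authorize("delete_account", now=0))
print(policy.authorize("issue_refund", now=0))
```

The model only proposes a call and the policy layer decides. That is the mediated tool access listed under the architecture controls for Threat Class 2.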

Security Architecture Pattern

Required Control Layers

Input Security Layer

Injection detection

Content classification

Context Security Layer

Data filtering

Tenant isolation

Least privilege retrieval

Model Safety Layer

Guardrails

Safety tuning

Prompt templates

Output Security Layer

PII detection

Policy validation

Response risk scoring

Monitoring Layer

Behaviour anomaly detection

Security event logging

Governance Requirements

Mandatory

LLM threat modelling review per new deployment

Quarterly red team exercises

Security logging for all LLM requests

Incident response playbooks for AI incidents

Vendor Requirements (If Using External AI Providers)

Vendors must provide:

Security architecture transparency

Model safety documentation

Data handling guarantees

Incident notification SLAs

Independent certification where available

Consequences

Positive

Reduces probability of high-impact AI incidents

Supports regulatory and audit requirements

Builds customer trust in AI deployments

Negative

Increases development complexity

Adds latency via safety layers

Requires dedicated AI security capability

Strategic Risk Acceptance Statement

The organisation acknowledges that:

LLM systems cannot be made perfectly secure

Risk must be continuously managed and monitored

Security posture must evolve with threat landscape

Board-Level Y-Statement

In the context of deploying LLM-powered systems that process customer and enterprise data,

we decided to implement a layered LLM threat defence architecture,

to achieve protection against prompt injection, data exfiltration, jailbreak attacks, and tool abuse,

accepting increased engineering complexity, additional latency, and ongoing operational security investment.

Operations + Risk ADR — Human Escalation Policy for AI Agents

ADR-OPS-0010 — Mandatory Human Escalation Framework for AI Customer Interactions

Status: Accepted (Example)

Owner: Head of Customer Operations + AI Governance + Risk

Decision Type: Operational Safety / Customer Risk / AI Governance

Date: 2026-02-06

Context

The organisation is deploying AI agents (e.g., LLM-powered support assistants) to automate customer interactions across digital channels.

While AI systems can resolve a large percentage of queries, risks remain:

Incorrect or hallucinated answers

Sensitive or high-risk customer scenarios

Legal or regulatory exposure

Customer frustration during complex interactions

Situations requiring empathy or judgement

Regulators and enterprise customers increasingly expect human override capability for AI systems affecting customers.

Decision

We will implement a Mandatory Human Escalation Framework ensuring that AI interactions are transferred to human agents when defined risk, complexity, or confidence thresholds are met.

This framework will be enforced via:

Automated risk classification

Confidence-based escalation

User-requested escalation at any time

Mandatory escalation categories

Escalation Triggers

1. Customer-Initiated Escalation (Always Allowed)

Customers can request a human agent at any time using:

Natural language (“talk to a human”, “agent please”)

UI escalation buttons

Repeated failed resolution attempts

Policy:

The system must never block or delay a customer-requested escalation.

2. Risk-Based Automatic Escalation

Escalate when content includes:

Regulatory / Legal Risk

Complaints about compliance breaches

Legal threats or disputes

Regulatory reporting scenarios

Financial Risk

Billing disputes

Refund escalation requests above threshold

Fraud suspicion

Safety / Wellbeing Signals (If Relevant Domain)

Emotional distress

Medical or safety advice requests

Crisis language

3. AI Confidence / Quality Escalation

Escalate when:

Model confidence below defined threshold

Multiple failed resolution attempts

Conflicting retrieved information

Out-of-distribution queries

4. Sensitive Data or Account Risk

Escalate when:

Identity verification uncertainty

Account access anomalies

Sensitive data detected in conversation

Escalation Levels

Level 1 — Assisted AI → Human

AI gathers context → Transfers to human agent

Goal: Reduce handling time

Level 2 — Immediate Human Handoff

AI stops responding → Transfers immediately

Used for high-risk categories

Level 3 — Specialist Escalation

Routing to:

Tier 2 support

Compliance team

Security team

Legal team
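
The triggers and levels above can be sketched as a small routing function. This is an illustration under stated assumptions, not a reference implementation: the `Signals` fields, the 0.7 confidence threshold, the two-attempt limit, and the mapping from each trigger to a level are hypothetical, since the ADR leaves those values to be defined.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Level(Enum):
    ASSISTED = 1     # Level 1: AI gathers context, then transfers
    IMMEDIATE = 2    # Level 2: AI stops responding and transfers at once
    SPECIALIST = 3   # Level 3: route to a specialist team


@dataclass
class Signals:
    customer_requested_human: bool = False
    risk_category: Optional[str] = None  # e.g. "legal", "financial", "safety"
    model_confidence: float = 1.0
    failed_attempts: int = 0
    sensitive_data_detected: bool = False


# Hypothetical values; the ADR only refers to a "defined threshold".
CONFIDENCE_THRESHOLD = 0.7
MAX_FAILED_ATTEMPTS = 2
SPECIALIST_CATEGORIES = {"legal", "regulatory", "security"}


def decide_escalation(s: Signals) -> Optional[Level]:
    """Return the escalation level, or None if the AI may continue."""
    # Trigger 1: a customer request is never blocked or delayed.
    if s.customer_requested_human:
        return Level.IMMEDIATE
    # Trigger 2: risk-based escalation, with specialist routing where assumed.
    if s.risk_category in SPECIALIST_CATEGORIES:
        return Level.SPECIALIST
    if s.risk_category is not None:
        return Level.IMMEDIATE
    # Trigger 4: sensitive data or account risk.
    if s.sensitive_data_detected:
        return Level.IMMEDIATE
    # Trigger 3: low confidence or repeated failed attempts.
    if (s.model_confidence < CONFIDENCE_THRESHOLD
            or s.failed_attempts >= MAX_FAILED_ATTEMPTS):
        return Level.ASSISTED
    return None
```

Checking the customer request first keeps the "always allowed" policy independent of every other classifier, so a misfiring risk model cannot suppress it.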

Operational Requirements

Conversation Context Transfer

When escalating, the system must pass:

Conversation history

Customer metadata

AI confidence signals

Risk classification reason

Response Time Targets

Define SLA for:

Standard escalation

High-risk escalation

Safety-critical escalation

Customer Transparency

Customers must know:

They are interacting with AI

They can request human help anytime

Governance Requirements

Mandatory Monitoring

Track:

Escalation rate

False positive escalations

Missed escalation incidents

Customer satisfaction post-escalation

Audit Requirements

Log:

Escalation trigger reason

AI confidence score

Risk classification output

Review Cadence

Monthly review of:

Escalation thresholds

Missed risk incidents

Customer sentiment trends

Technology Requirements

Systems must support:

Real-time risk scoring

Confidence scoring

Intent classification

Escalation routing APIs

Agent queue prioritisation

Failure Mode Policy

If AI safety systems fail or degrade:

Default to increased human routing

Disable high-risk automation flows

Trigger incident response process

Consequences

Positive

Protects customers from unsafe AI decisions

Supports regulatory expectations

Maintains brand trust

Enables safe automation scaling

Negative

Increased human support workload

Requires monitoring and tuning

Potential increased cost during early rollout

Strategic Risk Acceptance Statement

The organisation acknowledges:

AI cannot safely handle 100% of customer scenarios

Human judgement remains critical for edge cases

Escalation is a safety feature, not a failure

Board-Level Y-Statement

In the context of deploying AI agents to automate customer interactions at scale,

we decided to implement a mandatory human escalation framework,

to achieve safe, trustworthy customer support with regulatory alignment,

accepting increased operational cost and system complexity.

Finance / Platform ADR — AI Cost Governance (Resolution Cost Guardrails)

ADR-FIN-0012 — Resolution Cost Guardrails for AI Customer Support Automation

Status: Accepted (Example)

Owner: CFO + CTO + Head of Customer Operations

Decision Type: Financial Governance / Platform Economics

Date: 2026-02-06

Context

The organisation is deploying AI customer support automation using outcome-based pricing (e.g., cost per successful resolution).

Key risks:

Uncontrolled cost growth due to increased automation usage

Low-quality AI resolutions driving hidden downstream cost (reopens, complaints, churn)

Lack of cost attribution by channel, product, or geography

Vendor pricing model dependency

Difficulty forecasting cost under usage spikes

Unlike seat-based SaaS pricing, resolution-based pricing is variable OPEX tied to behaviour and volume.

Decision

We will implement Resolution Cost Guardrails across four control layers:

Cost Per Resolution Targets

Volume and Spend Caps

Quality-Adjusted Cost Monitoring

Vendor and Model Usage Optimisation

These guardrails will be enforced through monitoring, alerting, and automated throttling where required.

Cost Governance Framework

Layer 1 — Cost Per Resolution Targets

Define acceptable cost bands:

Tier | Cost Per Resolution
Target | ≤ Baseline Support Cost Equivalent
Warning | 10–20% above baseline
Critical | > 20% above baseline

Baseline = historical human support cost per ticket.
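
A minimal sketch of how the cost bands could be evaluated. The function and enum names are hypothetical. The bands as written leave costs between the baseline and 10% above it unclassified, so the sketch reports that range as `UNDEFINED` rather than guessing at a policy.

```python
from enum import Enum


class CostTier(Enum):
    TARGET = "target"
    UNDEFINED = "undefined"  # gap in the stated bands: 0-10% above baseline
    WARNING = "warning"
    CRITICAL = "critical"


def classify_cost(cost_per_resolution: float, baseline: float) -> CostTier:
    """Map a cost per resolution onto the tiers, relative to the human baseline."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    overrun = (cost_per_resolution - baseline) / baseline
    if overrun <= 0:
        return CostTier.TARGET
    if overrun < 0.10:
        return CostTier.UNDEFINED
    if overrun <= 0.20:
        return CostTier.WARNING
    return CostTier.CRITICAL
```

For example, with a baseline of 5.00 per ticket, a cost of 5.75 per resolution is 15% above baseline and falls in the Warning tier.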

Layer 2 — Volume and Spend Guardrails

Implement:

Monthly AI support budget allocation

Channel-level cost caps

Product-line cost attribution

Automatic alert thresholds at 70%, 85%, 95% spend

Optional:

Soft throttling during non-critical spikes

Human fallback routing if cost risk triggered
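
The spend alert thresholds can be checked with a few lines. This is a sketch only; `crossed_alerts` is a hypothetical helper, and how alerts are delivered or de-duplicated is outside its scope.

```python
from typing import List

# Alert thresholds from Layer 2, as fractions of the monthly budget.
ALERT_THRESHOLDS = (0.70, 0.85, 0.95)


def crossed_alerts(spend: float, budget: float) -> List[float]:
    """Return every alert threshold the current spend has reached."""
    if budget <= 0:
        raise ValueError("budget must be positive")
    ratio = spend / budget
    return [t for t in ALERT_THRESHOLDS if ratio >= t]
```

A spend of 860 against a budget of 1000 has crossed the 70% and 85% thresholds but not the 95% one.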

Layer 3 — Quality-Adjusted Cost Control

True cost must include:

Reopen rate cost

Escalation to human cost

Refund / compensation cost triggered by AI errors

Customer churn signals

Metric example:

True Resolution Cost =

(AI Resolution Cost)

+ (% Reopen × Human Cost)

+ (% Escalation × Human Cost)

+ (Error Remediation Cost)
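
The metric can be written as a function, reading the terms as a sum. The parameter names are assumptions, rates are expressed as fractions (0.10 for 10%), and all costs are per resolution.

```python
def true_resolution_cost(
    ai_cost: float,
    reopen_rate: float,
    escalation_rate: float,
    human_cost: float,
    remediation_cost: float,
) -> float:
    """Quality-adjusted cost per AI resolution.

    Adds the expected human cost of reopens and escalations, plus the
    average error remediation cost, to the direct AI resolution cost.
    """
    return (
        ai_cost
        + reopen_rate * human_cost
        + escalation_rate * human_cost
        + remediation_cost
    )
```

With an AI cost of 1.00, a 10% reopen rate, a 20% escalation rate, a human cost of 8.00, and 0.25 of average remediation cost, the true cost is 1.00 + 0.80 + 1.60 + 0.25 = 3.65, well above the headline price.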

Layer 4 — Vendor and Model Optimisation

Implement:

Channel-specific automation strategy

High-confidence automation only in high-volume low-risk flows

Continuous vendor pricing review

Multi-vendor optional architecture (long-term)

Mandatory Reporting

Executive Dashboard Metrics

Cost per AI resolution

True cost per resolution (quality adjusted)

Automation rate

Cost vs human baseline

Cost per channel

Cost per customer segment

Finance Reporting Cadence

Weekly operational cost review

Monthly Finance + Operations review

Quarterly Board-level cost trend review

Guardrail Enforcement Actions

Warning Level

Actions:

Increase monitoring

Review automation scope

Review resolution definition

Critical Level

Actions:

Pause expansion of automation coverage

Increase human routing

Trigger vendor cost review

Trigger architecture review

Technology Requirements

Systems must support:

Real-time cost telemetry

Resolution-level cost tagging

Channel attribution

Experiment tracking

Automated alerting

Forecasting Requirements

Finance models must include:

Seasonal volume spikes

Product launch impact

Customer growth scenarios

Vendor pricing change scenarios

Failure Mode Policy

If cost monitoring systems fail:

Default to conservative automation coverage

Freeze automation expansion

Notify Finance and Platform leadership

Consequences

Positive

Predictable AI operating cost

Prevents silent cost creep

Supports CFO confidence in AI scaling

Enables ROI-based automation expansion

Negative

Requires strong telemetry infrastructure

May temporarily slow automation rollout

Requires cross-functional cost ownership

Strategic Risk Acceptance Statement

The organisation acknowledges:

AI automation cost is variable by design

Perfect cost predictability is not possible

Cost governance must be continuous, not one-time

Board-Level Y-Statement

In the context of scaling AI-driven customer support using resolution-based pricing models,

we decided to implement resolution cost guardrails and quality-adjusted cost monitoring,

to achieve predictable AI operating cost and sustainable automation ROI,

accepting increased monitoring complexity and potential automation throttling during cost spikes.

Data + Governance ADR — AI Resolution Evaluation Framework

ADR-DATA-0015 — Standardised AI Resolution Measurement Framework

Status: Accepted (Example)

Owner: Head of Data + Customer Operations + AI Governance

Decision Type: Measurement Governance / Vendor Validation / Cost Accuracy

Date: 2026-02-06

Context

AI customer support vendors often report resolution metrics (e.g., “65% automated resolution rate”).

However, resolution definitions vary widely and can be misleading.

Common vendor metric risks:

Counting short conversations as resolved

Ignoring reopen events

Ignoring downstream human escalation

Ignoring customer dissatisfaction

Measuring only short-term interaction outcomes

Resolution metrics directly affect:

Cost calculations

Vendor performance evaluation

Automation expansion decisions

Executive reporting

Decision

We will implement a Multi-Dimensional Resolution Evaluation Framework consisting of:

Strict Resolution Definition

Time-Based Validation Windows

Quality-Adjusted Resolution Metrics

Customer Outcome Validation

Vendor Metric Normalisation

Vendor-reported metrics will not be used directly without internal validation.

Core Measurement Definitions

Primary Metric — True AI Resolution Rate (TARR)

A case counts as resolved only if:

Customer issue is closed

No reopen within defined validation window

No forced human intervention required

Customer satisfaction meets threshold

Formula

True AI Resolution Rate =

Valid AI Resolutions

--------------------------------

Total Eligible AI Conversations
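
A sketch of the TARR calculation under the four conditions above. The `Conversation` fields are hypothetical names for signals the ADR describes. The `excluded` flag stands in for conversations that are not eligible, and returning 0.0 for an empty denominator is an assumption of this sketch.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass
class Conversation:
    closed: bool
    reopened_in_window: bool
    human_intervention: bool
    csat_ok: bool
    excluded: bool = False  # not eligible, e.g. spam or a duplicate ticket


def is_valid_resolution(c: Conversation) -> bool:
    """All four conditions must hold for a case to count as resolved."""
    return (
        c.closed
        and not c.reopened_in_window
        and not c.human_intervention
        and c.csat_ok
    )


def true_ai_resolution_rate(conversations: Iterable[Conversation]) -> float:
    """Valid AI resolutions divided by total eligible AI conversations."""
    eligible = [c for c in conversations if not c.excluded]
    if not eligible:
        return 0.0
    valid = sum(1 for c in eligible if is_valid_resolution(c))
    return valid / len(eligible)
```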

Resolution Validation Windows

Short-Term Window

24–48 hours after resolution

Detects immediate reopens or follow-up contact

Medium-Term Window

7 days after resolution

Detects unresolved issue recurrence

Long-Term (Optional)

30 days for high-risk or high-value flows
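
The validation windows can be expressed as durations and checked against a reopen timestamp. This is a sketch; the window keys are hypothetical, and the short window uses 48 hours, the upper end of the stated range.

```python
from datetime import datetime, timedelta
from typing import Optional

WINDOWS = {
    "short": timedelta(hours=48),  # upper end of the 24 to 48 hour range
    "medium": timedelta(days=7),
    "long": timedelta(days=30),    # optional, for high-risk or high-value flows
}


def reopened_within(
    resolved_at: datetime, reopened_at: Optional[datetime], window: str
) -> bool:
    """True if the case was reopened inside the named validation window."""
    if reopened_at is None:
        return False
    elapsed = reopened_at - resolved_at
    return timedelta(0) <= elapsed <= WINDOWS[window]
```

A case reopened three days after resolution passes the short-term check but fails the medium-term one.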

Quality-Adjusted Resolution Metrics

Adjusted Resolution Score (ARS)

Adjusts raw resolution rate using:

Reopen penalty

Escalation penalty

Refund / compensation penalty

Complaint penalty

Example

ARS =

Raw Resolution Rate

– (Reopen Rate × Weight)

– (Escalation Rate × Weight)

– (Complaint Rate × Weight)
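
A sketch of the ARS calculation. The weights are not specified in the ADR, so the values in the usage note are purely illustrative. Clamping the score at zero is also an assumption of this sketch.

```python
from typing import Dict


def adjusted_resolution_score(
    raw_rate: float,
    penalty_rates: Dict[str, float],
    weights: Dict[str, float],
) -> float:
    """Raw resolution rate minus the weighted penalty rates, floored at zero."""
    penalty = sum(weights[name] * rate for name, rate in penalty_rates.items())
    return max(0.0, raw_rate - penalty)
```

For example, a raw rate of 0.65 with a reopen rate of 0.10 (weight 1.0), an escalation rate of 0.05 (weight 0.5), and a complaint rate of 0.02 (weight 2.0) gives 0.65 - 0.10 - 0.025 - 0.04 = 0.485. A refund or compensation penalty can be added as another entry in both dictionaries.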

Customer Outcome Validation

Customer Satisfaction Threshold

Resolution only valid if:

CSAT ≥ defined threshold

OR no negative sentiment detected

OR no complaint generated

Customer Effort Validation

Optional metric:

Repeat contact required?

Multiple channels used for same issue?

Vendor Metric Normalisation

Vendor claims must be adjusted to internal definitions using:

Matching conversation eligibility criteria

Matching validation window

Matching escalation definitions

Matching customer outcome thresholds

Segmentation Requirements

All resolution metrics must be segmented by:

Channel (chat, email, voice, social)

Customer tier

Geography

Product or service line

Risk category

Resolution Exclusion Rules

Exclude from denominator:

Spam / bot interactions

Duplicate tickets

Non-support interactions

Misrouted conversations

Audit Requirements

Every AI resolution must log:

Confidence score

Risk classification

Knowledge sources used (if RAG)

Escalation eligibility status

Customer sentiment score

Governance Cadence

Weekly

Operational monitoring of resolution quality trends

Monthly

Vendor performance validation

Quarterly

Executive-level automation performance review

Failure Mode Policy

If the measurement system fails:

Freeze automation expansion decisions

Fall back to conservative vendor metrics (flagged as unverified)

Trigger data pipeline incident review

Consequences

Positive

Prevents inflated automation performance reporting

Enables accurate cost modelling

Enables fair vendor comparison

Supports regulatory and audit review

Negative

Requires advanced data instrumentation

May reduce headline automation numbers initially

Requires cross-team metric alignment

Strategic Risk Acceptance Statement

The organisation acknowledges:

Resolution is a business outcome, not a conversation event

Vendor metrics will always differ from internal metrics

Measurement must evolve as AI capabilities evolve

Board-Level Y-Statement

In the context of evaluating AI customer support performance and vendor claims,

we decided to implement a strict multi-dimensional resolution evaluation framework,

to achieve accurate automation ROI measurement and customer outcome protection,

accepting increased data engineering and analytics complexity.

RedCloud — Extended Strategic + Platform ADR Set

ADR-007 — Open Commerce Network Architecture (Multi-Sided Trust Graph)

Status: Accepted

Owner: CTO + Chief Product Officer

Context

RedCloud is not just a marketplace — it is a trust network across:

Brands

Distributors

Micro-retailers

Financial partners

Logistics providers

Traditional marketplaces optimise transactions.

RedCloud must optimise trust-weighted trade flows.

Decision

The platform will model trade as a Trust Graph:

Nodes:

Trading entities

Warehouses

SKUs

Payment identities

Edges:

Trade relationships

Payment reliability

Fulfilment reliability

Data quality contribution
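
One possible in-memory shape for the Trust Graph is sketched below. The class names, the 0 to 1 scale, and the unweighted mean used as an edge score are all assumptions. A production system would more likely use a graph database and a calibrated scoring model.

```python
from dataclasses import dataclass
from typing import Dict, Optional, Tuple


@dataclass(frozen=True)
class TrustEdge:
    payment_reliability: float     # assumed scale: 0.0 to 1.0
    fulfilment_reliability: float
    data_quality: float

    def score(self) -> float:
        # Hypothetical aggregation: unweighted mean of the three signals.
        return (
            self.payment_reliability
            + self.fulfilment_reliability
            + self.data_quality
        ) / 3


class TrustGraph:
    def __init__(self) -> None:
        self.nodes: Dict[str, str] = {}  # node id -> kind, e.g. "retailer"
        self.edges: Dict[Tuple[str, str], TrustEdge] = {}

    def add_node(self, node_id: str, kind: str) -> None:
        self.nodes[node_id] = kind

    def add_edge(self, src: str, dst: str, edge: TrustEdge) -> None:
        if src not in self.nodes or dst not in self.nodes:
            raise KeyError("both endpoints must be added as nodes first")
        self.edges[(src, dst)] = edge

    def trust(self, src: str, dst: str) -> Optional[float]:
        """Trust score of the directed relationship, or None if unknown."""
        edge = self.edges.get((src, dst))
        return edge.score() if edge is not None else None
```

Edges are directed, so a distributor's trust in a retailer can differ from the retailer's trust in the distributor.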

Rationale

In emerging markets:

Trust replaces formal infrastructure.

Trust must be:

Computable

Observable

Monetisable

Consequences

Positive:

Enables risk-aware recommendations

Enables financial scoring products

Enables fraud and anomaly detection

Negative:

Requires complex graph infrastructure

Requires strong entity resolution capability

ADR-008 — Offline-First Architecture for Emerging Market Connectivity

Status: Accepted

Context

Users operate in:

Intermittent connectivity environments

Low-cost Android devices

Variable power reliability

Decision

The Red101 mobile platform will be Offline-First:

Local caching of product catalog

Local order queuing

Deferred sync

Conflict resolution engine
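
Local order queuing with deferred sync can be sketched as follows. The class name and the injected `send` callable are hypothetical, and conflict resolution is deliberately left out. The sketch only shows that orders are captured locally and kept until the server accepts them.

```python
from collections import deque
from typing import Callable, Deque, Dict


class OfflineOrderQueue:
    def __init__(self, send: Callable[[Dict], None]) -> None:
        # `send` uploads one order and raises ConnectionError when offline.
        self._send = send
        self._pending: Deque[Dict] = deque()

    def place_order(self, order: Dict) -> None:
        """Capture the order locally; no network access is required."""
        self._pending.append(order)

    def pending_count(self) -> int:
        return len(self._pending)

    def sync(self) -> int:
        """Upload queued orders in order; stop at the first network failure."""
        sent = 0
        while self._pending:
            try:
                self._send(self._pending[0])
            except ConnectionError:
                break  # still offline: keep the remaining orders queued
            self._pending.popleft()
            sent += 1
        return sent
```

An order is removed from the queue only after `send` returns, so a dropped connection never loses it. In practice each order would also carry a client-generated identifier so that a retried upload is not counted twice on the server.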

Rationale

A system that requires constant connectivity fails in core markets.

Consequences

Positive:

Higher adoption

More reliable order capture

Higher data completeness

Negative:

Sync conflict complexity

Requires distributed consistency model

ADR-009 — Payments Embedded Into Trade Flow (RedPay as Native Rail)

Status: Accepted

Context

Cash-heavy trade causes:

Theft risk

Settlement delay

Credit opacity

Decision

RedPay will be embedded into:

Order confirmation

Delivery verification

Trade credit scoring

Rationale

Payments data is the strongest trust signal in informal economies.

Consequences

Positive:

Enables credit scoring

Enables working capital products

Improves trade velocity

Negative:

Regulatory licensing complexity

Higher compliance overhead

ADR-010 — Market Intelligence Data Monetisation Boundary

Status: Accepted

Context

Trade data can be monetised via:

Demand indices

Price indices

Supply chain risk scoring

But must protect:

Individual retailer identity

Competitive distributor data

Decision

Market intelligence products will only use:

Aggregated

Anonymised

Statistically thresholded datasets
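
A minimal sketch of aggregation with a statistical threshold: regional totals are published only when enough distinct retailers contribute. The minimum of five retailers and the row layout are assumptions, since the ADR does not set a threshold value.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

MIN_RETAILERS = 5  # hypothetical suppression threshold


def demand_by_region(
    rows: Iterable[Tuple[str, str, int]],
    min_retailers: int = MIN_RETAILERS,
) -> Dict[str, int]:
    """Aggregate units per region from (retailer_id, region, units) rows.

    Regions with fewer than `min_retailers` distinct retailers are
    suppressed so no individual retailer can be inferred from the output.
    """
    units: Dict[str, int] = defaultdict(int)
    retailers = defaultdict(set)
    for retailer_id, region, qty in rows:
        units[region] += qty
        retailers[region].add(retailer_id)
    return {
        region: total
        for region, total in units.items()
        if len(retailers[region]) >= min_retailers
    }
```

The output contains no retailer identifiers at all, which covers the aggregation and anonymisation requirements. The threshold covers small groups where a total would effectively expose one trader.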

Rationale

Trust is the core product.

Data monetisation cannot erode ecosystem trust.

Consequences

Positive:

Enables financial partnerships

Enables data revenue streams

Negative:

Limits raw data monetisation speed

ADR-011 — Trust Score as First-Class Platform Primitive

Status: Accepted

Context

Trust cannot be an afterthought metric.

It must be embedded in:

Data

Recommendations

Payments

Identity

Decision

Every core entity will carry Trust attributes:

For Data:

Freshness

Coverage

Source reliability

For Entities:

Payment behaviour

Fulfilment reliability

Trade consistency

Rationale

In volatile markets, confidence > prediction accuracy.

Consequences

Positive:

Enables explainable AI

Enables safer automation

Negative:

Requires heavy metadata infrastructure

ADR-012 — RAG + Signal Verification Chain (Anti-Hallucination AI Architecture)

Status: Accepted

Context

Emerging market trade has high noise:

Missing transactions

Partial stock reporting

Informal substitutions

Decision

All AI assistants must use:

Step 1 — Retrieve canonical data

Step 2 — Verify signal completeness

Step 3 — Generate answer

Step 4 — Attach Trust explanation
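
The four steps can be sketched as a single function with the retriever and generator injected. All names here are hypothetical. Completeness is measured as the share of required fields present, and the 0.8 default threshold is an assumption.

```python
from typing import Any, Callable, Dict, List


def answer_with_trust(
    question: str,
    retrieve: Callable[[str], Dict[str, Any]],
    generate: Callable[[str, Dict[str, Any]], str],
    required_fields: List[str],
    min_completeness: float = 0.8,
) -> Dict[str, Any]:
    if not required_fields:
        raise ValueError("required_fields must not be empty")
    # Step 1: retrieve canonical data.
    record = retrieve(question)
    # Step 2: verify signal completeness.
    missing = [f for f in required_fields if record.get(f) is None]
    completeness = 1 - len(missing) / len(required_fields)
    # Step 3: generate an answer only when the signal is complete enough.
    if completeness >= min_completeness:
        text = generate(question, record)
    else:
        text = "Insufficient data to answer reliably."
    # Step 4: attach the trust explanation.
    return {
        "answer": text,
        "trust": {"completeness": completeness, "missing": missing},
    }
```

Refusing to generate when the signal is incomplete is the point of the chain: the model never fills a data gap with a plausible guess.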

Rationale

LLM = Translator of trade signals, not predictor of truth.

Consequences

Positive:

Fully auditable AI

High regulatory readiness

Negative:

Higher latency vs raw generation

ADR-013 — Decision Acceleration vs Decision Automation Boundary

Status: Accepted

Context

Full automation is dangerous in:

Cash-constrained retailers

Volatile supply chains

Decision

System defaults to:

Decision Suggestion → Human Confirmation → Optional Auto Mode (High Trust Only)
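
The default flow can be sketched as follows. The 0.9 trust threshold for auto mode, the function name, and the result strings are assumptions for illustration.

```python
from typing import Callable


def execute_decision(
    suggestion: str,
    trust_score: float,
    auto_mode_enabled: bool,
    confirm: Callable[[str], bool],
    auto_threshold: float = 0.9,
) -> str:
    """Apply a suggestion automatically only for opted-in, high-trust cases."""
    # Auto mode is optional and restricted to high trust.
    if auto_mode_enabled and trust_score >= auto_threshold:
        return "auto_applied"
    # Default path: a human confirms or rejects the suggestion.
    if confirm(suggestion):
        return "applied_after_confirmation"
    return "rejected"
```

When auto mode is enabled but trust is below the threshold, the suggestion falls back to human confirmation rather than being dropped.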

Rationale

RedCloud builds confidence amplification, not blind automation.

Consequences

Positive:

Higher adoption

Lower catastrophic decision risk

Negative:

Slower automation scaling

ADR-014 — Trade Identity and Entity Resolution Strategy

Status: Accepted

Context

Emerging market trade includes:

Multiple names per shop

Shared phone numbers

Informal distributor networks

Decision

Implement probabilistic entity resolution using:

Transaction patterns

Device fingerprint

Payment behaviour

Geo clustering
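
As a simplified stand-in for a trained probabilistic model, the sketch below combines three of the listed signals into a weighted evidence score. The weights, the distance radius, the 0.6 match threshold, and the record fields are all hypothetical.

```python
from dataclasses import dataclass
from math import hypot


@dataclass
class ShopRecord:
    name: str
    device_id: str
    payment_account: str
    lat: float
    lon: float


# Hypothetical evidence weights; a real system would learn these from data.
WEIGHTS = {"device": 0.4, "payment": 0.4, "geo": 0.2}
GEO_RADIUS_DEG = 0.001  # rough proximity test in degrees, for illustration


def match_score(a: ShopRecord, b: ShopRecord) -> float:
    """Sum the weights of the signals on which the two records agree."""
    score = 0.0
    if a.device_id == b.device_id:
        score += WEIGHTS["device"]
    if a.payment_account == b.payment_account:
        score += WEIGHTS["payment"]
    if hypot(a.lat - b.lat, a.lon - b.lon) <= GEO_RADIUS_DEG:
        score += WEIGHTS["geo"]
    return score


def same_entity(a: ShopRecord, b: ShopRecord, threshold: float = 0.6) -> bool:
    return match_score(a, b) >= threshold
```

The shop name is deliberately not used as evidence, because the same shop often trades under several names.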

Rationale

Identity = foundation of trust scoring.

Consequences

Positive:

Stronger fraud detection

Better credit scoring

Negative:

Requires continuous model retraining

ADR-015 — Heuristic → ML → Autonomous Progression Model

Status: Accepted

Context

ML without trustable signals reduces adoption.

Decision

AI product maturity ladder:

Phase 1 — Heuristic Insight

Phase 2 — ML Augmented Insight

Phase 3 — ML Recommendation

Phase 4 — Assisted Automation

Phase 5 — Conditional Automation

Rationale

Adoption follows trust curve, not model sophistication.

Consequences

Positive:

Faster market penetration

Easier explainability

Negative:

Slower theoretical AI capability growth

ADR-016 — Regional Model Localisation Strategy

Status: Accepted

Context

Trade behaviours differ drastically by region:

Credit norms

Inventory risk tolerance

Pricing volatility

Decision

Models will be trained and calibrated per:

Country

Region

Trade cluster

Rationale

Global models erase local signal nuance.

Consequences

Positive:

Higher prediction quality

Higher adoption

Negative:

Higher MLOps overhead

Board-Level Meta Y-Statement

In the context of digitising fragmented global FMCG trade in emerging markets,

we decided to build a trust-first Open Commerce platform combining canonical data, explainable AI, and embedded payments,

to achieve reliable, scalable, and monetisable digital trade infrastructure,

accepting higher platform complexity and slower short-term AI automation velocity.